Welcome back everyone 👋 and big thanks to all new subscribers - thanks for joining along for the ride!

This is the weekly Gorilla Newsletter - we have a look at everything noteworthy from the past week in generative art, creative coding, tech and AI. As well as a sprinkle of my own endeavors.

Enjoy - Gorilla Sun 🌸

All the Generative Things

Cloudscapes with Volumetric Raymarching

Let's kick things off with something that I've been eagerly awaiting - Maxime Heckel returns with another stellar article, a deep dive into Volumetric Raymarching and how it can be used for creating beautiful shader cloudscapes in real-time:

One fascinating aspect of Raymarching I quickly encountered in my study was its capacity to be tweaked to render volumes. Instead of stopping the raymarched loop once the ray hits a surface, we push through and continue the process to sample the inside of an object

And just like before the article is a true treasure trove of information and resources:

Also make sure to check out Maxime's earlier articles - resources like this one are few and far between and deserve every possible spotlight.
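To make the idea from the quote a little more concrete, here's a minimal sketch of my own (not code from Maxime's article) of what "pushing through" a volume can look like. Instead of stopping at the first hit, the loop keeps sampling a density field along the ray and accumulates how much light gets absorbed. The `density` function (a soft sphere), the `absorption` factor and the step counts are all assumptions picked purely for illustration; a real cloud shader would run on the GPU and sample noise instead:

```python
import math

def density(point):
    """Hypothetical density field: a soft sphere of radius 1 at the origin."""
    x, y, z = point
    distance = math.sqrt(x * x + y * y + z * z)
    return max(0.0, 1.0 - distance)

def march_volume(origin, direction, steps=100, step_size=0.05, absorption=2.0):
    """Step along the ray and accumulate opacity instead of stopping at a surface."""
    transmittance = 1.0  # fraction of light that still makes it through
    opacity = 0.0        # how much of the volume we have "seen" so far
    for i in range(steps):
        t = i * step_size
        point = tuple(o + d * t for o, d in zip(origin, direction))
        rho = density(point)
        if rho <= 0.0:
            continue  # empty space: nothing to accumulate, keep marching
        # Beer-Lambert style absorption over one small step
        absorbed = 1.0 - math.exp(-rho * absorption * step_size)
        opacity += transmittance * absorbed
        transmittance *= 1.0 - absorbed
    return opacity

# One ray through the middle of the volume, one that misses it entirely
through_center = march_volume((0.0, 0.0, -2.0), (0.0, 0.0, 1.0))
past_the_side = march_volume((2.0, 0.0, -2.0), (0.0, 0.0, 1.0))
print(through_center, past_the_side)
```

The ray through the center comes back mostly opaque, while the one that misses the sphere stays fully transparent - which is exactly the soft, see-through quality that makes clouds look like clouds.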

Ana María Caballero's Paperwork

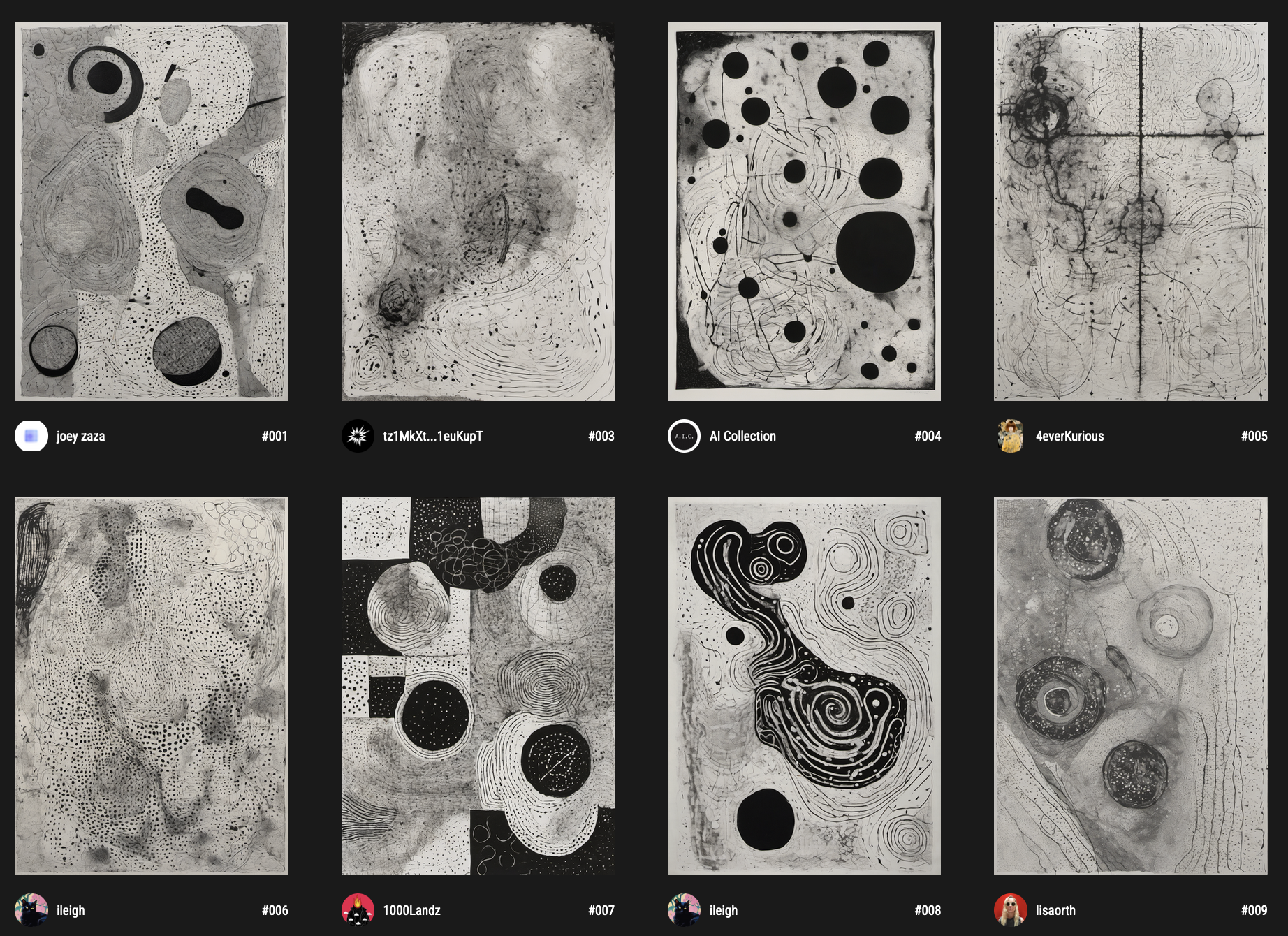

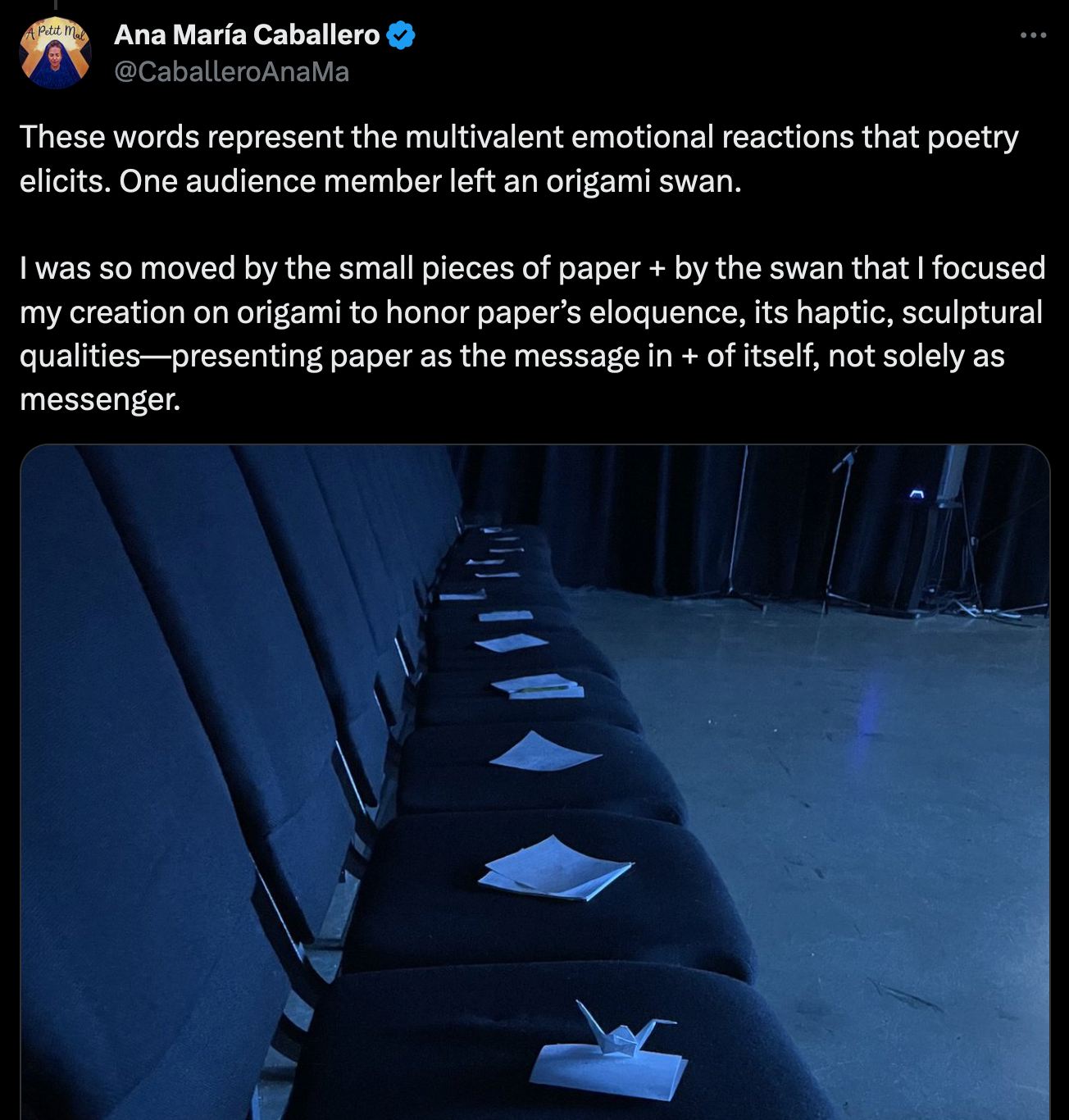

During a creative tour de force across different venues around the world, Ana María Caballero invited her audience members to leave her a single word in written form on a slip of paper after each performance. These 'distilled, private moments of human connection', as her Bright Moments bio captures so beautifully, serve as the foundation for her most recent piece titled 'Paperwork', released in collaboration with BM and brought to life with the Emprops platform:

For those who're not familiar with Emprops yet, it's a relatively new platform that's been making ripples over on Twitter in recent weeks - the platform is a first of its kind really: blending between prompt based generative AI and a long-form approach, it makes collectible long-form AI art possible. Here the term Emprops is an abbreviation for 'Emergent Properties'.

The only resource that I could find which provides insight into how the platform works is by Claus Wilke, published as an fx(text):

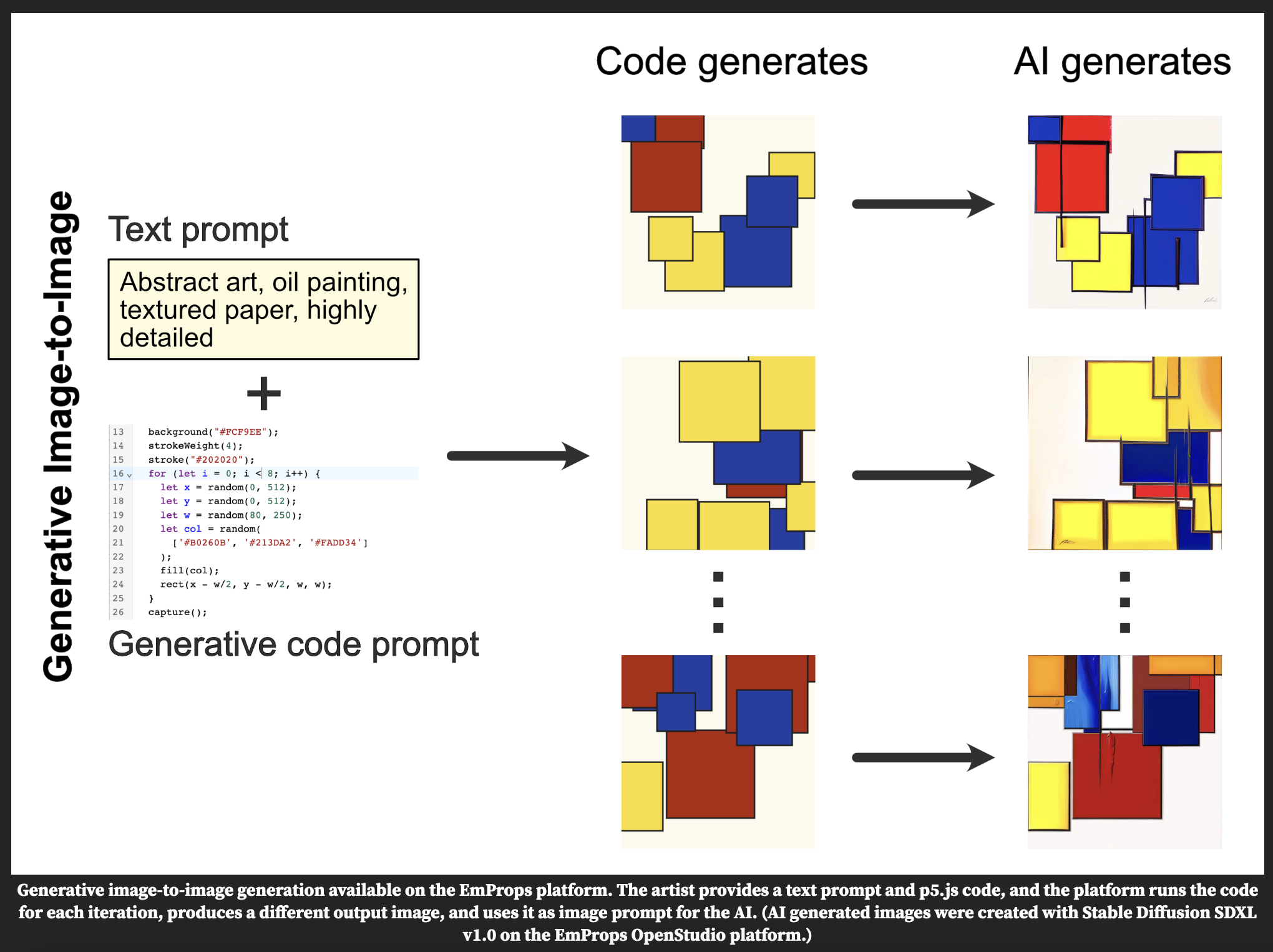

And as far as I understand it, the platform allows the artist to create an AI generated collection based off of a prompt. Generative image models usually incorporate some form of noisy input, which lets them generate many different outputs that still 'align' with the same original input prompt. Here's an example:

Moreover, it's also possible to feed images into Stable Diffusion (the model that Emprops makes use of). This makes it possible for generative artists to place a traditional code based generative artwork at the helm of the pipeline and feed its outputs into Stable Diffusion, together with a prompt that further modulates the resulting images in some way. Claus Wilke presents a good visualization of this in his article:

Returning from our excursion into the Emprops platform - this is how Ana María's Paperwork comes to life.

LeRandom, who also took part in Bright Moments Buenos Aires and exhibited their extensive collection of generative art, gave Ana María the opportunity to use an entire page on their site as a blank canvas to do with as she wishes. She decided to showcase the 100 one-word notes that her audience wrote down after her performances, which subsequently served as inputs for the 100 Emprops outputs of Paperwork:

The aesthetic inspiration for Paperwork stems from an Origami bird that one of the audience members left her:

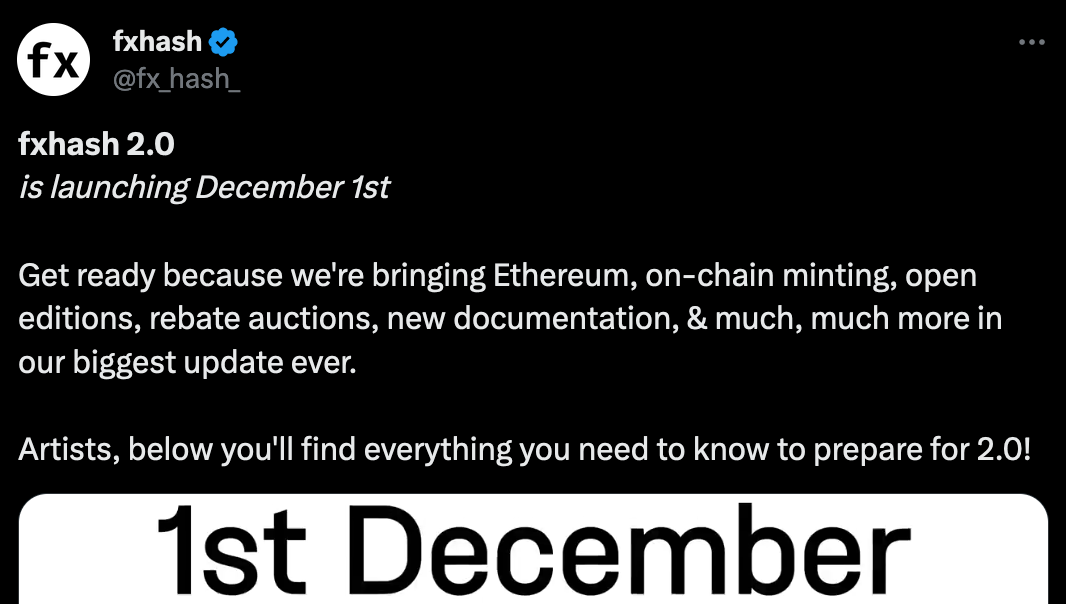

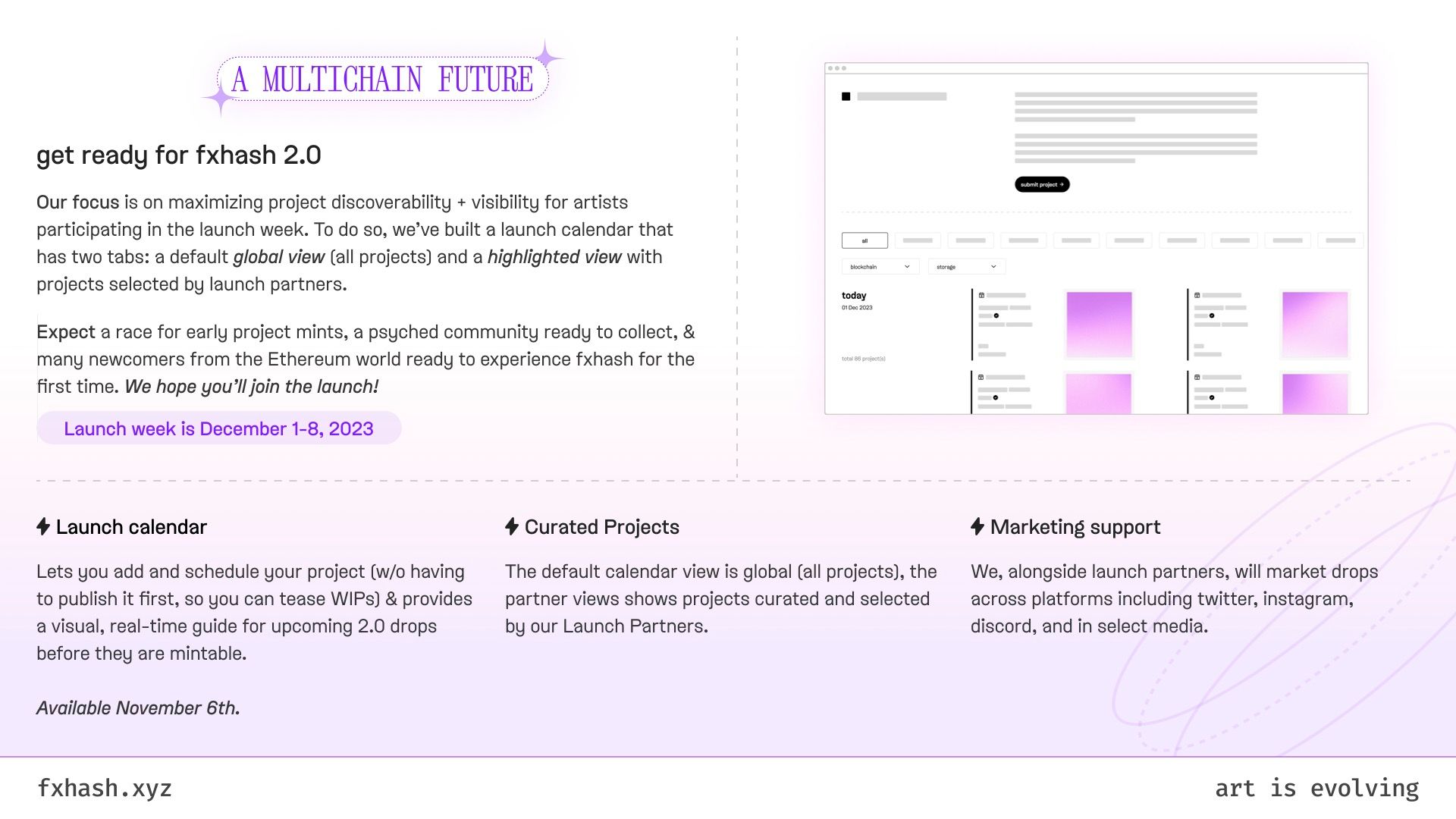

fx(hash) gearing up for 2.0

Last week fx(hash) dropped a big update thread detailing everything that's coming for their 2.0 release in December:

It's impressive that almost all features we know and love on TEZ are also coming to ETH.

If you're an artist you probably also want to get a head start and get in on that launch calendar (coming out the same day that this newsletter finds its way into your inbox):

It looks like it's definitely going to be a crazy first December week!

ComplexCity by John F. Simon

John F. Simon's ComplexCity is a generative artwork that finds its beginnings 20 years ago, originally created as an autobiographical ode to life in New York City. Today it releases as a generative on-chain project on Artwrld via the ArtBlocks engine. Nato Thompson from RCS does the heavy lifting for us in this interview:

Simon touches on several important topics: how the internet has made it easier than ever to make meaningful connections without needing to be in a specific physical location to do so, how blockchain tech has revolutionized the distribution of digital artworks, and how coding is a form of creative writing:

The instructions in a program say what they do, but in their binary form, running on a computer, they do what they say. Coding is creative writing because the writing creates.

Studio Yorktown's Journal

As a big fan of Bruce's generative art and approach in general, I was excited to see him publish a 'Journal' section over on his site:

The writing is as thoughtful as you'd expect from Bruce - philosophically inspired, and without being specifically aimed at generative artists, there are many insights that'll make you rethink your creative process. You can give them a read here:

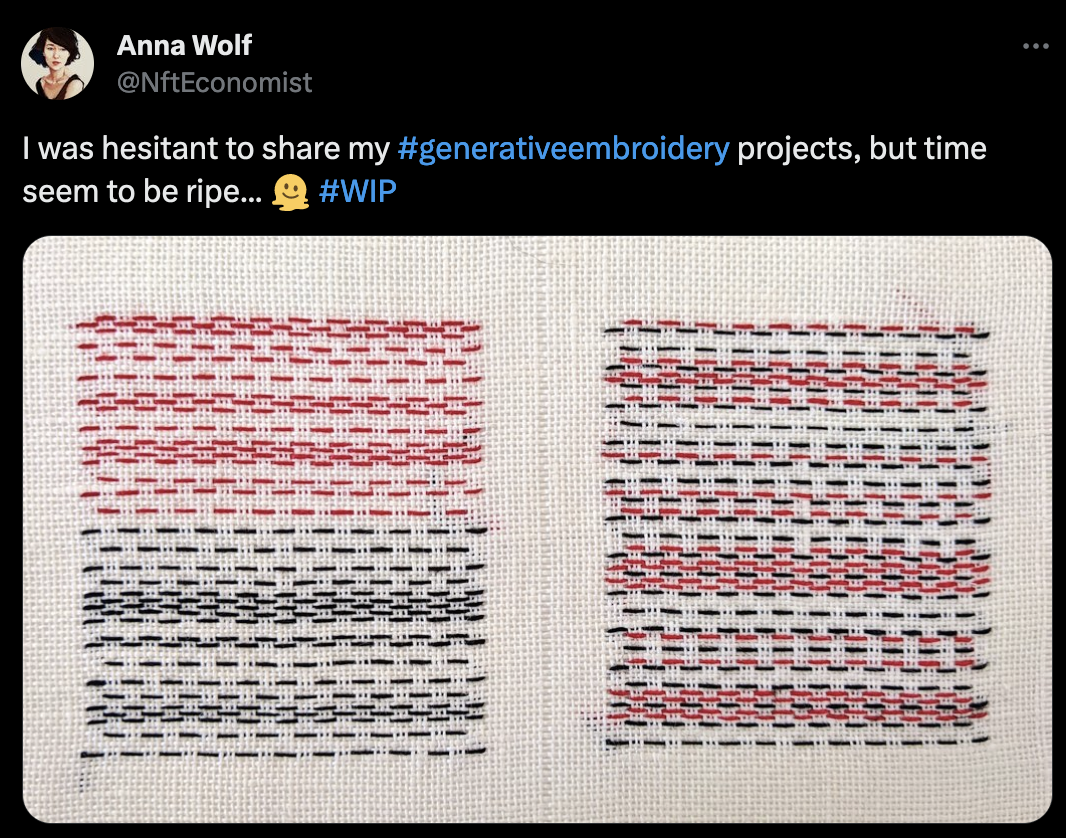

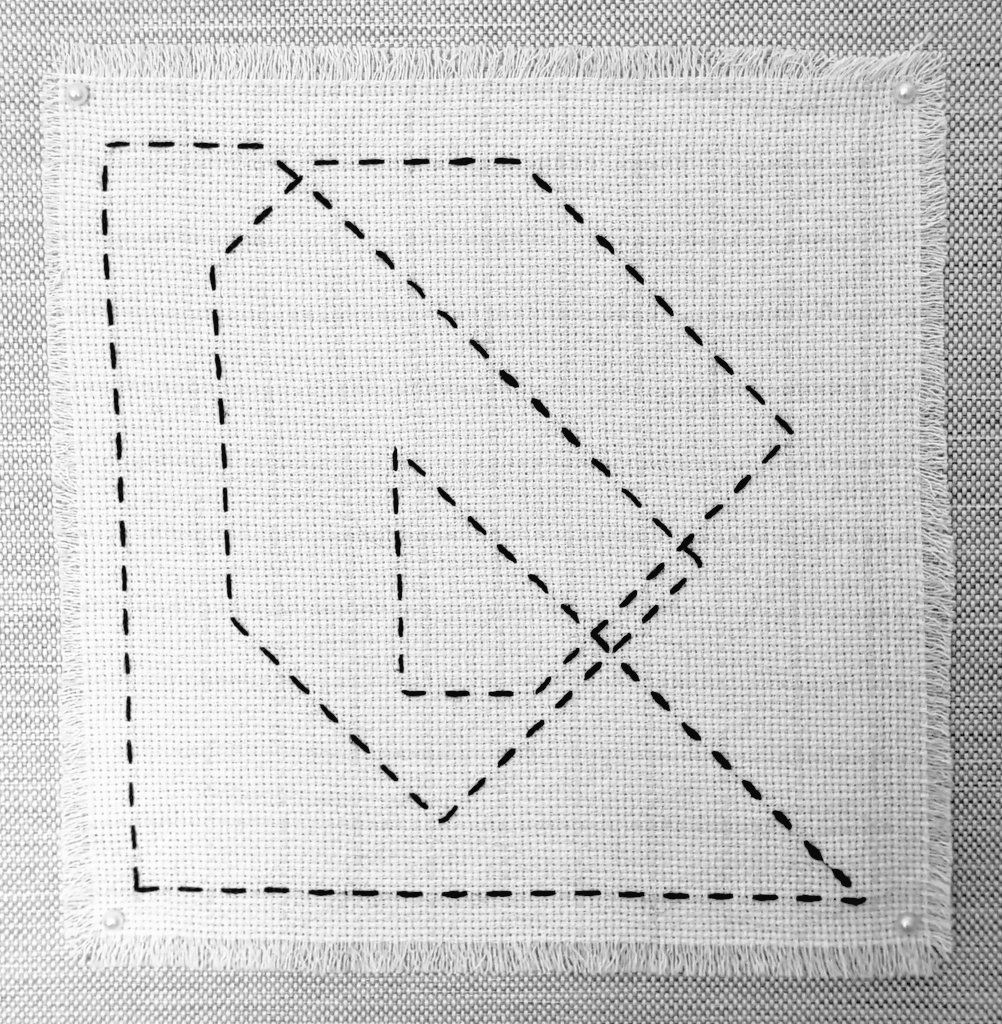

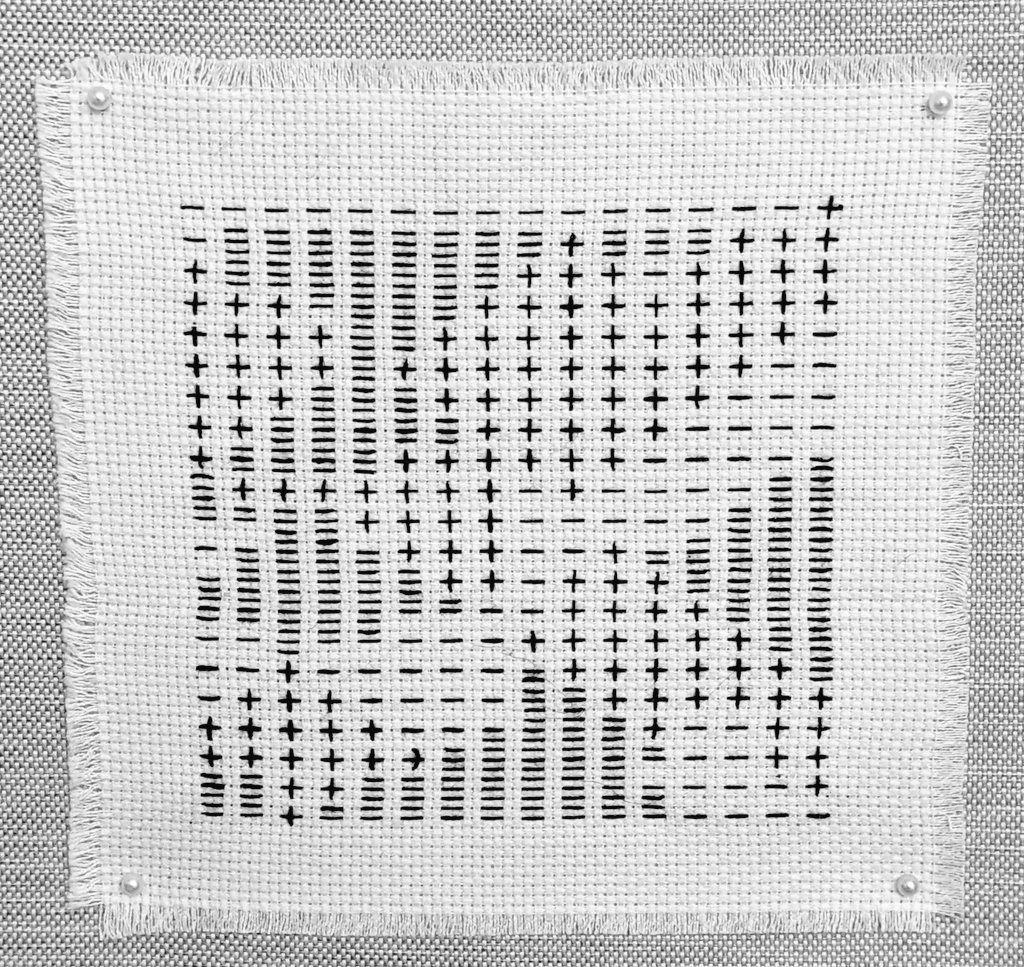

Generative Embroidery

Something I've been seeing a lot more of recently are generative embroideries, mixing a generative approach with a physical medium. Anna Wolf has recently started sharing her lovely creations, and has already shared quite a few interesting stitch compositions:

Coincidentally I also came across a creation by Luis Fraguada, something made back in 2006:

I'm wondering if this could become a new mainstream physical avenue for generative art - who's building the newest plotterized sewing machine to automate the process?

How graphic designers are creating their own AI tools

Stan Cross from WePresent wrote about 6 generative artists that share the common denominator of having built their own digital design tools:

Although I love the article and the insights that it provides into the artists' processes, I feel that there's a little bit of a disconnect between the title and the rest of the text - some of the presented artists don't actually use AI within their approach to making generative art. It's a bit like saying all generative art is AI art, which isn't the case. It's a lovely read nonetheless.

Weekly Web Finds

The hardest problems at the Intersection of Tech and Society

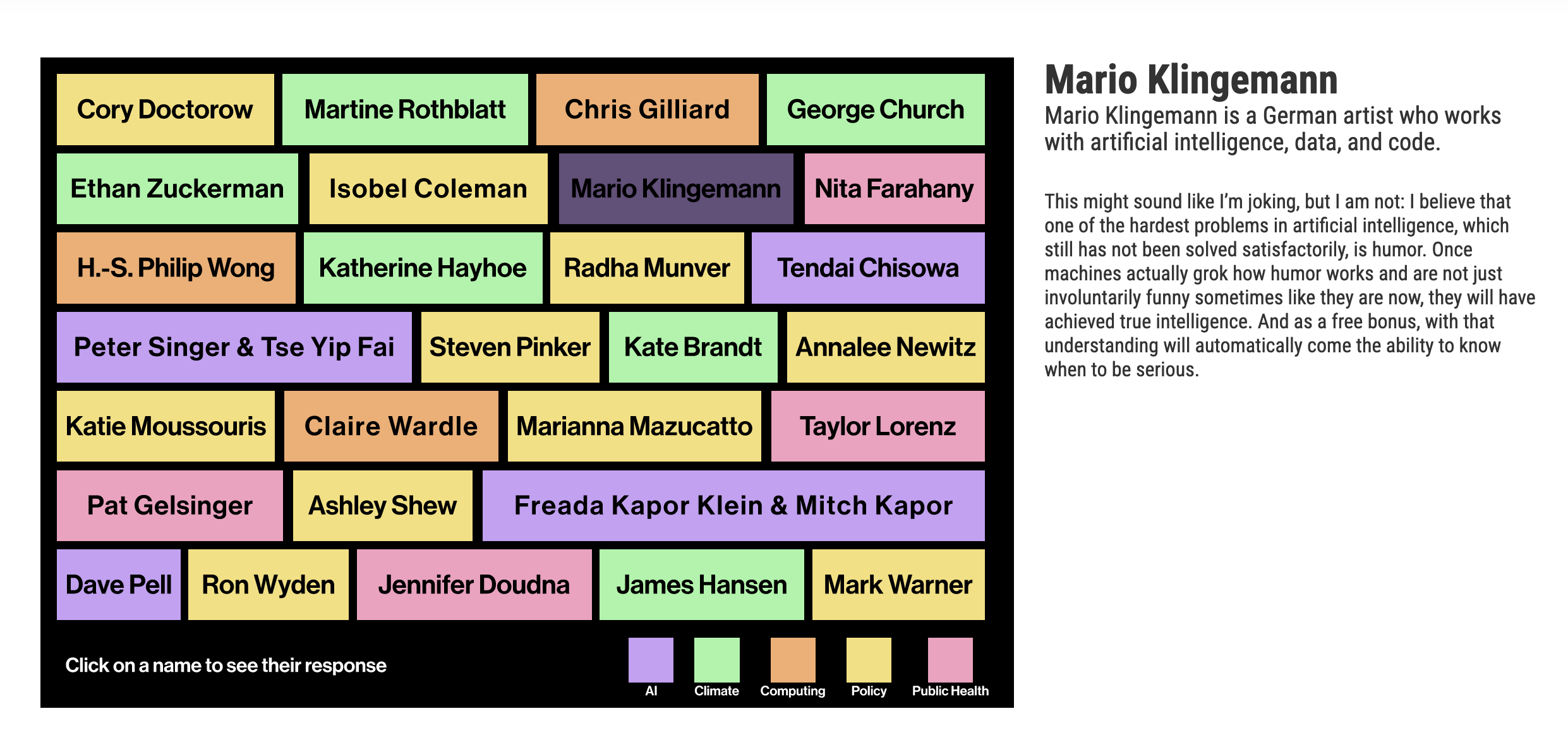

Mario Klingemann shared a tweet in which he draws attention to a recent piece by the MIT Technology Review:

Having been asked what he thinks is the 'hardest unsolved problem that lies at the intersection of technology and society', he gave the following answer:

I believe that one of the hardest problems in artificial intelligence, which still has not been solved satisfactorily, is humor. Once machines actually grok how humor works and are not just involuntarily funny sometimes like they are now, they will have achieved true intelligence.

And it makes sense: humor is a profound social phenomenon that requires a deep understanding of cultural, social, and individual factors - context is something that is hard to achieve with AI. Moreover, it is also highly subjective, and an avenue in which AI still performs very poorly. Trying to get to the crux of it might bring us closer to 'true' artificial intelligence.

Here's a link to the piece, there are other interesting answers by other leading figures. I also really like the creative interface:

How Video-games render Fur

I recently came across Acerola's channel and he's quickly become one of my favorite YouTubers. Not only are the videos very entertaining but they also teach you a lot about the tech behind video games - and I believe that a lot of it carries over into generative art:

In this particular video Acerola takes a deep dive into the computational difficulties of rendering hair and fur in video games, and presents some of the strategies that have been conceived for it.

CSS is Fun again

CSS is undergoing a quiet renaissance. With new features being added regularly nowadays, one might even dare say that CSS has become fun again - with many previously tedious tasks becoming easier than ever. Jeff Sandberg tackles this in his essay:

A Visual Guide to CSS Selectors

On a related note, here's a comprehensive visual guide to CSS selectors by Sébastien Noël on fffuel:

If you've felt overwhelmed by the number of CSS selectors out there, and need a quick lookup for them, this is your solution. Furthermore, fffuel also boasts a massive list of different CSS tools that are worth checking out.

Why you should never nest your Code

We resume our excursion into writing clean code, this time addressing the topic of nesting that often sits at the root of convoluted pieces of code. Reading nested structures can quickly become a nightmare - the deeper you get, the more memory you have to allocate in your brain to keep track of what the code is trying to do:

CodeAesthetic's video tackles some solutions to this end, such as extraction, where inner parts of nested blocks are isolated within their own functions, and inversion, where the failing conditions are checked first and return early, instead of being appended as else clauses towards the end of a daisy-chain of if statements.
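Here's a small sketch of my own to illustrate the two tricks (it's not taken from the video). The order dictionaries and the function names `nested_total`, `billable_amount` and `flat_total` are made up for the example:

```python
def nested_total(orders):
    """The nested version: three levels of ifs inside a loop."""
    total = 0
    for order in orders:
        if order is not None:
            if order["paid"]:
                if order["amount"] > 0:
                    total += order["amount"]
    return total

def billable_amount(order):
    """Extraction: the inner logic lives in its own function.
    Inversion: the failing cases are checked first and return early."""
    if order is None:
        return 0
    if not order["paid"]:
        return 0
    if order["amount"] <= 0:
        return 0
    return order["amount"]

def flat_total(orders):
    """Same result as nested_total, without the pyramid of indentation."""
    return sum(billable_amount(order) for order in orders)

orders = [
    {"paid": True, "amount": 10},
    None,
    {"paid": False, "amount": 5},
    {"paid": True, "amount": -3},
    {"paid": True, "amount": 7},
]
print(nested_total(orders), flat_total(orders))
```

Both versions compute the same total, but in the flat one every condition can be read on its own, without having to remember which branch you're currently inside of.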

This makes me curious, what are some of your favorite tricks to writing clean code? I never really thought about trying to optimize how I write code, but I'm realizing that having some standardized personal approach could go a long way, especially when I want to find my way back into older projects.

AI Corner

xAI releases Grok

Elon Musk is up to no good again, recently announcing the release of xAI's very own Large Language Model:

Grok [...] is modeled on "The Hitchhiker's Guide to the Galaxy." It is supposed to have "a bit of wit," "a rebellious streak" and it should answer the "spicy questions" that other AI might dodge

What's interesting is that the model has access to Twitter data, which might lead to it behaving differently in comparison to the other big LLMs. But at the same time, it will also be locked behind the X Premium pay-wall.

Understanding LLMs from 0 to 1

Ever wanted to really understand how LLMs work? A good starting point is AlphaSignal's ongoing series on the topic:

There's only two parts to it so far, but it's very approachable - the first part of the series focuses on tokenization and embeddings, which are some of the essential methods required to feed text into machine learning models.
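As a rough illustration of those two steps, here's a toy sketch of my own (not code from the series). It assumes a naive whitespace tokenizer and a randomly initialized lookup table, whereas real LLMs typically use subword tokenizers and embeddings that are learned during training. The names `build_vocab`, `tokenize` and `make_embeddings` are hypothetical helpers:

```python
import random

def build_vocab(corpus):
    """Assign an integer id to every distinct word, in order of first appearance."""
    vocab = {}
    for word in corpus.lower().split():
        vocab.setdefault(word, len(vocab))
    return vocab

def tokenize(text, vocab):
    """Tokenization: turn text into a list of ids (unknown words share one extra id)."""
    unknown_id = len(vocab)
    return [vocab.get(word, unknown_id) for word in text.lower().split()]

def make_embeddings(vocab_size, dim, seed=0):
    """Embeddings: one vector per token id. Random here, learned in a real model."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(vocab_size)]

vocab = build_vocab("the gorilla draws the sun")
token_ids = tokenize("the sun draws", vocab)

# One extra row in the table for the shared "unknown" id
table = make_embeddings(len(vocab) + 1, dim=4)
vectors = [table[token_id] for token_id in token_ids]

print(token_ids)     # integer ids, one per word
print(len(vectors))  # one 4-dimensional vector per token
```

The model never sees the text itself, only these lists of numbers - the ids pick rows out of the table, and the rows are what actually gets fed into the network.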

Music for Coding

Lamp (ランプ, Ranpu) is a Japanese indie band that was formed in the 2000s. In 2004 they released their second album titled For Lovers (恋人へ), and although it sold fewer copies than their first release it became a fan favorite over the years.

It's pretty difficult to categorize their style of music, since it's a mix of many different elements borrowed from jazz and pop, with some heavy bossa-nova undertones.

They're still active up until the present day, having released their ninth album just last month on the 10th of October 2023, titled Dusk to Dawn (一夜のペーソス).

And that's it from me this week again. While I'm jamming out to some of these tunes, I bid my farewells, and I hope this caught you up a little bit with some of the events in the world of generative art, tech and AI in the past week.

If you enjoyed it, consider sharing it with your friends, followers and family - more eyeballs/interactions/engagement helps tremendously - it lets the algorithm know that this is good content. If you've read this far, thanks a million!

Cheers, happy sketching, and hope you have a great start into the new week! See you in the next one - Gorilla Sun 🌸