Welcome back everyone 👋 and a heartfelt thank you to all the new subscribers who joined in the past week!

This is the 39th issue of the Gorilla Newsletter - a weekly online publication that sums up everything noteworthy from the past week in generative art, creative coding, tech and AI - as well as a sprinkle of my own endeavors.

Hope you're all having a great start to the week! Let's get straight into it! 👇

All the Generative Things

Golan Levin on the Potentiality of Blobs

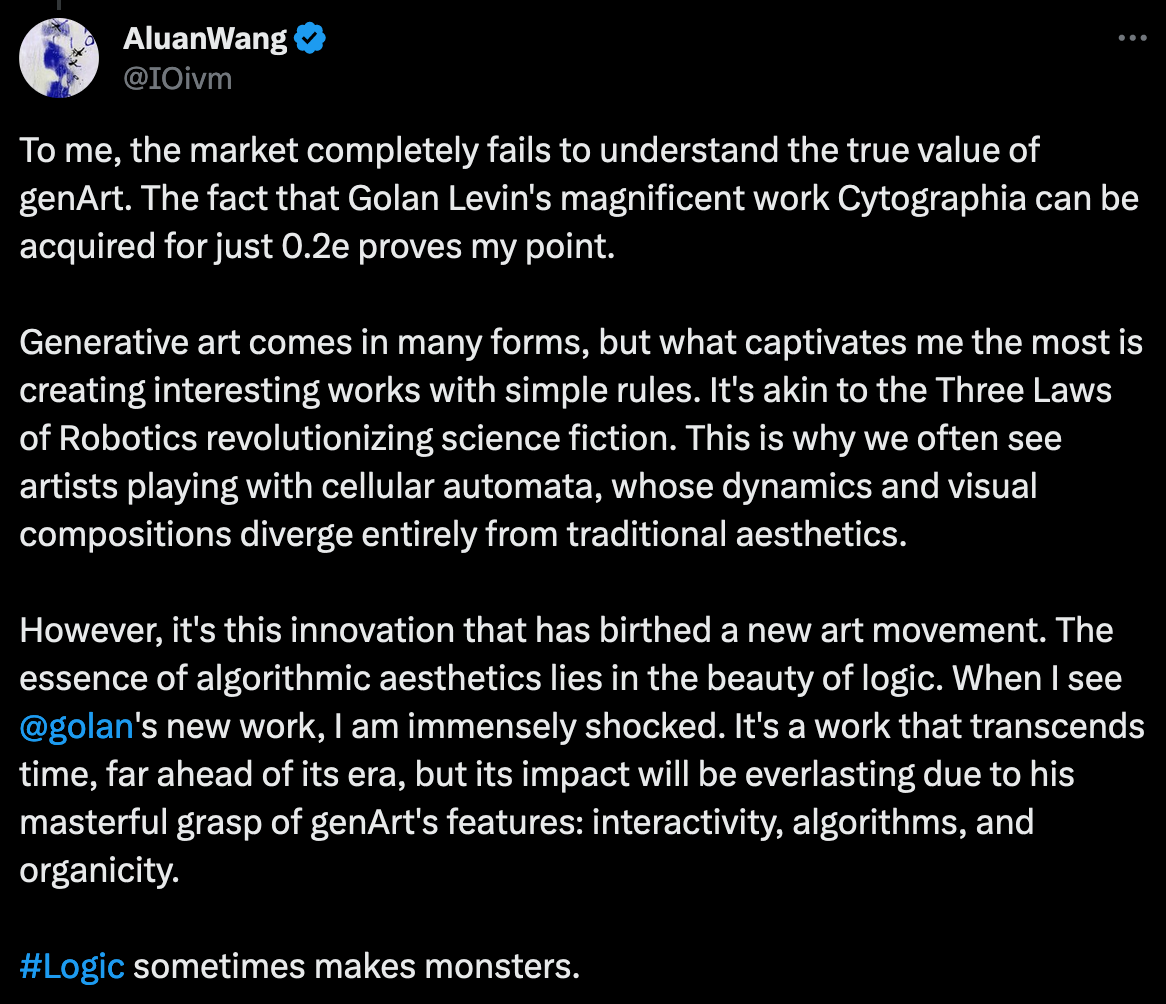

Le Random is back with their very first article of 2024, getting a hold of Golan Levin for an interview in light of his very first ETH release over on Art Blocks titled 'Cytographia' - a project that's been two years in the making.

And as always, Monk knocks it out of the park; with his thoughtful questions he provides us with the bigger picture, inquiring about the inspirations behind the generative system, how it came to be, and what it is that Golan wanted to express - we learn that there's a lot more to blobs than just being squiggly digital shapes:

We also featured Golan in last week's issue.

Cytographia occupies an interesting space in the current long-form generative art meta - it stands in juxtaposition to many contemporary projects that follow the general trend of static canvases displaying artful graphics. Cytographia doesn't go down this route; it brings animated and interactive elements into the mix while still leaning into a skeuomorphic aesthetic that emulates the faded look of old science books. In that manner, Cytographia accomplishes something that not many other projects do: it treads a fine line between the arguably controversial skeuomorphic aesthetic and the capabilities of the modern digital medium it is created in, producing little digital organisms that feel very alive.

Levin also lets us in on his fascination with blob shapes, and how they are essentially a product of the medium. When Processing made it possible to create shapes by placing and connecting individual vertices, it greatly extended artists' ability to create completely new shapes and forms that were previously difficult to achieve. Which leads us to blobs - if you've been doing creative coding for long enough, you've very likely attempted to create these kinds of shapes at some point - and they're very special and expressive little creatures, since there isn't a single correct way of generating them with code:

Everyone is going to make blobs that are really uniquely theirs, that are going to be, however you're going to make it, because there's no right answer; there's no correct way to do it. There's no single algorithm to make a blob. I could list six different ways of doing it. That’s what drew me to these quirky forms.
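As a minimal sketch of just one of those many ways (not Levin's method): place vertices around a circle and modulate each vertex's radius with a few sine waves of the angle. Integer frequencies make the outline close without a visible seam. The function `blobVertices` and its parameters are hypothetical. In P5 you could feed the returned points to `vertex()` between `beginShape()` and `endShape(CLOSE)`.

```typescript
type Point = { x: number; y: number };

// Hypothetical helper: samples a closed blob outline around (cx, cy).
// The radius is modulated by a sum of sines with integer frequencies,
// which guarantees that the shape closes without a seam at angle 2*PI.
function blobVertices(
  cx: number,
  cy: number,
  baseRadius: number,
  count: number,
  seed: number,
): Point[] {
  const points: Point[] = [];
  for (let i = 0; i < count; i++) {
    const angle = (i / count) * Math.PI * 2;
    // Three lobes of decreasing amplitude; the seed shifts their phases.
    const wobble =
      0.15 * Math.sin(3 * angle + seed) +
      0.08 * Math.sin(5 * angle + seed * 1.7) +
      0.04 * Math.sin(9 * angle + seed * 2.3);
    const r = baseRadius * (1 + wobble);
    points.push({ x: cx + r * Math.cos(angle), y: cy + r * Math.sin(angle) });
  }
  return points;
}

// Usage: 64 vertices for a blob of radius ~100 centered at (200, 200).
const blob = blobVertices(200, 200, 100, 64, 42);
console.log(blob.length);
```

Changing `seed` gives a differently shaped blob. Since the wobble amplitudes add up to 0.27, the radius always stays within 27% of `baseRadius`.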

And I find it infinitely fascinating how the tools, methods and algorithms that the original Processing made available to code artists out of the box have had a profound impact on the aesthetic of the artworks created with them. Would the generative art of today have a completely different aesthetic if the original versions of Processing had included a different set of tools? For instance, would Perlin noise be as integral a part of generative art as it is today if it hadn't been made readily available with Processing and P5?

On a related note, this also makes me think of modern video games, many of which attempt to recreate the aesthetics of past decades, when machines weren't as powerful as they are today. Objectively, these pixelated graphics might not look great, but the familiarity they evoke has a profound impact on the experience they leave the player with. Back then, the graphics were most definitely a product of the limitations of that era; nowadays there is no need for this aesthetic except for stylistic reasons.

On the other hand, there are many games today that go in the complete opposite direction, trying to look as realistic as possible, even though realism isn't what makes a game good or bad. It becomes a question of aesthetics and style, and similarly we shouldn't judge artworks based on their aesthetics alone.

What I've noticed is that many of the more negative stances towards skeuomorphism in generative art come from established artists whose careers span more than a decade or two. Maybe this disinclination stems from having had formative experiences with the tools of a previous era of creative coding, and it might just seem strange when a younger generation tries too hard to emulate an aesthetic that isn't natively digital. But if the medium allows for it, why not?

Blob shapes have been a career-long endeavour for Levin, one that he has now brought to life with Cytographia.

If you're interested in creating your own blobs, here's something I wrote on the topic in 2023. Fittingly for today's Genuary prompt 'Using a physics engine', it's a tutorial on programming soft body physics, one of the many methods for making little interactive blobby shapes:

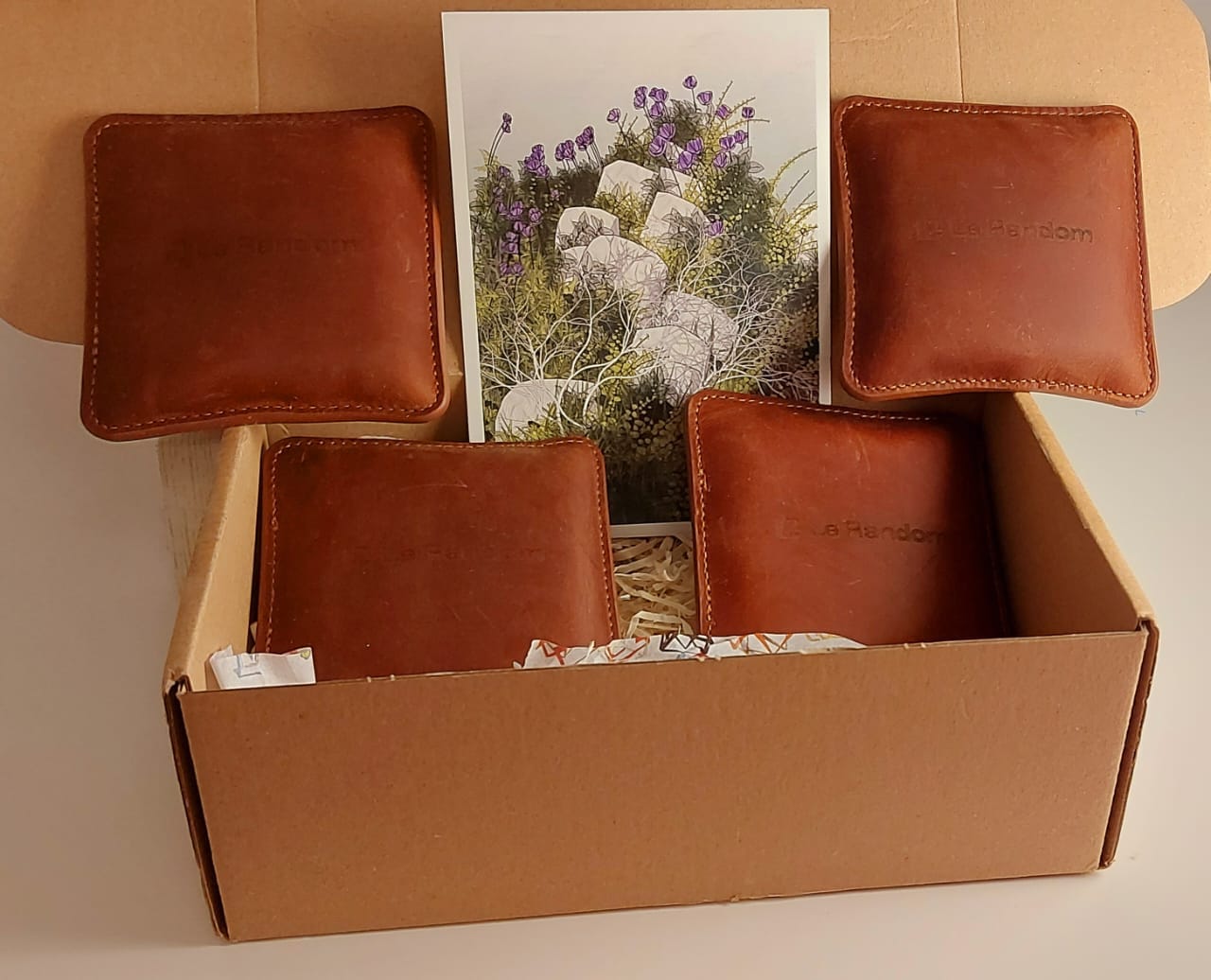
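For a rough idea of how a physics-driven blob can work, here's a minimal mass-spring sketch. It isn't the code from the tutorial, just one common variant: a ring of particles is joined by rim springs, spoke springs to a center particle keep the shape inflated, and everything is advanced with semi-implicit Euler integration. All names and constants (`makeSoftBlob`, `step`, the stiffness, damping, gravity and floor values) are illustrative assumptions, and every particle is assumed to have unit mass.

```typescript
type Particle = { x: number; y: number; vx: number; vy: number };
type Spring = { a: number; b: number; rest: number };
type SoftBlob = { particles: Particle[]; springs: Spring[] };

// Builds a ring of `n` particles plus a center particle (index n).
// Rim springs keep neighbours together; spoke springs keep the blob inflated.
function makeSoftBlob(cx: number, cy: number, radius: number, n: number): SoftBlob {
  const particles: Particle[] = [];
  for (let i = 0; i < n; i++) {
    const a = (i / n) * Math.PI * 2;
    particles.push({ x: cx + radius * Math.cos(a), y: cy + radius * Math.sin(a), vx: 0, vy: 0 });
  }
  particles.push({ x: cx, y: cy, vx: 0, vy: 0 }); // center particle
  const dist = (i: number, j: number) =>
    Math.hypot(particles[i].x - particles[j].x, particles[i].y - particles[j].y);
  const springs: Spring[] = [];
  for (let i = 0; i < n; i++) {
    const next = (i + 1) % n;
    springs.push({ a: i, b: next, rest: dist(i, next) }); // rim spring
    springs.push({ a: i, b: n, rest: radius }); // spoke spring
  }
  return { particles, springs };
}

// One semi-implicit Euler step: Hooke springs, velocity damping, gravity and a flat floor.
function step(
  blob: SoftBlob,
  dt: number,
  stiffness = 400,
  damping = 0.98,
  gravity = 500,
  floorY = 400,
): void {
  const { particles, springs } = blob;
  for (const s of springs) {
    const p = particles[s.a];
    const q = particles[s.b];
    const dx = q.x - p.x;
    const dy = q.y - p.y;
    const len = Math.hypot(dx, dy) || 1e-9;
    const f = stiffness * (len - s.rest); // positive when stretched: pulls p and q together
    const fx = (f * dx) / len;
    const fy = (f * dy) / len;
    p.vx += fx * dt; p.vy += fy * dt;
    q.vx -= fx * dt; q.vy -= fy * dt;
  }
  for (const p of particles) {
    p.vy += gravity * dt;
    p.vx *= damping; p.vy *= damping;
    p.x += p.vx * dt; p.y += p.vy * dt;
    if (p.y > floorY) { p.y = floorY; p.vy = 0; } // crude floor collision
  }
}

// Usage: drop a 16-particle blob onto the floor and simulate 10 seconds at 60 fps.
const softBody = makeSoftBlob(200, 200, 50, 16);
for (let i = 0; i < 600; i++) step(softBody, 1 / 60);
```

To make the blob interactive, you'd typically drag one of the rim particles towards the mouse and let the springs pull the rest along.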

In other news from Le Random, just two days ago I received this gift from them:

Thanks a million for the generous gifts; they made my week, and with those beautiful paperweights I might just have to start my print business now - I've been putting it off for far too long. And as always, kudos to Monk for the stellar article and for putting these slices of generative art history into written form.

Wobbly Functions by Piter Pasma

While we're on the topic of blobby and wobbly shapes, Piter Pasma re-published one of his previously unfinished articles on the topic for the 13th Genuary prompt, 'Wobbly functions':

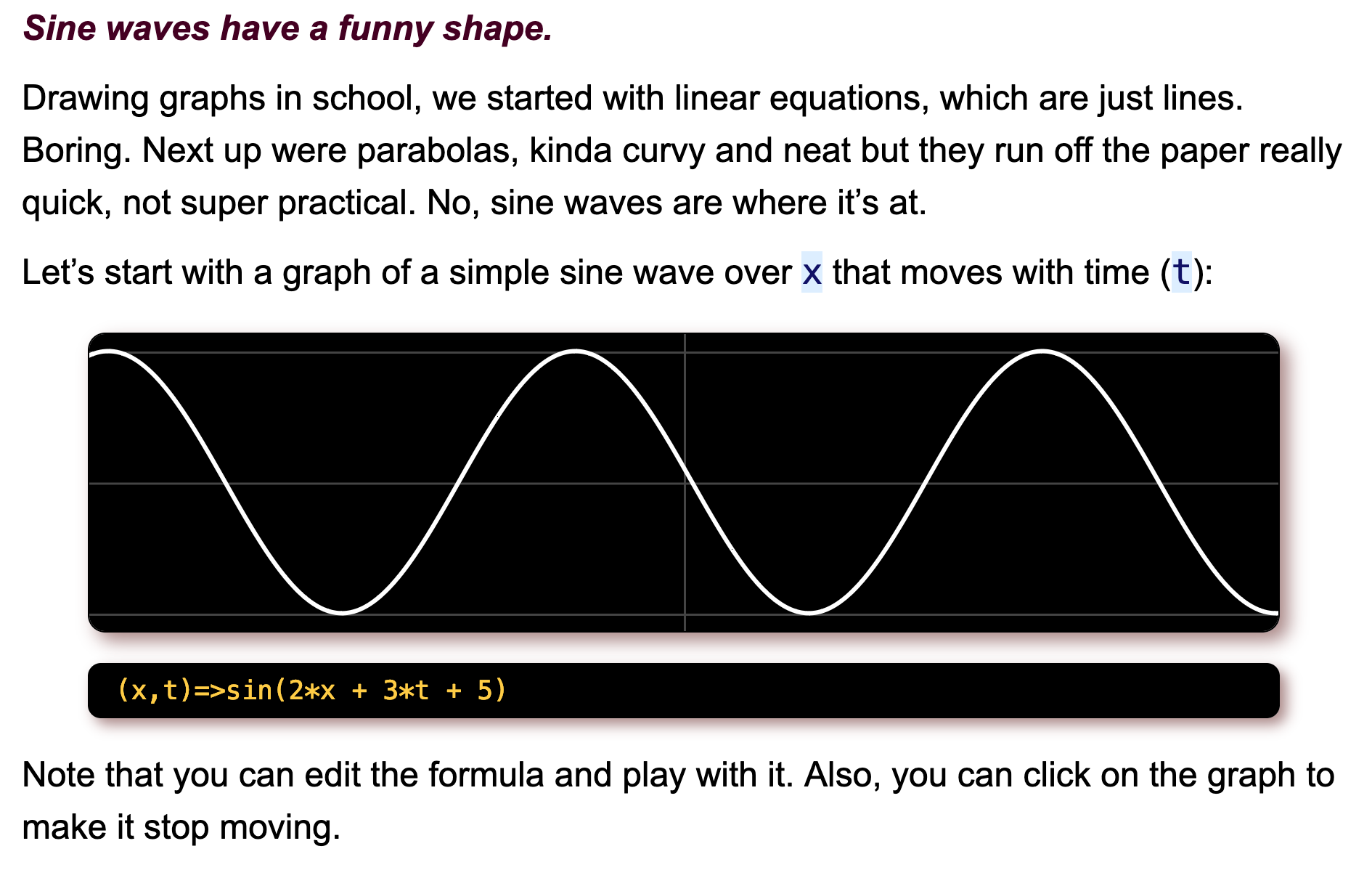

The article features several interactive examples showcasing some of the methods with which sine waves can be combined to create all sorts of interesting wobbly functions:

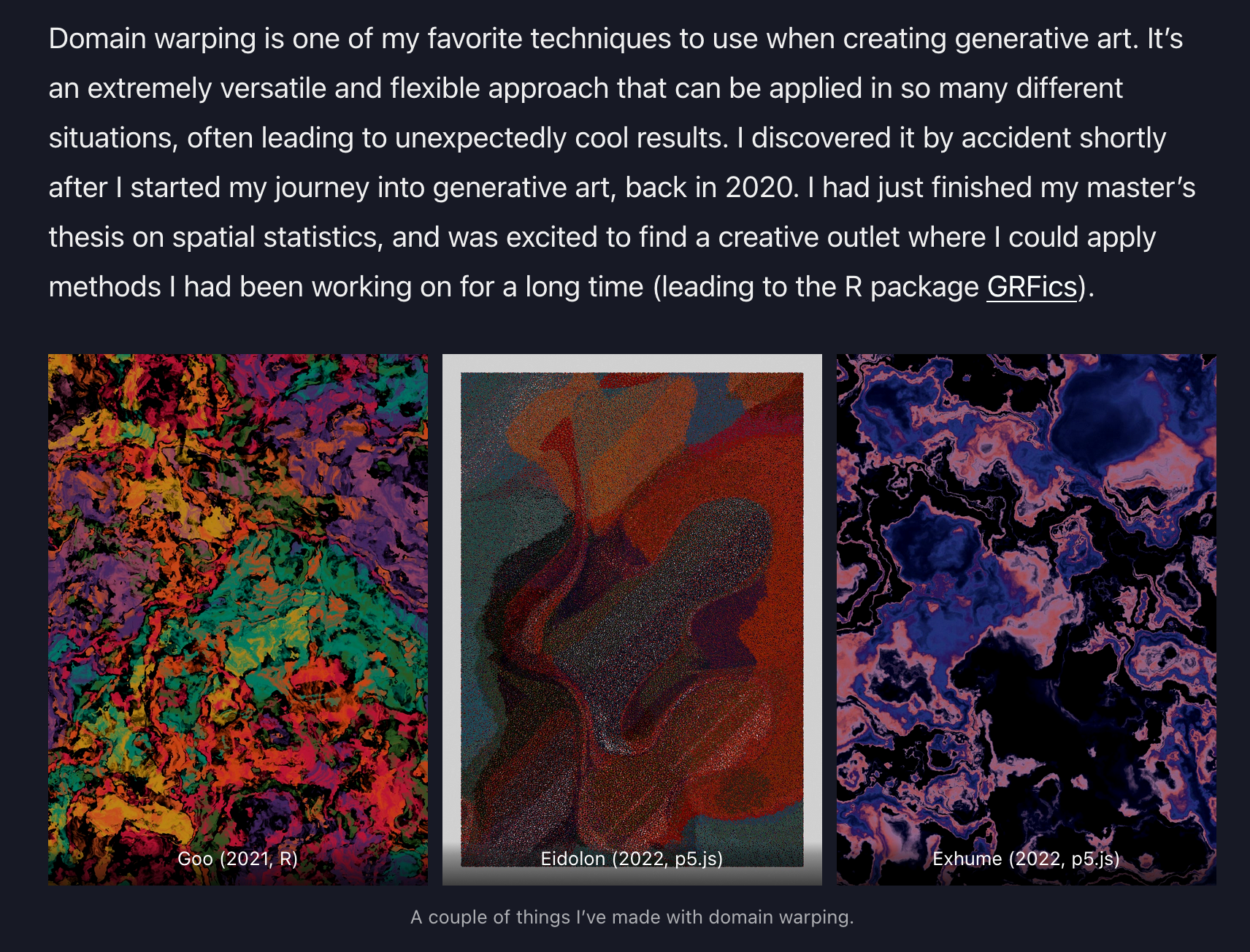
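Here are two simple ways of combining sines. They're sketched on the assumption that any smooth periodic building blocks will do, and they aren't taken from Piter's article. The first sums waves whose frequencies aren't integer multiples of each other. The second nests one sine inside another's phase. The specific frequencies and amplitudes are arbitrary picks.

```typescript
// Sum of sines: amplitudes add up to 1, so the result stays within [-1, 1].
// The frequencies aren't integer multiples of each other, so the combined
// curve only repeats over a much longer stretch than any single wave.
function wobble(x: number, t = 0): number {
  return (
    0.5 * Math.sin(1.0 * x + t) +
    0.3 * Math.sin(2.3 * x - 1.3 * t) +
    0.2 * Math.sin(5.7 * x + 0.7 * t)
  );
}

// Nested sines: a second sine wave modulates the phase of the first,
// squeezing and stretching its wavelength (a form of phase modulation).
function nestedWobble(x: number, t = 0): number {
  return Math.sin(x + 0.8 * Math.sin(3.1 * x + t));
}

// Sample a curve to draw as a polyline; animate by increasing t every frame.
const curve = Array.from({ length: 200 }, (_, i) => {
  const x = (i / 199) * Math.PI * 4;
  return { x, y: wobble(x, 0) + 0.5 * nestedWobble(x, 0) };
});
console.log(curve.length);
```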

If you find this interesting, you should also look into domain warping, a technique where the coordinates fed into a noise function are first displaced by the output of other noise or displacement functions, creating interesting distorted effects. Here's an interactive primer by Mathias Isaksen:
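The core idea fits in a few lines. Instead of sampling a field at `p`, you sample it at `p` plus an offset computed from the field itself. To keep this self-contained, the `field` function below is a cheap sine-based stand-in for the Perlin or simplex noise you'd normally use. The offset constants and `strength` are arbitrary assumptions.

```typescript
// Stand-in for a noise function: a smooth, bounded field in [-1, 1] built from
// sines. A real sketch would use Perlin or simplex noise here instead.
function field(x: number, y: number): number {
  return (
    0.5 * Math.sin(x * 1.7 + Math.sin(y * 0.9)) +
    0.5 * Math.sin(y * 2.3 + Math.sin(x * 1.3))
  );
}

// Domain warping: f(p + strength * q(p)), where the offset vector q is itself
// sampled from the field (the second component uses a shifted copy of it).
function warped(x: number, y: number, strength = 2.0): number {
  const qx = field(x, y);
  const qy = field(x + 5.2, y + 1.3);
  return field(x + strength * qx, y + strength * qy);
}

// Usage: map warped(x * scale, y * scale) to a color for every pixel.
console.log(warped(0.5, 0.25));
```

Setting `strength` to 0 gives back the plain field. Warping the warp again, by feeding `warped` into another offset, is the usual next step for even more marbled results.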

RCS interview with Jared Tarbell

Besides Golan Levin, another very influential figure in generative art is Jared Tarbell, who worked with the earliest versions of Processing and created many iconic artworks that still inspire today. Just a couple of days ago, Right Click Save released an interview with him, giving us insights into his newest artwork, created for the Kate Vass Gallery, as well as some reflections on the early days of creative coding:

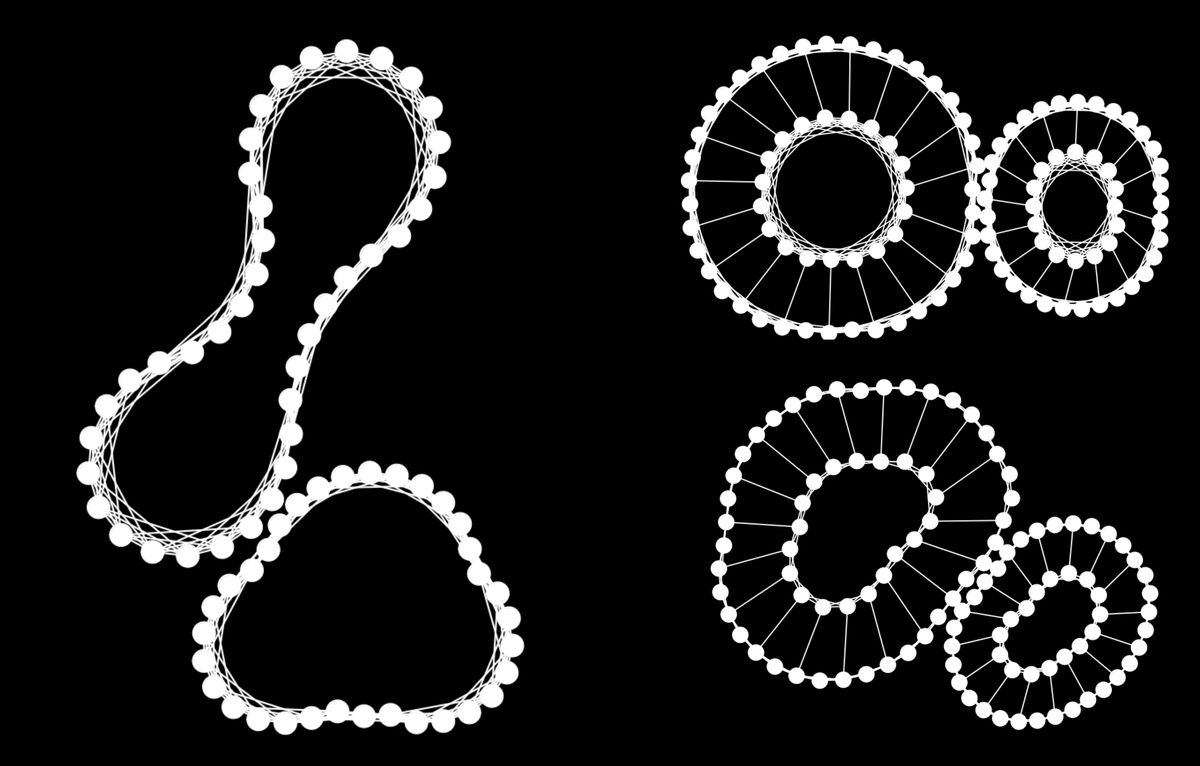

There are so many interesting points throughout that I found it difficult to pick one to comment on. One that stood out was his answer to why his works are primarily in black and white rather than in color: he explains that it's to put emphasis on the emergent structure of his generative systems:

I stick to black and white because I’m most interested in the structure and less the vibrancy or artistic quality of the piece. I want to expose the structure. Whenever possible, I try to reduce it to the heart of the phenomena that I’m exploring.

There are actually quite a few artists who have dedicated themselves to working only in black and white, such as Etienne Jacob, Julien Gachadoat and loackme. All of them create highly geometric works.

In my own generative art, I like to think about compositions in two parts: structure and texture. I always start with the structure: one part of the algorithm focuses on constructing an interesting layout, which is then used as a scaffold to fill in textures and colors. Sometimes the structure can imply and guide the texture and vice versa, so naturally the two aren't always completely separable; a good example of this is Daniel Aguilar's recent project Tetra. Assessing the interplay between the two has been a meaningful way for me to evaluate whether my project is doing something interesting. I tend to struggle with texture and color, because they're more often than not perceived very subjectively by different individuals.

If you enjoyed this interview with Jared Tarbell, you should definitely also check out Artnome's interview released in 2020:

I also recently discovered that RCS have their own podcast - highly recommend listening in to this episode where Danielle King talks to many of the people currently involved in the generative AI scene:

LittleJS 2 year Anniversary

Frank Force is a force of nature when it comes to creative coding and game dev things. I've already featured him a couple of times in the newsletter: in issue #23 for his cool generative pottery project on fxhash, and in issue #25 for a size coding talk that he gave at the JS game development summit. This week we're celebrating the 2-year anniversary of LittleJS, a lightweight HTML5 game engine that he built and is still actively developing:

JavaScript gets a bad rep for being one of the slower programming languages, but I actually think it's a wonderfully expressive language that's suitable for building all sorts of things, especially video games. Building them directly for the browser makes it super easy to share them with others, which is ultimately the entire point of games. If you're comfortable with JavaScript and want to build your own little game, LittleJS might be the perfect starting point. Here's a link if you want to check it out for yourself:

From Hexcodes to Eyeballs by Jamie Wong

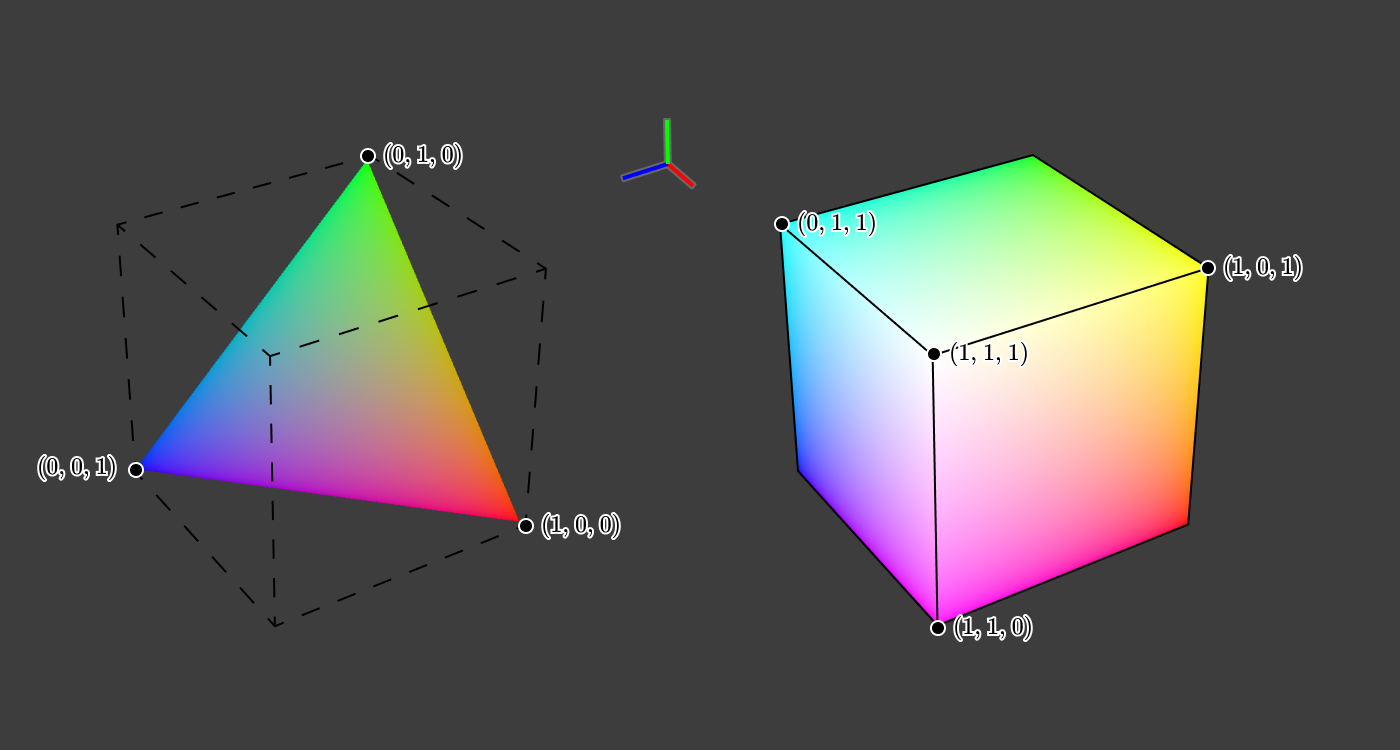

Have you ever wondered how we perceive color with our eyes? How displays translate hexcodes into colors? And what color actually is, in the physical sense of the word? Jamie Wong answers all of these questions in this massive article of his from 2018:
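One small piece of that pipeline can be sketched in code: parsing a hexcode into 8-bit channels, undoing the sRGB transfer curve to get linear light, and weighting the channels into relative luminance with the standard sRGB/Rec. 709 coefficients. This is a minimal sketch of the standard formulas rather than code from the article, and it assumes 6-digit hexcodes.

```typescript
// Parses "#rrggbb" (the leading # is optional) into three 8-bit channels.
function hexToRgb(hex: string): [number, number, number] {
  const m = /^#?([0-9a-f]{2})([0-9a-f]{2})([0-9a-f]{2})$/i.exec(hex);
  if (!m) throw new Error(`Not a 6-digit hex color: ${hex}`);
  return [parseInt(m[1], 16), parseInt(m[2], 16), parseInt(m[3], 16)];
}

// Undoes the sRGB transfer curve: stored values are gamma-encoded,
// so 128/255 does not mean "half the light".
function srgbToLinear(channel8bit: number): number {
  const c = channel8bit / 255;
  return c <= 0.04045 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
}

// Relative luminance: green gets the largest weight because our eyes
// are most sensitive to it (sRGB / Rec. 709 coefficients).
function relativeLuminance(hex: string): number {
  const [r, g, b] = hexToRgb(hex).map(srgbToLinear);
  return 0.2126 * r + 0.7152 * g + 0.0722 * b;
}

console.log(hexToRgb("#ff8000"));
console.log(relativeLuminance("#808080"));
```

For instance, `relativeLuminance("#808080")` comes out at roughly 0.22. A gray at the numeric midpoint of the hex range emits only about a fifth of white's light, because the stored values are gamma-encoded.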

Generative Minis

- Video games in P5 with P5Play: P5Play is yet another game engine that builds on top of P5 (for the graphics) and enables quick and easy development of video games in the browser. Quinton Ashley, the creator of P5Play, also documents his work on the library here, where he talks about all sorts of programming challenges.

- Ben Moren makes interesting installations, experiments and artworks that lie somewhere at the intersection of creative coding, technology and nature.

Tech and Code

Age of Empires, mostly written in Assembly

I'm a bit too young for the original Age of Empires (weird flex but okay), but AOE 2 was one of my favorite childhood games. I remember spending many Saturdays in the sandbox mode, building gigantic fortresses and pitting massive armies against each other - I think I spent more time doing that than playing the actual game.

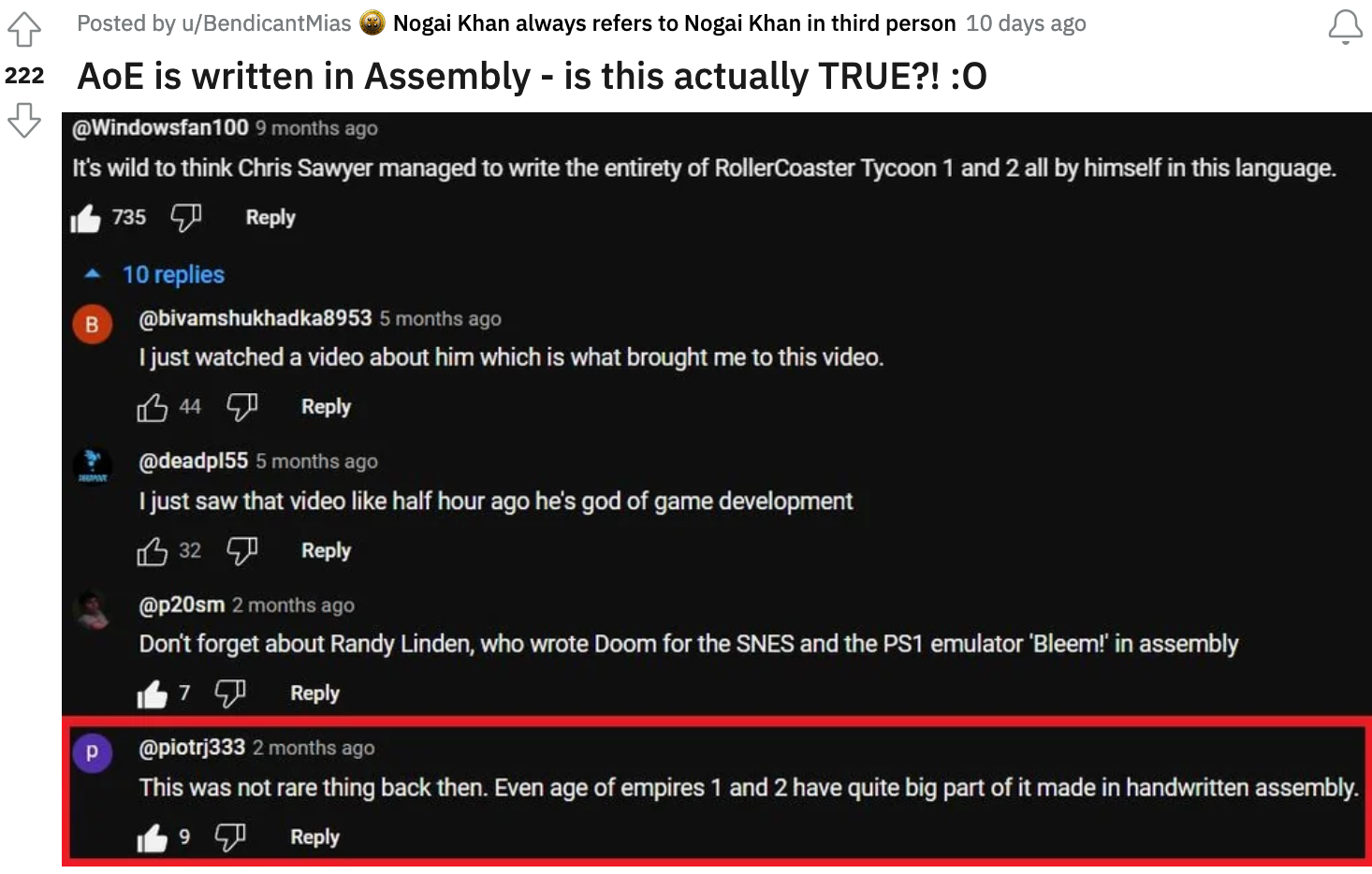

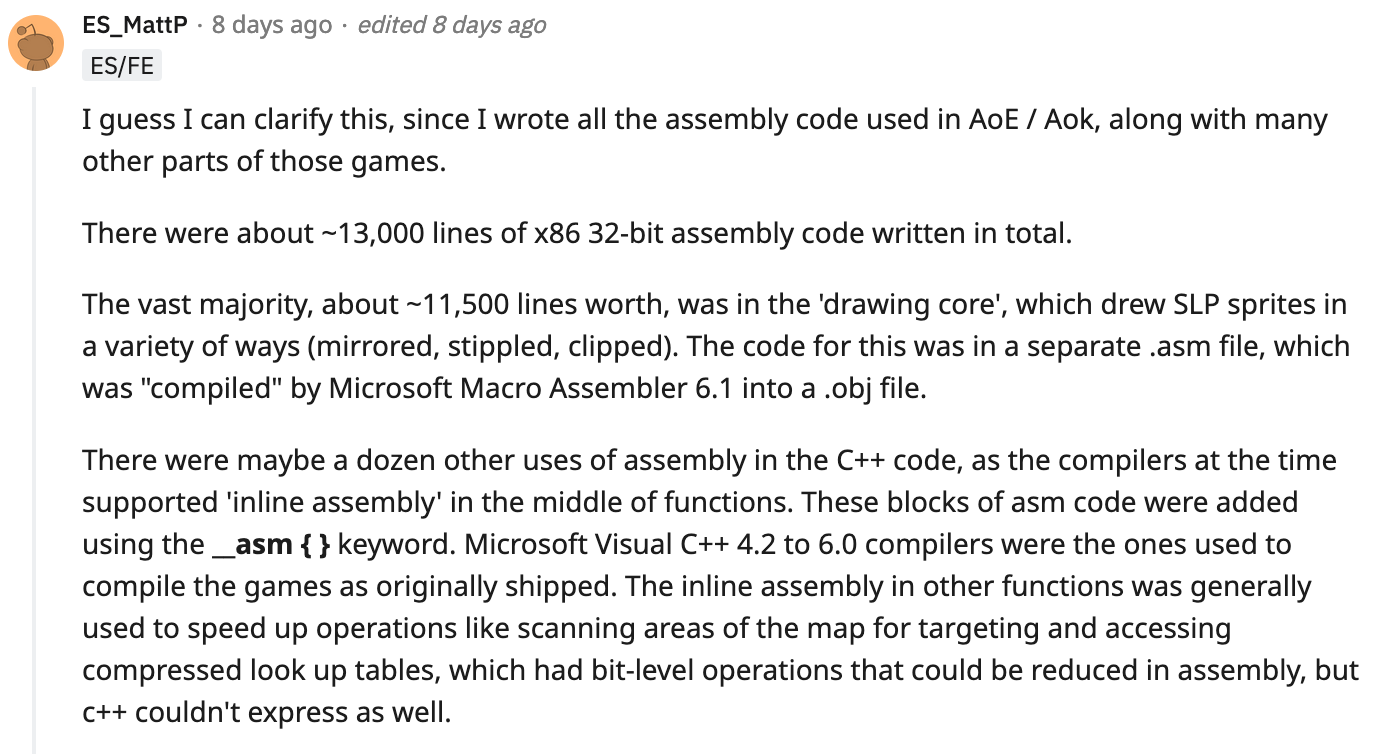

At that point in time I had yet to write my first line of code. I recently came across a PC Gamer article recounting an interesting story about the original games that surfaced recently: it turns out that they were, to a large extent, written in low-level Assembly code, which made the iconic graphics possible:

PC Gamer needs to chill with their headlines

This came to light in a recent Reddit post that blew up after one of the original AOE devs left a reply, elaborating on the claim and providing a detailed answer on the matter. Here's the post:

And here's Matt Pritchard, one of the founding members of Ensemble Studios, who was in charge of graphics and optimisation on the early games. He chimes in:

Games from that era really don't get enough credit for the massive feats of programming that they were.

What's actually Declarative programming?

You've probably heard the term "declarative programming" at some point, but can you clearly explain what it entails? For this, we first need to understand what a programming paradigm is. According to Wikipedia, programming paradigms "are a way to classify programming languages based on their features", which is technically correct, but I think it's easier to think of programming paradigms as "problem solving methodologies" or even "programming styles".

This might sound a bit esoteric, but it's essentially a set of commonly agreed-upon strategies for solving specific programming tasks by leveraging the features of the language being used. Some languages lend themselves to specific paradigms because they're designed for that purpose, while others, like JavaScript, can do a little bit of everything.

Two terms that are often thrown around in this context are the "imperative" and "declarative" paradigms, and in recent years there has been a lot of gushing about how good and easy declarative programming is, even though it's not always clear what it actually entails. While "imperative" simply means that we spell out every minute instruction needed to achieve a task (the "how"), the declarative approach, often described as telling the computer "what" we want rather than how to get it, is less obvious in comparison.

The cool people from UI.dev put together an in-depth article explaining the difference and giving a good idea of what declarative programming is all about:

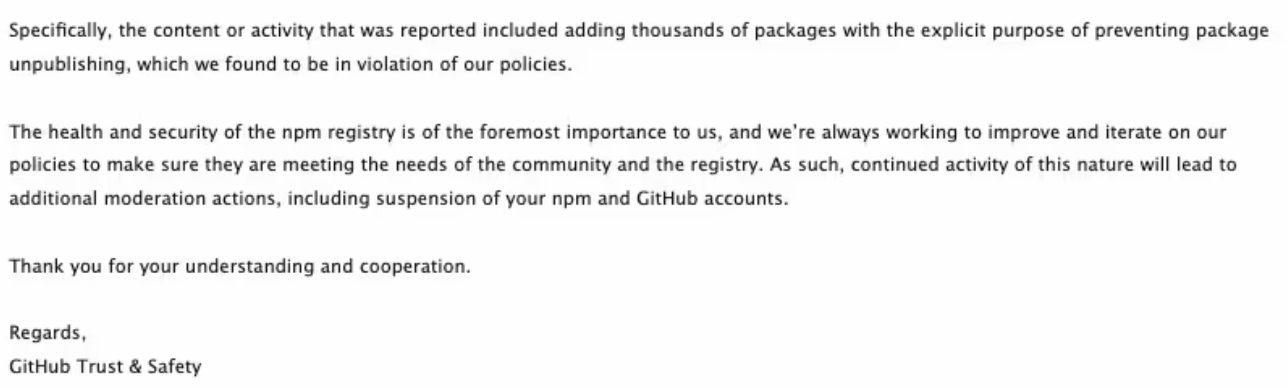
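To make the distinction concrete, here's a small sketch with made-up data. The same query is written imperatively, with a loop and a mutable result array, and declaratively, with `filter` and `map`, where the iteration itself is left to the language. Array methods are only a mild form of declarative programming (SQL or HTML go much further), and the article may frame the distinction somewhat differently.

```typescript
type Sketch = { title: string; likes: number };

// Hypothetical sample data.
const sketches: Sketch[] = [
  { title: "blobs", likes: 120 },
  { title: "wobbles", likes: 45 },
  { title: "hexagons", likes: 300 },
];

// Imperative: spell out *how*, step by step, mutating state as we go.
function popularTitlesImperative(list: Sketch[], minLikes: number): string[] {
  const result: string[] = [];
  for (let i = 0; i < list.length; i++) {
    if (list[i].likes >= minLikes) {
      result.push(list[i].title.toUpperCase());
    }
  }
  return result;
}

// Declarative: describe *what* we want; filter/map handle the looping.
function popularTitlesDeclarative(list: Sketch[], minLikes: number): string[] {
  return list
    .filter((s) => s.likes >= minLikes)
    .map((s) => s.title.toUpperCase());
}

console.log(popularTitlesImperative(sketches, 100));
console.log(popularTitlesDeclarative(sketches, 100));
```

Both calls return `["BLOBS", "HEXAGONS"]`. The difference lies in how much of the bookkeeping (loop counters, the result array) you have to manage yourself.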

The Package that broke NPM

In their bizarre quest (or act of digital mischief) to publish an "everything" package on npm, a package that depends on every other package on npm, high school student uncenter and his collaborators stress-tested the limits of GitHub and the Node Package Manager, making quite the news in the process:

While the digital logistics of this endeavour are quite fascinating, GitHub ultimately decided in the aftermath not to allow it. The main issue is that npm doesn't let authors unpublish a package once other packages depend on it, so a package that depends on every other package makes it impossible for anyone to unpublish theirs. That wouldn't be the case if they weren't dependencies.

AI Corner

LLMs and Programming in the first days of 2024

I don't know who antirez is, but it seems he's been around for a while, with his very first piece of writing dating back 4072 days (as of writing this), which is just a tad more than 11 years. I came across a recent piece of his in which he talks about his experience with LLMs and how they greatly simplify many tedious programming tasks:

Overall it reflects my own experience, and I'd assume that of many programmers who were programming 'the hard way' before the advent of LLMs. LLMs are super powerful but at the same time also really dumb. As antirez states, "an idiot who knows everything is a precious ally", which is a really good way to put it: LLMs fail miserably when you ask them to produce something novel or to reason with nuance about very niche tasks, but they excel when asked about things that are general knowledge, such as doing task X with framework Y. In that setting, LLMs can go a long way. I recommend giving the article a read. I'm curious how things will change as these language models become more powerful and nuanced, but as of now I think most programming jobs are not in danger.

Attention Mechanism in Transformers

In a deep-dive thread on Twitter/X, Eduardo Slonski explains the attention mechanism in transformers, the neural network architecture that powers LLMs:

A transformer is essentially a neural network with a very specific structure. Transformers were introduced in the 2017 paper "Attention Is All You Need" by Vaswani et al., and as the title already reveals, their innovation is that they rely entirely on an "attention" mechanism. Attention allows the model to weigh the importance of different parts of the input sequence against each other, and building on it alone let transformers do away with the recurrent and convolutional layers that earlier sequence models depended on.

In his thread, Eduardo showcases examples and visualizations of how this works and explains some of the technicalities behind it. The thread is a bit esoteric without prior knowledge of transformers; I never actually took the time to learn how they work, but I guess I should at this point. Definitely bookmarking it for later.
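For reference, the core formula from the paper is scaled dot-product attention, softmax(Q Kᵀ / sqrt(d_k)) V. Below is a deliberately naive sketch of a single attention head on plain arrays. It assumes the queries `q`, keys `k` and values `v` are already given, and it leaves out the learned projection matrices, multiple heads and masking that a real transformer uses.

```typescript
type Matrix = number[][];

function matmul(a: Matrix, b: Matrix): Matrix {
  return a.map((row) =>
    b[0].map((_, j) => row.reduce((sum, val, k) => sum + val * b[k][j], 0)),
  );
}

function transpose(m: Matrix): Matrix {
  return m[0].map((_, j) => m.map((row) => row[j]));
}

// Row-wise softmax; subtracting the row max keeps Math.exp from overflowing.
function softmaxRows(m: Matrix): Matrix {
  return m.map((row) => {
    const max = Math.max(...row);
    const exps = row.map((val) => Math.exp(val - max));
    const total = exps.reduce((acc, e) => acc + e, 0);
    return exps.map((e) => e / total);
  });
}

// Scaled dot-product attention: each output row is a weighted average of the
// rows of v, weighted by how strongly that query matches each key.
function attention(q: Matrix, k: Matrix, v: Matrix): { output: Matrix; weights: Matrix } {
  const dk = k[0].length;
  const scores = matmul(q, transpose(k)).map((row) => row.map((s) => s / Math.sqrt(dk)));
  const weights = softmaxRows(scores);
  return { output: matmul(weights, v), weights };
}

// Usage: self-attention over three 2-dimensional "token" vectors,
// using the same vectors as queries, keys and values.
const tokens: Matrix = [
  [1, 0],
  [0, 1],
  [1, 1],
];
const result = attention(tokens, tokens, tokens);
console.log(result.weights); // each row sums to 1
console.log(result.output);
```

The division by sqrt(d_k) keeps dot products from growing with the vector size, which would otherwise push the softmax towards near one-hot weights.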

Gorilla's Genuary so far...

It's been yet another week full of Genuary prompts, and it's been going surprisingly smoothly for me so far. I've managed to finish every prompt on time and am actually very happy with all of my creations. Here's a recap of what I made this week:

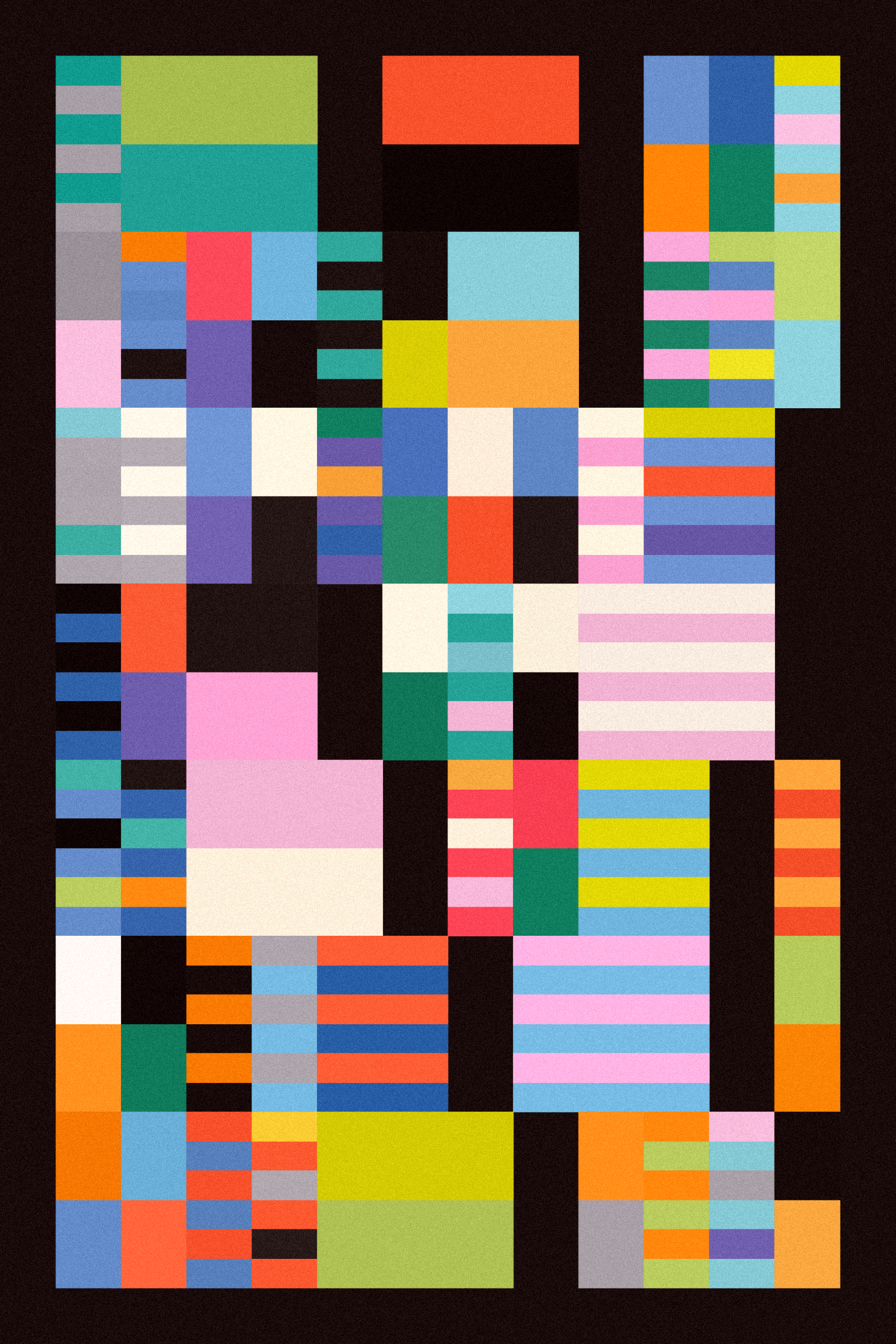

Genuary 8 - Chaotic System. A similar idea to the earlier Genuary 'Loading Bar' prompt (see the previous newsletter). Here I'm using Prim's maze generation algorithm to expand the pixelated regions and then doing an additional pass to clear the interiors based on some adjacency rules. It made for some really cool pixelated patterns; I'll definitely explore this one more at a later point.
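For readers who haven't met it, here's a sketch of the textbook randomized Prim's algorithm on a grid. The pixelated-region expansion and the interior-clearing pass from the piece aren't reproduced here. The algorithm starts from one cell, keeps a frontier of unvisited neighbours, and repeatedly attaches a random frontier cell to a random neighbour that's already part of the maze. The `primMaze` name and its parent-array output format are my assumptions for illustration.

```typescript
// Randomized Prim's algorithm on a w x h grid of cells. Returns, for each cell,
// the index of the cell it was carved from (-1 for the start cell). Together
// these links form a spanning tree, i.e. a perfect maze.
function primMaze(w: number, h: number, rng: () => number = Math.random): number[] {
  const parent = new Array<number>(w * h).fill(-2); // -2 = not yet in the maze
  const frontier: number[] = [];

  const neighbours = (i: number): number[] => {
    const x = i % w;
    const y = Math.floor(i / w);
    const out: number[] = [];
    if (x > 0) out.push(i - 1);
    if (x < w - 1) out.push(i + 1);
    if (y > 0) out.push(i - w);
    if (y < h - 1) out.push(i + w);
    return out;
  };

  // Linear `includes` keeps the sketch short; a Set would scale better.
  const addFrontier = (i: number): void => {
    for (const n of neighbours(i)) {
      if (parent[n] === -2 && !frontier.includes(n)) frontier.push(n);
    }
  };

  const start = Math.floor(rng() * w * h);
  parent[start] = -1;
  addFrontier(start);

  while (frontier.length > 0) {
    // Remove a random frontier cell (swap with the last element, then pop).
    const k = Math.floor(rng() * frontier.length);
    const cell = frontier[k];
    frontier[k] = frontier[frontier.length - 1];
    frontier.pop();
    // Connect it to a random neighbour that already belongs to the maze.
    const inMaze = neighbours(cell).filter((n) => parent[n] !== -2);
    parent[cell] = inMaze[Math.floor(rng() * inMaze.length)];
    addFrontier(cell);
  }
  return parent;
}

// Usage: draw a line (or open a wall) between every cell and its parent.
const maze = primMaze(10, 8);
console.log(maze.length);
```

Because cells are attached in random order from a frontier that surrounds the whole grown region, the structure spreads outward roughly evenly from the start cell. That growth pattern is one reason it's handy for expanding regions and not just for mazes.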

Genuary 9 - ASCII. A simple circular SDF, selecting characters from an array based on a sine wave, where those characters are sorted by brightness in the array. Somehow looks like an eye staring back at me.
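Here's a rough reconstruction of that idea under my own assumptions about the details (the character ramp, wave frequency and aspect correction are all guesses). It computes a circle's signed distance for each character cell, pushes that distance through a sine wave, and uses the wave's value to index into a string of characters sorted from sparse to dense.

```typescript
// Characters sorted roughly from sparse (dark on a dark background) to dense (bright).
const RAMP = " .:-=+*#%@";

// Signed distance to a circle: negative inside, zero on the edge, positive outside.
function circleSdf(x: number, y: number, cx: number, cy: number, r: number): number {
  return Math.hypot(x - cx, y - cy) - r;
}

// Renders one frame as text. The distance drives a sine wave, and the wave's
// value in [-1, 1] is mapped to an index into the brightness-sorted RAMP.
function asciiFrame(cols: number, rows: number, t: number): string {
  const lines: string[] = [];
  for (let j = 0; j < rows; j++) {
    let line = "";
    for (let i = 0; i < cols; i++) {
      // Character cells are roughly twice as tall as wide, so stretch y by 2.
      const d = circleSdf(i, j * 2, cols / 2, rows, rows * 0.6);
      const wave = Math.sin(d * 0.5 - t); // rings travel outward as t grows
      const idx = Math.floor(((wave + 1) / 2) * (RAMP.length - 1));
      line += RAMP[idx];
    }
    lines.push(line);
  }
  return lines.join("\n");
}

console.log(asciiFrame(60, 24, 0));
```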

Genuary 10 - Hexagonal. This one borrows code from my generative artwork Hex Appeal, which also involves Prim's algorithm plus an additional procedure that traces the contours of the regions. I modified the code to animate how this works.

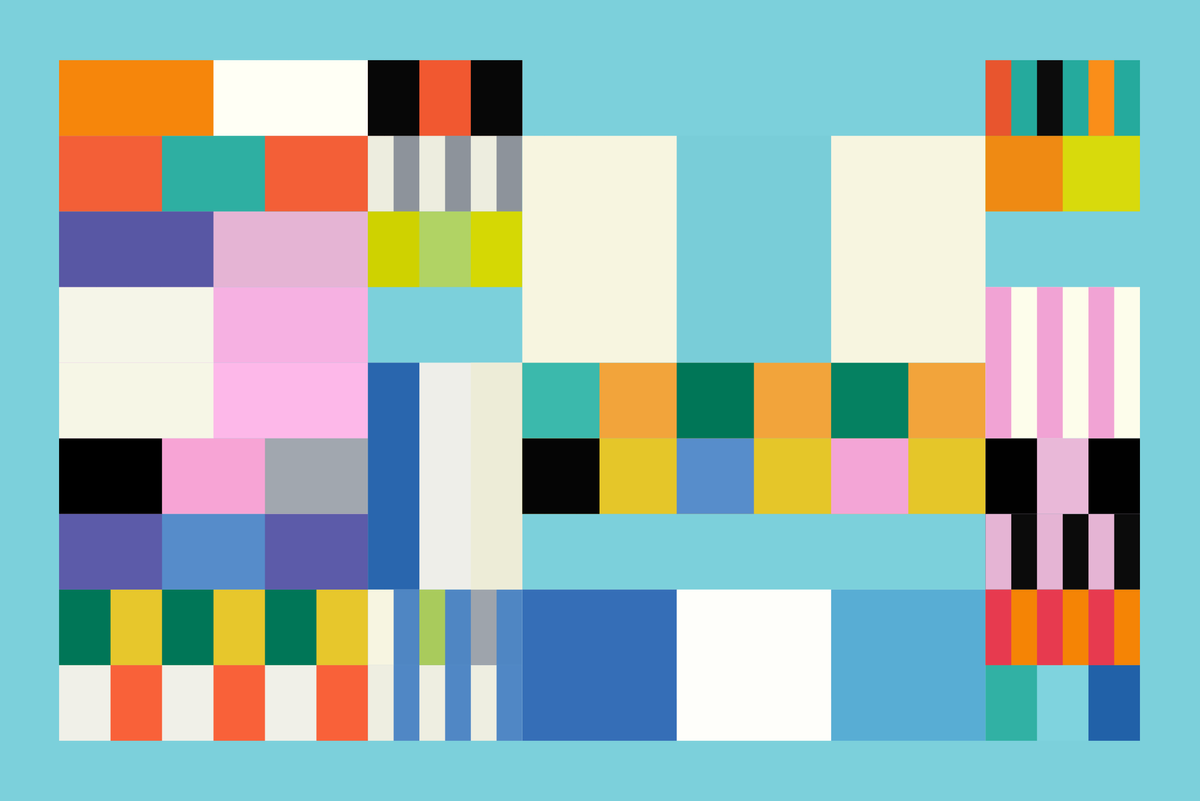

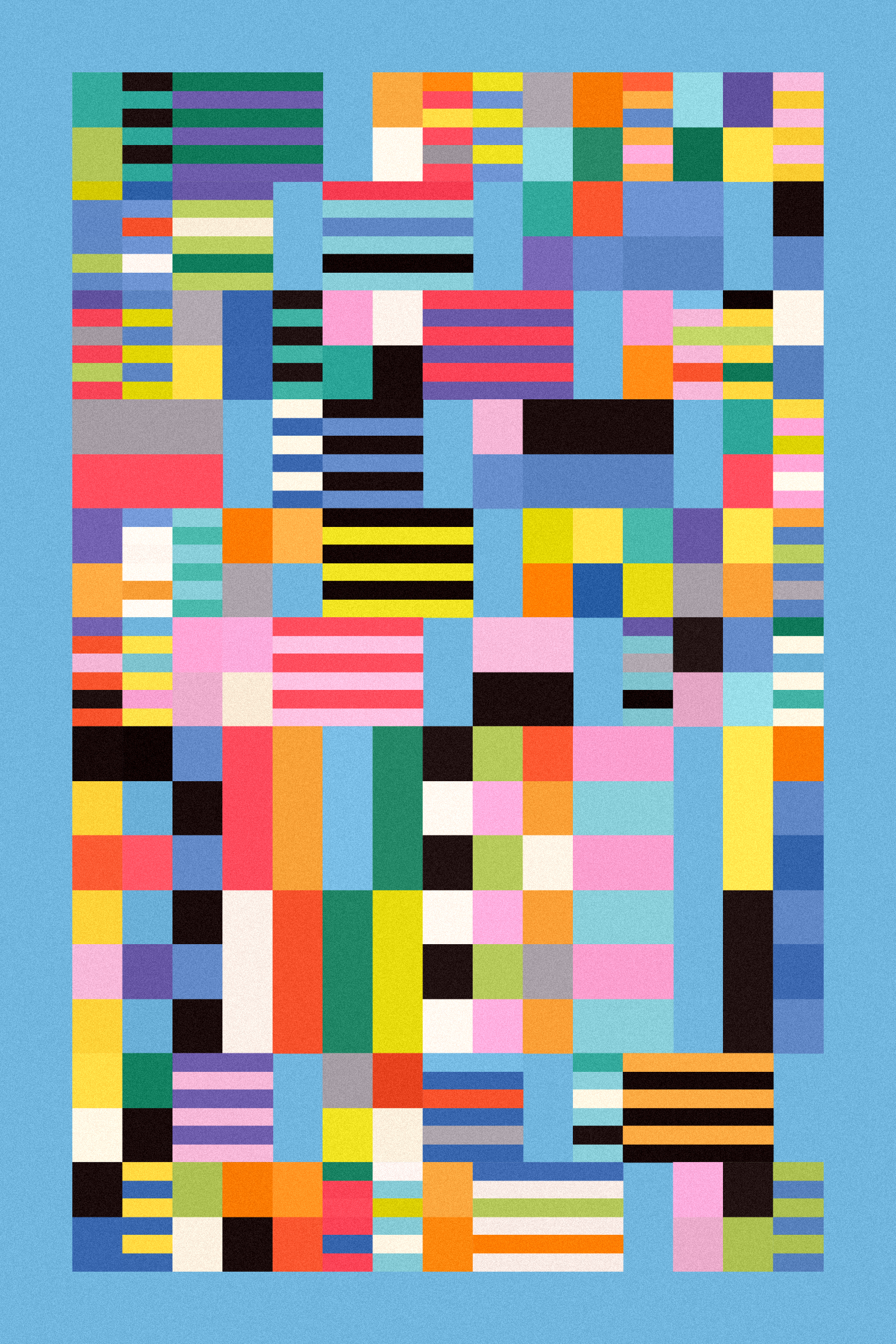

Genuary 11 - In the style of Anni Albers. The only static artwork I've made for Genuary so far, and people over on Twitter really enjoyed it. I don't know why, but these turned out better than anticipated. It's simply a nested loop that places rectangles in a grid-like manner, where some rows occasionally stretch across a longer span. Individual rectangles also have a chance of being further subdivided into smaller rectangles. I used Roni's Ten palette, with some additional colors.
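Here's a hypothetical reconstruction of that kind of nested loop. It's based on my reading of the description rather than the actual sketch: cells occasionally span several columns, and any rectangle has a chance of being split in half. The probabilities are made up, colors are left out, and `rng` can be swapped for a seeded generator to make outputs reproducible.

```typescript
type Rect = { x: number; y: number; w: number; h: number };

// Hypothetical reconstruction: walk a grid row by row; a cell sometimes
// stretches across several columns, and any rectangle may be split in two.
function albersGrid(
  cols: number,
  rows: number,
  cell: number,
  rng: () => number = Math.random,
): Rect[] {
  const rects: Rect[] = [];
  for (let r = 0; r < rows; r++) {
    let c = 0;
    while (c < cols) {
      // 20% chance to span 2-4 columns, clamped so a span never overruns the row.
      const span = rng() < 0.2 ? Math.min(2 + Math.floor(rng() * 3), cols - c) : 1;
      const rect: Rect = { x: c * cell, y: r * cell, w: span * cell, h: cell };
      if (rng() < 0.3) {
        // 30% chance to subdivide into two halves, side by side or stacked.
        if (rng() < 0.5) {
          const half = rect.w / 2;
          rects.push({ ...rect, w: half }, { ...rect, x: rect.x + half, w: half });
        } else {
          const half = rect.h / 2;
          rects.push({ ...rect, h: half }, { ...rect, y: rect.y + half, h: half });
        }
      } else {
        rects.push(rect);
      }
      c += span;
    }
  }
  return rects;
}

// Usage: a 12 x 8 grid of 20px cells; fill each rect with a palette color when drawing.
const layout = albersGrid(12, 8, 20);
console.log(layout.length);
```

The rectangles always tile the canvas exactly, so all the visual variety comes from the spans, the splits and the color choices.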

Genuary 12 - Lava Lamp. A little digital lava lamp powered by a ray-marching algorithm; shoutout to Patt Vira's cool tutorial on how to implement this. I actually attempted this two years ago but never got back to it.
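As a minimal sketch of the technique (not the code from Patt Vira's tutorial), a few spheres are merged with a polynomial smooth minimum, the commonly used formulation popularized by Inigo Quilez. A single ray is then sphere-traced through the resulting distance field. The scene, radii and blending factor `k` are arbitrary assumptions, and shading and the per-pixel loop are left out.

```typescript
type Vec3 = [number, number, number];

const length3 = (v: Vec3): number => Math.hypot(v[0], v[1], v[2]);

// Polynomial smooth minimum: blends two distances so nearby blobs merge into
// one gooey surface instead of intersecting with a hard crease.
function smin(a: number, b: number, k: number): number {
  const h = Math.min(Math.max(0.5 + (0.5 * (b - a)) / k, 0), 1);
  return b * (1 - h) + a * h - k * h * (1 - h);
}

// Hypothetical scene: three spheres bobbing up and down over time t.
function sceneSdf(p: Vec3, t: number): number {
  let d = Infinity;
  for (let i = 0; i < 3; i++) {
    const c: Vec3 = [(i - 1) * 0.6, Math.sin(t * 0.7 + i * 2.1) * 1.2, 0];
    const sphere = length3([p[0] - c[0], p[1] - c[1], p[2] - c[2]]) - 0.45;
    d = i === 0 ? sphere : smin(d, sphere, 0.5);
  }
  return d;
}

// Sphere tracing: advance along the ray by the distance to the nearest surface
// (a step that won't overshoot as long as the SDF doesn't overestimate distances)
// until we are close enough to call it a hit. Returns the hit distance or null.
function march(origin: Vec3, dir: Vec3, t: number, maxSteps = 100, maxDist = 20): number | null {
  let travelled = 0;
  for (let s = 0; s < maxSteps; s++) {
    const p: Vec3 = [
      origin[0] + dir[0] * travelled,
      origin[1] + dir[1] * travelled,
      origin[2] + dir[2] * travelled,
    ];
    const d = sceneSdf(p, t);
    if (d < 1e-3) return travelled; // hit
    travelled += d;
    if (travelled > maxDist) break;
  }
  return null; // miss
}

console.log(march([-0.6, 0, -5], [0, 0, 1], 0)); // ray aimed at the first sphere
console.log(march([5, 5, -5], [0, 0, 1], 0)); // ray that misses everything
```

Calling `march` once per pixel, with `dir` pointing from the camera through that pixel, and shading the hits gives the basic lava lamp image. Animating `t` makes the blobs rise, sink and merge.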

Genuary 13 - Wobbly Functions. This one was a lot of fun to make: a classic Gorilla spiral SDF with some additional wobble that modulates the outward-traveling bands. I had this one in mind before but never got around to figuring it out. It ended up being relatively straightforward.

Genuary 14 - Less than 1kb Artwork. I wanted to create a cute parametric animation, inspired yet again by some of beesandbombs' work. As an additional challenge I wanted to do it purely in vanilla JS, which ended up not being too difficult. Note to self: I need to figure out how to run an animation loop at a specific frame rate, because exporting the gif was a bit of a hassle.
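One common fix is sketched below as an assumption, not something I've tried in that piece. It decouples the animation from the display's refresh rate with a fixed-timestep accumulator and drives the drawing from a frame counter. For GIF export, stepping the counter directly and using frame / fps as the time makes every exported frame deterministic. `createFixedStepper` and `draw` are hypothetical names.

```typescript
// Returns a tick(nowMs) function that runs `update(frame)` at a fixed rate,
// independent of how often tick itself is called (e.g. by requestAnimationFrame).
function createFixedStepper(fps: number, update: (frame: number) => void) {
  const stepMs = 1000 / fps;
  let last: number | null = null;
  let accumulator = 0;
  let frame = 0;
  return function tick(nowMs: number): void {
    if (last === null) last = nowMs;
    accumulator += nowMs - last;
    last = nowMs;
    // Run one update for every whole step that has elapsed since the last call.
    while (accumulator >= stepMs) {
      update(frame++);
      accumulator -= stepMs;
    }
  };
}

// In the browser (not run here), with a hypothetical draw(time) function:
// const tick = createFixedStepper(30, (f) => draw(f / 30));
// const loop = (now: number) => { tick(now); requestAnimationFrame(loop); };
// requestAnimationFrame(loop);
//
// For export, skip the clock entirely: for (let f = 0; f < 90; f++) draw(f / 30);

const framesSeen: number[] = [];
const demoTick = createFixedStepper(50, (f) => framesSeen.push(f));
for (let ms = 0; ms <= 1000; ms += 10) demoTick(ms); // simulated 100 Hz display
console.log(framesSeen.length);
```

Even though the simulated display ticks at 100 Hz, the update runs 50 times per second.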

How's your genuary going so far?

Music for Coding

It's been a minute since I've enjoyed an album quite as much as this one - The Ironsides are a trio that make music inspired by Italian cinema, blending sounds from soul and jazz and creating something truly special in the process:

And that's it from me this week! While I jam out to some of these tunes, I bid my farewells. Hopefully this caught you up a little on the events in the world of generative art, tech and AI throughout the past week.

If you enjoyed it, consider sharing it with your friends, followers and family - more eyeballs/interactions/engagement helps tremendously - it lets the algorithm know that this is good content. If you've read this far, thanks a million!

If you're still hungry for more Generative art things, you can check out the most recent issue of the Newsletter here:

Previous issues of the newsletter can be found here:

Cheers, happy sketching, and again, hope that you have a fantastic week! See you in the next one - Gorilla Sun 🌸