Welcome back everyone 👋 and a heartfelt thank you to all the new subscribers who joined in the past week!

This is the 42nd issue of the Gorilla Newsletter - a weekly online publication that sums up everything noteworthy from the past week in generative art, creative coding, tech and AI - as well as a sprinkle of my own endeavors.

Hope you're all having a great start to the week! Without further ado, let's get straight into it! 👇

Warpcast & Farcaster Frames

Let's start this week's issue with some words about Farcaster - a big chunk of the generative art community has recently joined this budding Web3 social media platform, and overall it's been gaining a lot of traction as a Twitter alternative for the Web3 crowd.

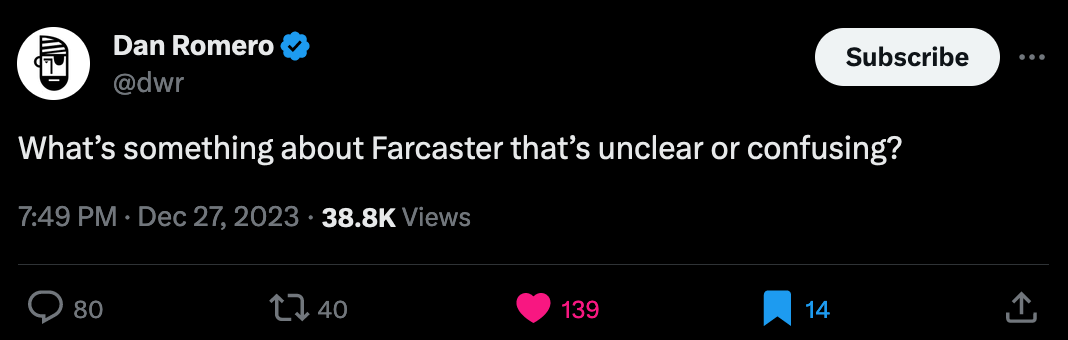

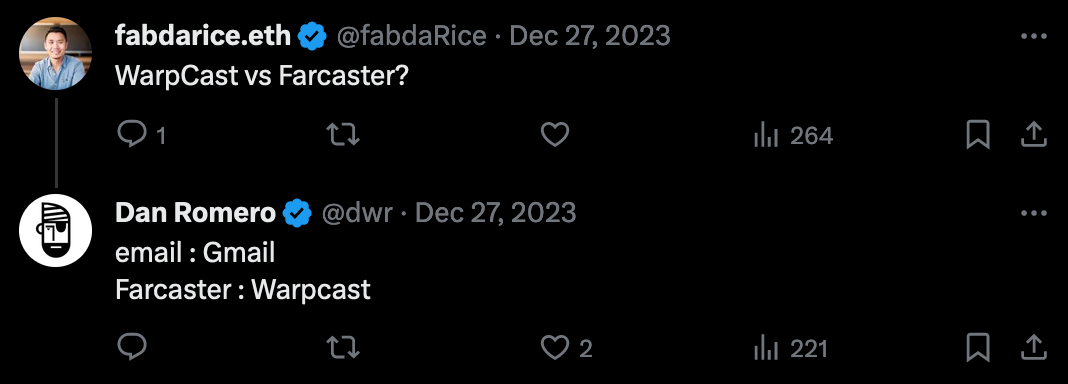

Warpcast is a relatively new social media platform built on top of Farcaster, a Web3 protocol for building decentralized social media apps co-founded by Dan Romero. I actually signed up for Warpcast about a month ago without knowing too much about the platform, after I saw a tweet from Romero in which he attempted to clear up some common points of confusion:

Maybe it's not a perfect comparison, but I think a good point of reference is the Fediverse, if you're familiar with it. In the aftermath of Musk's Twitter takeover, we've seen a big migration of people over to Mastodon in the last two years. Mastodon is a decentralized social media platform that exists in the larger context of the Fediverse, in which the different entities use the ActivityPub protocol to communicate with each other. This means that things in the Fediverse are interoperable, since they all use the same underlying protocol.

Farcaster is similar in this regard, in that it's also a decentralized protocol, with the big difference that it's built on Ethereum, which gives it blockchain-powered capabilities. For instance, your identity on Farcaster is tied to your wallet address, and it is also one of the primary ways to interact with the apps and features that Farcaster integrates. In this scenario, Warpcast is simply the interface that you use to interact with the Farcaster protocol:

The following post provides a good explanation and some insights into all of this:

Picture it as a unified platform where social apps like X, Instagram, and Facebook coexist and users can connect them all with one decentralized ID. This means even if any app imposes restrictions, users still have their identity and can seamlessly migrate their connections to alternative apps on the network.

If this has piqued your interest and you'd like to join Farcaster, you can use this invite link, which gives both of us warps - a kind of token that can be redeemed within the Farcaster ecosystem. It's important to note that Farcaster costs 5 bucks a year, which is used to cover the associated gas fees.

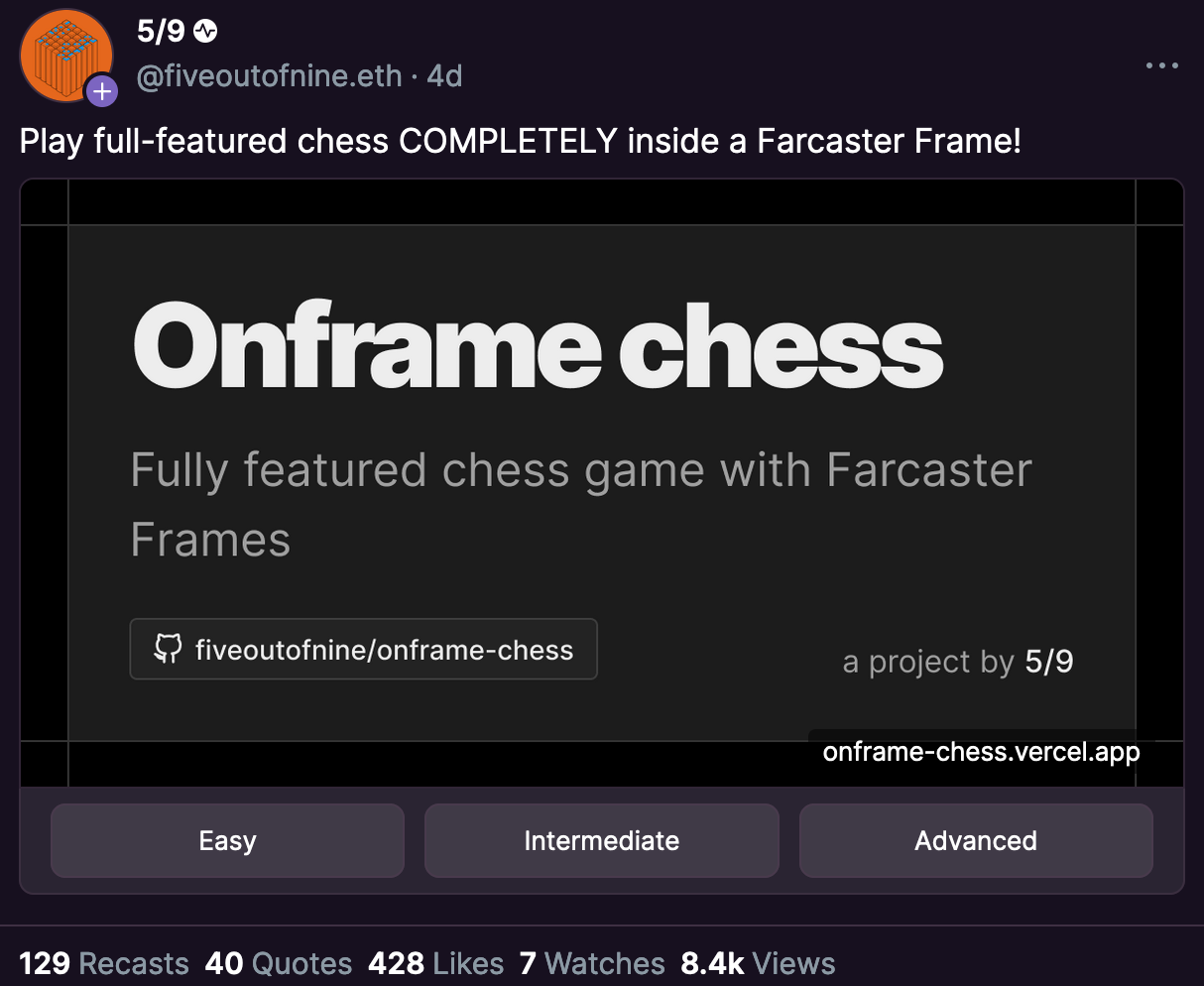

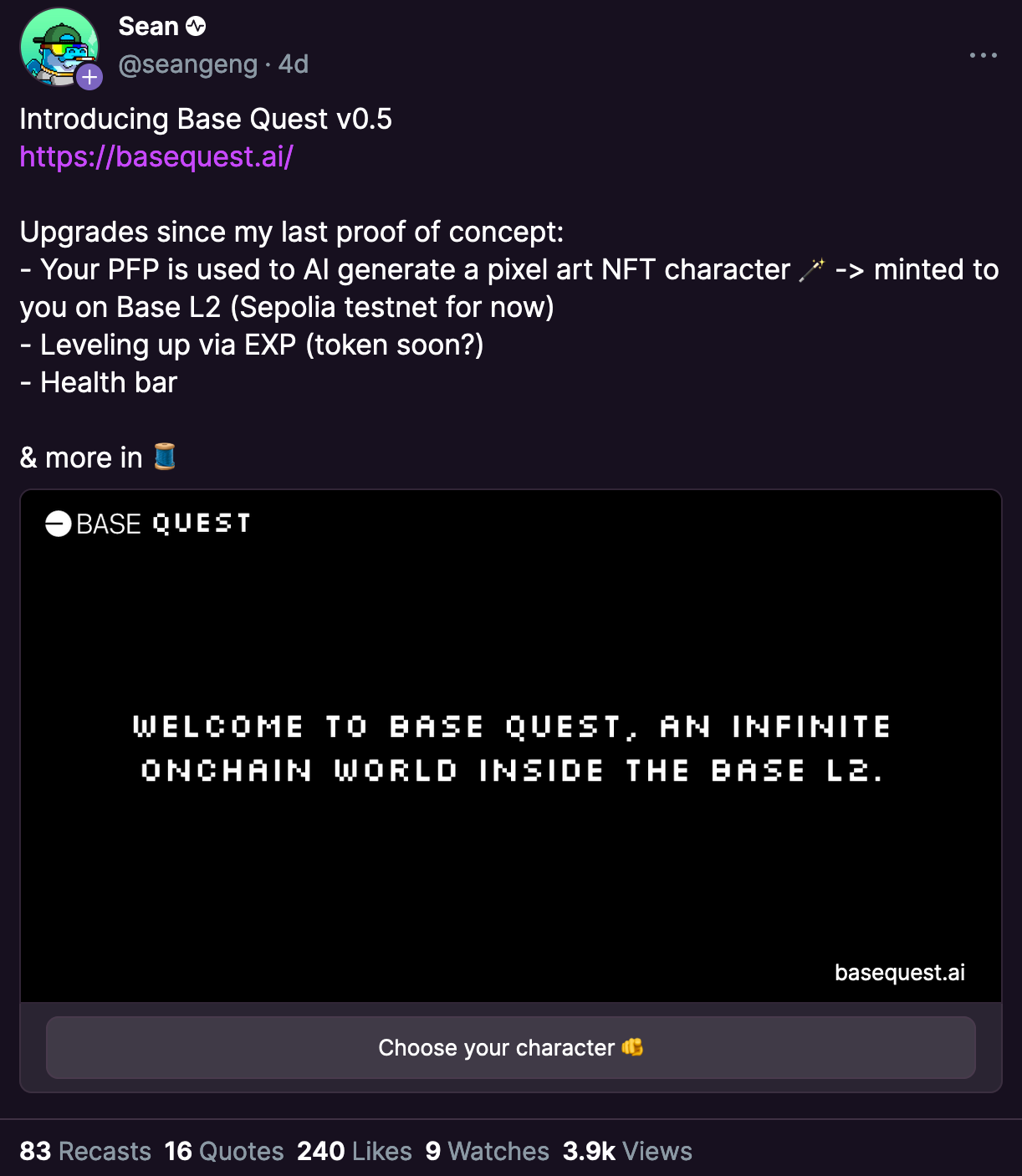

One of the biggest developments in the Farcaster ecosystem so far happened last week, when a feature called Frames was introduced: a new kind of interactive embed that Farcaster users can build themselves and inject into the Farcaster feed. This probably sounds a little esoteric, but in other words, people can now code their own little embeddable apps and share them directly as casts on Farcaster - think of them as interactive media tweets. Some notable examples of this are Onframe Chess by 5/9 and Base Quest by Sean:

Link to Onframe Chess Cast | Link to Base Quest Cast

Basically, you can now play chess or a text adventure directly in your Farcaster feed, and I believe that this really sets Farcaster apart from other social media apps. If you're curious to learn more about Frames, here's another recent article that introduces them with a little more context than I can:

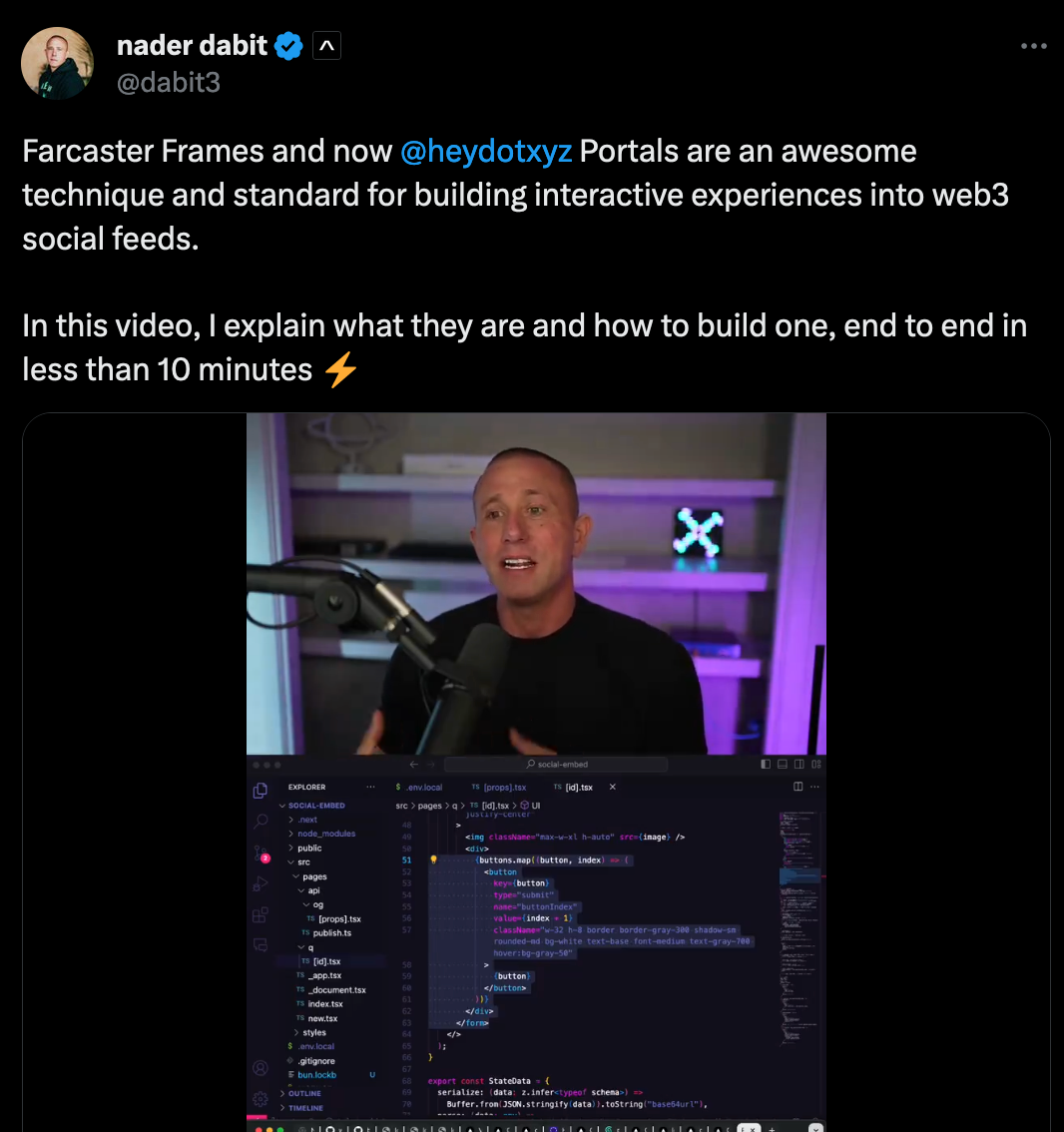
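To give a rough idea of what's going on under the hood: as far as I understand the initial "vNext" Frames spec, a Frame is just a regular web page whose `<head>` carries a handful of `fc:frame` meta tags (an image to show, up to four buttons, and a `post_url` that receives a POST request when a button is clicked). The TypeScript sketch below renders such a page. The tag names reflect my reading of the early spec, which has been evolving quickly, and the `renderFrameHtml` helper and all URLs are hypothetical, so double-check the official Farcaster docs before building on it.

```typescript
// A minimal sketch of a Farcaster Frame page, assuming the early "vNext"
// meta-tag spec (fc:frame, fc:frame:image, fc:frame:button:N, fc:frame:post_url).
// renderFrameHtml and every URL used below are hypothetical placeholders.

interface FrameOptions {
  imageUrl: string;  // image shown in the cast
  buttons: string[]; // button labels (the early spec allows at most 4)
  postUrl: string;   // endpoint that receives a POST when a button is clicked
}

// Escape characters that would otherwise break out of an HTML attribute value.
function escapeAttr(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

function renderFrameHtml({ imageUrl, buttons, postUrl }: FrameOptions): string {
  if (buttons.length > 4) {
    throw new Error("a Frame supports at most 4 buttons");
  }
  const tags = [
    `<meta property="fc:frame" content="vNext" />`,
    `<meta property="fc:frame:image" content="${escapeAttr(imageUrl)}" />`,
    `<meta property="og:image" content="${escapeAttr(imageUrl)}" />`,
    // Buttons are numbered starting at 1.
    ...buttons.map(
      (label, i) => `<meta property="fc:frame:button:${i + 1}" content="${escapeAttr(label)}" />`
    ),
    `<meta property="fc:frame:post_url" content="${escapeAttr(postUrl)}" />`,
  ];
  return `<!DOCTYPE html>\n<html>\n<head>\n${tags.join("\n")}\n</head>\n<body></body>\n</html>`;
}

const html = renderFrameHtml({
  imageUrl: "https://example.com/board.png",
  buttons: ["Move", "Resign"],
  postUrl: "https://example.com/api/frame",
});
console.log(html);
```

Serving that HTML from a URL and sharing the link in a cast should, per the early spec, make clients render it as a Frame; the actual app logic would live in whatever handles the POST to `post_url`.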

And if you're interested in building your own Farcaster Frame, you need to check out the tutorial that Nader Dabit posted over on Twitter/X:

Alright, that's enough about Farcaster for starters. Before this starts sounding like made-up technobabble straight from r/VXJunkies, I'll cut things off here and move on to the regular Newsletter segments. Let me know if you end up joining, and especially if you build an interesting Frame!

All the Generative Things

Of course, there have also been a bunch of interesting developments in the genart space over the course of the past week 👇

Genuary 2024 Comes to an End

Most notably, Genuary 2024 concluded on the 31st of January. With hundreds of participants and thousands of submissions across multiple platforms, it's unarguably been the biggest iteration of the event yet. Although I'm a little sad that it's over, since it's such a great way to get so many ideas out of your system, I'm also excited to spend more quality time on some of my favorite ideas that came up throughout the month.

For the occasion, the Le Random team joined forces with Joyn to host a Twitter space, inviting Piter Pasma, who organizes and selects the prompts for Genuary every year, as well as some of the participating artists, such as Darien Brito, Ella, and yours truly, to talk a little about the entire experience. The Twitter space was recorded, and a shortened version (minus all of the fluff) can be found over on Apple Podcasts:

I thoroughly enjoyed the thoughtful questions from the Le Random team as well as Piter's insightful answers, in which he reflected on how Genuary has grown over the past 4 years and now occupies an important spot that ties the community together every year. As a platform-independent event, it gives generative artists the opportunity to connect with each other simply for the joy of making generative art, without any other incentives.

Besides that, Monk also wrote an exclusive article for Joyn that complements the Twitter space, covering some of his favorite artworks for the 10 Genuary prompts that he found most interesting:

In the Twitter space I was asked what the overall Genuary experience was like, and I had actually thought a bit about it beforehand: to me, the beauty of Genuary lies in the creative interpretation of the prompts. It's not so much a coding challenge as simply a creative challenge, where you aim to make something that surprises in the context of the given prompt. I try not to look at anyone else's creations before I've finished my own; not only does this keep what I make authentic, it also makes discovering what others made so much more fun.

Piter told us that the prompts he conceived for the very first Genuary mainly revolved around programming techniques and algorithms. This changed quite a bit when he started asking others for suggestions, and people pitched ideas that weren't necessarily directly related to programming.

Another big factor is the time limit. Of course there's no hard rule to complete the prompts on their allocated day, but I believe that it adds another aspect to the challenge that frequently pushes artists outside of their comfort zone. This makes me think back to an article that I shared a couple of weeks ago, a piece by Ralph Amer in which he talks about the Creative Playground - "Creative limitations set our creativity free":

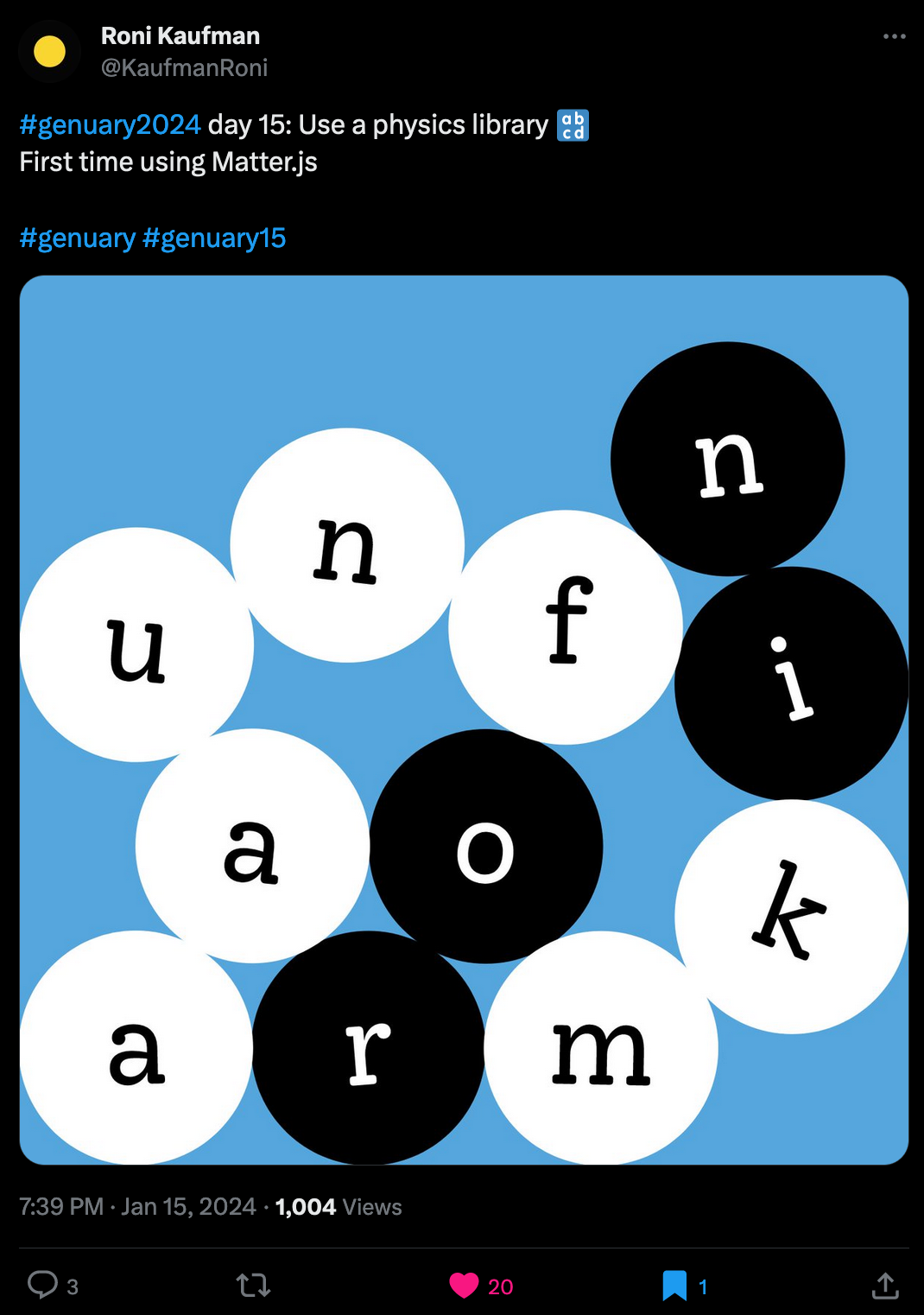

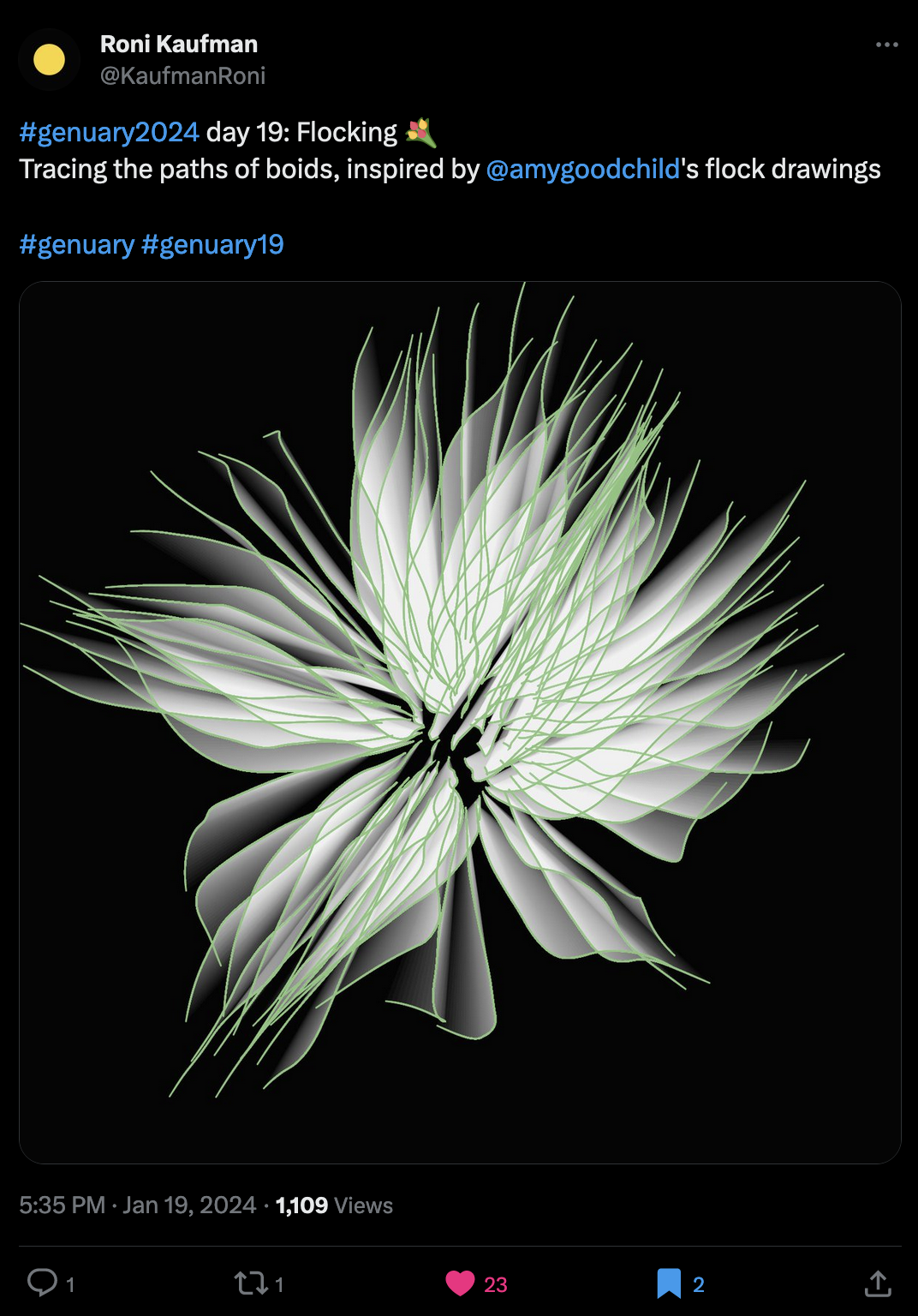

Others like to add challenges of their own, like Roni Kaufman, who chose to only create static artworks for each prompt, even when some prompts inherently referred to concepts associated with movement, like prompt #15, which asks you to use a physics library, or #19, which simply states 'flocking'.

Or take Raphaël de Courville's AI-assisted Genuary speedrun: some people do the prompts on their allocated days, others spread them out over the entire year, and Raph just wants to watch the world burn and does all of the prompts in a single sitting. His final time clocks in at 1 hour 46 minutes and 56 seconds. You can watch a recording of his stream here:

Towards the end of the stream, Raph challenges Shiffman to attempt the speedrun as well and see if he can beat his time. We now eagerly await his response. Also, subscribe to Raph on Twitch: when he reaches 2000 subs, he'll record another funny bird sound that we can then trigger through the Twitch chat.

Genuary is just such a beautiful event, and the community never feels more alive than over its course - I'm already looking forward to next year!

Harold Cohen Retrospective

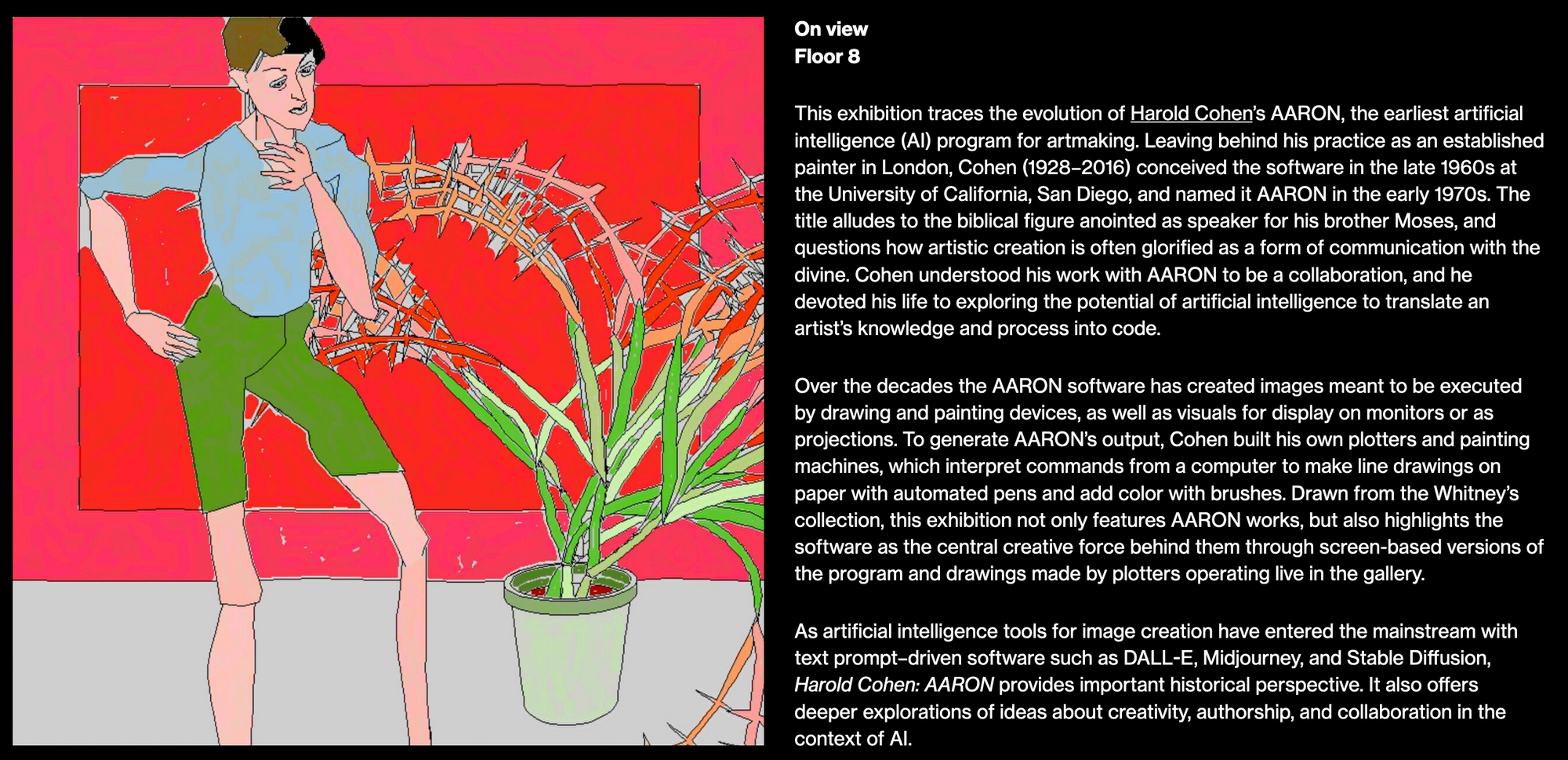

Last week the Harold Cohen retrospective showcasing his AARON works kicked off at the Whitney Museum of American Art in Manhattan:

Harold Cohen is one of the earliest artists to experiment with AI for the purpose of making art; most notably, he conceived a set of algorithms under the name AARON:

AARON is the collective name for a series of computer programs written by artist Harold Cohen that create original artistic images. Proceeding from Cohen's initial question "What are the minimum conditions under which a set of marks functions as an image?", AARON was in development between 1972 and the 2010s - excerpt from the AARON Wikipedia page

Although the source code for AARON is not publicly available, it was most likely a vastly different type of AI from the ones we are familiar with today. Harold Cohen was also one of the first to explicitly ask questions about the collaborative practice between artist and machine, acting as a catalyst for the conversation around authorship and agency. Naturally, we can count on Le Random for stellar coverage, as they interviewed Christiane Paul, the curator in charge of the AARON exhibition:

It was interesting to see Sougwen Chung being brought up, since I also immediately thought of her when I saw pictures of the AARON plotters - in many ways she follows in the footsteps of Harold Cohen. Le Random also did an interview with her a while back.

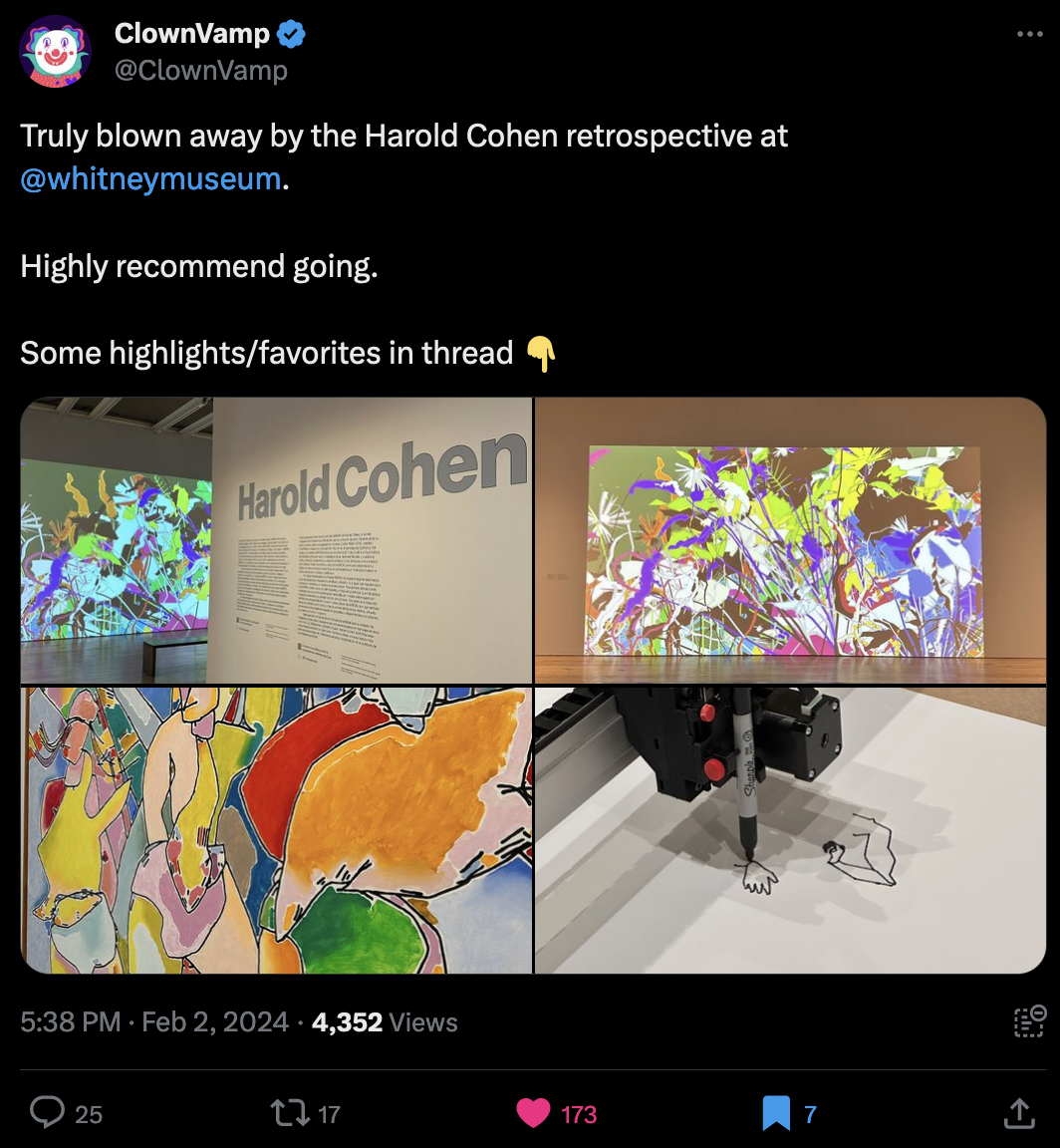

Since only a fraction of the newsletter readership is likely physically anywhere near Manhattan, here's a glimpse into the exhibition through ClownVamp's excellent Twitter thread, which showcases some of the highlights:

Infinite Craft by Neal Agarwal

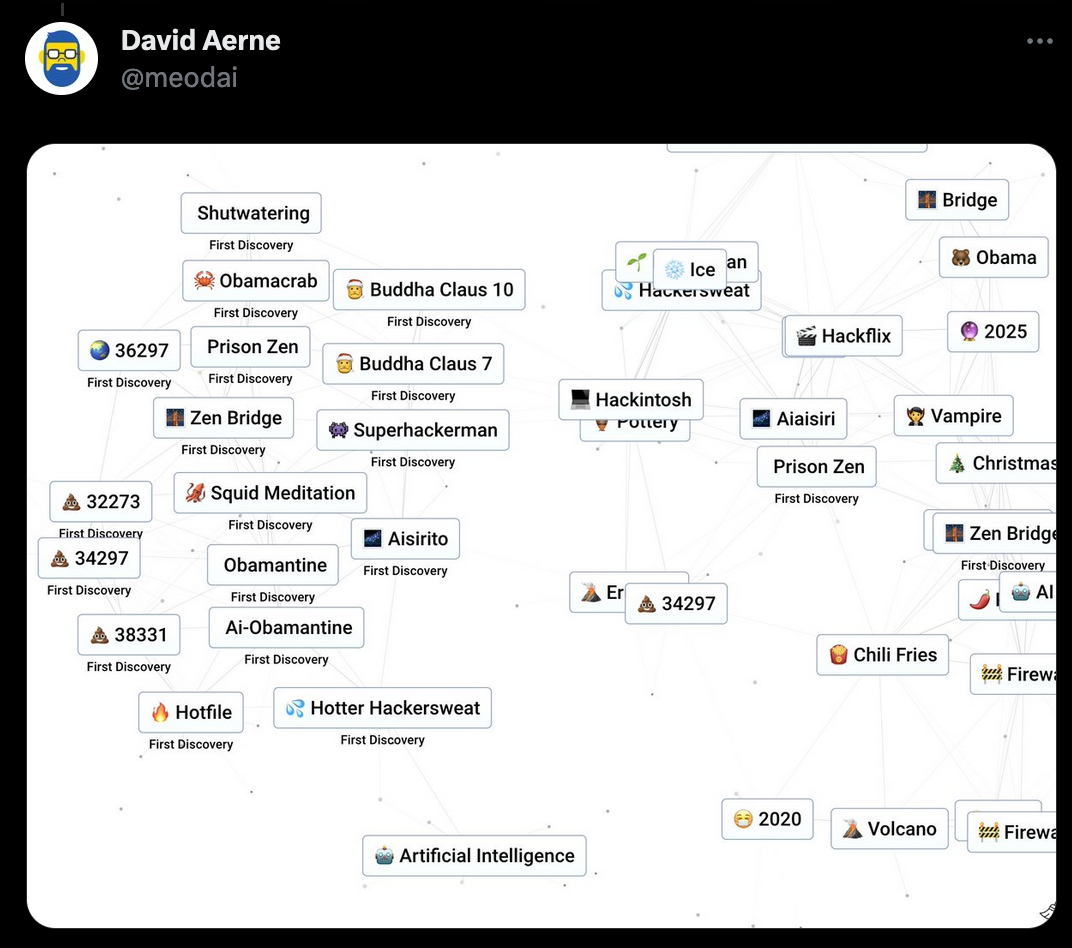

Neal really outdid himself again with his latest project, Infinite Craft - an LLM-powered crafting game:

And as its name suggests, it's an infinite one at that. If you remember a game called Little Alchemy from way back when, it's essentially that: a sandbox game in which you combine elements by dragging and dropping them onto each other, producing new elements that can in turn be used to discover even more elements, and so on. There isn't really a specific goal; all of the fun stems from trying out different combinations and seeing what they produce.

I remember that Little Alchemy would quickly get frustrating once you'd tried most of the combinations and couldn't really progress anymore. Naturally, the combinations in that game are hard-coded, so once you've found everything, it's done. Throwing an LLM into the mix that can endlessly conjure new combinations makes this infinitely more exciting:

I'm not entirely sure how it's implemented. There's probably a prompt that asks the language model "What do you get when you combine X and Y?", in addition to a database that stores the combinations that have already been discovered. It is deterministic in the sense that combining the same two elements will always yield the same output, and sometimes you'll get a notice under an element indicating that it's a first discovery, meaning that no one else has managed to discover it before:

David Aerne somehow managed to produce an Obamacrab - and if that doesn't make you want to try the game out for yourself, then I don't know what will. Let me know if you end up getting any cool combos:

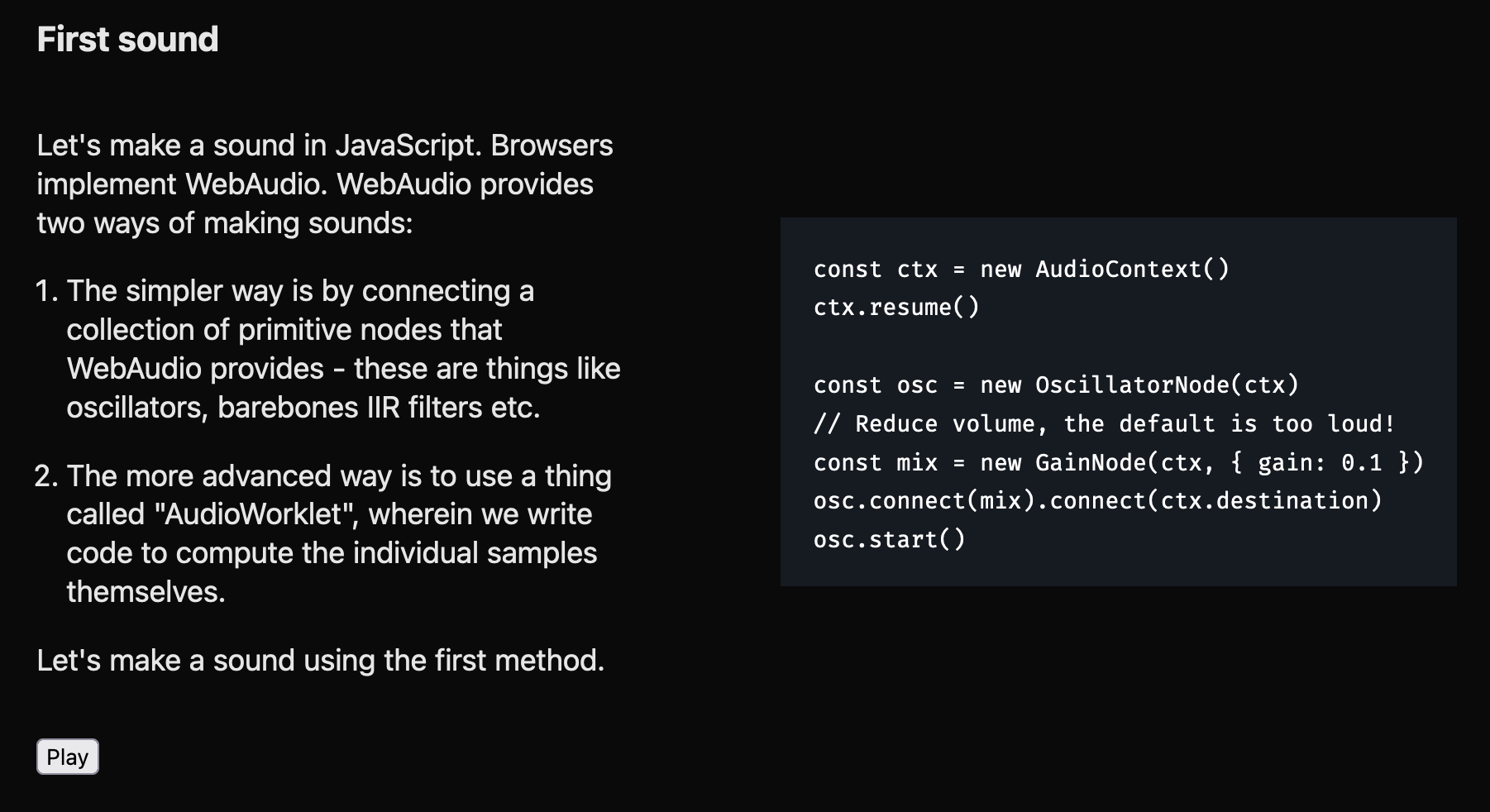
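Purely as a guess at the "prompt plus database" idea described above, here's a TypeScript sketch of how such a crafting game could be wired up. It is not Neal's implementation: `askModel` is a hypothetical stand-in for whatever language model call you'd use, and the "first discovery" flag is tracked per resulting element in an in-memory set rather than a real database.

```typescript
// A guess at how an LLM-backed crafting game *could* work; not Infinite Craft's
// actual code. `AskModel` is a hypothetical stand-in for a language model call.
type AskModel = (prompt: string) => Promise<string>;

interface CombineResult {
  element: string;
  firstDiscovery: boolean; // true if this element had never been produced before
}

class CraftingGame {
  private readonly askModel: AskModel;
  private readonly recipes = new Map<string, string>(); // "A + B" -> result
  private readonly discovered: Set<string>;             // every element seen so far

  constructor(askModel: AskModel, startingElements: string[]) {
    this.askModel = askModel;
    this.discovered = new Set(startingElements);
  }

  // Sort the pair so that "Fire + Water" and "Water + Fire" share one entry.
  private static key(a: string, b: string): string {
    return [a, b].sort().join(" + ");
  }

  async combine(a: string, b: string): Promise<CombineResult> {
    const key = CraftingGame.key(a, b);
    const known = this.recipes.get(key);
    if (known !== undefined) {
      // Cached recipe: the stored answer is reused, which keeps results deterministic.
      return { element: known, firstDiscovery: false };
    }
    const reply = await this.askModel(
      `What do you get when you combine ${a} and ${b}? Answer with a single word or short phrase.`
    );
    const element = reply.trim();
    const firstDiscovery = !this.discovered.has(element);
    this.recipes.set(key, element);
    this.discovered.add(element);
    return { element, firstDiscovery };
  }
}

// Usage with a fake model standing in for a real LLM call:
const game = new CraftingGame(async () => "Steam", ["Water", "Fire", "Wind", "Earth"]);
game.combine("Fire", "Water").then((result) => console.log(result));
```

The cache is what makes the game feel consistent: the model is only consulted the first time a pair is combined, and every later combination of that pair returns the stored answer.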

Generative Audio with Vanilla JS

While putting together my top picks for Genuary 2024 (which should come out sometime next week), I was scouring the r/generative subreddit to see what folks had made over there, and came across an interesting generative audio demo (I think it was a creation for the final prompt) that claimed to be done entirely in JavaScript with zero dependencies, which I thought was quite impressive:

I'd always assumed that you need a library like Tone.js to make JavaScript produce any sort of sound, but that isn't the case: browsers come with the built-in Web Audio API, whose AudioContext can be used for exactly this purpose - and Tone.js is in fact built on top of it:
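For reference, here's a minimal TypeScript sketch of making sound with nothing but the browser's built-in Web Audio API: an `OscillatorNode` feeds a `GainNode` whose volume decays, and each note is scheduled against `AudioContext.currentTime`. The helper names (`midiToFrequency`, `playNote`, `playArpeggio`), the triangle waveform, and the envelope values are my own arbitrary choices for illustration, not taken from the demo.

```typescript
// Convert a MIDI note number to a frequency in Hz (A4 = MIDI 69 = 440 Hz).
function midiToFrequency(note: number): number {
  return 440 * Math.pow(2, (note - 69) / 12);
}

// Schedule one short note: an oscillator feeding a gain node whose volume
// decays exponentially, so the note fades out instead of clicking off.
function playNote(ctx: AudioContext, note: number, startTime: number, duration = 0.3): void {
  const osc = ctx.createOscillator();
  const gain = ctx.createGain();
  osc.type = "triangle";
  osc.frequency.setValueAtTime(midiToFrequency(note), startTime);
  gain.gain.setValueAtTime(0.2, startTime);
  // Exponential ramps cannot target 0, so fade to a very small value instead.
  gain.gain.exponentialRampToValueAtTime(0.001, startTime + duration);
  osc.connect(gain);
  gain.connect(ctx.destination);
  osc.start(startTime);
  osc.stop(startTime + duration);
}

// Play the given notes one after another, 0.25 seconds apart.
function playArpeggio(ctx: AudioContext, notes: number[]): void {
  const t0 = ctx.currentTime + 0.05; // small offset so the first note isn't scheduled in the past
  notes.forEach((note, i) => playNote(ctx, note, t0 + i * 0.25));
}

// Usage in a browser (autoplay policies usually require a user gesture first):
// document.addEventListener("click", () => {
//   const ctx = new AudioContext();
//   playArpeggio(ctx, [60, 64, 67, 72]); // C major arpeggio
// }, { once: true });
```

Scheduling against the context's own clock rather than `setTimeout` is what keeps the timing tight, since the audio thread handles the start and stop times.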

Manav Rathi aka u/thousandsongs wrote a brief introduction to the audio context here:

This post complements another one in which he explains the demo and introduces Euclid's algorithm, which seems to be a potent method for creating interesting rhythmic patterns - you can read about it here. That was definitely a treasure trove: Manav also shares a fully commented synth implementation in TypeScript with zero dependencies:
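To get a feel for Euclidean rhythms, here's a TypeScript sketch of Bjorklund's algorithm, the usual way Euclid's algorithm gets applied to rhythm: it distributes `hits` onsets as evenly as possible over `steps` slots by repeatedly pairing groups and carrying the leftovers forward, just like the remainders in Euclid's GCD computation. This is my own illustration rather than code from Manav's posts, and the `euclideanRhythm` name is hypothetical.

```typescript
// Euclidean rhythm via Bjorklund's algorithm: spread `hits` onsets (1s) as
// evenly as possible over `steps` slots, filling the rest with rests (0s).
function euclideanRhythm(hits: number, steps: number): number[] {
  if (!Number.isInteger(hits) || !Number.isInteger(steps) || steps <= 0 || hits < 0 || hits > steps) {
    throw new RangeError("expected integers with 0 <= hits <= steps and steps > 0");
  }
  if (hits === 0) return new Array<number>(steps).fill(0);

  // Start with `hits` groups of [1] and `steps - hits` groups of [0].
  let front: number[][] = Array.from({ length: hits }, () => [1]);
  let remainder: number[][] = Array.from({ length: steps - hits }, () => [0]);

  // Like Euclid's algorithm: pair groups off, keep the leftovers as the new
  // remainder, and stop once at most one leftover group is left.
  while (remainder.length > 1) {
    const pairs = Math.min(front.length, remainder.length);
    const merged: number[][] = [];
    for (let i = 0; i < pairs; i++) {
      merged.push([...front[i], ...remainder[i]]);
    }
    remainder = front.length > pairs ? front.slice(pairs) : remainder.slice(pairs);
    front = merged;
  }

  // Concatenate all groups into one flat pattern.
  const pattern: number[] = [];
  for (const group of [...front, ...remainder]) pattern.push(...group);
  return pattern;
}

console.log(euclideanRhythm(3, 8).map((s) => (s ? "x" : ".")).join("")); // x..x..x.
```

For example, `euclideanRhythm(3, 8)` gives the familiar tresillo pattern `x..x..x.` and `euclideanRhythm(5, 8)` gives `x.xx.xx.`; each 1 could then trigger a note, and rotating the array shifts where the pattern starts.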

Web3 News

There's way too much going on in the Web3 x genart space to cover every week, but besides the big traction that Farcaster has recently been getting, here are some of the things that I think are worth mentioning 👇

Custom Highlight Embeds

Highlight now makes it possible for artists to share and distribute their generative mints directly from their own websites with custom embeds - here's a quick video run-down of how it works:

Seeing that the Gorilla Sun blog is my main home on the internet, this is super exciting to me, since it saves the trouble of having to redirect people to another page to mint something. I have yet to release anything on Highlight, but there are a couple of ideas already brewing - I'm definitely releasing something this month.

Overall I'm really impressed with how many new features Highlight has put out since their launch last year, and this is definitely a big one, giving artists even more control over how they release their artworks.

Art Blocks Acquires Sansa

Art Blocks announced that they're acquiring Sansa, with the goal of integrating its versatile marketplace into the Art Blocks ecosystem:

I think we're all familiar with Art Blocks at this point, the pioneering platform that made generative art NFTs possible on the Ethereum blockchain. As far as I understand, Sansa was developed later and independently of Art Blocks, providing a secondary marketplace for generative artworks on ETH, since Art Blocks didn't have its own proprietary secondary marketplace - I think OpenSea was one of the few other options for this purpose.

Art Blocks wrote a short announcement post explaining how this is going to go down and what the road-map looks like:

DiGen's Digital Me Open Call

After their successful soft launch at the end of December, DiGen Art has been hard at work putting together their inaugural group show "Digital Me" - and they're inviting artists from the MENA region in their open call:

If you are an artist from the MENA region or know someone who is, consider applying! You can learn more about what they're looking for and the criteria by which they'll select artists on the exhibition page and in the accompanying brochure:

Tech and Code

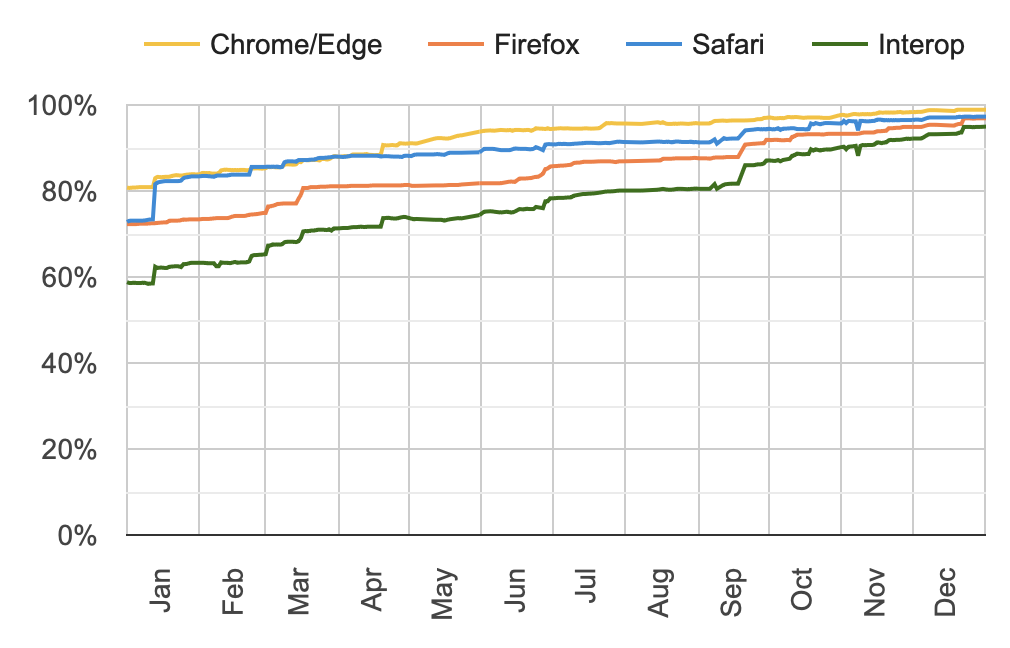

Interop 2024

Interop 2024 was just announced alongside the final results of Interop 2023, and it's looking good - the modern web is more interoperable than ever!

The Interop project is a yearly collaboration between the major browser vendors, in which they pick a set of focus areas and work on ironing out the differences between their evergreen browsers, so that those features work identically everywhere. Jen Simmons from the WebKit team goes over the announcement in this article, covering each of the focus areas, explaining the issues and elaborating on what's being done to remedy them.

12 Modern CSS One-Line Upgrades

CSS has been evolving steadily over the past years, gradually accommodating the increasing needs of the modern web. We're frequently seeing new properties and values being added for things that previously had to be implemented in hacky ways, by combining several declarations for purposes they weren't originally designed for. Stephanie Eckles writes about some of these new one-liners:

One other resource that's mentioned, and that I think is worth sharing here as well, is the SmolCSS page: a library of small (smol) CSS snippets that accomplish a lot of recurring, general-purpose tasks, like common types of layouts, various kinds of grids, etc. - definitely worth a bookmark:

Making Sense of Senseless JavaScript Features

JavaScript may be the most popular programming language today, but it also has quite a few quirks that will most likely leave you scratching your head when you first encounter them - this article by Juan Diego Rodriguez goes over some of them and tries to explain what is going on:

I've shared it before, but if you haven't seen it yet, you need to watch this iconic talk that Brian Leroux gave at dotJS back in 2012, in which he bravely descends into the madness of JavaScript, demonstrating the language's most bizarre features in a live coding session:
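A few classic examples of the kind of head-scratchers in question, written so they also compile as TypeScript. These are common illustrations I picked myself, not necessarily the ones covered in the article or the talk; the comments show the value each expression produces.

```typescript
// Array.prototype.sort() compares elements as strings by default.
const sortedDefault = [10, 1, 3].sort();                // [1, 10, 3]
const sortedNumeric = [10, 1, 3].sort((a, b) => a - b); // [1, 3, 10]

// Floating point: 0.1 and 0.2 have no exact binary representation.
const sum = 0.1 + 0.2;                                     // 0.30000000000000004
const closeEnough = Math.abs(sum - 0.3) < Number.EPSILON;  // true

// typeof null is "object", a long-standing quirk kept for backwards compatibility.
const nullType = typeof null;                            // "object"

// Math.max() with no arguments returns -Infinity (the identity value for max).
const emptyMax = Math.max();                             // -Infinity

// NaN is the only value not equal to itself, so test for it with Number.isNaN.
const notANumber = Number("gorilla");                    // NaN
const isNaNCheck = Number.isNaN(notANumber);             // true

console.log(sortedDefault, sortedNumeric, sum, closeEnough, nullType, emptyMax, isNaNCheck);
```

Most of these stop being surprising once you know the underlying rule: default sorting is string-based, numbers are IEEE 754 doubles, and some early design decisions were kept so that existing websites wouldn't break.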

OLMo: An Open Source Language Model

Arguably the biggest news in the AI world from the past week is the release of OLMo: an open-source, state-of-the-art large language model. The Allen Institute for AI aims to democratize this new technology: to help researchers better understand the inner workings of LLMs and experiment with their capabilities, and to enable developers to create interesting LLM-powered applications over which they have full control. Here's a quick run-down of the features that come with this project:

If you're doing anything with AI, this is probably something you'll want to keep an eye on:

Gorilla Updates

Hot off the Press

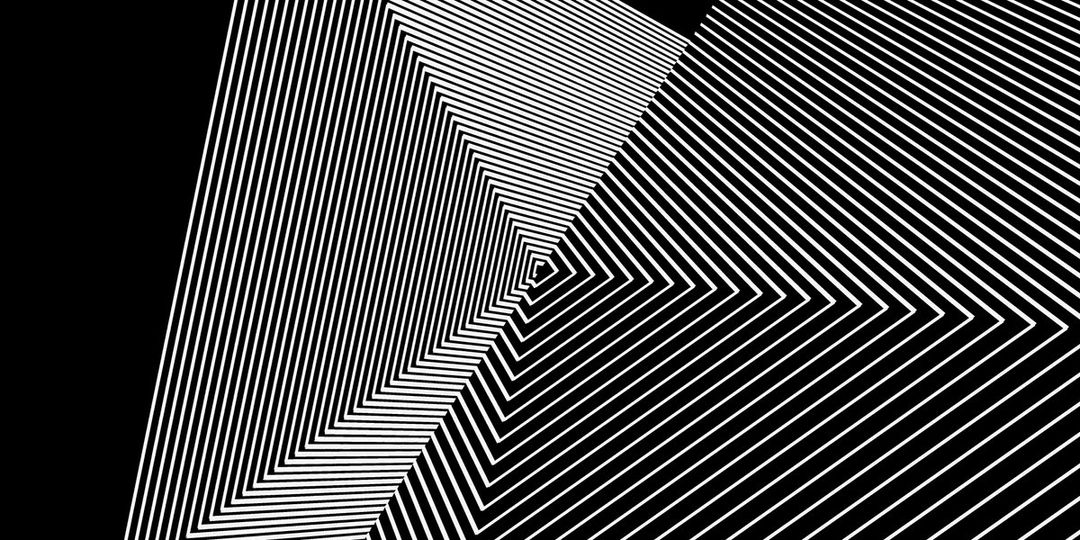

Last week I put together one big recap of everything that I made over the course of Genuary 2024 - turns out that you end up making quite a few interesting things when you make something every day for a month. I wrote a little commentary for each of my creations, explaining what's going on and covering the inspirations and concepts behind each individual sketch:

Another thing I'd like to mention here, for documentation purposes, is that I was mentioned in Collector Stories Season 2, Episode 4 by SUBI, at around the 1 hour 30 minute mark. SUBI, a collector who's primarily active on the Solana chain, decided to join Tezos with my token 'Somewhere in Between' as their first mint. I'm really honored that they chose one of my works:

Here's a link to the recording of the Twitter space, in which some interesting points were raised:

Fresh off the Easel

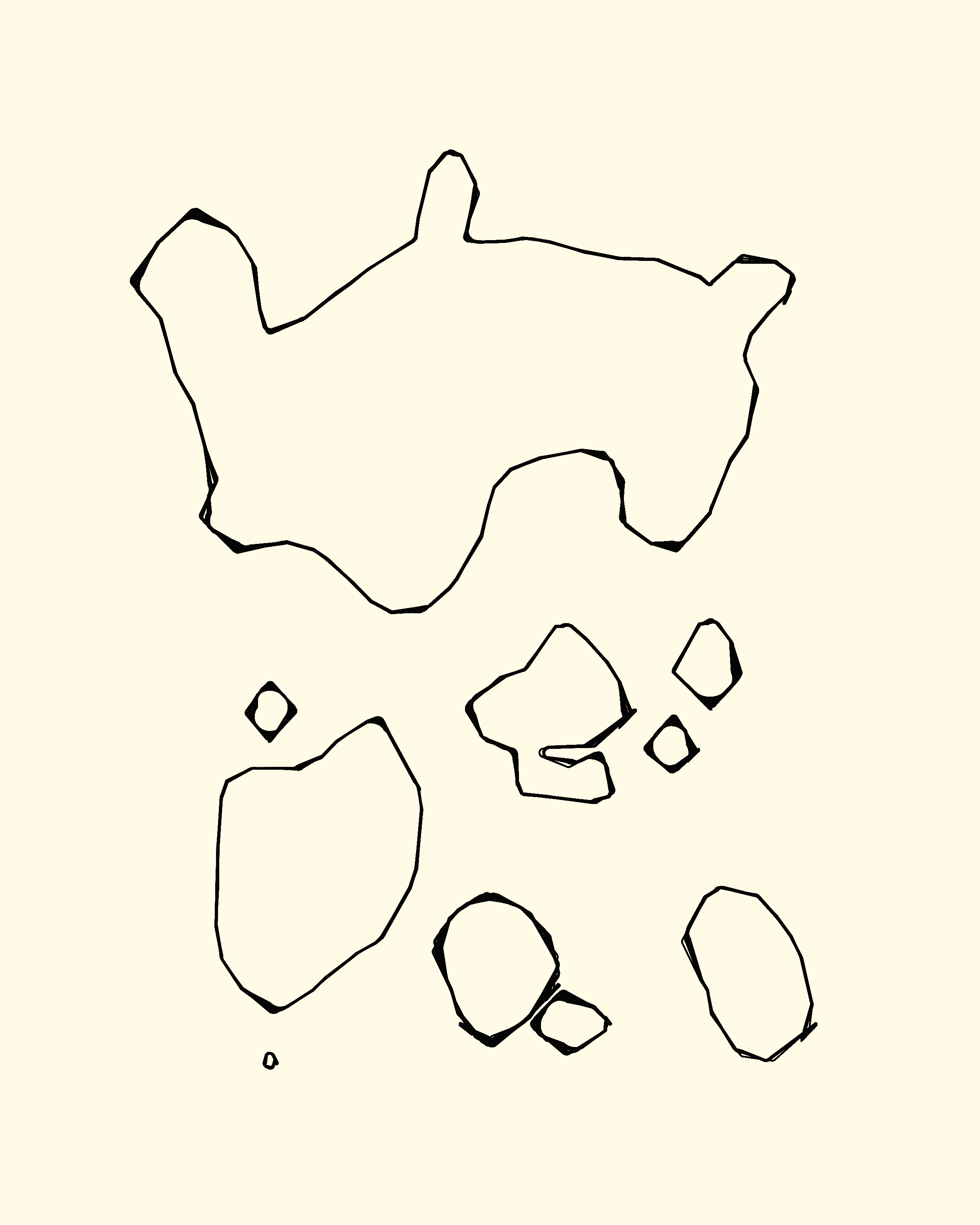

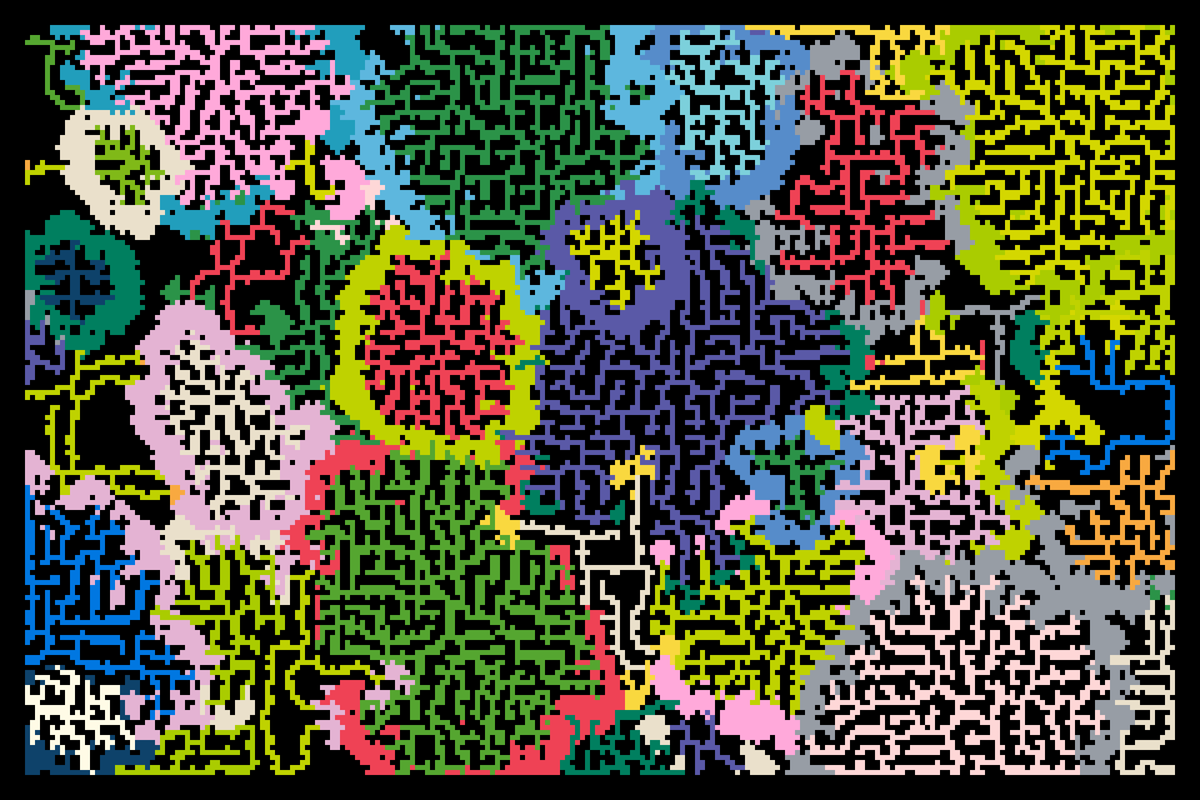

Besides wrapping up those final Genuary prompts, I actually found some time this week to start investigating some of the ideas that took shape over the course of Genuary. Redoing a Marching Squares implementation for the 12th prompt, "Lava Lamp", got me thinking that it doesn't need to be used for anything animated; it could be an equally potent method for generating static shapes, especially when a shape is difficult to construct with a geometric approach. This might sound a bit esoteric, but if it unfolds the way I'm envisioning, you might see some of those in the next Newsletter.

Here are two WIP outputs, which might not look like much at first glance, but I'm quite happy with what I achieved in them:

Besides being able to find the contour of regions on the canvas, I'm also able to identify the individual regions along with their vertex information, which means that I can freely control the contour and do more with it, like deforming it, rounding off the corners, etc.
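As an illustration of the "rounding off the corners" part: once a contour is available as a closed list of vertices, one common smoothing technique is Chaikin's corner-cutting algorithm, sketched below in TypeScript. This is a swapped-in example rather than necessarily what the WIP outputs use, and it assumes the contour is stored as `[x, y]` pairs in drawing order.

```typescript
type Point = [number, number];

// Chaikin's corner-cutting for a closed polygon: every edge P -> Q is replaced
// by two points at 1/4 and 3/4 along it. Each pass rounds the corners further.
function chaikin(polygon: Point[], iterations = 1): Point[] {
  let pts = polygon;
  for (let it = 0; it < iterations; it++) {
    const next: Point[] = [];
    for (let i = 0; i < pts.length; i++) {
      const [px, py] = pts[i];
      const [qx, qy] = pts[(i + 1) % pts.length]; // wrap around: the contour is closed
      next.push([0.75 * px + 0.25 * qx, 0.75 * py + 0.25 * qy]);
      next.push([0.25 * px + 0.75 * qx, 0.25 * py + 0.75 * qy]);
    }
    pts = next;
  }
  return pts;
}

// Usage: round off the corners of a square with two passes.
const square: Point[] = [[0, 0], [100, 0], [100, 100], [0, 100]];
const rounded = chaikin(square, 2);
console.log(rounded.length); // 16 (the vertex count doubles with each pass)
```

Each pass doubles the vertex count while cutting every corner a little further, so a few iterations tend to give a visibly rounded outline without the point count getting out of hand.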

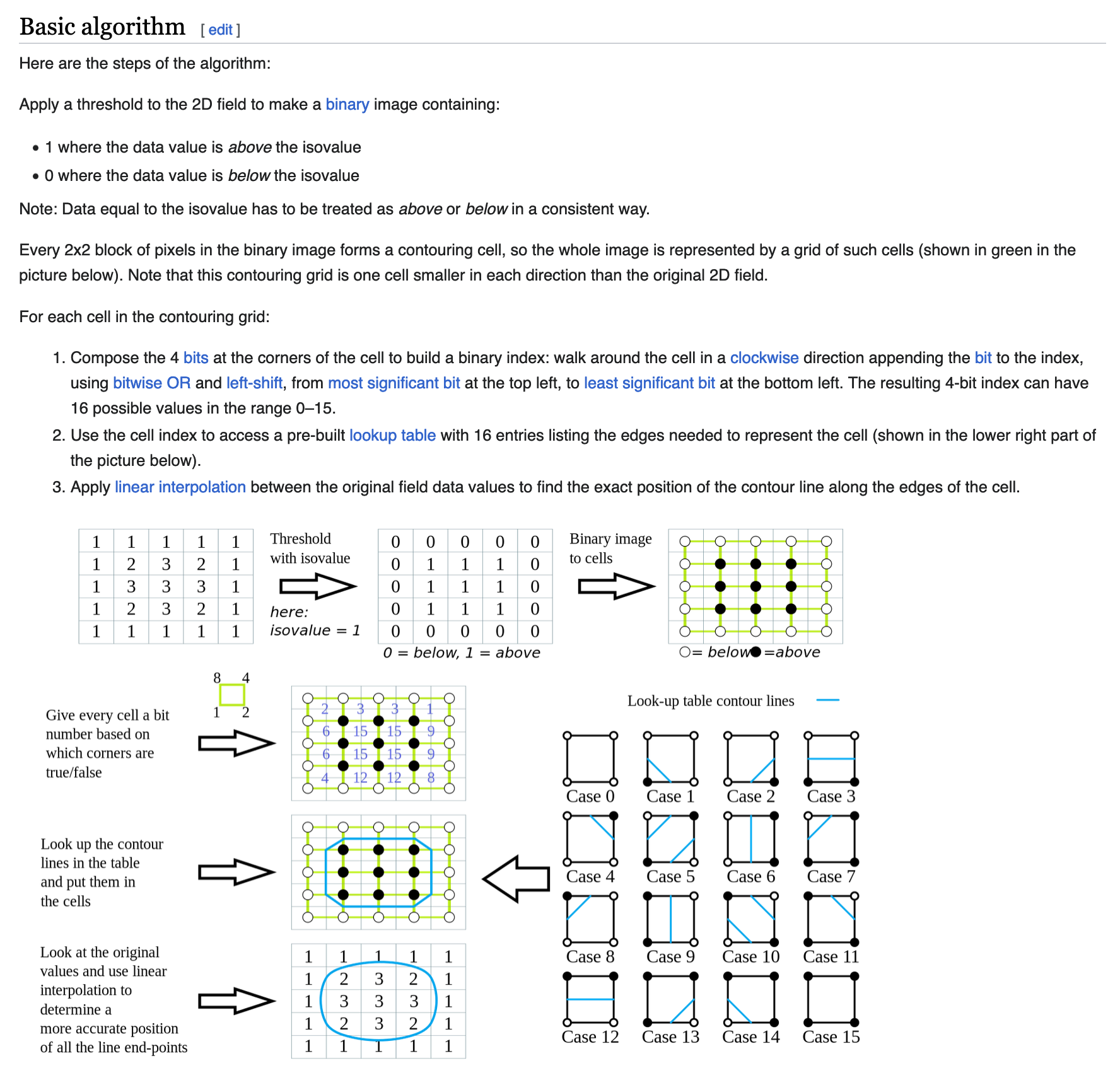

If you're not familiar with Marching Squares: it's a neat algorithm that draws the contour of regions in a 2D grid depending on whether the values at the grid points exceed a certain threshold (its 3D counterpart is Marching Cubes). In the examples above, this value is determined by the proximity of a grid vertex to the surrounding circles. Each cell in the grid, depending on which of its four vertices are active, draws a certain type of line, outlining the active region. It's smart and feels a little like magic because the concept is so simple. Wikipedia gives a good overview of the algo:
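Here's a compact TypeScript sketch of the idea, assuming a metaball-style field in which each circle contributes `r² / d²`, so a grid vertex counts as active when the summed field reaches a threshold of 1. Each cell builds a 4-bit case index from its corners, looks up which edges the contour crosses, and places the crossing points by linear interpolation. The field function, the bit order, and the choice to treat the two ambiguous saddle cases as separate corners are my own assumptions, not necessarily how the WIP outputs above were made.

```typescript
type Circle = { x: number; y: number; r: number };
type Vec = [number, number];
type Segment = [Vec, Vec];

// Metaball-style field (an assumption; any scalar function of position works):
// each circle adds r^2 / d^2, so a lone circle's inside is exactly field >= 1.
function field(x: number, y: number, circles: Circle[]): number {
  let sum = 0;
  for (const c of circles) {
    const d2 = Math.max((x - c.x) ** 2 + (y - c.y) ** 2, 1e-9); // avoid division by zero
    sum += (c.r * c.r) / d2;
  }
  return sum;
}

// Edges: 0 = top, 1 = right, 2 = bottom, 3 = left.
// Case bits: top-left = 8, top-right = 4, bottom-right = 2, bottom-left = 1.
// Saddle cases 5 and 10 are resolved by keeping the two active corners separate.
const SEGMENTS: number[][][] = [
  [], [[3, 2]], [[2, 1]], [[3, 1]],
  [[0, 1]], [[0, 1], [3, 2]], [[0, 2]], [[3, 0]],
  [[3, 0]], [[0, 2]], [[3, 0], [2, 1]], [[0, 1]],
  [[3, 1]], [[2, 1]], [[3, 2]], [],
];

function marchingSquares(circles: Circle[], width: number, height: number, cell: number, threshold = 1): Segment[] {
  const cols = Math.floor(width / cell) + 1;
  const rows = Math.floor(height / cell) + 1;

  // Sample the field once per grid vertex.
  const values: number[][] = [];
  for (let j = 0; j < rows; j++) {
    values.push([]);
    for (let i = 0; i < cols; i++) values[j].push(field(i * cell, j * cell, circles));
  }

  // Point between corners a and b where the field crosses the threshold.
  const lerpPoint = (a: Vec, va: number, b: Vec, vb: number): Vec => {
    const t = va === vb ? 0.5 : (threshold - va) / (vb - va);
    return [a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1])];
  };

  const segments: Segment[] = [];
  for (let j = 0; j < rows - 1; j++) {
    for (let i = 0; i < cols - 1; i++) {
      const x = i * cell;
      const y = j * cell;
      const tl = values[j][i], tr = values[j][i + 1];
      const br = values[j + 1][i + 1], bl = values[j + 1][i];
      const index =
        (tl >= threshold ? 8 : 0) | (tr >= threshold ? 4 : 0) |
        (br >= threshold ? 2 : 0) | (bl >= threshold ? 1 : 0);
      const edgePoint = (edge: number): Vec => {
        switch (edge) {
          case 0: return lerpPoint([x, y], tl, [x + cell, y], tr);               // top
          case 1: return lerpPoint([x + cell, y], tr, [x + cell, y + cell], br); // right
          case 2: return lerpPoint([x, y + cell], bl, [x + cell, y + cell], br); // bottom
          default: return lerpPoint([x, y], tl, [x, y + cell], bl);              // left
        }
      };
      for (const [e1, e2] of SEGMENTS[index]) segments.push([edgePoint(e1), edgePoint(e2)]);
    }
  }
  return segments;
}

const segs = marchingSquares([{ x: 50, y: 50, r: 20 }, { x: 80, y: 55, r: 15 }], 120, 100, 5);
console.log(segs.length);
```

Drawing every returned segment with a line call traces the outline; chaining the segments into closed polygons is the extra step needed before doing things like deforming or rounding the contour.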

Naturally, it's not as easy as it sounds, and you'll run into some complications when you try to do more with it later on down the line. There are also more advanced versions of the algorithm that are more powerful, at the cost of more complex code. Over on Farcaster, Claus Wilke chimed in with a larger project he's worked on that involved isobands, which are more difficult to implement; he also points out that Wikipedia is a really good resource for this algorithm in particular:
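The extra difficulty of isobands is easy to see from the classification step alone: instead of a single threshold, each corner is compared against a lower and an upper bound, giving three states per corner and 3^4 = 81 cell configurations rather than 2^4 = 16. The TypeScript sketch below computes only that base-3 case index; the function names are hypothetical, and the 81-entry lookup table of polygon fragments, where most of the real work lies, is left out.

```typescript
// Classify a corner value against an isoband [lower, upper]:
// 0 = below the band, 1 = inside the band, 2 = above the band.
function classifyCorner(value: number, lower: number, upper: number): 0 | 1 | 2 {
  if (value < lower) return 0;
  return value <= upper ? 1 : 2;
}

// Base-3 case index in [0, 80], corners read in the order
// top-left, top-right, bottom-right, bottom-left.
function isobandIndex(tl: number, tr: number, br: number, bl: number, lower: number, upper: number): number {
  return [tl, tr, br, bl].reduce((index, v) => index * 3 + classifyCorner(v, lower, upper), 0);
}

console.log(isobandIndex(0.2, 0.6, 1.4, 0.7, 0.5, 1.0)); // 16 (corner states 0, 1, 2, 1)
```

With isolines, each of the 16 cases maps to at most two line segments; with isobands, each of the 81 cases maps to one or more filled polygons, which is why implementations lean so heavily on carefully built lookup tables.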

Music for Coding

This week I've got a blast from the past for you all, and it's... drum-roll - Weezer!

It's a bit of an odd choice, since it might not be the best music to code along to, but after discovering that the band's singer and guitarist, Rivers Cuomo, is currently active over on GitHub and spends a significant amount of time programming, it was a no-brainer to include:

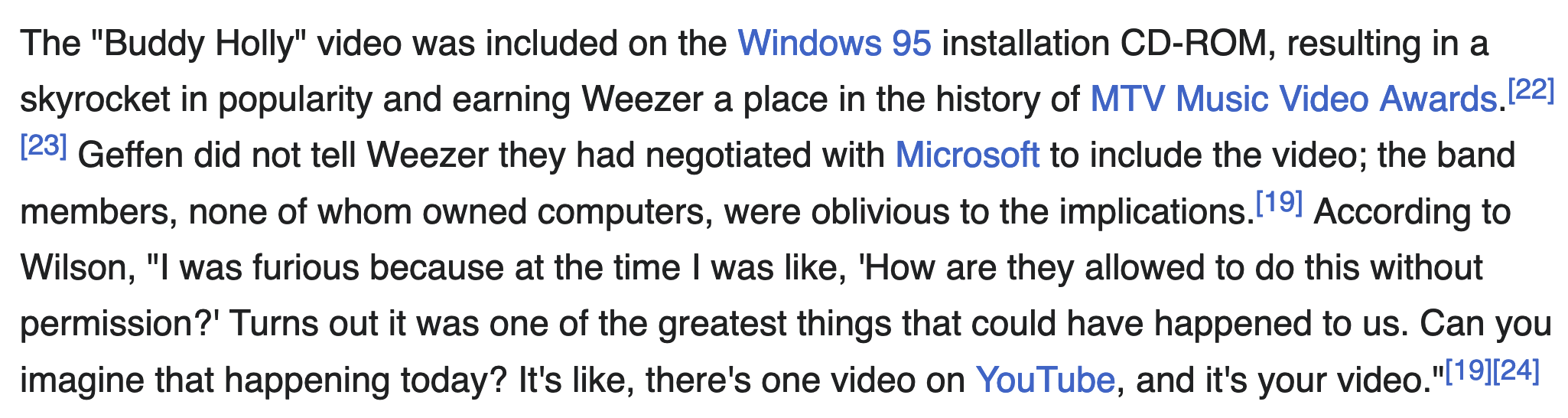

It seems that he is mainly working on a Discord bot. Another interesting piece of trivia that I discovered while going down this rabbit hole is that the music video for Buddy Holly was included on the Windows 95 installation CD:

The interesting part is that the band had no idea about this until afterwards; after being pissed at first, they came around to realize that it probably played a part in the song becoming as popular as it is today:

And that's it from me this week! As I type out these last words, I bid my farewells - hopefully this caught you up a little on the events in the world of generative art, tech and AI from the past week.

If you enjoyed it, consider sharing it with your friends, followers and family - more eyeballs/interactions/engagement helps tremendously - it lets the algorithm know that this is good content. If you've read this far, thanks a million!

If you're still hungry for more Generative art things, you can check out the most recent issue of the Newsletter here:

Previous issues of the newsletter can be found here:

Cheers, happy sketching, and again, hope that you have a fantastic week! See you in the next one - Gorilla Sun 🌸