Welcome back everyone 👋 and a heartfelt thank you to all new subscribers that joined in the past week!

This is the 51st issue of the Gorilla Newsletter - a weekly online publication that sums up everything noteworthy from the past week in generative art, creative coding, tech and AI - as well as a sprinkle of my own endeavors.

That said, hope you're all having a great start to the week! Let's get straight into it! 👇

All the Generative Things

Tuning Parameter Spaces with Machine Learning

When creating long-form generative art, one of the more difficult parts of the process is the fine-tuning step. Balancing a varied output space while also steering clear of "duds" can often be a laborious endeavor, and frequently boils down to spending a significant amount of time on sculpting and refining various parameter ranges.

The difficulty of the task stems from the inherent complexity and high dimensionality of parameter spaces with many variables. In a generative artwork the different parameters that determine the final output usually have a non-linear relationship: changes to one variable are not isolated and can have an unexpected effect on the outcome of other parameters down the line. A small tweak in one area might have drastic consequences for the final output, which makes it hard to predict the outcome of adjustments.

Bjørn Staal, a repeat offender on this Newsletter, recently addressed exactly this issue over on TwiX; in a tweet of his he asks whether tuning these parameter spaces can be done with machine learning methods and if there have been attempts to do so. The replies give rise to a bountiful conversation around the topic, other artists pitching in with their own approaches towards the challenging task:

One project that comes up in the conversation and that I was not familiar with previously is Ben Snell's Cattleya - a generative artwork that makes use of statistical shape modeling and PCA (Principal Component Analysis) to create a generalizable template for the shape of different types of Orchids.

To give a brief summary, in 2021, Snell discovered a new passion of his when he began growing different kinds of Orchids in a corner of his NYC apartment. Learning more about this plant species, and becoming engrossed with how they grew inspired Snell to return to the canvas and rekindled his creative flame - ultimately leading to the creation of his generative artwork Cattleya that released on Art Blocks in May 2022. In his write-up "Ill with Orchid Fever" he recounts this story and explains the process behind the generative system.

How does PCA come into play here? And what actually is PCA? In a nutshell, PCA is a statistical technique used to reduce the number of variables (dimensions) in a dataset while retaining as much of the original information as possible. Snell's approach to modeling his generative flowers relies on a common template that has the ability to represent any of the emergent shapes. He explains:

There were two primary forms to model: the shape of flowers and the shape of foliage. I approached each form independently using a technique known as Statistical Shape Modeling. This approach is exactly what it sounds like: it uses statistics to model shapes, in order to make generalizations about them and generate new shapes from the same distribution.

In simple terms, adding PCA on top of this template allows Snell to derive a number of "control dials" that let him interpolate between different Orchid configurations. It's a fascinating and creative approach to tuning the output space of the artwork: in this setting, tuning the parameter space is made more tangible by reducing the number of parameters to tune. I highly recommend reading the article as it provides a more complete picture than I can provide here.
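To make the "control dials" idea a bit more concrete, here's a minimal sketch in plain Python. It is not Snell's implementation: the `shapes` data (four made-up measurements per flower), the `pca` and `generate` helpers, and the use of power iteration are all assumptions I picked for illustration. The idea is simply that a new shape is the average shape plus each dial value times one principal component.

```python
import math
import random

def pca(rows, k, iterations=200):
    """Return (mean, components, variances) for the k strongest directions."""
    n, d = len(rows), len(rows[0])
    mean = [sum(col) / n for col in zip(*rows)]
    centered = [[x - m for x, m in zip(row, mean)] for row in rows]
    # Sample covariance matrix (d x d) of the centered data.
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    rng = random.Random(0)  # fixed seed so the sketch is repeatable
    components, variances = [], []
    for _ in range(k):
        # Power iteration: repeatedly applying cov pulls v toward the
        # direction with the largest remaining variance.
        v = [rng.uniform(-1.0, 1.0) for _ in range(d)]
        for _ in range(iterations):
            w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break
            v = [x / norm for x in w]
        cv = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        variance = sum(v[i] * cv[i] for i in range(d))
        components.append(v)
        variances.append(variance)
        # Deflation: remove the direction we just found before searching again.
        cov = [[cov[i][j] - variance * v[i] * v[j] for j in range(d)]
               for i in range(d)]
    return mean, components, variances

def generate(mean, components, dials):
    """New shape = mean shape + sum of (dial value * component)."""
    return [m + sum(dial * comp[i] for dial, comp in zip(dials, components))
            for i, m in enumerate(mean)]

# Hypothetical training shapes: 4 measurements per flower (made-up numbers).
shapes = [
    [3.25, 2.75, 3.25, 2.75],
    [3.25, 3.75, 3.25, 3.75],
    [4.00, 4.00, 4.00, 4.00],
    [4.25, 4.75, 4.25, 4.75],
    [5.25, 4.75, 5.25, 4.75],
]

mean, components, variances = pca(shapes, k=2)
# Two dials instead of four raw parameters.
new_shape = generate(mean, components, dials=[1.5, -0.3])
print(new_shape)
```

In practice you'd likely reach for a numerical library rather than hand-rolled power iteration, but the takeaway is the same: four raw measurements collapse into two dials that can be swept or randomized.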

Bjørn's tweet received other interesting replies, more than I have capacity to cover; but another resource that I'd like to share is a paper titled "Understanding Aesthetic Evaluation using Deep Learning" which is accompanied by a presentation over on YouTube:

The overarching question of the paper is: Can we use machine learning to model individual human aesthetic preferences? While the approach is interesting, I don't see it as particularly useful for the average generative artist. A better title for the paper would likely have been "Using ML to rank and classify images according to aesthetics". Since the authors already had an annotated dataset for the occasion, it was easy to train a model on top; it doesn't seem very generalizable to other aesthetics or projects, however.

The Hacker News comment section for the article raises some interesting points as well. The top comment mentions an article by Jürgen Schmidhuber in which he presents an interesting perspective on the enjoyment and understanding of aesthetics, arguing that it has a lot to do with our ability to compress patterns and scenes:

I think that much of our understanding of the world around us can be abstracted as a mental process of compression. But lest this section become too philosophical, I'll cut it short here. What are your thoughts on this, and how do you personally go about fine-tuning your own generative artworks?

While I'd argue that the approach is individual to every project, the ideas discussed here aren't necessarily just pragmatic approaches to more efficiently refine the output-space of a generative system, but could very well also be leveraged for creative purposes - to discover novel outputs that you wouldn't have necessarily been able to find fine tuning by hand. Make sure to check out the other replies in the discussion.

Daizaburo Harada's Artificial Lifeforms

Our favorite Japanese creative coder, Shunsuke Takawo, recently shared a resource that recounts some of the influential Japanese generative artists from the 70s leading up to the 21st century:

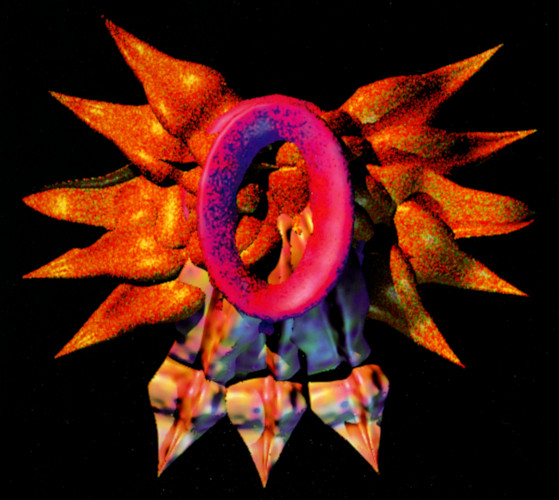

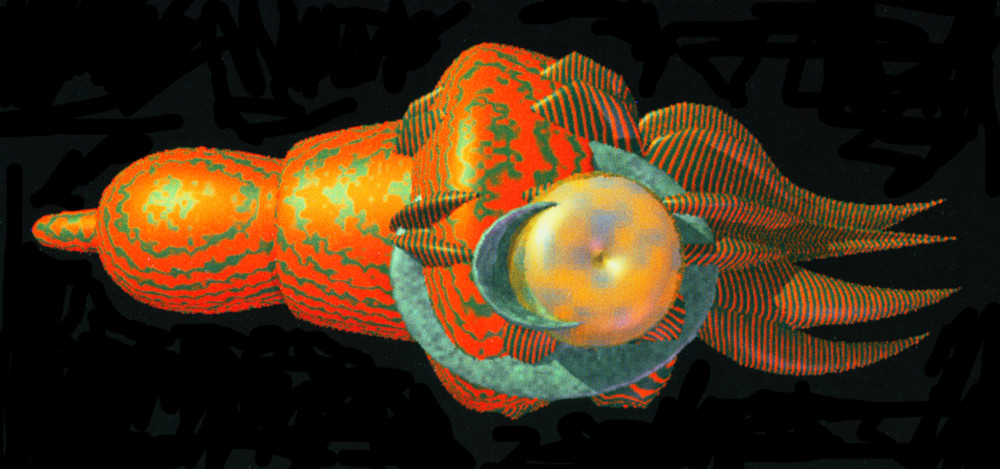

While Hiroshi Kawano's artistry is widely recognized and needs no introduction, I'd like to highlight Daizaburo Harada's work, one of the other artists that is mentioned. Harada is most notably known for his "AL: Artificial Life" series that he released in 1993 as a CG book (at a time when this was still a popular thing to do). The CG book depicted strange otherworldly creatures that Harada labeled as insects; I like to assume because of the emergent organic features that they presented:

Not only are the aesthetics captivating (impressive for their time), but the story behind the artworks is also very interesting. Apparently the creation process for these digital creatures involves sound as an input according to a few resources: "They were created using a novel procedural generation technique using audio waveforms as a seed" according to this page on Moby Games. I would have loved to learn more about the process, but I couldn't really find more info.

The only resource that gives a comprehensive historical recount of Harada's work is an article by Misty De Méo via the CD-ROM Journal - I have to add here, that it is beautifully written:

It honestly makes me a little sad that so little information can be found about Harada's work, and makes me wonder how many other artists and works have already been lost to the passage of time.

Processing 2024 Fellowship Grants

Last week the Processing Foundation kicked off the submission period for their 2024 Fellowship program - the application deadline for submitting your fellowship proposal is the 2nd of May, 11:59 PM EST. If you're not familiar with the fellowship program, it's essentially designed to support a number of projects aimed at benefiting the community in a number of priority areas:

Our fellowship is designed to provide substantial support to individuals and groups within our community. This includes a $10,000 stipend, dedicated mentorship, skill-building workshops, public programs, and community engagement opportunities. Participation for the fellowship is entirely online and we invite applicants from around the world to apply.

This year's theme is 'Sustaining Community: Expansion & Access', with the following 4 priority areas: Archival Practices: Code & New Media, Open-Source Governance, Disability Justice in Creative Tech, Access & AI. If you have ideas that might align with one of these priority areas, and want to learn more about the program, you can do so here:

In addition to that, a new partner program/development grant will be announced later that is specifically aimed at software contributions towards the p5.js library.

Pen and Plottery

Buffer Overflow by Marcel Schwittlick

The plotter wizard Marcel Schwittlick has recently been experimenting with buffer overflows, purposefully causing them in a vintage plotter of his, the HP 7475A, to create interesting and unpredictable artworks. Schwittlick explains how this is done in a post over on his website, and additionally showcases some of the artworks that came to be from this approach:

Essentially, the buffer overflows are caused by skipping an important signal in the communication between the plotter and the interface that is sending the movement commands to it, namely the handshake. This handshake's purpose is generally to prevent these overflows from happening, for instance, when the plotter's memory has no room left to store and read the next instruction. Essentially a waiting mechanism, as far as I understand it.
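Here's a toy simulation of that waiting mechanism, as a rough sketch rather than a model of the actual HP 7475A. The `PlotterBuffer` class, the buffer capacity, the send rate and the command strings are all made up for illustration, and commands that arrive at a full buffer are simply counted as lost, which is a simplification of what happens on real hardware.

```python
from collections import deque

class PlotterBuffer:
    """Toy model of a plotter's fixed-size input buffer (sizes are made up)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.queue = deque()
        self.lost = 0  # commands that arrived while the buffer was full

    def has_room(self):
        return len(self.queue) < self.capacity

    def receive(self, command):
        if self.has_room():
            self.queue.append(command)
        else:
            # Simplification: we only count the overflow instead of
            # corrupting memory like a real device might.
            self.lost += 1

    def execute_one(self):
        return self.queue.popleft() if self.queue else None

def send(commands, buffer, handshake, sends_per_tick=3):
    """The sender is faster than the plotter: it tries to push up to
    sends_per_tick commands for every single command the plotter executes."""
    pending = deque(commands)
    executed = []
    while pending or buffer.queue:
        for _ in range(sends_per_tick):
            if not pending:
                break
            if handshake and not buffer.has_room():
                break  # handshake: wait until the plotter has caught up
            buffer.receive(pending.popleft())
        done = buffer.execute_one()
        if done is not None:
            executed.append(done)
    return executed

commands = [f"move {i}" for i in range(20)]  # hypothetical commands

polite = PlotterBuffer(capacity=4)
drawn_with_handshake = send(commands, polite, handshake=True)

reckless = PlotterBuffer(capacity=4)
drawn_without_handshake = send(commands, reckless, handshake=False)

print(len(drawn_with_handshake), polite.lost)
print(len(drawn_without_handshake), reckless.lost)
```

With the handshake every command gets drawn in order; without it, the sender outruns the plotter and part of the drawing never makes it through intact.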

Why is it called a buffer overflow? When sending data without a handshake, the buffer might not have enough space available; the excess data then overflows and overwrites parts of memory outside the intended buffer. This can corrupt existing instructions, including those that the plotter is trying to execute, leading to erratic behavior. Schwittlick shared a video over on TwiX, showing the plotter in action while it succumbs to a number of overflows:

Plotting Tips from Zancan

Zancan shares an insightful tip to drawing filled circles with a plotter - circles in this setting are simply spirals in disguise! He also shares a short Tsubuyaki processing snippet:

It's also worth checking out the replies that provide additional plotting tips and tricks.
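The spiral trick mentioned above can be sketched in a few lines. This is not Zancan's snippet, just my own minimal take in Python: the `spiral_fill` function and its `pen_width` and `steps_per_turn` parameters are hypothetical names, and the assumption is that spacing the turns of an Archimedean spiral by one pen width makes the strokes overlap just enough to read as a filled disc.

```python
import math

def spiral_fill(cx, cy, radius, pen_width, steps_per_turn=60):
    """Points along an Archimedean spiral from the center out to `radius`,
    with consecutive turns spaced one pen width apart."""
    turns = radius / pen_width
    total_steps = max(1, math.ceil(turns * steps_per_turn))
    points = []
    for i in range(total_steps + 1):
        t = i / total_steps              # 0 at the center, 1 at the rim
        angle = t * turns * 2 * math.pi  # keep rotating as we move outwards
        r = t * radius                   # radius grows linearly with the angle
        points.append((cx + r * math.cos(angle), cy + r * math.sin(angle)))
    return points

# A disc of radius 20 drawn with a 0.5 wide pen (units are arbitrary).
path = spiral_fill(cx=100.0, cy=100.0, radius=20.0, pen_width=0.5)
print(len(path), path[0], path[-1])
```

The resulting list of points is one continuous polyline, which is nice for plotting since the pen never has to lift while filling the shape.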

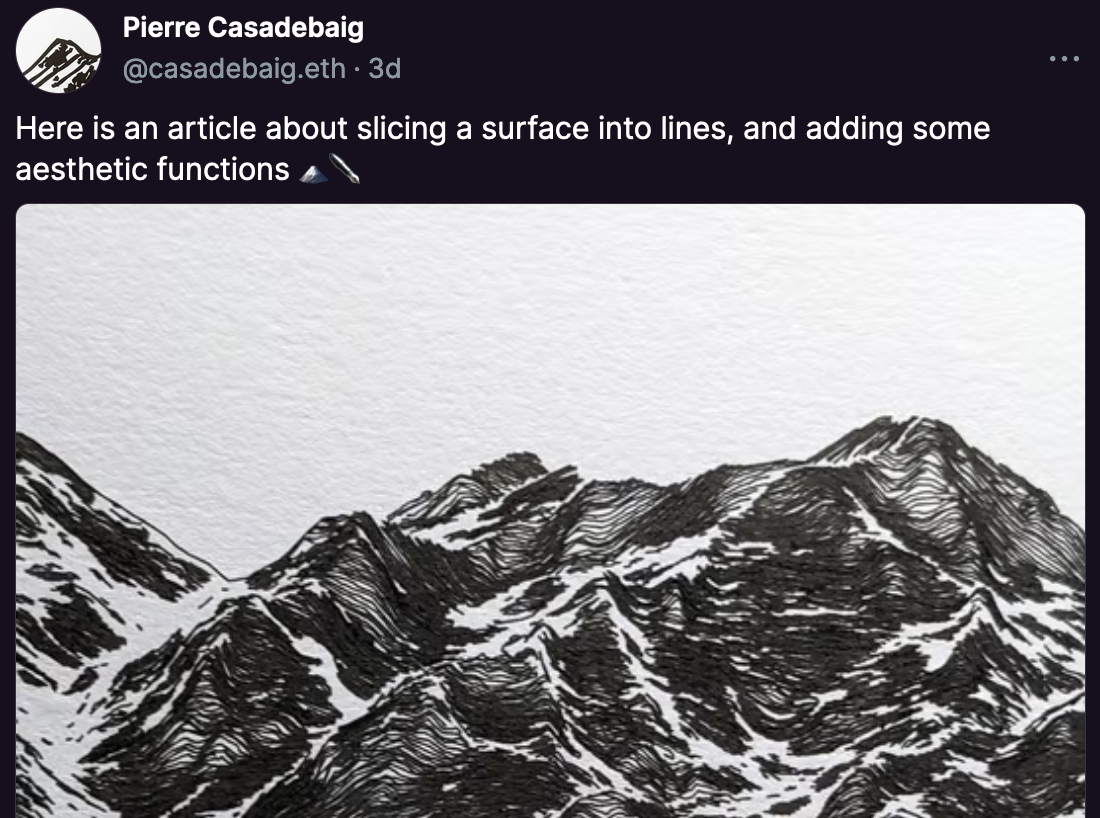

Slicing Surfaces

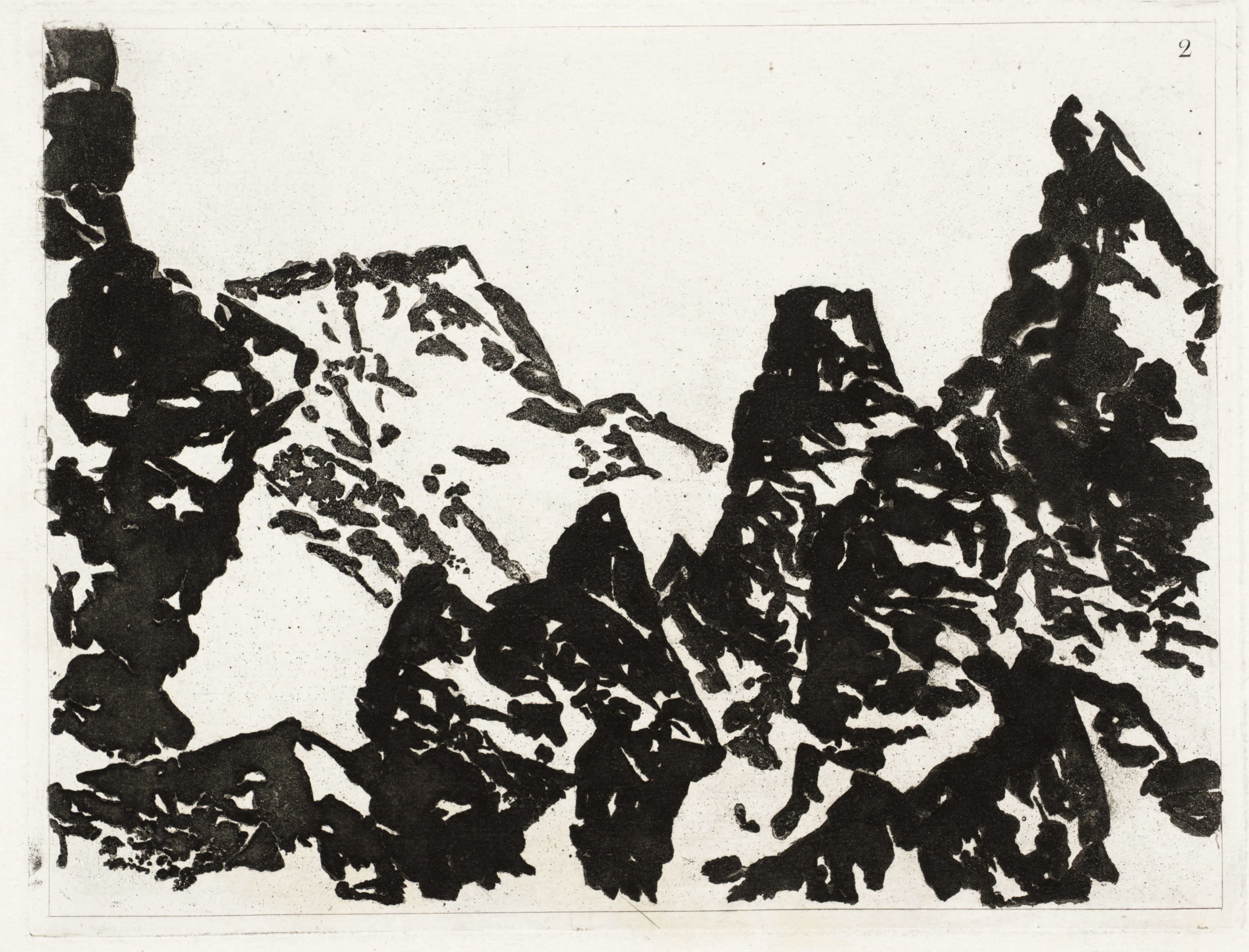

Not a new tutorial, but last week I came across an article from Pierre Casadebaig in which he explains how to convert "topographical data into monochromatic ink sketches", bringing to life beautiful plottable mountainscapes that bear a likeness to Matt DesLauriers' iconic Meridian:

I also recommend checking out a more recent article of his in which he explores an alternative aesthetic for representing mountainscapes that is reminiscent of traditional Shan Shui paintings:

Web3 News

Keeping up with Farcaster

Have you recently been active over in the Farcaster scene? Having trouble keeping up? Well, I don't blame you... here's a list of resources that I found helpful in making sense out of everything that's currently been happening on the purple platform:

- If you're still confused about how the entire DEGEN thing works, or need a specific detail cleared up, Ryan J. Shaw wrote a massive FAQ on DEGEN tipping to answer all of your questions.

- If you need to check your DEGEN allowance and how many tips you've received so far, this frame by Nikolai does the trick.

- Ombre developed a neat Farcaster frame called Farstats (with an additional accompanying website) to keep track of your activity score as well as useful information about your followers/following.

- With the increasing activity on Farcaster, users have been quickly reaching their storage limits. With how Farcaster storage functions, this has been raising concerns - here's a cast by Dan Romero in which he addresses the issue and explains the reasons behind how Farcaster stores casts.

- Todemashi compiled a big filterable list of the different NFT marketplaces (a big chunk of them generative) over on Notion.

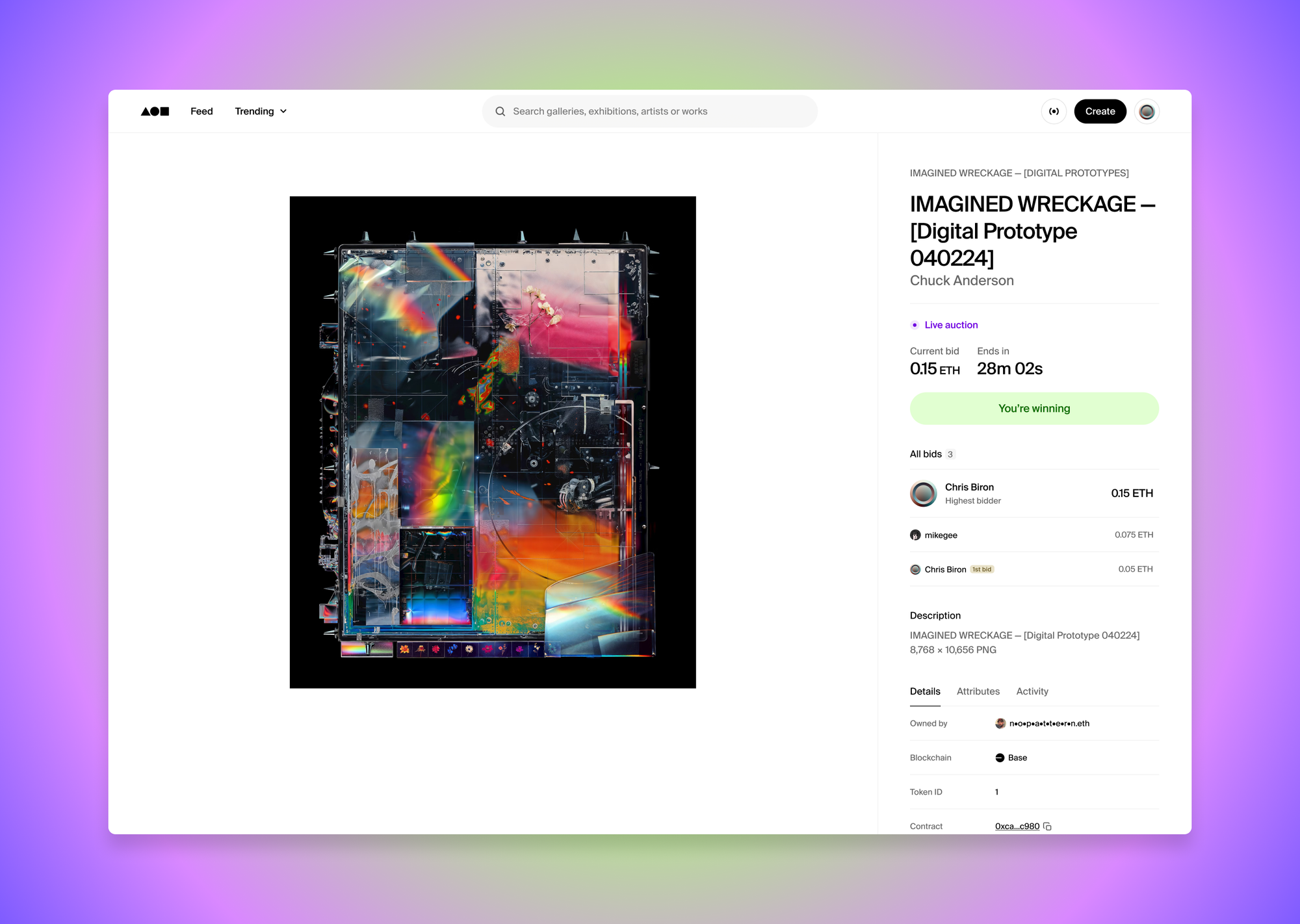

Genesis on Foundation

Over the past couple of weeks we've seen more and more platforms embrace a more efficient alternative to mainnet Ethereum, adopting the Base L2 chain with its affordable gas fees and speedy transaction times. Last week, Foundation followed suit, commemorating the Base integration with their Genesis group show, bringing back an iconic roster of artists for a series of 1/1 auctions on Base:

What's interesting about the Base integration is that it allows for an entirely new kind of seemingly 'real-time' bidding experience on Foundation:

I've certainly been enjoying the new developments that Base has been enabling. It's interesting to see this kind of renaissance at a point when the "market" has been pretty much in the gutter for the longest time now - it's exciting to see things pick up again.

Tech & Web Dev

Google introduces Jpegli

Google recently released Jpegli, a new image coding library that significantly improves how JPEG images are compressed. Jpegli encodes images faster and more efficiently, achieving up to 35% smaller file sizes at high quality settings according to the crowd survey that they ran on several image datasets. Google details how all of it works in their announcement post:


This seems quite innovative, as it's essentially an upgrade to the already existing JPEG format that doesn't break compatibility or ask anyone to support yet another image format. Jyrki Alakuijala, one of the folks that worked on Jpegli, posted a bunch of comparison images over on TwiX that you can check out for yourself - I can't tell any differences but maybe an expert reader amongst you can point them out for me:

The Jpegli images do however seem slightly crisper overall. Per usual, it was also highly entertaining to give the Hacker News comments section a read, polarizing as always.

Low Effort Image Optimization Tips

On a similar note, here's an article by Lazar Nikolov from Sentry in which he lists some easy image optimization tips that you can take advantage of for optimizing the images displayed on your website:

If you didn't know, loading in heavy images with large file sizes can be a death knell for your website, especially when it comes to SEO - search engines can rank your page lower if it takes too long to load.

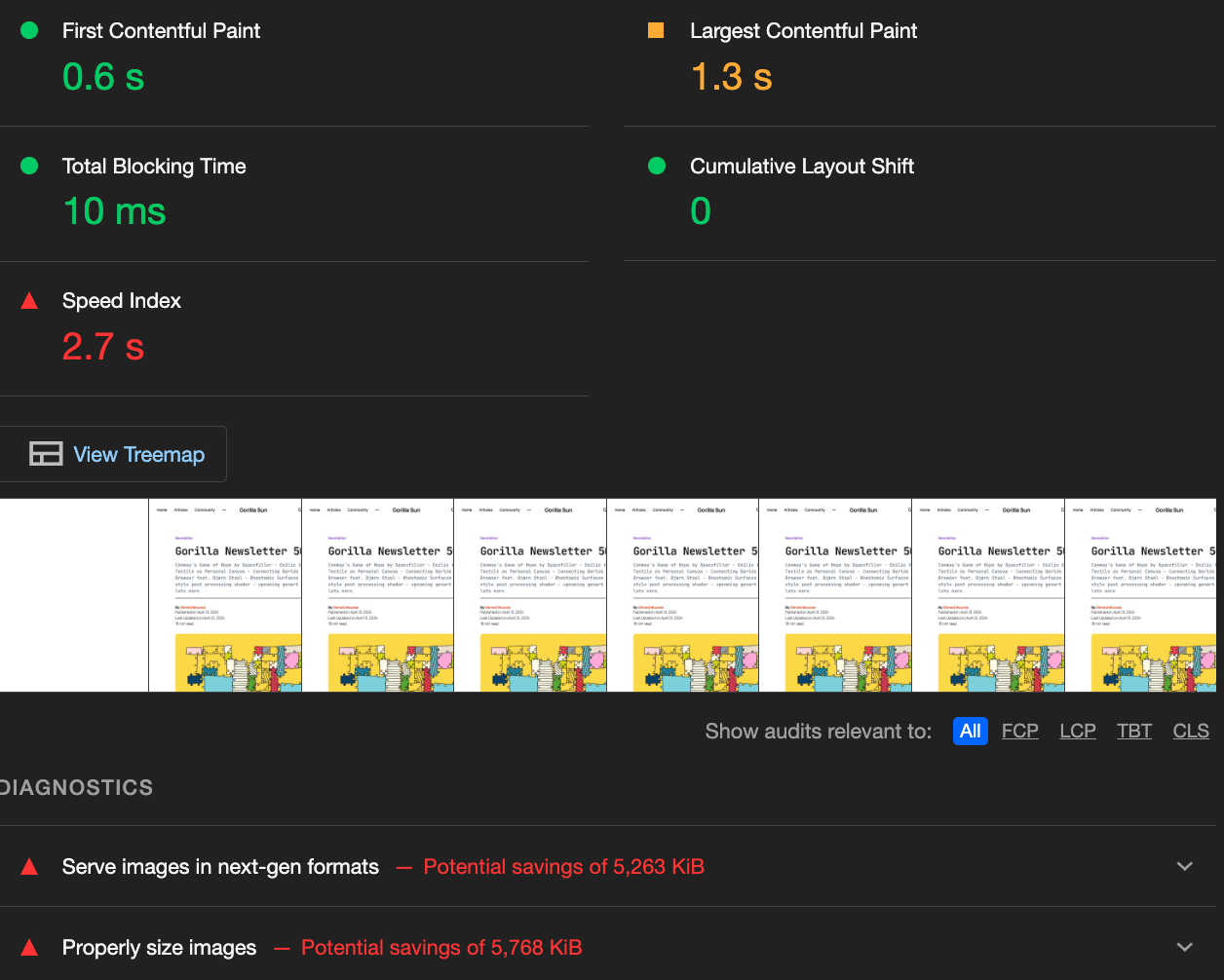

When it comes to the Newsletter, I frequently don't optimize the images that I include, since I care less about SEO than I do about deliverability - but I can demonstrate the tangible effect of this via the Brave Browser's Lighthouse tool; in the most recent issue of the Newsletter from last week, the diagnostics tell me that I could shave off a lot of loading time by simply reducing the image sizes:

One of the tricks that Lazar pitches is using the HTML <picture> tag instead of the <img> tag. It might not seem useful at first, especially since the srcset attribute is already a thing, but it provides greater control over how the browser serves images. While srcset lets browsers automatically decide which resolution of an image to serve, the <picture> tag goes a step further and allows for fallback options when the browser doesn't support a certain encoding format:

The Unreasonable Effectiveness of Inlining CSS

And while we're already talking about optimizing the load speed of our web pages, here's an article by Luciano Strika where he talks about inlining CSS to shave off a couple of milliseconds from his page load speed:

Inline styles improve loading times because they eliminate the need for a separate network request to load the CSS file. It's definitely an awesome trick for simple sites that don't have much styling, but I wouldn't unnecessarily clog up the HTML files of a larger site that way. IMO, it can be viable to inline the styles that are unique to certain pages.
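As a rough illustration of what inlining could look like as a build step, here's a small Python sketch. It is not taken from Luciano's article: the `inline_css` helper is hypothetical, the stylesheets are passed in as a plain dictionary instead of being read from disk, and the regular expression only matches link tags written exactly as `<link rel="stylesheet" href="...">`, which is an assumption that a real build tool wouldn't get away with.

```python
import re

# Assumption: link tags are written with rel first and href second.
LINK_TAG = re.compile(r'<link\s+rel="stylesheet"\s+href="([^"]+)"\s*/?>')

def inline_css(html, stylesheets):
    """Swap each stylesheet link for a <style> block holding the CSS itself.

    `stylesheets` maps an href to the CSS text it points to. Links whose
    href is not in the mapping are left untouched."""
    def replace(match):
        css = stylesheets.get(match.group(1))
        if css is None:
            return match.group(0)  # unknown stylesheet, keep the request
        return f"<style>{css}</style>"
    return LINK_TAG.sub(replace, html)

page = '<head><link rel="stylesheet" href="main.css"></head>'
result = inline_css(page, {"main.css": "body{margin:0}"})
print(result)
```

The browser then gets the styles in the very same response as the HTML, at the cost of a heavier document that can't share a cached stylesheet across pages.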

Gorilla Updates

What have I been up to this week? I would like to believe that I've been quite productive... shitposting over on Twitter:

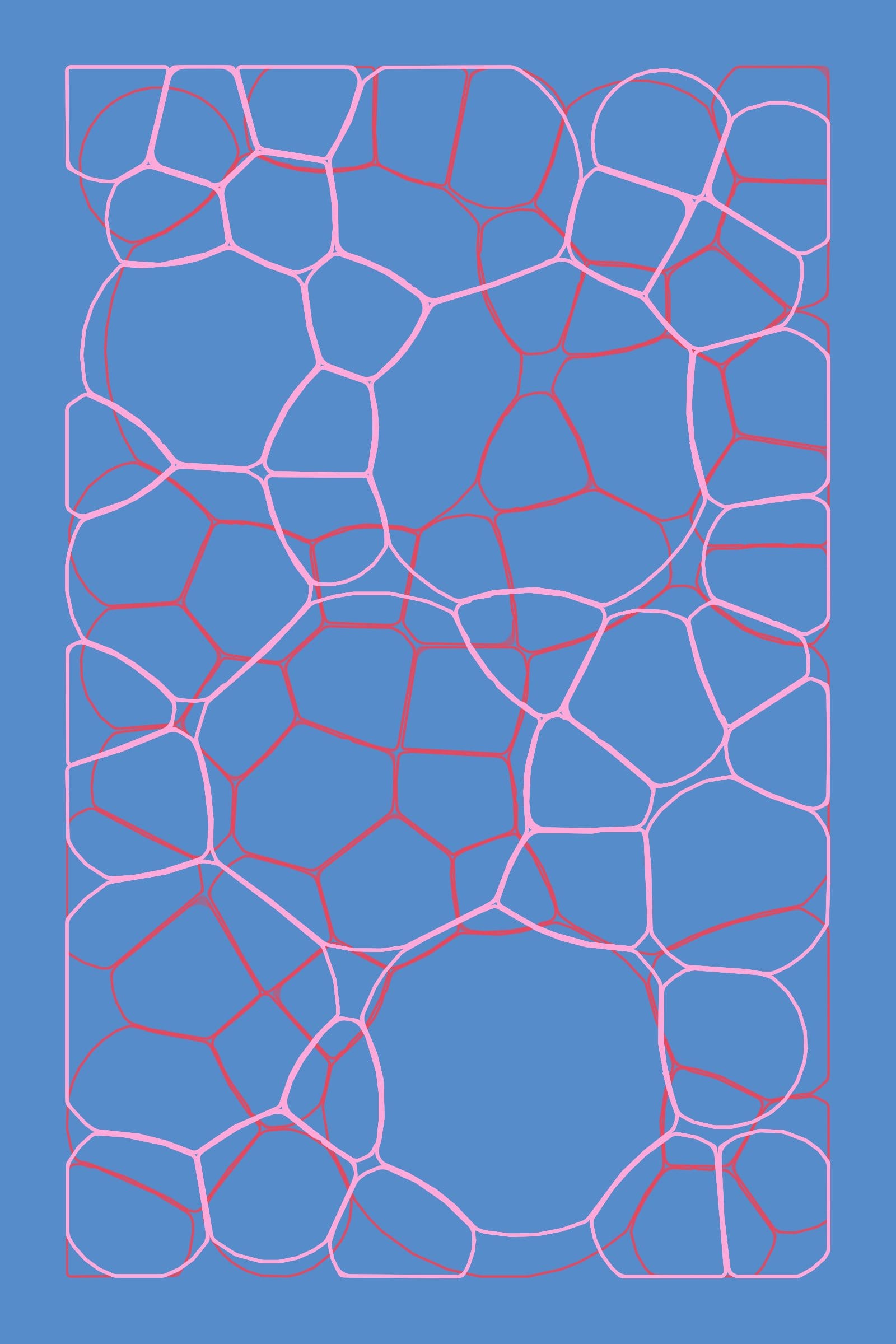

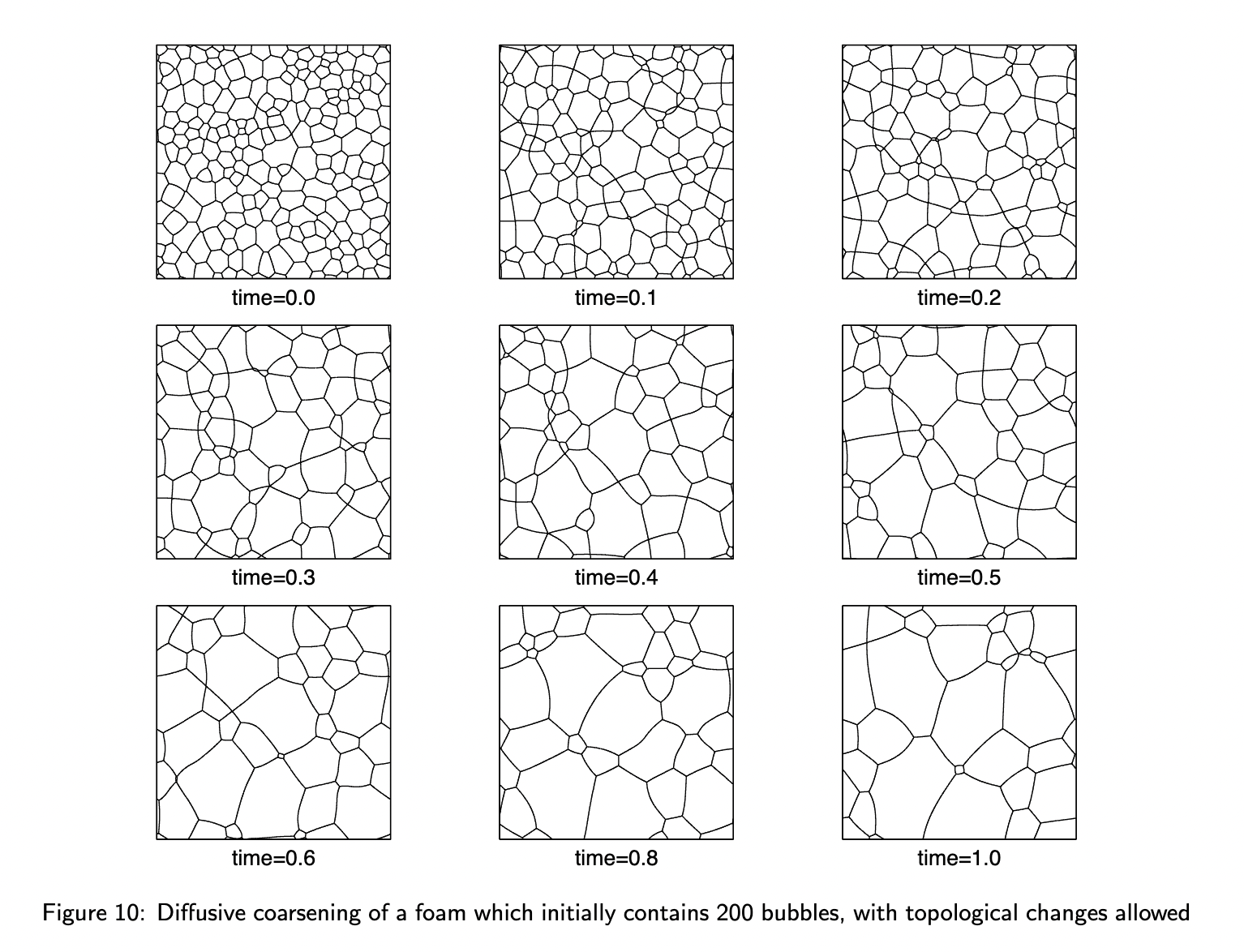

But jokes aside, I came across these figures in a paper titled "Straight-Skeleton Based Contour Interpolation" while researching how to simulate foam bubble structures - this paper doesn't actually have anything to do with the topic, but had some pretty cool figures (and might actually come in handy for some other generative tasks). I was exploring if there were methods for simulating foam/bubble lattices, here's what I've been working on that led me down this rabbit hole:

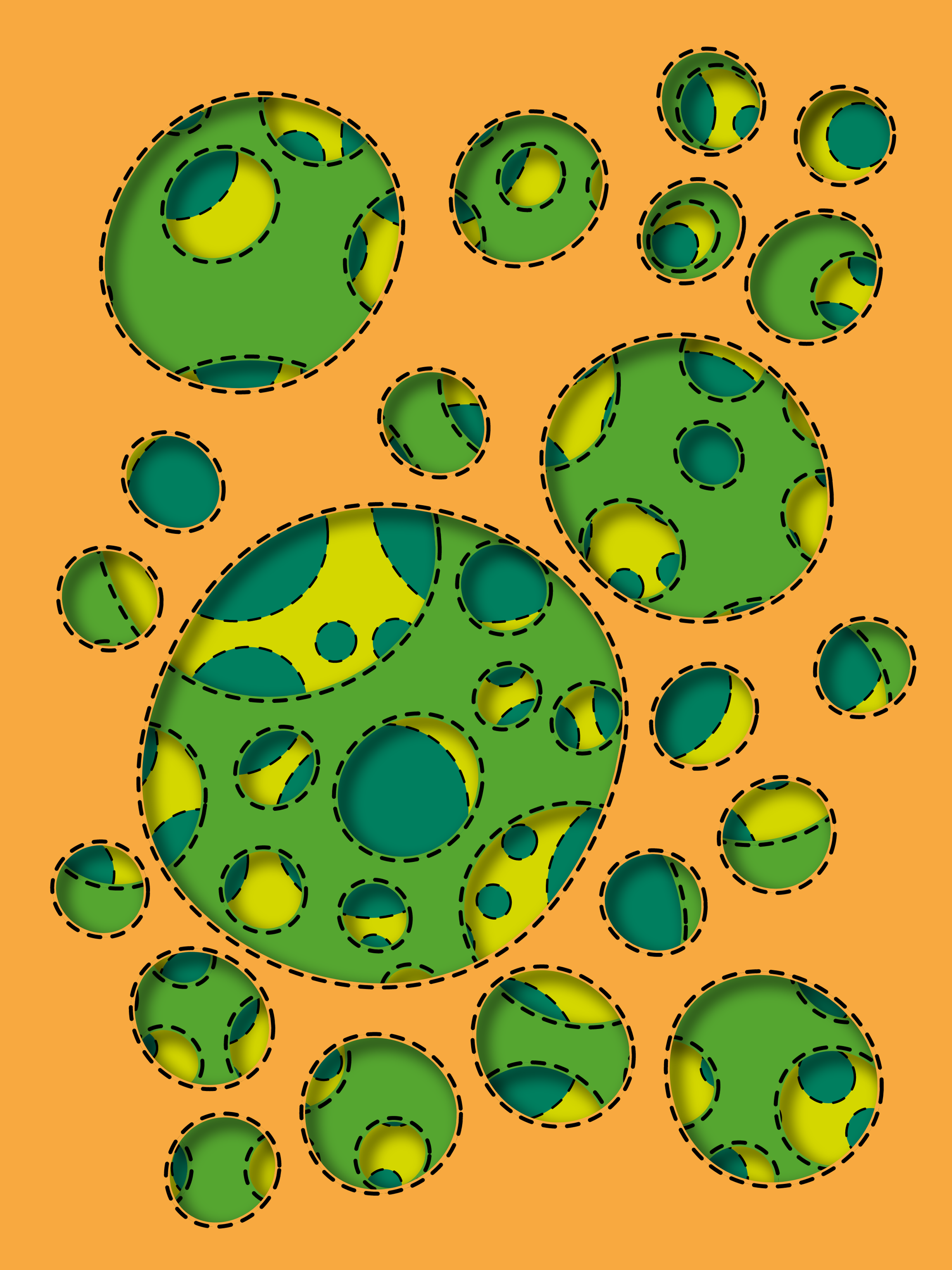

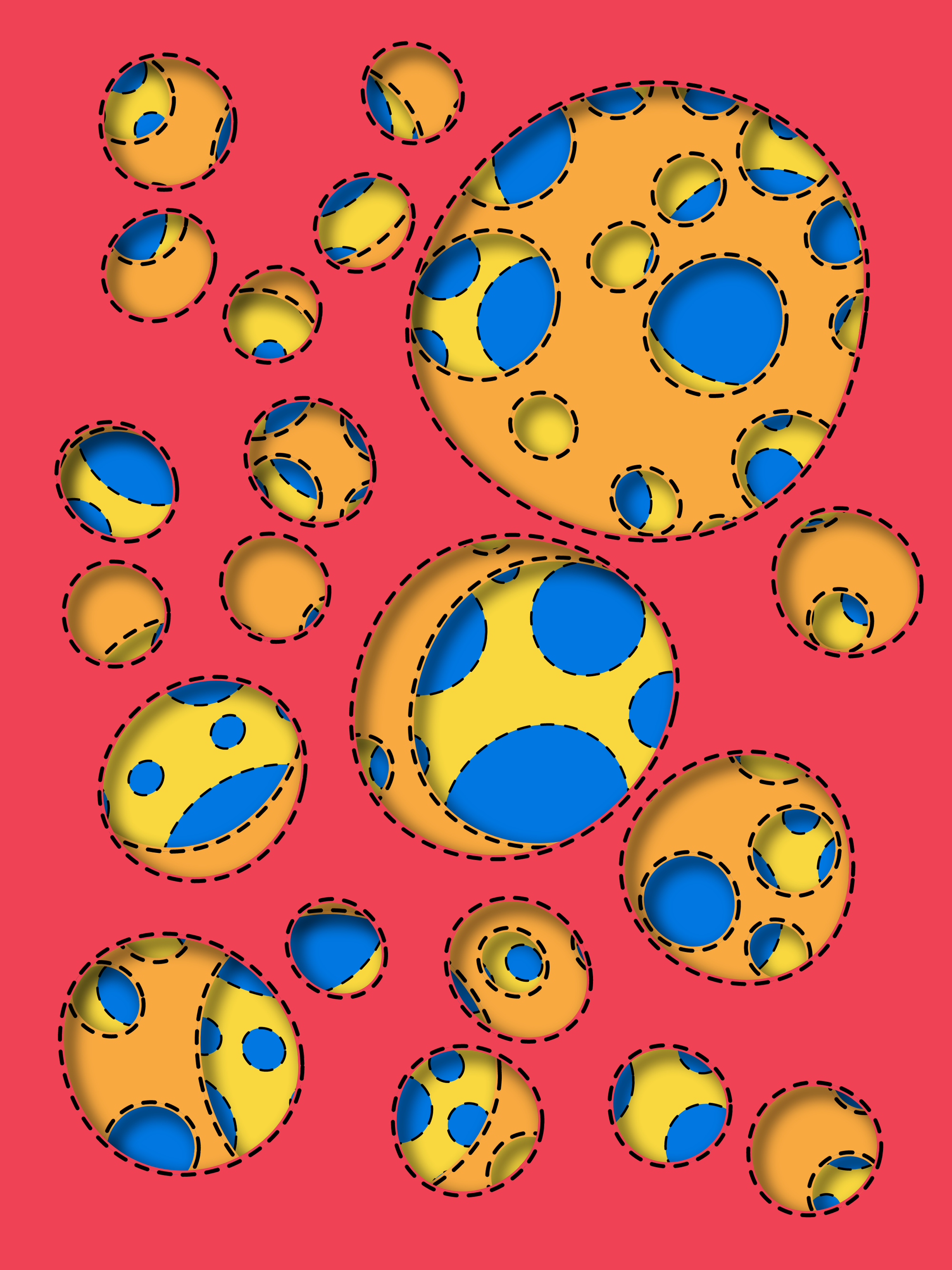

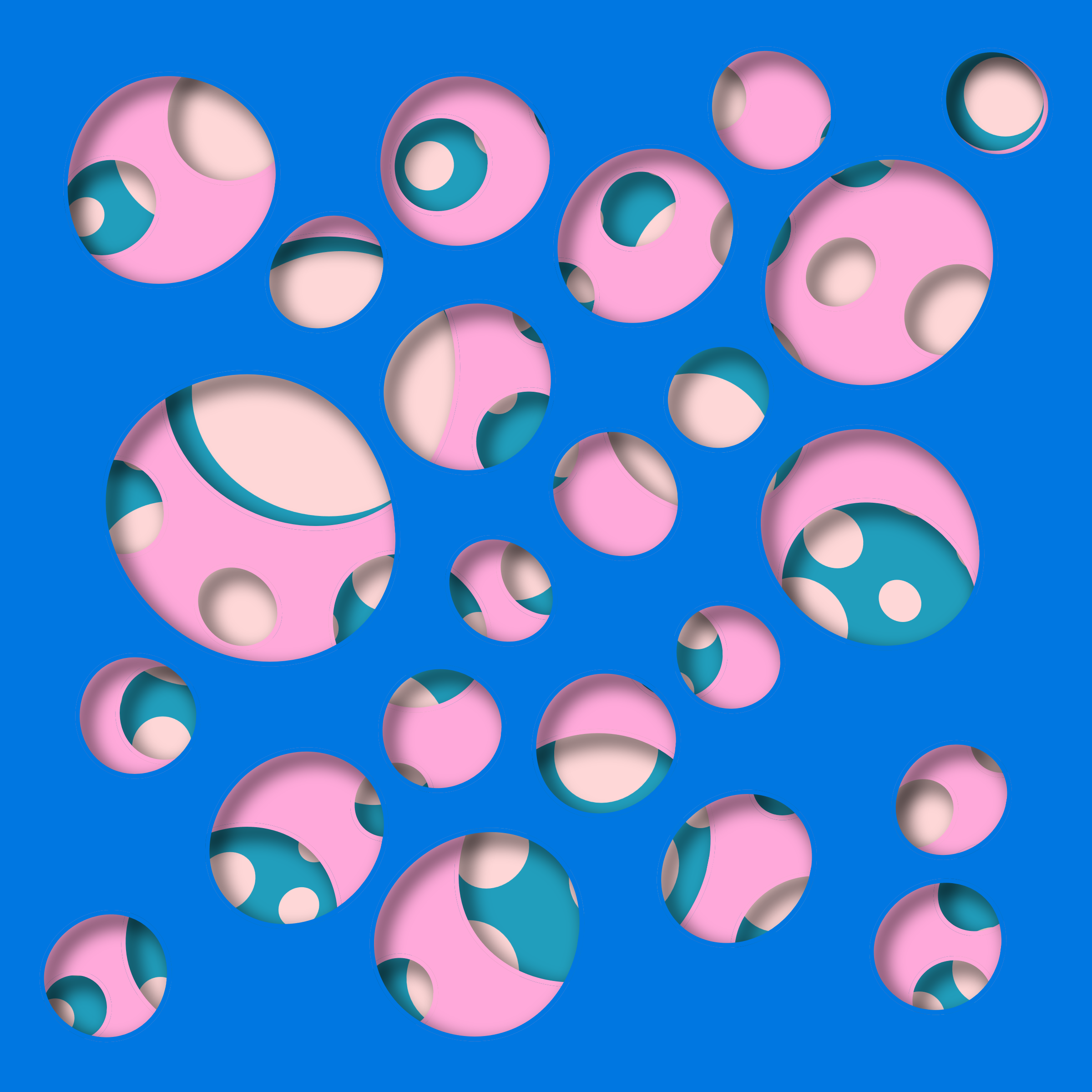

The above patterns emerge from a simple algorithm that uses circle packings as a starting point. The Voronoi-esque diagram emerges when the individual circles in the packing are expanded/inflated such that they fill out the empty space around them, only stopping when two circumferences collide. This can be done via a particle-based approach.
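To give a rough idea of the inflation step, here's a heavily simplified sketch in Python. It is not the code behind the images above: in this version the circles stay perfectly round and only their radii grow until they touch a neighbor, whereas a particle-based version would let the outlines deform to fill the gaps. The `inflate` function and its `step` and `max_radius` parameters are made up for the example.

```python
import math

def inflate(circles, step=0.5, max_radius=100.0):
    """Grow every circle in small steps until it would overlap a neighbor.

    `circles` is a list of (x, y, r) tuples; a new list is returned."""
    grown = [list(c) for c in circles]
    growing = [True] * len(grown)
    while any(growing):
        for i, (x, y, r) in enumerate(grown):
            if not growing[i]:
                continue
            new_r = r + step
            collides = any(
                math.hypot(x - ox, y - oy) < new_r + other_r
                for j, (ox, oy, other_r) in enumerate(grown)
                if j != i
            )
            if collides or new_r > max_radius:
                growing[i] = False  # this circle is done expanding
            else:
                grown[i][2] = new_r
    return [tuple(c) for c in grown]

# Three small seed circles (made-up positions).
seeds = [(0.0, 0.0, 1.0), (10.0, 0.0, 1.0), (5.0, 8.0, 1.0)]
inflated = inflate(seeds)
print(inflated)
```

Since the circles take turns growing by the same step, neighbors meet roughly halfway between their centers, which hints at where the Voronoi-like edges come from.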

The emergent patterns then reminded me of a top-down view of a sea-foam-like structure, you know, when ocean waves smash against a cliff and then retreat again. Apparently simulating this is not an easy task with a straightforward algorithmic solution, due to the complexity of the physics involved - we're actually treading into the realm of fluid simulation with this topic. Moreover, foam lattices are kinda strange: on the one hand they have very specific structural configurations, but they are also not entirely rigid; as foam bubbles come together they have the ability to relax into specific positions. Here's two photographs from Pinterest that I found really mesmerizing; when pressed against a flat surface bubbles form a Voronoi-like tessellation, in which the shared edges are not always perfectly straight:

One paper that I found on the topic that describes a procedure for this is titled "The immersed boundary method for two-dimensional foam with topological changes":

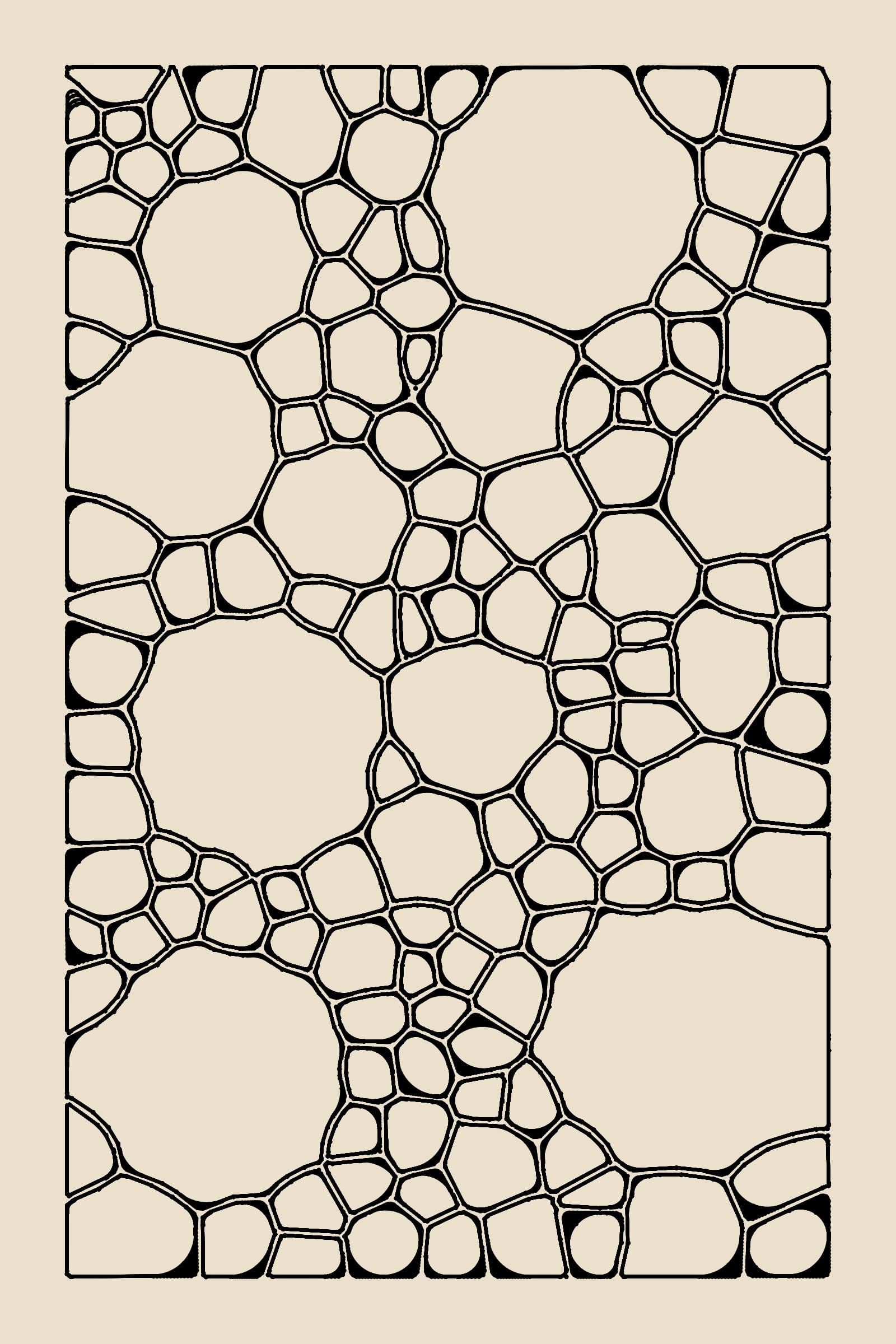

But I will need some more time to figure out if the implementation described can bring me closer to the aesthetics I'm aiming for. On another note, I also explored circle packings in a different setting, revisiting an older idea, where we peek behind the canvas:

These sketches are made possible with the rendering context's clip function as well as some graphics buffer shenanigans. The emergent patterns are actually pretty cool: while I can totally visually interpret these as cutouts that let me peer onto another layer, the circles also almost look like 3D eggs of some sort. I'm excited to continue exploring this project!

AI Corner

Visual intro to Transformers

3Blue1Brown is back with another video, this time explaining the central mechanism that powers the LLMs of today: Transformers. Not much to say here, except that it's likely going to become the best introduction to the topic:

AI and the Problem of Knowledge Collapse

With the advent of LLMs, the increased usage of these models has led to concerns around a potential "knowledge collapse" - this term essentially describes a situation where AI curates information for us, favoring common viewpoints. Being trained on massive datasets, models tend to favor providing the most common information that lies somewhere at the center of their internal representations, in addition to other sorts of biases that they might have. This could limit our exposure to diverse ideas and ultimately shrink the overall knowledge we access. A recent paper by Andrew J. Peterson tackles this issue:

It's interesting to see the opposing opinions on this issue. Some argue that AI is simply a tool, and people will still be able to seek out the information they desire; new tools haven't necessarily made us less knowledgeable in the past. The debate over on Hacker News highlights the importance of using AI responsibly and remaining curious in the face of an ever-evolving information landscape:

Music for Coding

I didn't listen to much music this week - but an artist that I just recently discovered and really enjoyed is JW Francis. I found his tracks super uplifting with their retro indie vibes - in addition to some of the super fun riffs. I'm surprised that Francis doesn't actually have a bigger following; the music's quite awesome to be frank:

And that's it from me this time, hope you enjoyed this week's curated list of generative art and tech shenanigans! Now that you've made it through the newsletter, might as well share it with some of your friends - sharing the newsletter is one of the best ways to support it! Otherwise come and say hi over on my socials!

If you've read this far, thanks a million! And if you're still hungry for more Generative art things, you can check out the most recent issue of the Newsletter here:

And if that's not enough, you can find a backlog of all previous Gorilla Newsletter issues here:

Cheers, happy sketching, and again, hope that you have a fantastic week! See you in the next one - Gorilla Sun 🌸 If you're interested in sponsoring or advertising with the Newsletter - reach out!