Welcome back everyone 👋 and a heartfelt thank you to all the new subscribers who joined in the past week!

This is the 57th issue of the Gorilla Newsletter - a weekly online publication that sums up everything noteworthy from the past week in generative art, creative coding, web3, tech and AI - with a spritz of Gorilla updates.

Thanks again to everyone who's joined the discord so far: we're at a whopping 100 members now! 🎉 Come say hi and let's have a good time nerding out about tech things - here's an invite link!

That said, I hope you're all having an awesome start to the new week! Here's your weekly roundup 👇

Another Chapter in the Race for AGI

Boy, where do we start this week? I know this isn't the usual format, but all of my social media timelines have been absolutely abuzz with the recent announcements by OpenAI and Google, both of which came out with some big updates to their AI models. That's why we're kicking off this issue with a big recap of everything that's transpired over the past week - there's quite a lot to sink our teeth into!

If you just want the quick version, you can find a TLDR at the end of this section! 🧠 ⏬

Hello ChatGPT-4o

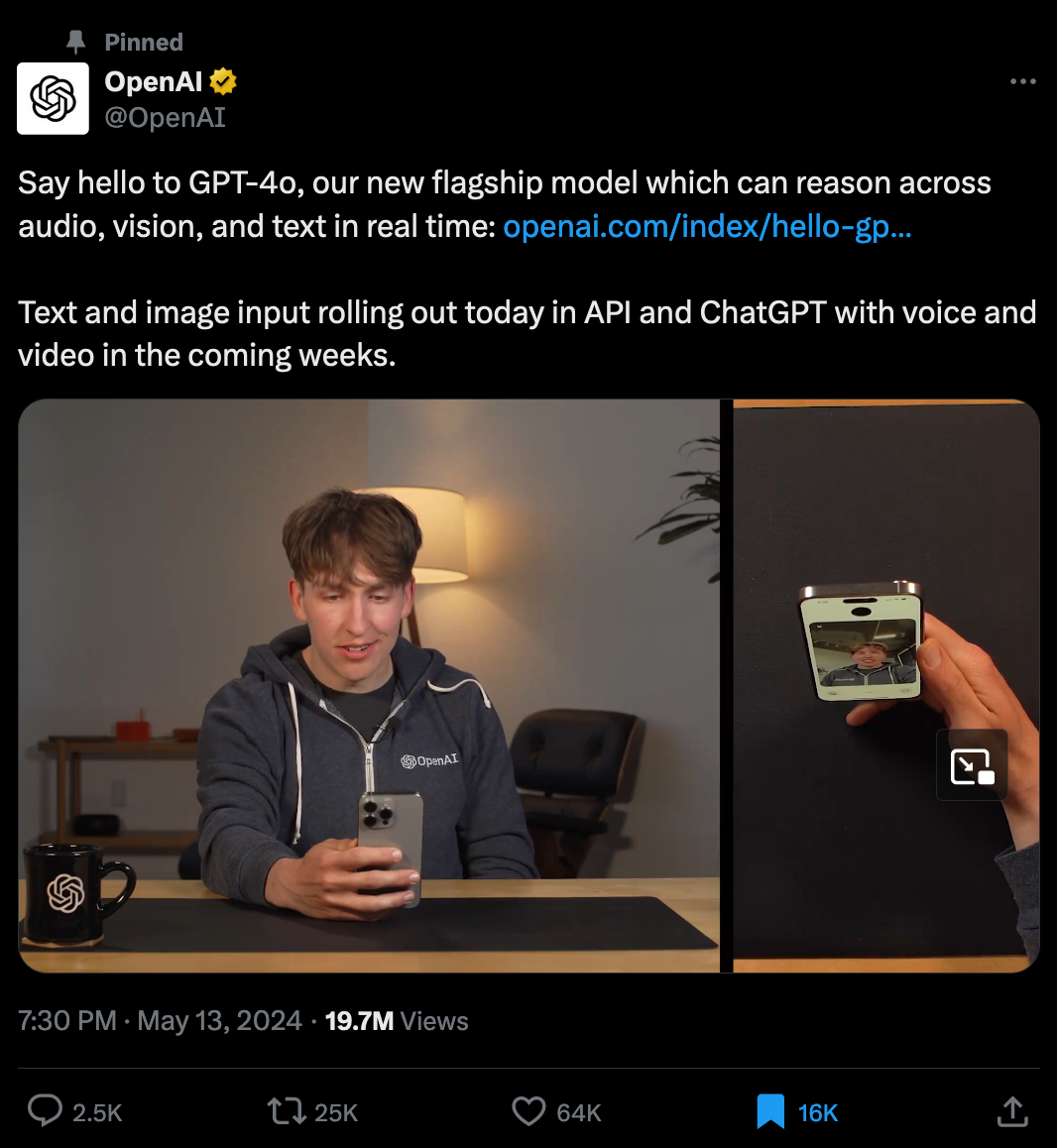

On May 13th, OpenAI made a surprise announcement revealing their new and improved flagship model, GPT-4o. The "o" stands for "omni" and is meant to highlight the multimodal capabilities of this iteration. Besides being cheaper and faster, the model is pitched first and foremost on its human-like conversational abilities.

They boast that it's now much easier to use, with noticeably reduced latency between picking up the user's spoken input and the generated voice reply, making it possible to hold a conversation with the chatbot in almost real time. What's more, they're also making 4o accessible to all users, not just those who are currently paying for the premium version. If you check out the web or mobile app, it should already be available. Paying subscribers still get a higher message limit, however.

In the keynote presentation that OpenAI released over on YouTube, currently sitting at close to 4 million views, CTO Mira Murati starts off with a run-down of 4o's new features, followed by a live demo showcasing what the app can do:

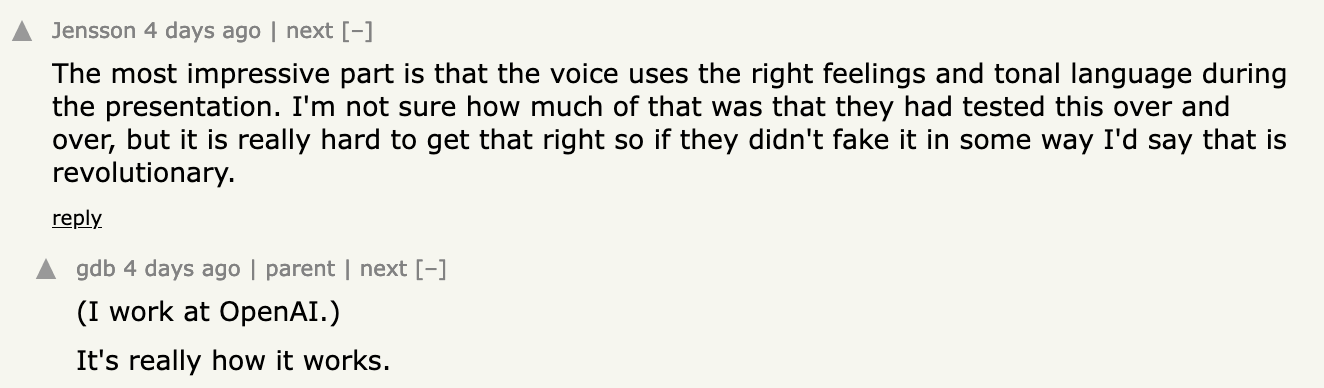

And honestly, it's quite impressive that it can pick up on voice and shoot back answers so quickly; even with video input thrown into the mix, it seems to handle everything pretty well. I gave it a shot over the weekend while helping my sister with her homework, and it worked like a charm: you just snap a pic of the exercises, ask it to solve them, and it generates the answers. Truly the stuff of the future. And it seems that OpenAI doesn't actually have to fabricate its demos, unlike some of the other recent trailers in the AI space:

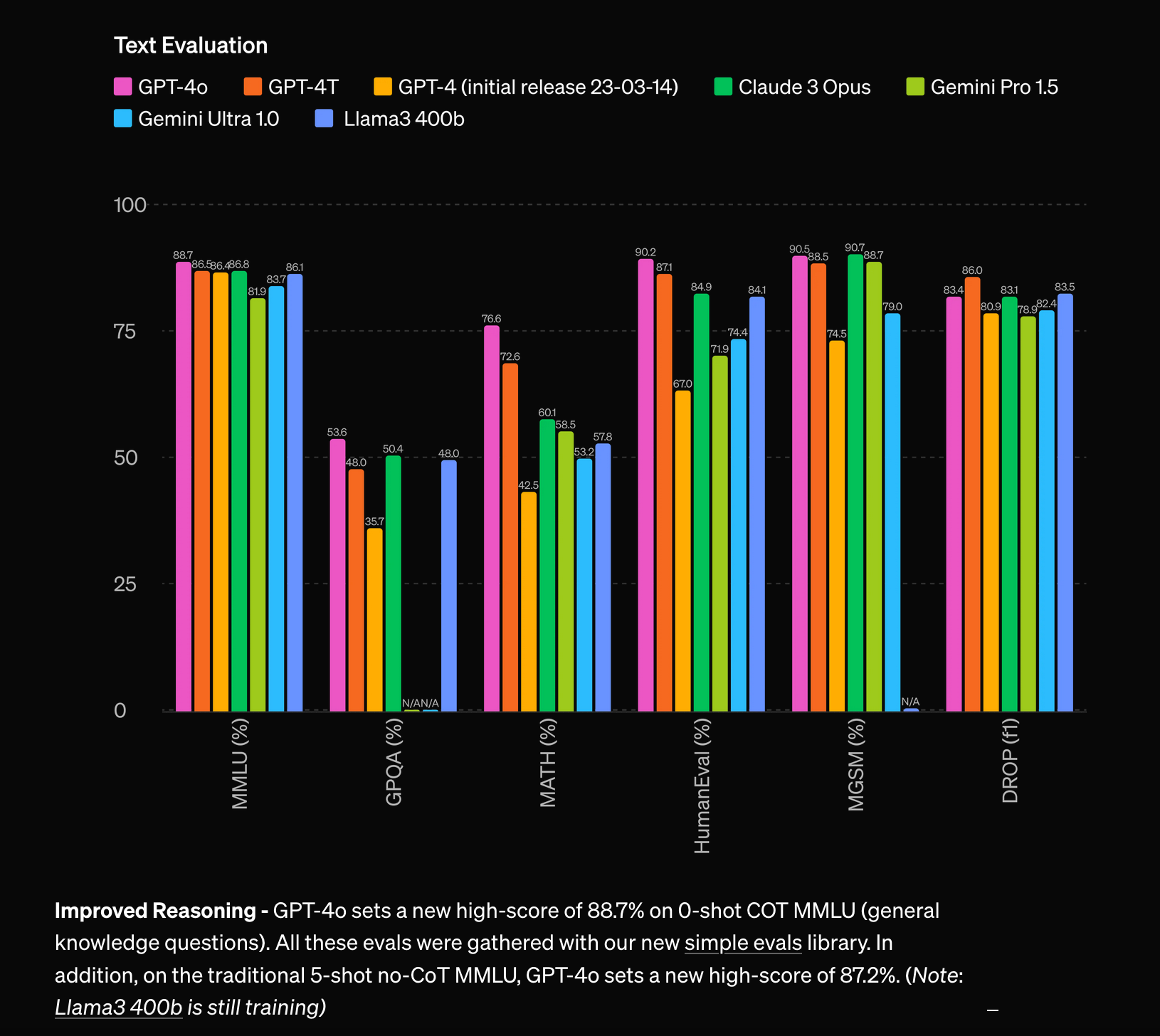

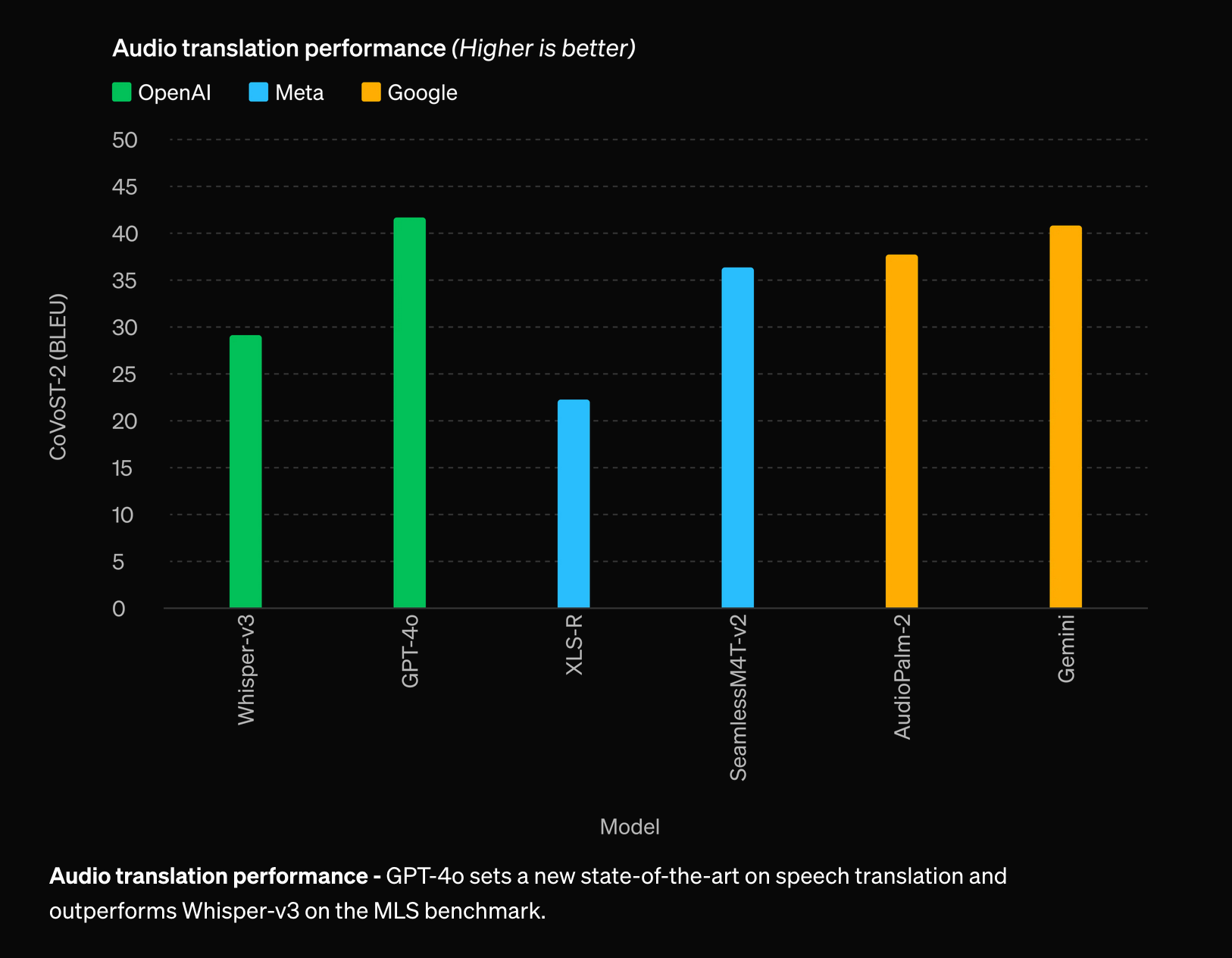

While the live demo is a bit awkward, I found the accompanying article, "Hello GPT-4o", much more informative. In it, they provide a number of benchmarks indicating that 4o beats many of the other models out there across several tasks:

The article also showcases a number of very useful applications. For instance, the model can handle over 50 different languages and detects them automatically, so you don't have to fiddle around with any settings. This means it can basically serve as a drop-in replacement for a translator - guess programmers weren't actually the ones who should have been worried about their jobs 🤷

One aspect of 4o that sparked quite a bit of controversy is its text-to-speech pipeline, which is a little too realistic and can even adjust its tone of voice and mood according to the user's requests. In another demo posted over on TwiX, the chatbot decided (or was it set?) to take on a very flirty tone of voice, which was not received well:

Link to the Demo in Question | Link to Quote Tweet by Cassie Evans

This even prompted an entire segment on The Daily Show ridiculing this particular demo:

The question here is: does the voiced version of the LLM really need the ability to imitate different tones of voice and moods? These models have no innate ability to feel any sort of emotion whatsoever; they are simply emulating these voices based on patterns in the training data. Why isn't a monotonous, robotic synthesized voice enough? Obviously, I'm fully aware that it wouldn't be as marketable. While this is being treated as a big joke right now, it's actually a serious issue.

Edit: Gorilla from the future here (relative to the Gorilla that wrote this 🦍). Just as I was putting the finishing touches on the Newsletter, there was another update from OpenAI regarding the voices included in the app - they decided to disable 4o's flirt mode for now:

On a more lighthearted note, however, it seems that this has also spawned a new type of meme where 4o serves as a virtual boyfriend/girlfriend.

Beyond that, OpenAI is also in the process of rolling out a ChatGPT desktop app. I wasn't able to download it yet, though, as it doesn't appear to be available in the German App Store at the moment.

Wave of Resignations and OpenAI Partnership Deals

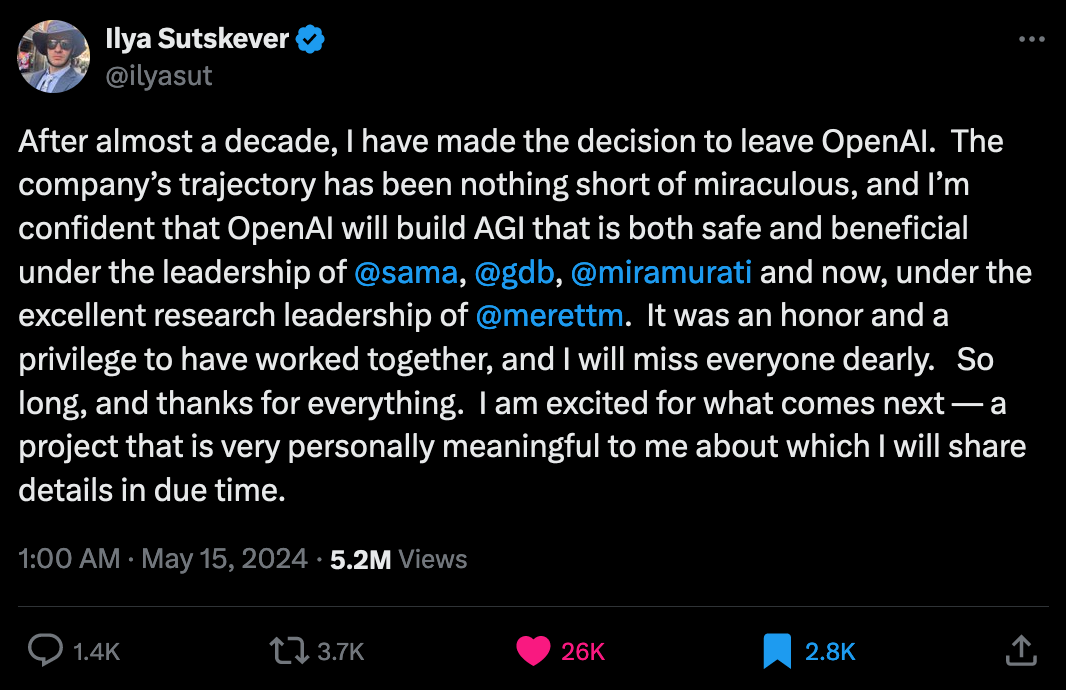

In an alarming turn of events, right on the heels of the 4o reveal, Ilya Sutskever made his own announcement, sharing that he's decided to leave OpenAI after almost a decade there - his Tweet:

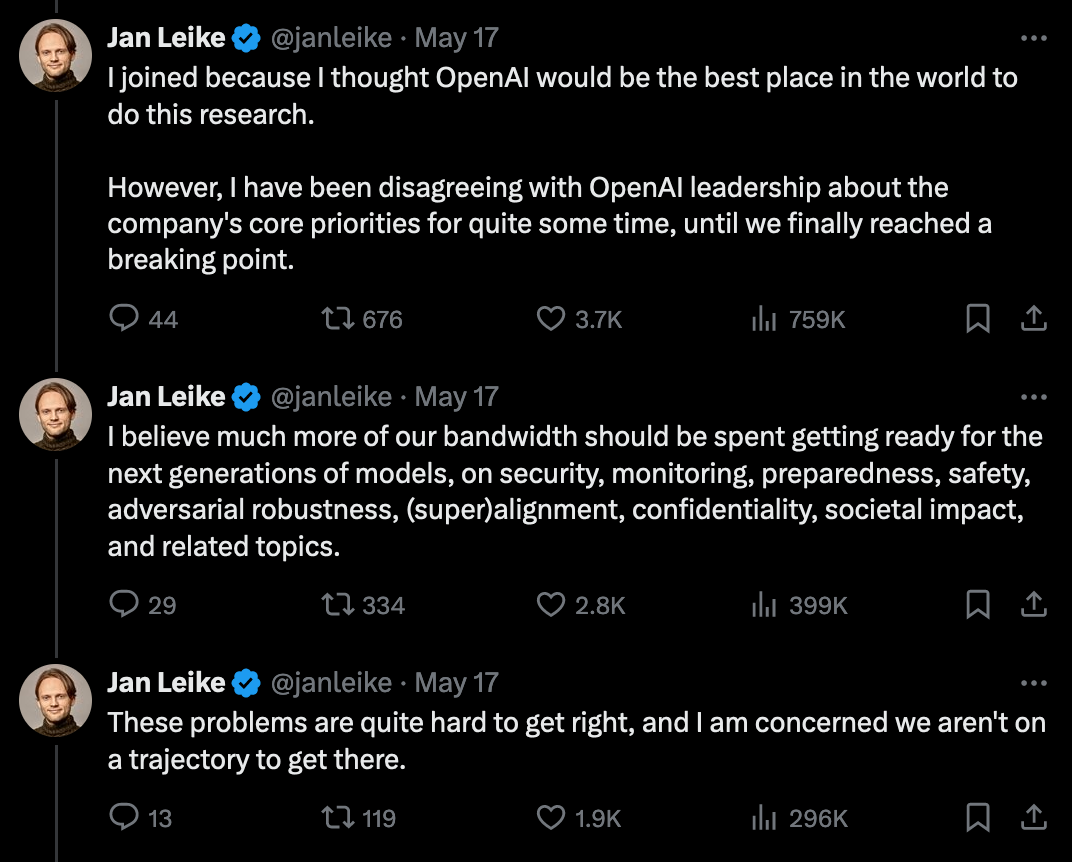

If you're not familiar with Sutskever, he is one of OpenAI's co-founders and has been its chief scientist for the longest time. In a Tweet from December he did voice concerns about the company's trajectory, but there hasn't been a recent update on that. It seems he's working on a new project of his own that we'll learn more about in due time. His resignation was followed by another from Jan Leike, now the former co-lead of the superalignment team. Leike followed up with some further information on why he came to this decision, voicing his disagreement with OpenAI's trajectory towards AGI:

It seems that OpenAI is not currently prioritizing the safety of the models it's developing, which is alarming, but not surprising. We actually did get an update on this issue from Vox, which was quick to reach out to those who resigned. You can read it here:

One aspect that plays into this issue is OpenAI's current quest to strike deals with all of the major forum-like platforms across the internet: following its deal with Stack Overflow, it recently announced a partnership with Reddit:

And we've also got Musk doing his own thing over here.

Gemini 1.5 Pro & Project Astra at GoogleIO

Now that we've covered the OpenAI side of things, let's have a look at GoogleIO, the annual developer conference where Google showcases all of its new tech products. While the conference doesn't solely revolve around AI, in this section we'll focus on the AI-related updates.

If you only care about the tech updates, there's a good and concise list of ten highlights over on the Chrome for Developers blog:

The two big AI updates from GoogleIO are Gemini 1.5 Pro and Project Astra. Gemini 1.5 Pro now boasts a massive 2-million-token context window, meaning that it can take in and retain quite a bit of information during a chat (approximately equivalent to 1.4 million words). Alongside this, they're also introducing a feature called context caching, which lets you reuse a large chunk of input tokens (say, a long document) across multiple requests instead of sending it again every time.
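As a rough sanity check on those numbers: the ~1.4 million word figure works out to about 0.7 words per token, close to the rule of thumb of roughly three quarters of an English word per token that's often quoted (the exact ratio depends on the tokenizer and the text, so treat this as an estimate):

$$
2{,}000{,}000\ \text{tokens} \times 0.7\ \frac{\text{words}}{\text{token}} \approx 1{,}400{,}000\ \text{words}
$$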

You can find a whole lot of info about this in their official article - for example, they show how they feed in the entire 402-page transcript of the Apollo 11 mission and ask questions about the massive piece of text:

Secondly, they also revealed Project Astra, a multimodal, LLM-powered assistant that looks quite similar to GPT-4o - minus the quirky voice, and with a noticeably longer delay in response time:

Besides this, Project IDX, Google's AI-powered code editor, is now also publicly accessible. I remember signing up for the waitlist a couple of months ago, but never heard back.

I'm guessing the most entertaining part of the GoogleIO event was Marc Rebillet's stellar performance, which will definitely be used as a meme template for months to come:

OpenAI x Google Beef: Race for Apple Integration

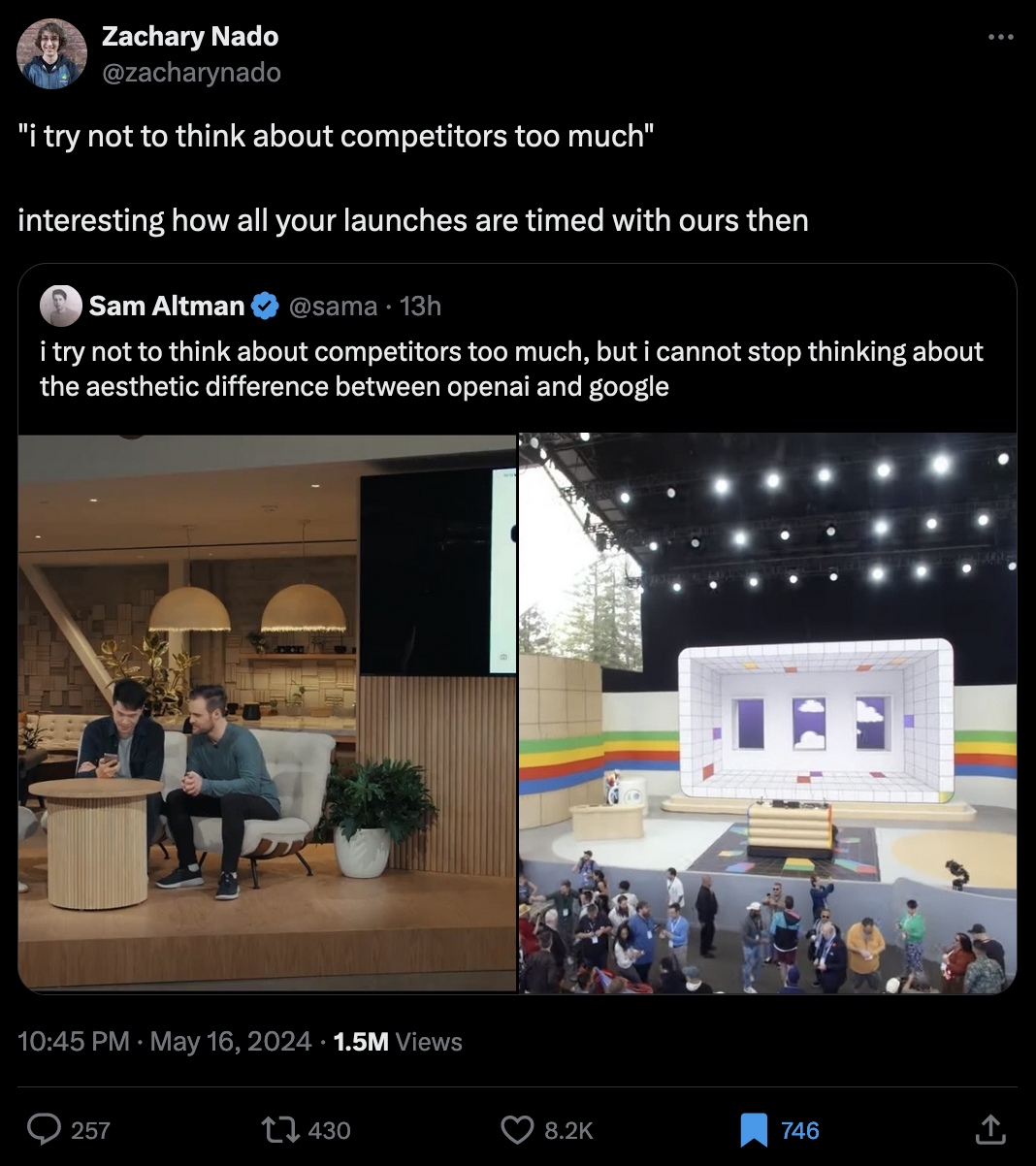

It's no coincidence that OpenAI and Google made their announcements back to back - it seems to have been a calculated strike by Altman to steal the show just ahead of GoogleIO. When Altman made a snarky remark about the "aesthetics" of the event, it prompted a reply from Nado, a research engineer over at Google Brain:

OpenAI's surprise announcement could very well be related to the ongoing negotiations with Apple, which is currently looking for a third party to power the AI features of future iPhones. While it seemed like the deal with OpenAI was close to done just a few days ago, Google's Gemini is apparently not off the table as a candidate:

This raises the question of why Apple isn't simply developing its own in-house solution. But it might just be that they don't have the data for it due to their privacy policy:

But that's a can of worms we'll have to open another day, once Apple decides which third party it'll rely on to power its AI features. And yeah, those are pretty much all of the important updates from the past week in that regard - that I could find, at least. What did I miss?

TLDR Recap of Events

- OpenAI reveals GPT-4o, an improved, cheaper, faster, more multi-modal iteration of the GPT models.

- Controversial demo of GPT-4o makes the rounds on TwiX | Daily Show segment takes a stab at the much too realistic voice of 4o

- Wave of resignations ensues: Ilya Sutskever & Jan Leike | Vox coverage of the reasons behind the resignations: concerns about safety and OpenAI's trajectory.

- OpenAI x Reddit Partnership Announcement Link

- GoogleIO 10 Highlights | Gemini 1.5 Pro | Project Astra | Project IDX

- Twitter Beef GoogleIO x OpenAI | Race for Apple Integration

- Marc Rebillet's performance at GoogleIO

All the Generative Things

Coding my Handwriting by Amy Goodchild

Amy Goodchild is back with another article, and as always, it's a banger. As a follow-up to her previous post on finding a generative approach to handwritten letterforms, she shares an improved method that upgrades her generative block script into a cursive writing generator, in which individual letters connect seamlessly:

She also put together a little editor for encoding each letter's coordinate path simply by placing individual key points with mouse clicks, which makes it much easier to encode multiple variations of each letterform. Amy further elaborates on some of the intricacies of joining the letter paths together.
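To make the idea of joining letter paths a bit more concrete, here's a minimal Python sketch. It is not Amy's implementation: the glyph data, the `write_cursive` function and its parameters are all hypothetical. Each letter is a list of key points in a unit box (the kind of points you might place by clicking in an editor), a random variant is picked per letter, and the letters are simply chained into one polyline, so the straight segment from one letter's last point to the next letter's first point acts as the connecting stroke. Her article deals with the much finer details of making those joins look natural.

```python
import random

# Hypothetical glyph data: each letter maps to one or more variants, and each
# variant is a list of (x, y) key points inside a unit box (0..1 on both axes).
Point = tuple[float, float]
GLYPHS: dict[str, list[list[Point]]] = {
    "c": [
        [(0.8, 0.6), (0.5, 0.8), (0.1, 0.5), (0.4, 0.1), (0.9, 0.2)],
        [(0.7, 0.7), (0.3, 0.8), (0.1, 0.4), (0.5, 0.0), (1.0, 0.3)],
    ],
    "u": [
        [(0.0, 0.8), (0.1, 0.2), (0.5, 0.0), (0.9, 0.8), (0.9, 0.1), (1.0, 0.2)],
    ],
    "e": [
        [(0.1, 0.4), (0.8, 0.5), (0.5, 0.8), (0.1, 0.4), (0.4, 0.0), (0.9, 0.2)],
        [(0.0, 0.3), (0.7, 0.6), (0.4, 0.9), (0.1, 0.5), (0.5, 0.0), (1.0, 0.3)],
    ],
}


def write_cursive(word: str, glyphs: dict[str, list[list[Point]]],
                  rng: random.Random, advance: float = 1.0,
                  size: float = 20.0) -> list[Point]:
    """Join the glyph paths of `word` into one continuous polyline.

    For every letter a variant is picked at random, shifted right by
    `advance` units per letter position and scaled by `size`. Because all
    points end up in a single list, the segment from one letter's last point
    to the next letter's first point becomes the connecting stroke.
    """
    stroke: list[Point] = []
    for i, ch in enumerate(word):
        variant = rng.choice(glyphs[ch])
        offset_x = i * advance
        stroke.extend(((x + offset_x) * size, y * size) for x, y in variant)
    return stroke


if __name__ == "__main__":
    path = write_cursive("cue", GLYPHS, random.Random(42))
    print(len(path), path[:3])
```

Passing a seeded `random.Random` keeps a given output reproducible, which is handy if the same word should render identically every time a sketch runs.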

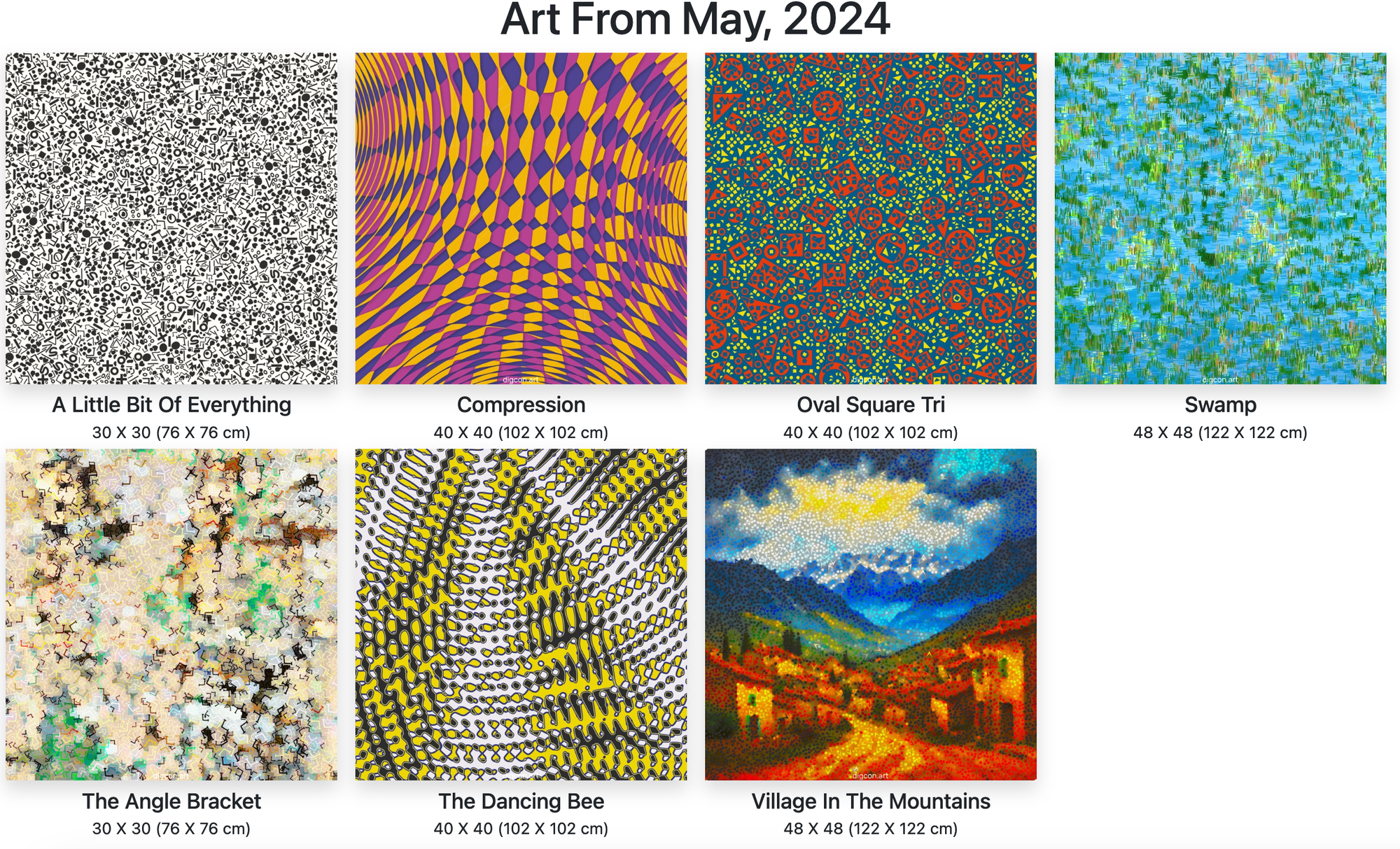

The Art of Digconart

As always, it's been a big joy getting to know you all in the discord. In this segment I'd like to shout out Andrew Wulf, with whom I've had the pleasure of exchanging a couple of messages in one of the channels. Before retiring in 2021 and picking up a generative art practice, Andrew spent over four decades working as a programmer. He recounts some of his experiences over the years and shares interesting retrospectives on his programming blog, The Codist:

What I found quite interesting, and what sets Andrew apart from the crowd, is that he uses the Swift programming language to make generative art. Swift is a language you'll most likely associate with iPhone app development rather than artistic purposes, but it seems to be working for Andrew, and he states that he's built his entire tooling in Swift (I'd be curious to learn more about how that works!). I'm currently not aware of any other generative artist using Swift. You can view many of Andrew's creations in the art archive he's built for himself, where he carefully catalogues all of his work:

Andrew also runs a second blog focused on his art practice. I particularly enjoyed the article titled "What is Generative Art", in which he summarizes the art form pretty succinctly. It also provides a pretty sobering perspective that stands in contrast to many of the narratives being spun around generative art these days. You can read it here:

Highly recommend checking out Andrew's stuff!

Colette Bangert on Growing Visually

I have to admit that I wasn't previously familiar with the work of Colette and Jeff Bangert, two pioneers who were already using machine-made randomness to make art in the 1960s. As usual, this is where Monk comes in to educate me, introducing the Bangert duo in a recent Le Random article:

Sadly, Jeff Bangert, who wrote the computer code that brought their art to life, passed away in 2019, and Colette now continues her practice by hand. Her artistic philosophy is deeply grounded in the continuous observation and interpretation of the world around her. She views art as a process of seeing and creating, where traditional methods and new technologies coexist and enhance each other.

Web3 News

News from the Purple App

Things seem to have slowed down a bit over on the Purple app - it's felt a little quieter, to me at least. This is likely due to the big haircut that DEGEN allowances have received since the start of the second airdrop's fourth season, which was a big factor in driving traffic on the platform. That doesn't mean there haven't been other developments, though; here are a few things I found interesting in recent days:

- Dylan Steck reports from the front lines and tells us about the happenings over at FarCon in his write-up here.

- Bonfire is a platform geared towards Web3 creators that lets you manage your community, create different kinds of pages, and present all of your content in different ways. I haven't had the time to check it out yet, but it looks interesting.

- AirStack is a tool that lets you build dapps on Farcaster - you can find the docs here.

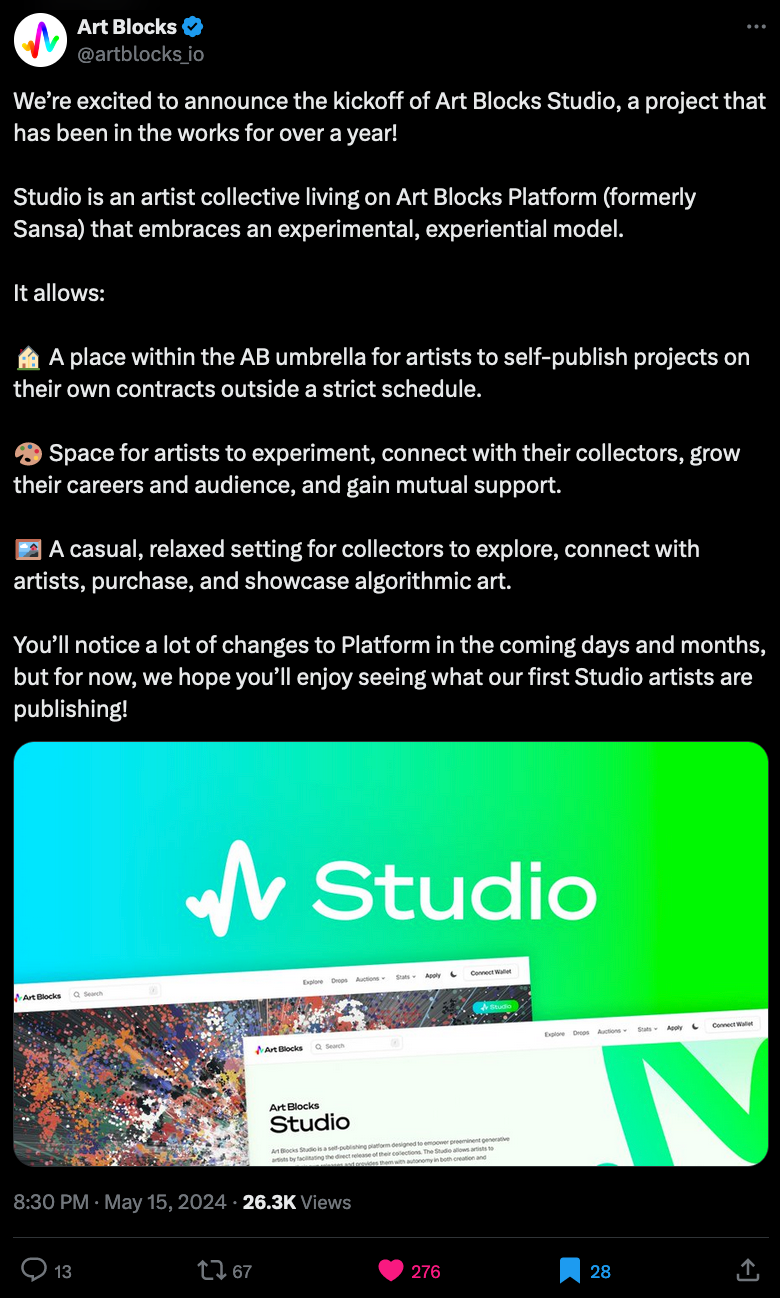

Art Blocks Studio Update

Art Blocks is back with an update on the Studio platform that they teased a couple of months ago and have been developing ever since - it seems that they're nearing something more complete. If you missed the previous announcement, it's basically a new part of the Art Blocks ecosystem that will allow artists to self-publish generative art projects (though, as far as I understand from the previous announcement, not entirely uncurated).

They'll be gradually rolling out new features in that regard over the coming days (or months) - the official announcement indicates that it'll be more than just a tool for creating and publishing generative projects on AB:

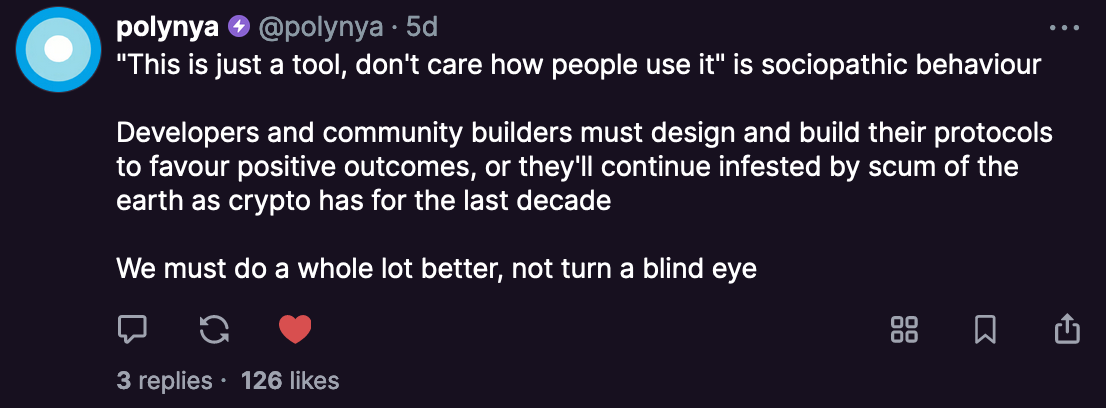

Crypto's Broken Moral Compass

Polynya is a pseudonymous crypto writer who's become quite influential in the space over the past few years with their insightful writing on crypto-related topics. As always, I'm quite late to the party: towards the end of 2023 Polynya decided to leave crypto Twitter, and in early 2024 went on an indefinite hiatus from writing. The article titled "Crypto's Broken Moral Compass" marks the end of the popular blog - you can read it here:

The article addresses the steep decline of the crypto space into what is largely just a big casino, where many of the meme coins and Ponzi schemes don't contribute anything meaningful or productive. And this is something I've experienced as well: while I try to stay in my own little gen-art bubble most of the time, stepping outside of it, especially on Farcaster right now, feels like wandering into a big untamed jungle. If you're active in any Web3-related community, what's your overall sentiment been? Are things looking up, or are we standing at the edge?

Polynya has, however, been casting sporadically over on the Purple App:

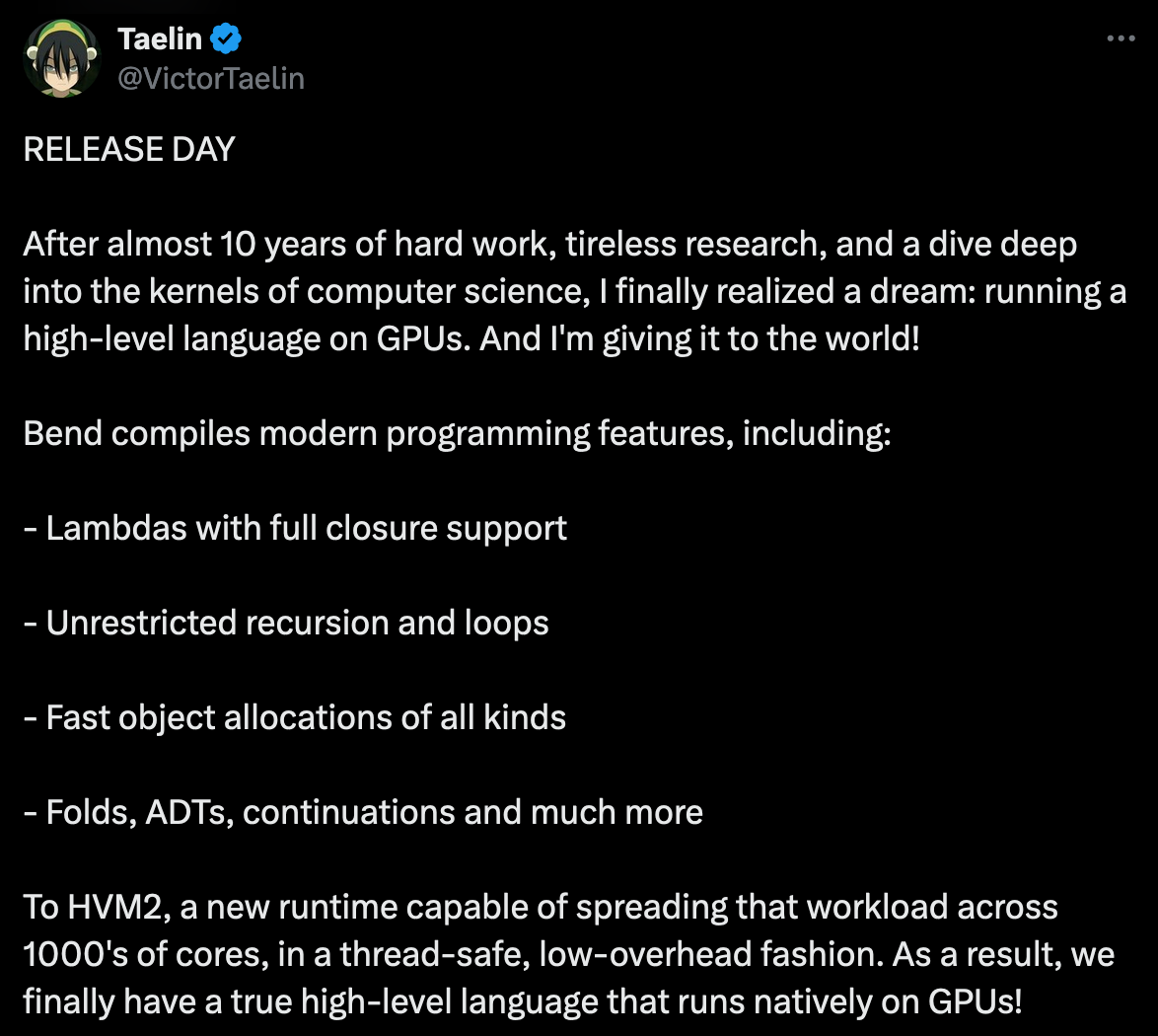

Bend Programming Language

Bend is a brand-new high-level programming language that seems quite practical: it lets you write parallel code for multi-core CPUs and GPUs without having to manually write the code that handles the parallel computations. At first glance, Bend's syntax looks a LOT like Python, and supposedly writing Bend feels like it too.

The language makes the bold promise that "any work that can run in parallel, will run in parallel":

You can try Bend for yourself by following the instructions over on the official GitHub repo.

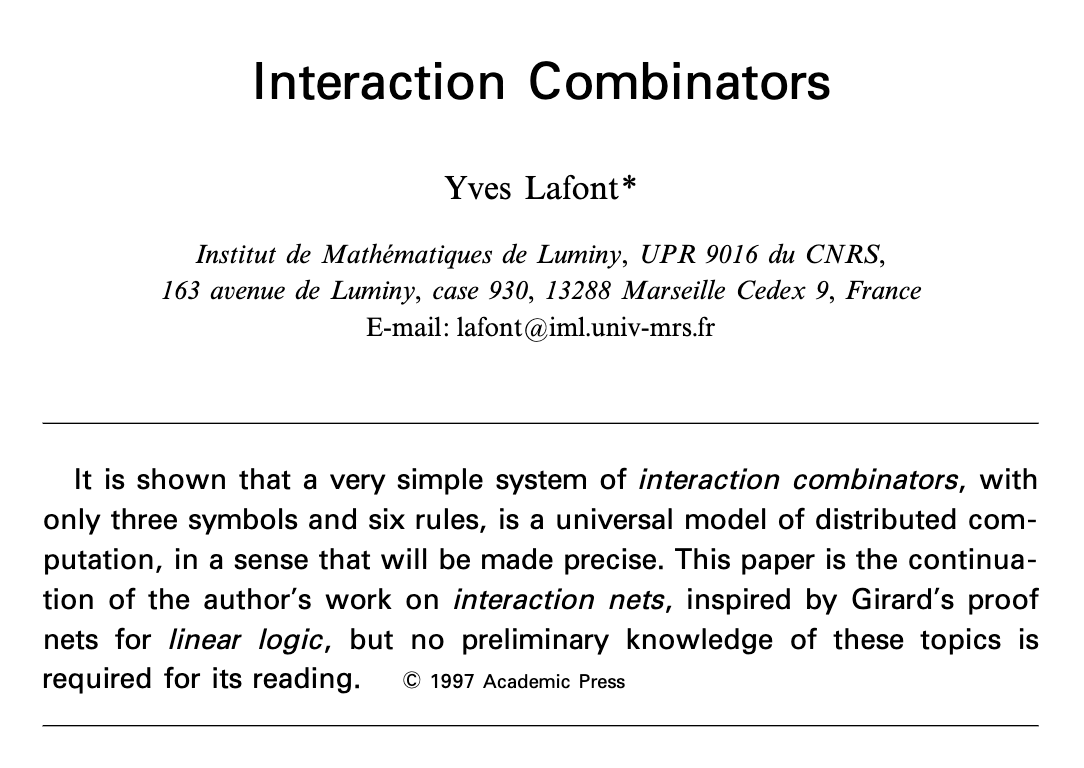

How does Bend do this, and how does it work under the hood? In Bend, expressions and values are internally modeled as graphs called "Interaction Combinators", basically representing the chain of computations that need to be carried out, where larger expressions come to life as a number of these graphs tied together. Running the program then boils down to reducing these graphs step by step; since each reduction step only touches a small local part of the graph, independent parts can be reduced at the same time, which is what allows instructions to be executed in parallel wherever possible - that's my rudimentary understanding of it right now.
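To illustrate what "work that can run in parallel" looks like, here's a plain Python sketch (not Bend code; the function names are hypothetical) of the divide-and-conquer shape that this approach thrives on: a range sum that splits into two recursive calls that don't depend on each other. In Python you have to hand those halves to workers yourself, which is exactly the bookkeeping Bend promises to take off your hands.

```python
from concurrent.futures import ThreadPoolExecutor


def tree_sum(start: int, end: int) -> int:
    """Sum the integers in [start, end] by recursively halving the range.

    The two recursive calls below don't depend on each other, which is the
    kind of independent work a runtime like Bend's can schedule in parallel
    on its own. Plain Python simply evaluates them one after the other.
    """
    if start > end:  # empty range
        return 0
    if start == end:
        return start
    mid = (start + end) // 2
    left = tree_sum(start, mid)
    right = tree_sum(mid + 1, end)
    return left + right


def parallel_tree_sum(start: int, end: int) -> int:
    """Hand the two top-level halves to separate workers ourselves.

    This explicit scheduling is the part Bend aims to do automatically.
    On a standard CPython build the GIL prevents a real speed-up for this
    CPU-bound work with threads; the point here is the structure.
    """
    mid = (start + end) // 2
    with ThreadPoolExecutor(max_workers=2) as pool:
        left = pool.submit(tree_sum, start, mid)
        right = pool.submit(tree_sum, mid + 1, end)
        return left.result() + right.result()


if __name__ == "__main__":
    print(tree_sum(1, 1_000), parallel_tree_sum(1, 1_000))
```

Both calls return 500500 (that is, 1000 · 1001 / 2); the difference is only in who decides where the independent halves run.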

The concept of interaction combinators was originally proposed in a paper by Yves Lafont back in 1997:

Beyond that, Bend also has some syntactic differences from real Python. Victor Taelin, the creator of Bend, reports that it took over a decade to put this concept into practice:

Tech and Web Dev

CSS Masonry Saga Part 3

The CSS Masonry Saga continues! If you haven't been following what's been happening on that front, here's a quick recap:

- Two weeks ago the WebKit team over at Apple published a proposal for integrating a Masonry layout as a part of the CSS Grid spec.

- A week later (last week), the Chrome team published an article in response, voicing their concerns about this proposal.

I don't know why the WebKit team decided to open this can of worms, but at this point it's become a pretty lengthy discussion.

The most recent article to make the rounds is by Piccalilli, who has appeared in the Newsletter before with his piece "A (More) Modern CSS Reset". He weighs in on the CSS Masonry debacle with a new article voicing his concerns about the accessibility of Masonry grids, especially regarding their tabbing behavior:

Essentially, when you have a Masonry grid, the tab order becomes ambiguous: in which order should focus travel, horizontally across the rows or vertically down the columns? What's the expected behavior in this scenario? Since the visual arrangement doesn't necessarily match the order of the items in the source, this causes confusion for users who rely on keyboard navigation.
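A small Python sketch can show where the ambiguity comes from. It assumes a simplified "shortest column first" placement (each item, in source order, goes into the column that currently ends highest), which is not taken from either proposal; the function names and item heights are made up. Keyboard focus follows source order by default, so it zig-zags across columns, while someone reading the grid column by column would expect a different sequence.

```python
def masonry_layout(heights: list[int], columns: int) -> list[tuple[int, int]]:
    """Place items, in source (DOM) order, into the currently shortest column.

    Returns one (column, top) position per item. On ties the leftmost column
    wins, because min() returns the first minimal index.
    """
    column_tops = [0] * columns
    positions = []
    for h in heights:
        col = min(range(columns), key=lambda c: column_tops[c])
        positions.append((col, column_tops[col]))
        column_tops[col] += h
    return positions


def column_reading_order(positions: list[tuple[int, int]]) -> list[int]:
    """Item indices ordered column by column, top to bottom within each column."""
    return sorted(range(len(positions)), key=lambda i: positions[i])


if __name__ == "__main__":
    heights = [100, 300, 200, 100, 100, 100]  # made-up item heights in px
    pos = masonry_layout(heights, columns=3)
    tab_order = list(range(len(heights)))  # focus follows source order by default
    print("columns visited while tabbing:", [pos[i][0] for i in tab_order])
    print("column-by-column reading order:", column_reading_order(pos))
```

With these heights, tabbing visits columns 0, 1, 2, 0, 0, 2, while reading each column top to bottom gives the item order 0, 3, 4, 1, 2, 5, so neither "rows" nor "columns" describes what a keyboard user actually gets.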

We can have a different Web

In a beautiful essay, Molly White provides a poignant analysis of the current state the Web finds itself in, lamenting the good old days of the internet when things seemed to be much easier than they are today. She delves into some of the reasons why we might be feeling this way, and reflects on how large corporations and different monetization strategies have transformed the current landscape:

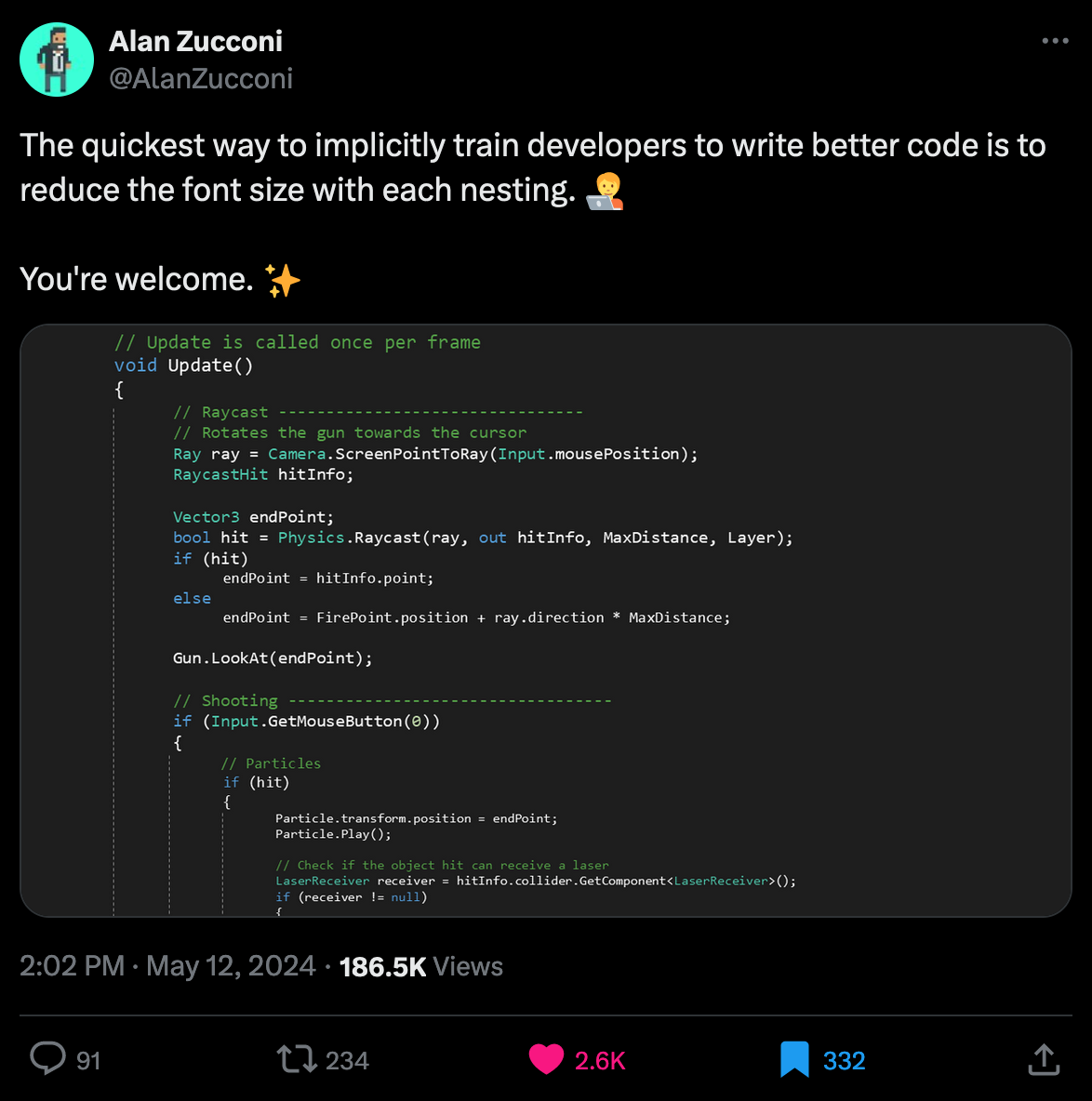

How to train a Developer

Alan Zucconi shares an interesting trick that should help keep your code in check and will implicitly train you to write better - flatter - code:

Gorilla Updates

We'll safely skip over the usual AI Corner segment this week, since we've already copiously talked about the current state of AI in the opening section.

As far as Gorilla things go, this week I published another Hypersub-exclusive tutorial over on Paragraph, on a topic I've been meaning to tackle for a while now: deforming geometric shapes and creating all sorts of wavy tile patterns. I'm not gonna reveal too much here, but it's actually not too complicated a trick - if you're interested, the article's here:

If this sounds interesting and you'd like access to three other exclusive pieces of my writing, plus a monthly artwork airdropped directly into your ETH wallet, consider signing up to my Hypersub here. If you're already subscribed, thanks a million, and I hope you enjoy the tutorial! Let me know if you need any clarifications - and make sure to join the members-only discord channel!

While we're on the topic of Ethereum, my Hypersub got featured in the Coinbase Wallet, which absolutely made my day when Bas notified me about it (thanks again for that):

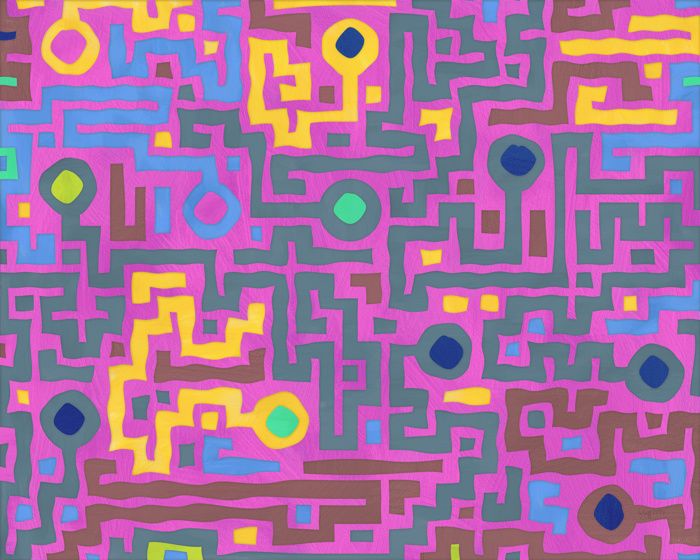

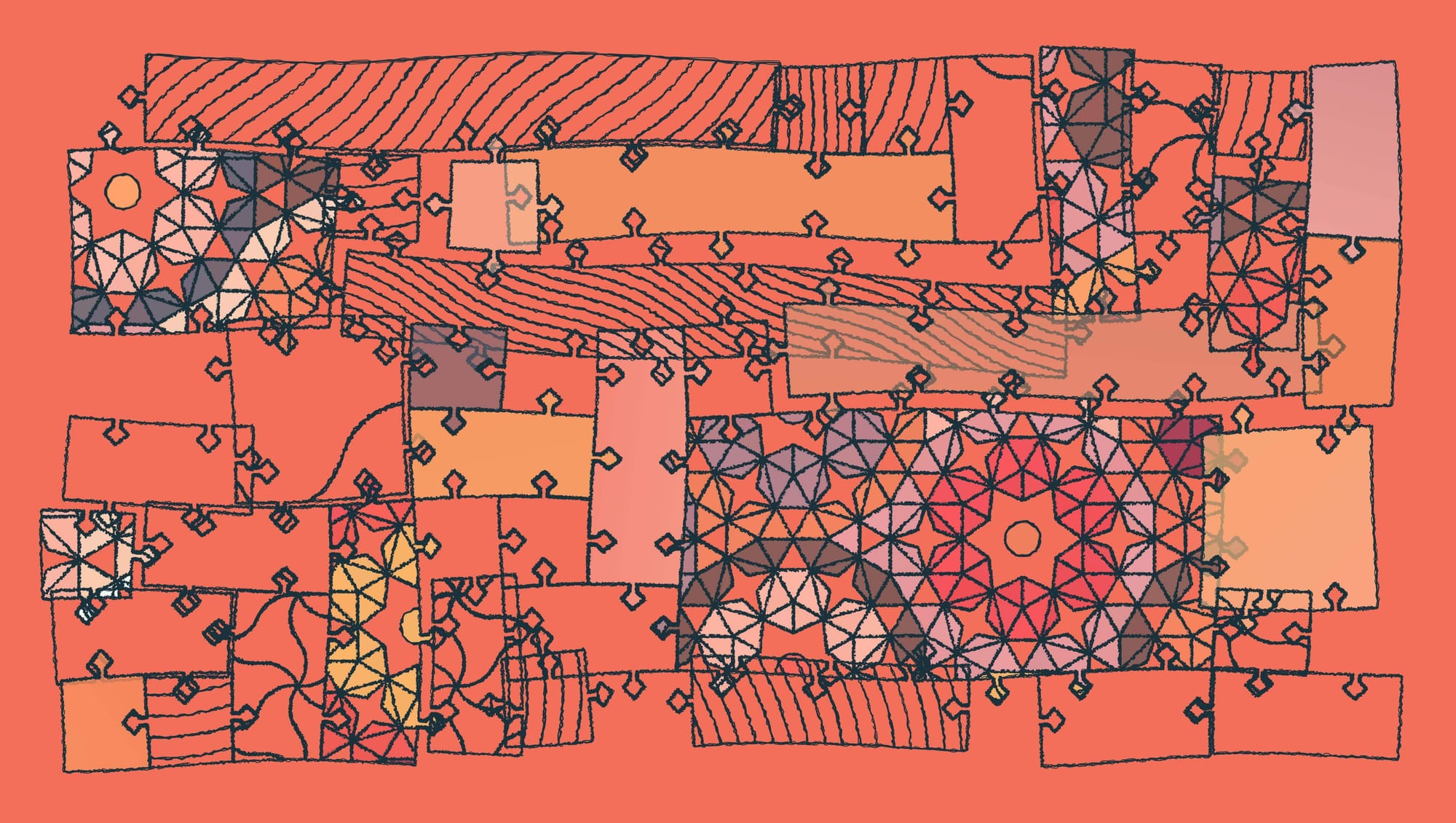

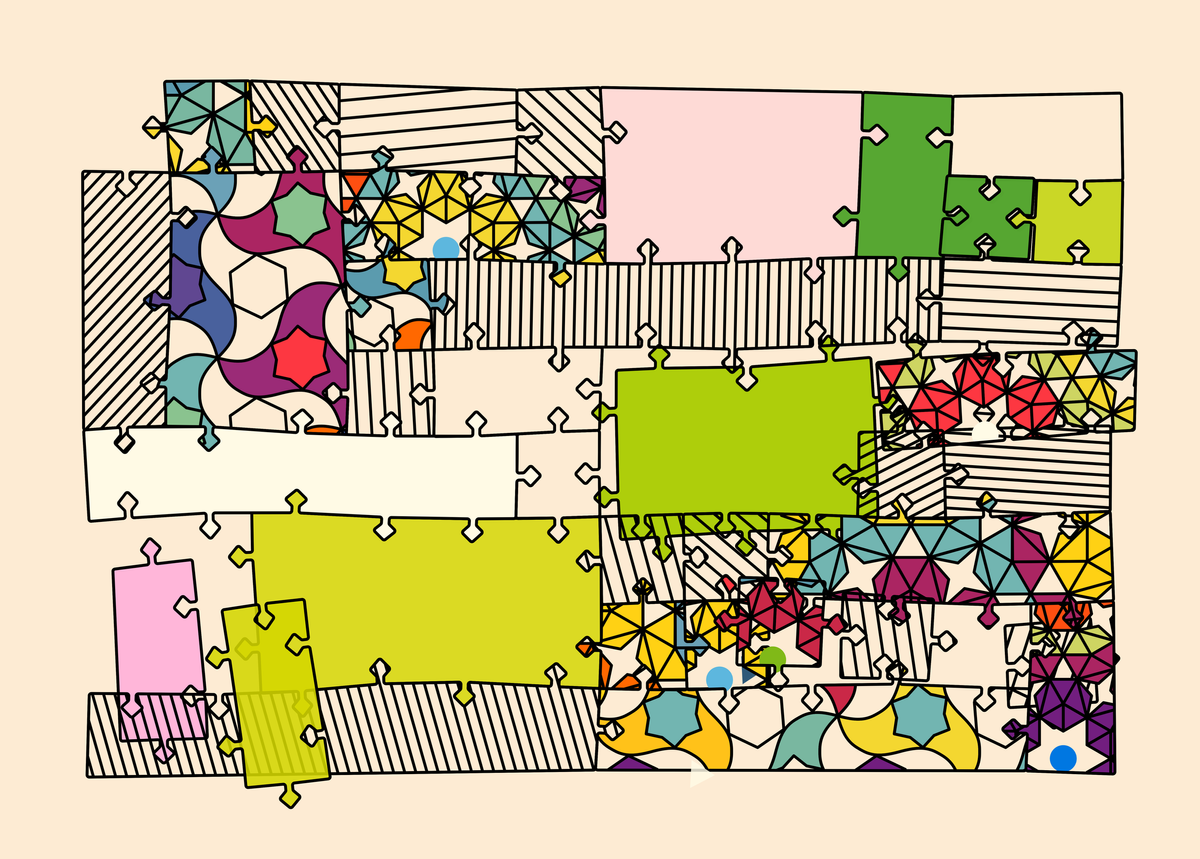

Besides this, I continued working on my Arabesque Puzzle Grids, which turned into something I'm extremely proud of. A write-up for the project is almost complete and should be published quite soon - here's a sample of one of the outputs that I'm really happy with:

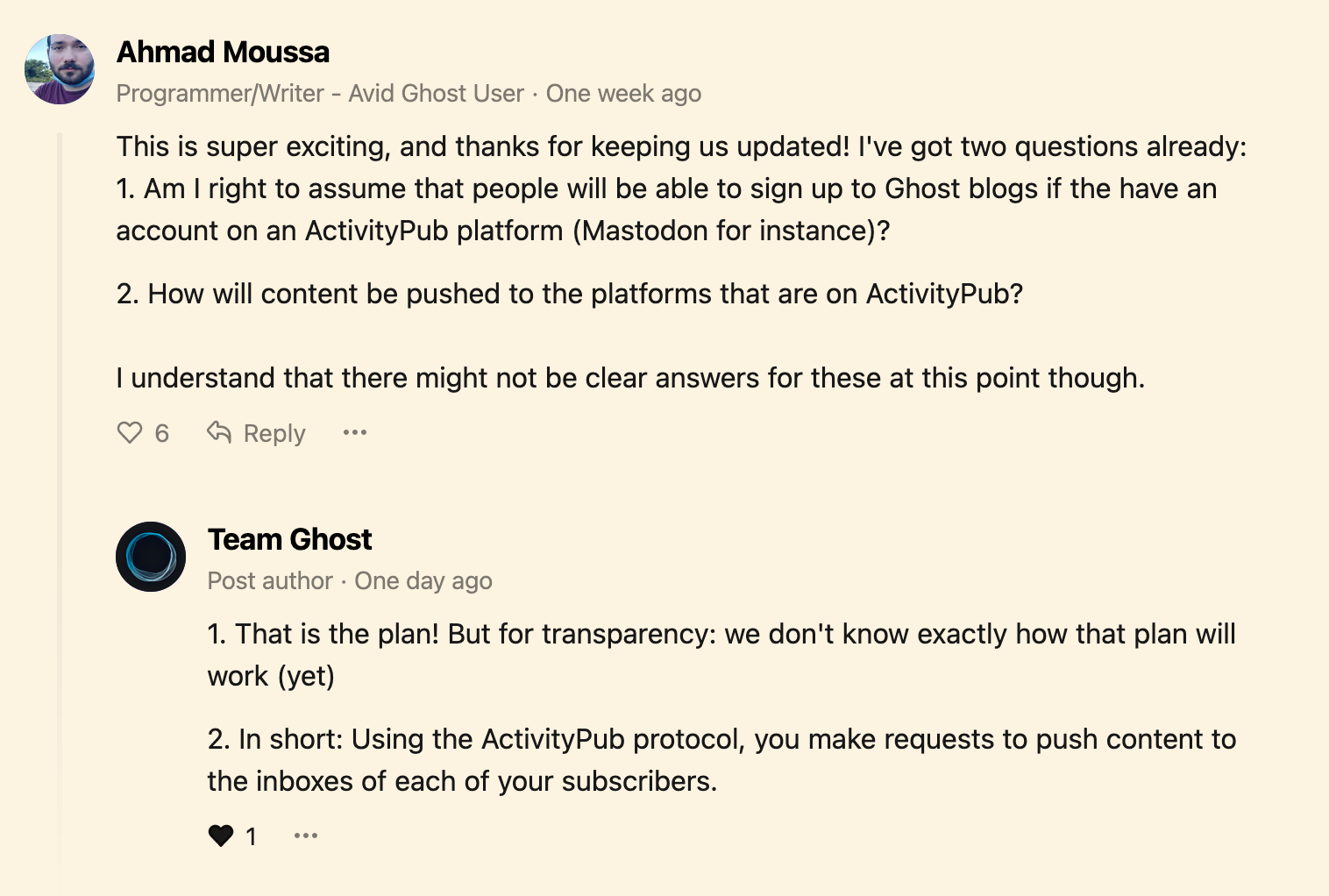

One last thing pertaining to the blog: Ghost published another update on the ActivityPub integration they're currently working on. While it's still very early, they seem committed to it and are already running some initial tests. For instance, the integration will enable Ghost blogs to follow each other, just like other accounts on ActivityPub can.
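For a rough idea of what "following" means at the protocol level: in ActivityPub, one actor delivers a Follow activity to another actor's inbox, and the receiving server typically answers with an Accept that wraps the original Follow. Below is a minimal Python sketch of those two JSON payloads. The actor and activity URLs are hypothetical, HTTP delivery and request signing are left out entirely, and this says nothing about how Ghost will actually implement it.

```python
import json

AS_CONTEXT = "https://www.w3.org/ns/activitystreams"


def make_follow(follower: str, followed: str, activity_id: str) -> dict:
    """ActivityStreams 'Follow' activity: `follower` asks to follow `followed`.

    In ActivityPub this gets delivered to the followed actor's inbox.
    """
    return {
        "@context": AS_CONTEXT,
        "id": activity_id,
        "type": "Follow",
        "actor": follower,
        "object": followed,
    }


def make_accept(followed: str, follow: dict, activity_id: str) -> dict:
    """'Accept' activity the followed actor sends back, embedding the Follow."""
    return {
        "@context": AS_CONTEXT,
        "id": activity_id,
        "type": "Accept",
        "actor": followed,
        "object": follow,
    }


if __name__ == "__main__":
    # Hypothetical actor and activity URLs for two blogs.
    blog_a = "https://blog-a.example/activitypub/actor"
    blog_b = "https://blog-b.example/activitypub/actor"
    follow = make_follow(blog_a, blog_b, "https://blog-a.example/activities/1")
    accept = make_accept(blog_b, follow, "https://blog-b.example/activities/1")
    print(json.dumps(accept, indent=2))
```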

They also replied to my question on their first update, which does shine just a little bit of light on how things will work:

Music for Coding

I don't listen to ambient music very often, since I'm seldom in the mood for it, but over the weekend I thoroughly enjoyed the dreamy soundscapes of Dan Abrams, aka Shuttle358:

And that's it from me! Hope you've enjoyed this week's curated assortment of genart and tech shenanigans!

Now that you find yourself at the end of the Newsletter, you might as well share it with some of your friends - word of mouth is still one of the best ways to support me! Otherwise, come and say hi over on my socials - and since we've got a discord now, let me also plug that here: come join and say hi!

This week's Newsletter ended up being quite beefy (4600+ words, how 'bout that, Dan? 🤣). Take it as an early make-up for next week's Newsletter, which will likely be a rather meager one, as I'm expecting to be quite busy with IRL obligations 🙏

If you've read this far, thanks a million! If you're still hungry for more Generative Art things, you can check out last week's issue of the Newsletter here:

A backlog of all previous Gorilla Newsletters can be found here:

Cheers, happy sketching, and again, hope that you have a fantastic week! See you in the next one - Gorilla Sun 🌸

Interested in advertising or sponsoring the Blog? Reach out!