Welcome back everyone 👋 and a heartfelt thank you to all new subscribers that joined in the past week!

This is the 66th issue of the Gorilla Newsletter - a weekly online publication that sums up everything noteworthy from the past week in generative art, creative coding, web3, tech and AI - with a spritz of Gorilla updates.

If it's your first time here, we've also got a discord server now, where we nerd out about all sorts of genart and tech things - if you want to connect with other readers of the newsletter, come and say hi: here's an invite link!

That said, hope that you're all having an awesome start into the new week! Here's your weekly roundup 👇

All the Generative Things

Generative Anesthetics: A Eulogy for Generative Art

One of the most important recent pieces of writing, which I'm quite late to cover, is Kevin Esherick's thought-provoking critique titled Generative Anesthetics. In it he eloquently points out the shortcomings of current generative art in the context of blockchain tech and the economic market that's emerged around the art-form in recent years:

Usually I'd put this one in the Web3 section of the newsletter, but I believe that it'll be an important read for many years to come. Esherick explicitly states his main point of critique: the current NFT market and the mass-production/consumer culture surrounding it set a detrimental precedent, generally making for a breeding ground that spawns weak and uninteresting works. The root of this problem can be traced back to how generative art is inherently financialized on the blockchain - maybe we've grossly over-glorified digital ownership in this setting. Just because generative art can exist under this tokenized form, doesn't mean that it always needs to do so.

Here's one point that I'd like to offer my thoughts on, not to refute it but to provide context:

Usually this involves something about the “dynamic interplay between order and chaos” or “the emergence of complexity from simple algorithmic rules.” The works are algorithm-first. Meaning, the real human impact of the artwork, is with frustrating frequency only an afterthought. In place of meaning come technical explainers that fill the void where concept should be.

The reason for generative art being primarily algorithm-first, in lieu of trying to explore interesting concepts, is that generative art simply is inherently algorithm-first. Artists that engage with the art-form are mainly driven by exploratory desires. In essence, a different Modus Operandi. A good source to back up this claim is an article by Amy Goodchild from a few months ago, where many generative artists chime in with their raison d'être:

Another important aspect that plays into this is the set of affordances of code as a medium, as well as those of the modern tools used for making generative art. These also play a big part in the resulting aesthetics, aggravating this feeling of sameness. These two factors might not be a good recipe for making innovative, novel, and impactful works.

But then again, what actually is the real human impact of an artwork? If you tell me... maybe I can work that into my next piece.

There were many responses to Esherick's article, all of them worth reading and taking in - the most important one of them however came from Maxwell Cohen, titled "Leave Generative Artists Out of It". The text makes a very important point that Esherick's lengthy analysis seemingly leaves out: viewing all of these issues from the vantage point of the artists, most of whom are simply lumped together as the makers of a large body of uninteresting work:

Maxwell makes a few points that really hit home:

When we peruse Artblocks or Objkt or Fxhash and look over the generative work there, we must remember that we’re looking first-and-foremost at experimentation, at uncertainty. We are looking at works in progress. We are looking at people fooling around with a new medium, a new mechanism, the resultant mix of new paint.

Sure, there is a lot of trash out there, to be very blunt about it. Only out there for a quick buck. Sure, maybe a lot of my works from the early HEN days are by today's standards also considered trash, but it's important to keep in mind that these works don't really claim to be art, as much as they've been ongoing explorations of the medium, the underlying tech, and a means to document my personal journey.

I think it's a bit unfair, even a bit snobbish to imply this kind of grouping statement. I'll be honest, it hits a little bit of a nerve. At this point I've talked to dozens of the generative artists currently active in the space, who come from all sorts of backgrounds and all sorts of skill levels. The common denominator is that they all have interesting stories to tell about their works, and are deeply involved with their practice, pouring endless amounts of thought and passion into their works:

To peruse generative art projects individually is to encounter a gargantuan array of aesthetics, moods, styles, influences, and themes, let alone coding practices. This is not an assembly line run by automatons (some of them, maybe); these are real artists who have approached a new medium with exploration their primary purpose.

Many of them do not receive the recognition that they deserve. But like any other niche, generative art has also become hyper competitive. Of course, the market will not prosper on passion alone. This is to say that even if a lot of the ideas are rehashed, it doesn't mean that this collective body of work hasn't contributed anything, or doesn't have any value.

One other notable response to Esherick came from Linda Dounia, who formulates her thoughts much better than I can. She describes the relationship between gen art and the market as an "arranged marriage":

Esherick's essay is a refreshing take, especially when compared to some of the other critiques that I've read, often not amounting to much more than loose bundles of words that leave you dizzied and confused after taking them in. When you write a critique, I think you owe it to your readers to at least be eloquent and make it count. Critique needs to breed discourse, and Esherick did it masterfully.

Algorithmic Art as a Subset of Generative Art

On a related - algorithm-first - note, Monokai wrote a statement addressing the current terminological problems surrounding the label "Generative Art" and its conflation with AI Art these days:

I had the chance of chatting with Monokai a bit during the genart summit earlier this month, and we actually talked exactly about this issue, which makes reading his words so much more interesting. Maybe I can offer my own thoughts - unsurprisingly I've also been thinking about this quite a bit.

I fully stand behind the view that it is semantically correct to classify AI generated art as generative art, primarily due to how these models inherently function - be it image generators or LLMs. You might have noticed this yourself: feeding in the same prompt twice will usually return outputs that adhere to what you asked for in your prompt, but also present variations. In other words: same prompt ≠ same output. This is because the process by which images and text are sampled involves randomness.
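To make the "same prompt ≠ same output" point a bit more tangible, here's a minimal sketch of temperature-based sampling. The vocabulary, the scores, and the helper names (`sample_token`, `generate`) are all made up for illustration and don't correspond to any particular model; real models compute their scores from the prompt, whereas here they're hard-coded.

```python
import math
import random

def sample_token(logits, temperature=1.0, rng=random):
    """Sample one index from unnormalised scores using a softmax distribution."""
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]

# Hypothetical vocabulary and scores, invented purely for illustration.
vocab = ["circle", "square", "spiral", "noise"]
logits = [2.0, 1.5, 1.0, 0.2]

def generate(seed, length=8):
    """Draw a short sequence of words using a dedicated, seeded generator."""
    rng = random.Random(seed)
    return [vocab[sample_token(logits, 0.8, rng)] for _ in range(length)]

print(generate(seed=1))
print(generate(seed=2))  # same scores, different seed: usually a different sequence
print(generate(seed=1) == generate(seed=1))  # fixing the seed makes it repeatable
```

The scores never change between calls, only the random draws do, which is roughly why an identical prompt can come back with a different result each time.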

Besides the points that Monokai already stated on how the process differs vastly between classic generative art and AI art (creating the generator vs. prompting/sampling from the generator), I would also argue that machine learning models are quite different from an algorithmic point of view. They are a special category of learning algorithms that distinguish themselves by also requiring storage to function. Classic generative art, on the other hand, relies on self-sufficient hand-crafted generators. When you train a model, it abstracts numeric internal representations that essentially capture the ideas presented in the training data. These learned weights need to be stored separately, and are necessary for the models to really do much at all.

I've also recently thought about the defining qualities of generative art, similarly to how Monokai approaches it, trying to pinpoint the common denominators of generative works, and whether or not this investigation could lead me to a better term for it. I kept finding myself coming back to the notion of "Emergence" as one of those defining qualities, as it encapsulates and implies a number of the aspects we usually associate with generative artworks:

- Emergence implies a system. Emergent behavior can only become apparent when a number of rules interact with each other. Several rules in combination are a system.

- Emergent behavior in turn is usually labeled as "something more than the sum of its parts", and this is generally what artists strive to do in generative art. A good generative system should also present this property.

- To some extent emergence also implies randomness. Emergent behavior is usually unpredictable and surprising, which I feel is also an important part of generative art.

While emergence is a phenomenon that many generators strive to emulate, it doesn't capture all of the dimensions of the artform. But maybe I'm also completely off with this. I'd love to hear your thoughts on the matter! I think "Algorithmic Art" is a good placeholder in the face of AI art to lessen confusion and miscommunication, since it implies a difference in the process. It might not be the ideal term to capture the entire essence of the artform however - you could make an equal argument for something like "Procedural Art" I think.

I still tend to answer with "I do creative coding" whenever I meet someone that's not in the know of all of this. Anyway, these are my 2 cents, I'll report back in when I've thought about it a bit more. Thanks for sharing your thoughts Monokai. I also couldn't stop admiring the beautiful website!

Le Random Generative Timeline - Entering the 2010s & Interview with John Maeda

Le Random's timeline is now almost caught up with the present day: this Monday (the day this newsletter comes out) will mark the addition of chapter 9, which recounts the eventful 2010s, a decade in which not only tools like P5JS entered the scene, but which also set the technological, blockchain-based precedents that catapulted generative art to new levels of popularity in the 2020s:

On the Editorial side, Monk's sat down with one of the current leading voices at the intersection of design and AI, none other than John Maeda. Currently the VP of Design at Microsoft, John Maeda was a research professor at the famous MIT Media Lab that gave birth to Processing:

In the interview it becomes apparent that Maeda embraces AI powered creativity with open arms, especially as an augmentation to human creativity rather than a replacement. His prediction is that experts in their fields will become even better, while novices will have the chance to create work that they might not have been able to achieve in their lifetime. One point that he stresses however, is the importance of understanding the capabilities and limitations of current AI - and that artists will need to become extremely efficient in their unique styles to be able to compete in this new fast paced landscape:

Visual artists and writers will need to get much faster at delivering their craft in this new age of computational creativity. We’ll all have to learn to use our seconds as carefully as we manage our days and weeks.

I am definitely feeling this on a daily basis; AI's dramatically changed the expectations for speed and variety in art and content creation - as if social media hadn't already imposed unrealistic paces, AI's just raised the bar another notch. I think this is a good point to re-share a talk that Maeda gave earlier this year, titled "Design against AI", in which he explains how designers need to adapt to the increasing influence of AI on design tools:

Besides this - not to flex (absolutely a flex) - Maeda follows me on TwiX for some reason. Please don't tell him. He might just cut me off the list to turn that Following count into a nice round 500 😂

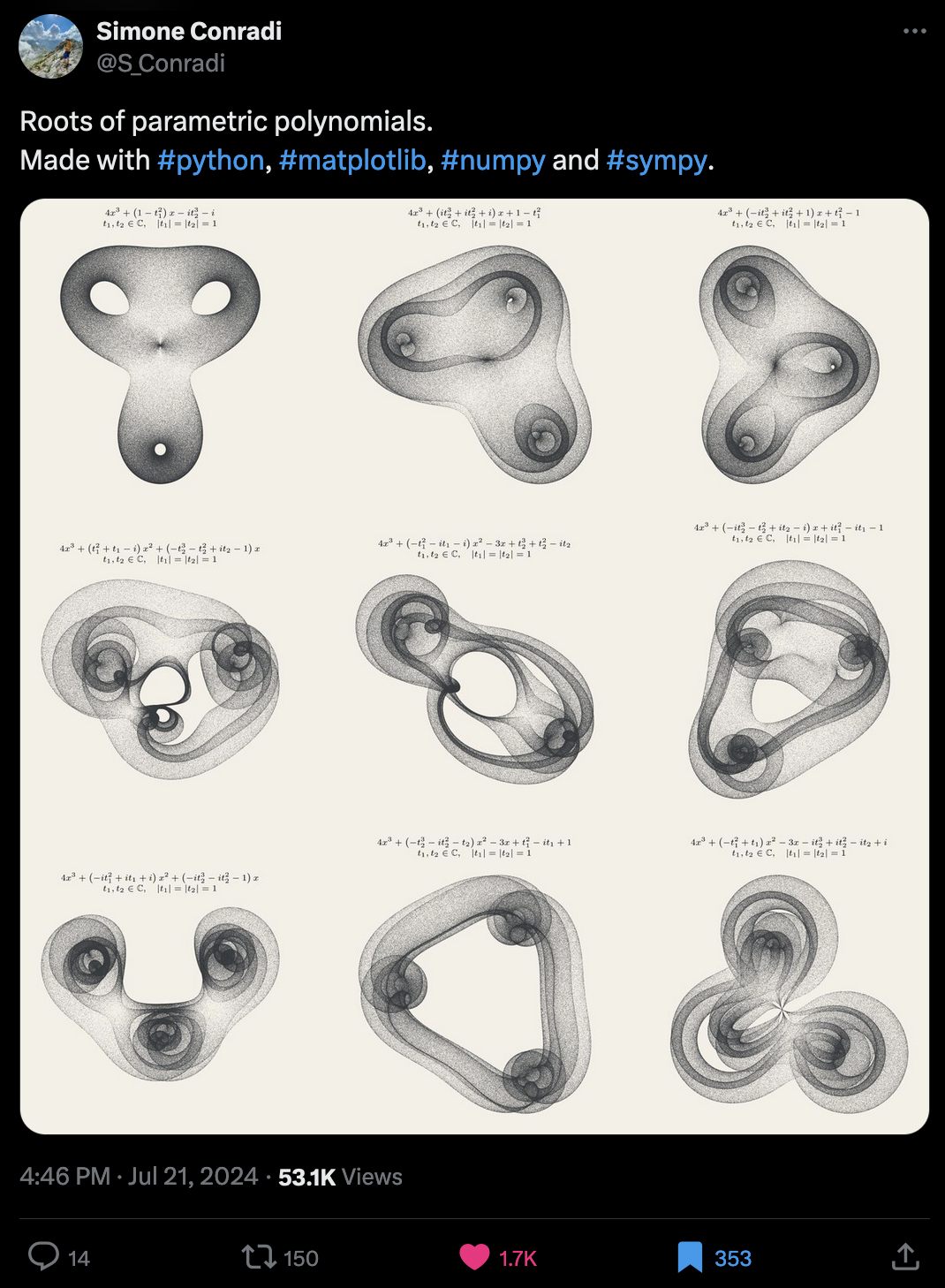

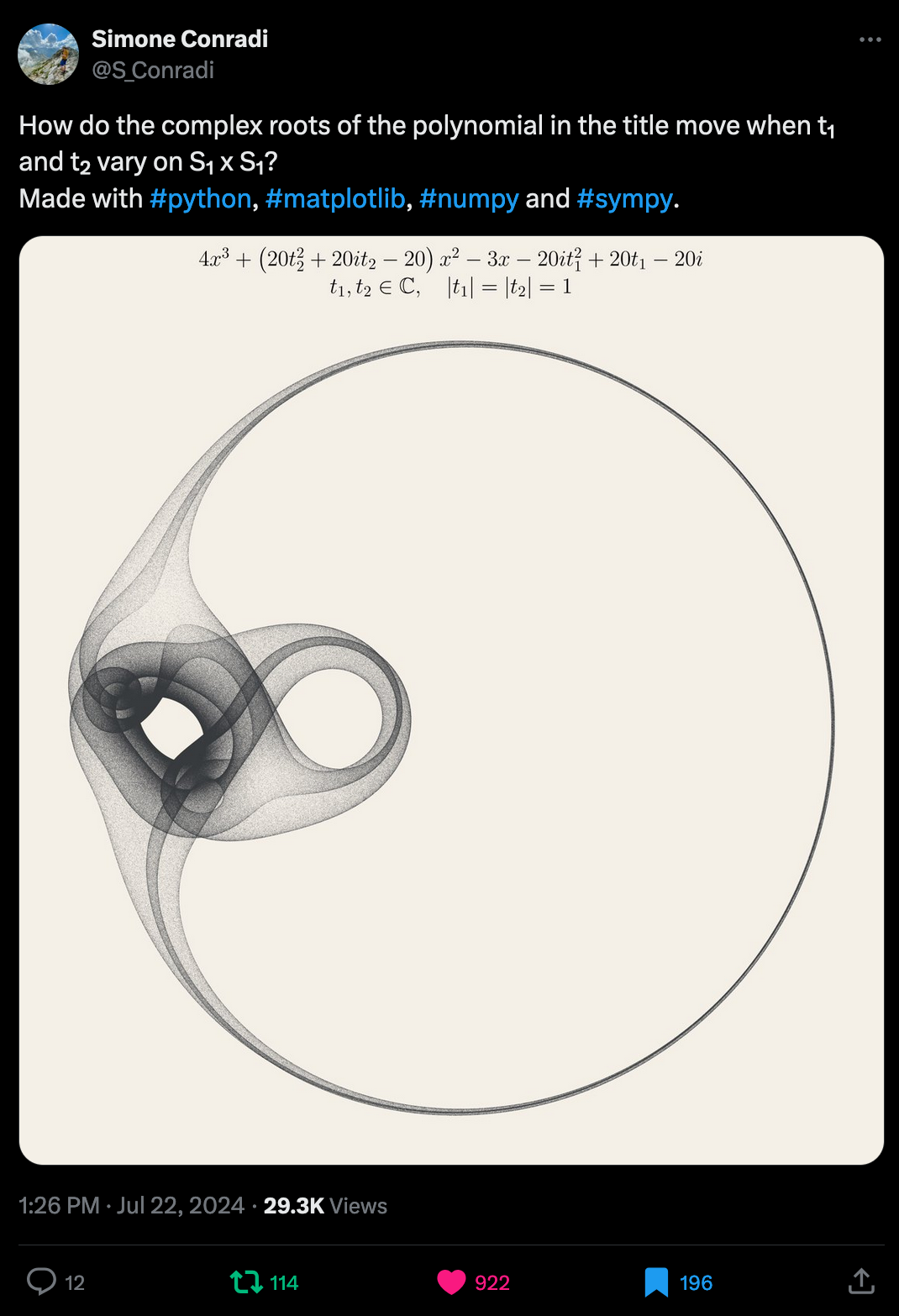

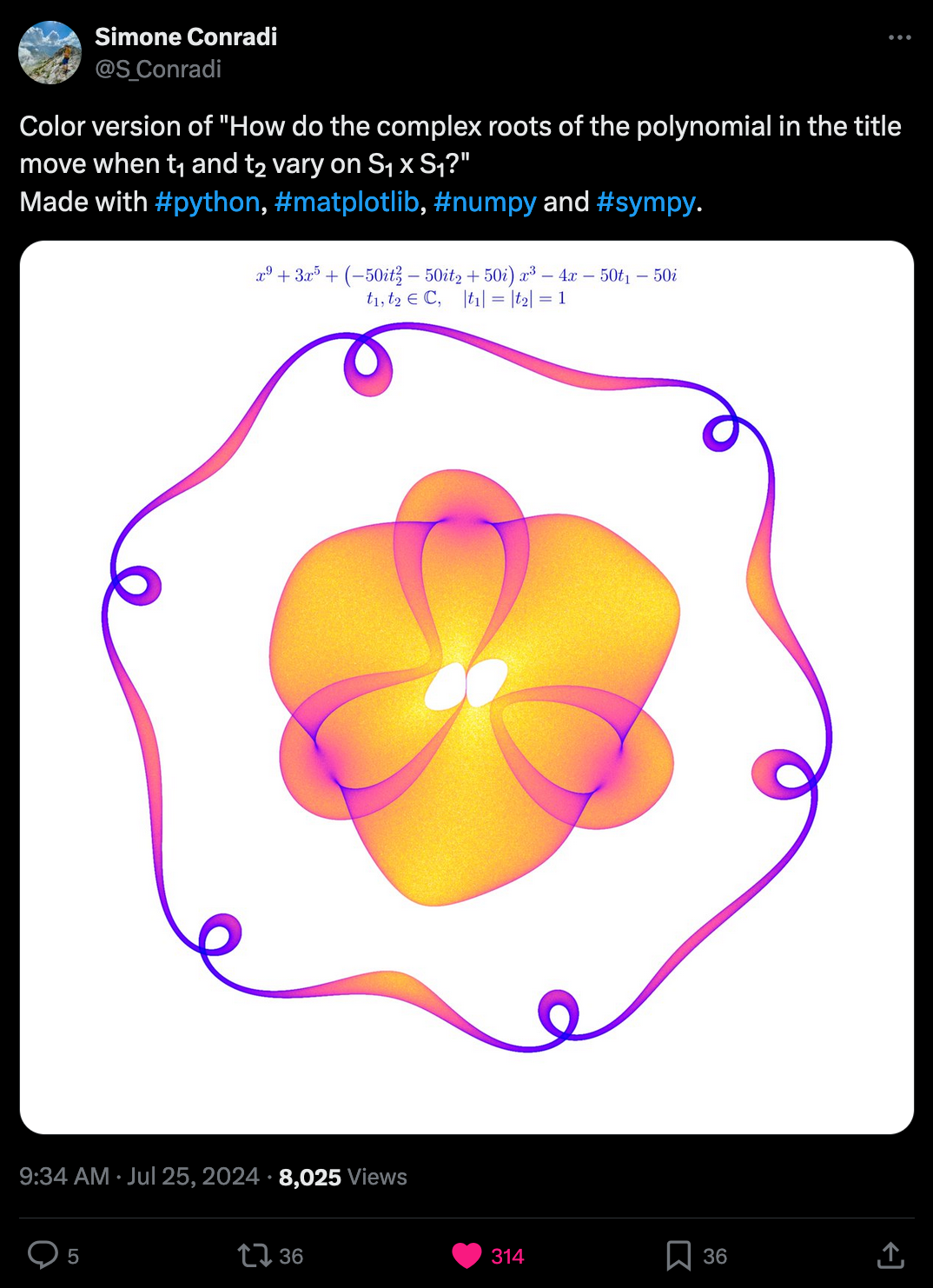

Complex Roots of Polynomials as Generative Art

Simone Conradi has been on an absolute tour-de-force of generative art in the past couple of weeks. You might remember issue 61 of the newsletter in which I shared Simone's implementation of Particle Lenia - a mesmerizing modern particle based approach to Conway's Game of Life. In the meantime Simone's explored some other mathematical notions for art making purposes, one of them being the evaluation of polynomials and their complex roots - have a look for yourself at the following beautiful shapes:

Link to Tweet #1 | Link to Tweet #2 | You should also check out Simone Conradi's Twitter profile for more beautiful variations.

Over the weekend I challenged myself and tried to figure out how to actually visualize these polynomials the way that Simone describes. To that end we need to unpack a few things, in particular what complex roots of polynomials are. Maybe there are some inaccuracies, so correct me if I'm off - not a mathematician by any means.

A polynomial is nothing more than an expression containing variables that are raised to different powers and multiplied by coefficients (some numbers basically). For example, one that might be familiar to you from your school days is the equation x^2 + 2x + 1 = 0. The roots of a polynomial are then simply the values of x for which the polynomial equals zero. For example, the only root of x^2 + 2x + 1 is x = -1, since the polynomial factors as (x + 1)^2.

Some polynomials turn out to be problematic however and don't end up having a real root; take x^2 + 1 = 0 for example: simply moving one term to the other side we obtain x^2 = -1, which has no solution among the real numbers, since the square of a real number can never be negative. But if we allow x to be a complex number, then i and -i are two solutions - essentially the complex roots of the polynomial.

I'm not going to go over complex numbers here, but if you want a refresher and have 20 minutes to spare, I'd recommend this video by Veritasium that is quite an enjoyable watch:
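If you'd like to check the two examples above yourself, here's a small sketch using the quadratic formula with Python's built-in `cmath` module, which handles the square root of a negative number for us. The function name `quadratic_roots` is just something I picked for illustration.

```python
import cmath

def quadratic_roots(a, b, c):
    """Return both roots of a*x^2 + b*x + c = 0, allowing complex results."""
    disc = cmath.sqrt(b * b - 4 * a * c)  # complex square root of the discriminant
    return (-b + disc) / (2 * a), (-b - disc) / (2 * a)

print(quadratic_roots(1, 2, 1))  # x^2 + 2x + 1: the root -1, twice
print(quadratic_roots(1, 0, 1))  # x^2 + 1: the pair i and -i
```

Python writes the imaginary unit as `j` rather than `i`, so the second pair shows up as `1j` and `-1j`.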

The polynomials chosen by Simone always end up having complex roots because of the way they're structured, with the deliberate t1 and t2 coefficients. These two variables are also important in that they are the handles by which the resulting swirly shape emerges. In the context of complex numbers, S1 stands for the unit circle in the complex plane, meaning that we're simply moving t1 and t2 around it, varying their values and solving the polynomial for each combination, then marking the resulting roots on the canvas.

Naturally, I couldn't resist attempting to recreate these patterns, especially since Simone provides all that is necessary. I picked a particular variation that I thought looked cute (below), and while I didn't manage to actually get it right, it seems that the code that I threw together in about an hour is at least doing something, as there seems to be some similarity:
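To give a rough idea of what that procedure could look like in code, here's a sketch in plain Python. Big caveats: the polynomial z^3 + t1*z^2 + t2*z + 1 is a placeholder that I made up and not one of Simone's, and the root finder is a bare-bones Durand-Kerner iteration that I swapped in to keep things dependency-free (a numerical library would be the more sensible choice in practice). The helper names are hypothetical as well.

```python
import cmath
import math

def polynomial_roots(coeffs, iterations=200):
    """Approximate all roots with the Durand-Kerner method.

    coeffs lists the coefficients from the highest power down to the constant.
    """
    lead = coeffs[0]
    monic = [c / lead for c in coeffs]  # normalise so the leading coefficient is 1
    degree = len(monic) - 1
    # Common starting guesses: powers of a number that is neither real nor of modulus 1.
    roots = [(0.4 + 0.9j) ** k for k in range(degree)]
    for _ in range(iterations):
        updated = []
        for i, r in enumerate(roots):
            value = 0
            for c in monic:  # Horner evaluation of the polynomial at r
                value = value * r + c
            denom = 1
            for j, other in enumerate(roots):
                if j != i:
                    denom *= r - other
            updated.append(r - value / denom)
        roots = updated
    return roots

def unit_circle_points(steps):
    """Evenly spaced points on the unit circle, offset by half a step."""
    return [cmath.exp(2j * math.pi * (k + 0.5) / steps) for k in range(steps)]

points = []
for t1 in unit_circle_points(12):
    for t2 in unit_circle_points(12):
        # Hypothetical example polynomial: z^3 + t1*z^2 + t2*z + 1
        points.extend(polynomial_roots([1, t1, t2, 1]))

print(len(points), "points to plot as (real, imaginary) pairs")
```

Each entry in `points` is a complex number, so drawing it boils down to using the real part as the x coordinate and the imaginary part as the y coordinate. A much finer sampling of t1 and t2 would be needed before any recognizable shape shows up.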

I will definitely have to revisit the idea when I have more time and try and exactly reproduce Simone's creation.

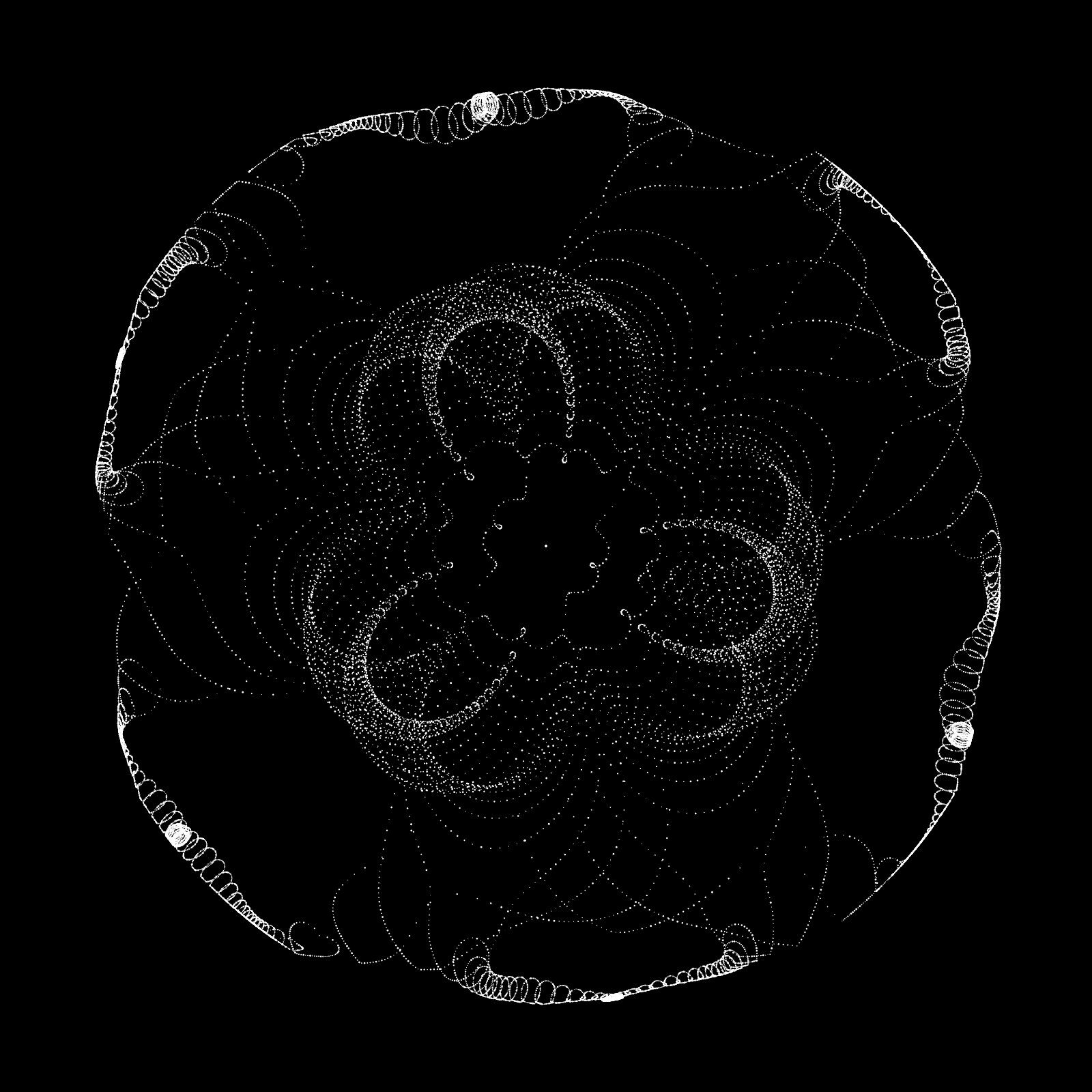

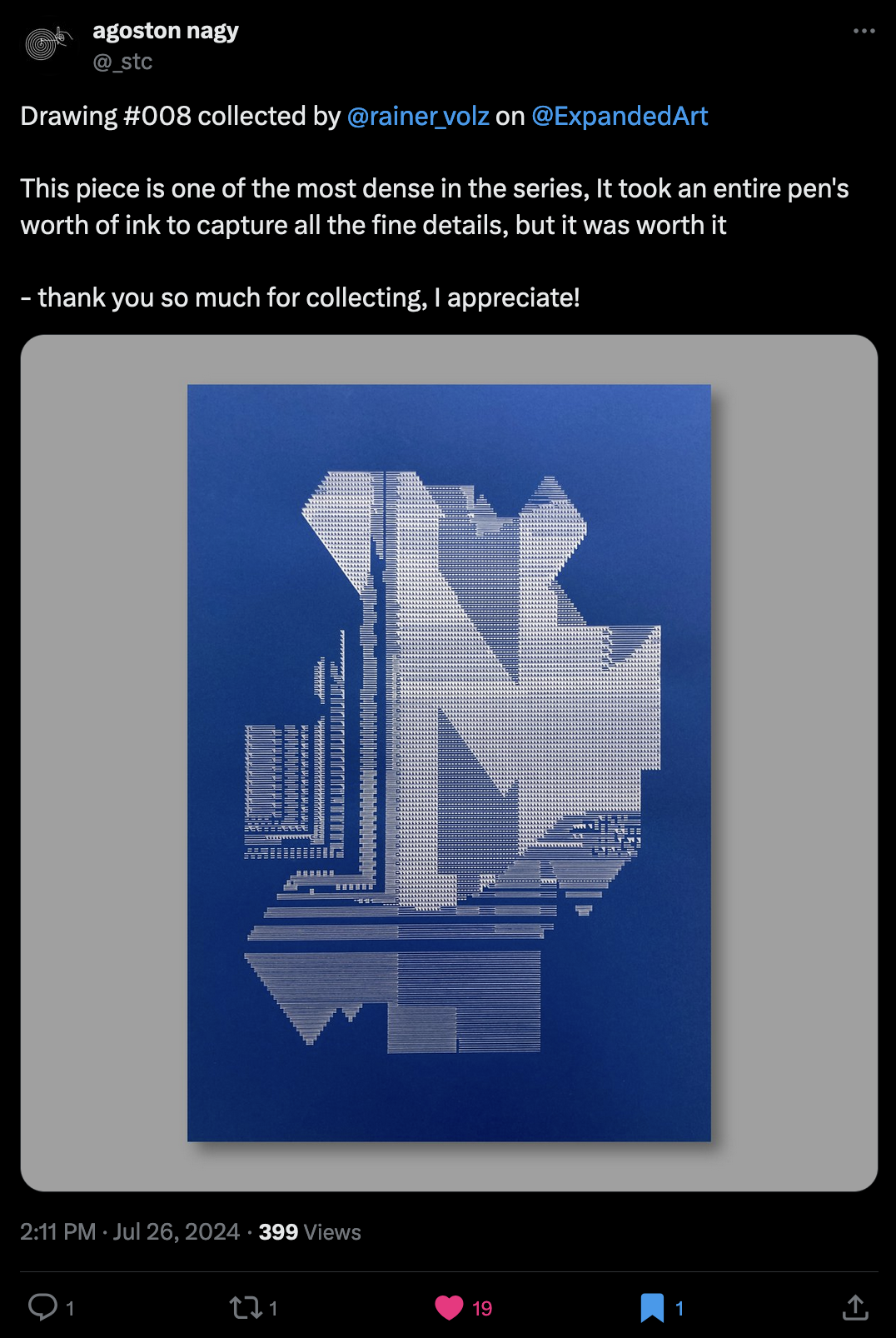

Time-shifting Geometries by Agoston Nagy

Another artist I had a lovely chat with at the summit was Agoston Nagy. He also recently published an article titled Time-Shifting Geometries, in which he gives us insight into the inspirations and the process behind his generative series titled Procedural Drawings:

Link to Tweet #1 | Link to Tweet #2 | I also had the chance of seeing one of the works in person at the Expanded gallery, I did unfortunately not snap a pic.

Agoston's work is deeply influenced by the ritualistic procedural practices of old, such as the ancient Indian Kolam, where flour is used to draw symmetric patterns for ornamental and ceremonial purposes, as well as the ancient Chinese divination text referred to as I-Ching "The Book of Changes", where random arrangements of yarrow sticks are used to make predictions and guide decisions.

He also mentions Aristid Lindenmayer's grammatical systems (L-systems), popularized by the famous book "The Algorithmic Beauty of Plants", as a source of inspiration, and proceeds to provide a breakdown of the systems behind his plotter art pieces, as well as some thoughts on their temporal aspect.
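In case you've never run into L-systems before, the core idea fits in a handful of lines: start from a string and repeatedly replace every symbol according to a set of rules, all at once. The sketch below uses Lindenmayer's classic algae example (A becomes AB, B becomes A) and is not Agoston's system; the function name `expand` is my own.

```python
def expand(axiom, rules, generations):
    """Apply the rewriting rules to every symbol in parallel, once per generation."""
    current = axiom
    for _ in range(generations):
        # Symbols without a rule are copied over unchanged.
        current = "".join(rules.get(symbol, symbol) for symbol in current)
    return current

# Lindenmayer's algae model: A -> AB, B -> A.
algae_rules = {"A": "AB", "B": "A"}
for n in range(5):
    print(n, expand("A", algae_rules, n))
```

To turn such a string into a drawing, each symbol is typically interpreted as a turtle-graphics instruction (move forward, turn left, and so on), which is where the plant-like shapes come from.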

David Gerrells on How Not to Use Box Shadows

Following up on his article from last week, in which David Gerrells writes about simulating millions of particles on the CPU with vanilla JS, he's now back with another experiment in which he tries to push things to the limit. This time he explores Box Shadows, and in particular "how not to use them":

I want to share some of the worst possible things one can do with box shadows all on a single div. Things which shouldn't work at all yet somehow they do.

So yeah, he basically ends up making an entire ray-tracer with box shadows, the writing is hilarious and engaging, and you'll learn a lot from it - check it:

Other Cool Generative Things

- Earlier this year PyCon 2024 took place over in the US in Pittsburgh, Pennsylvania. And just recently a complete playlist of talks was released for it - you can watch it here. I learned about it through a blog post from Jörg Kantel in which he shares some of the highlights (post's in German, but I think you'll figure out how to translate it). Most notably one of the talks is about the python version of P5JS.

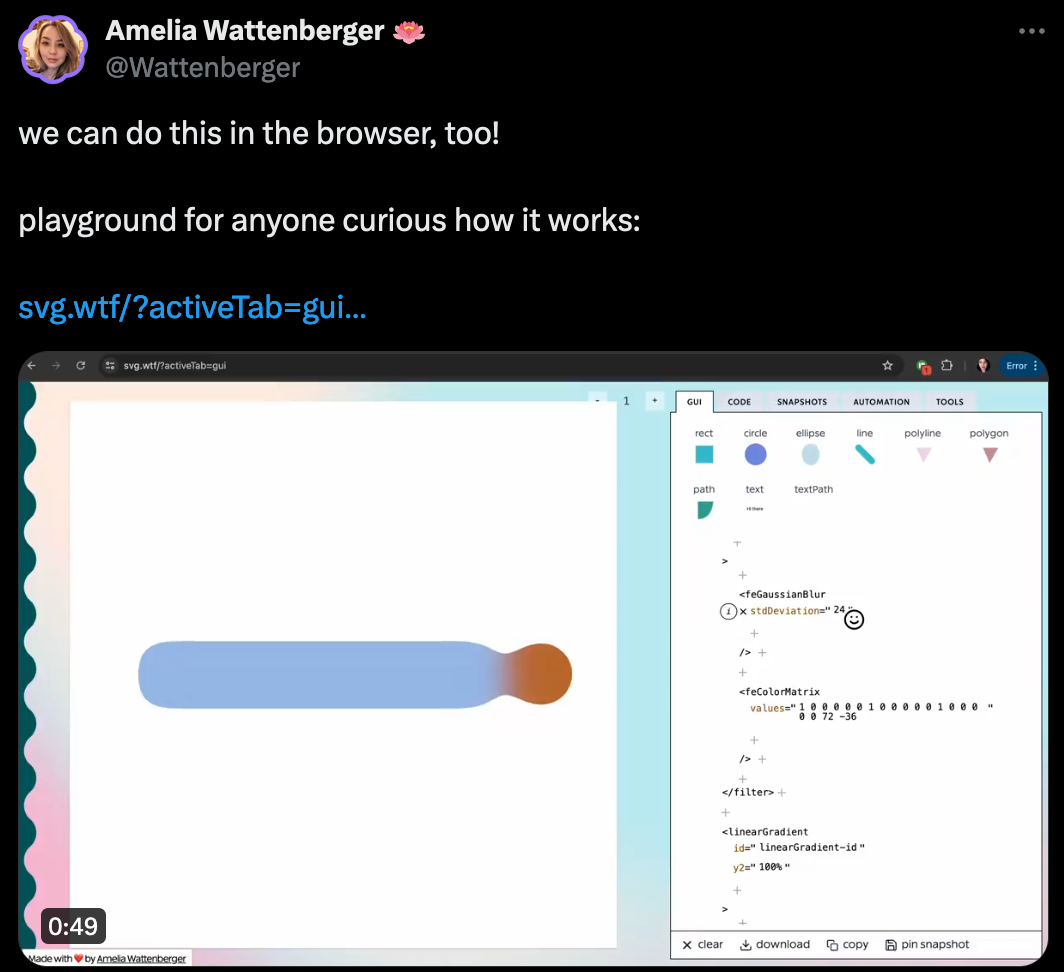

- I don't know how she does it, but Amelia Wattenberger shares yet another cool tool of hers, one which lets you assemble SVGs, simply by dragging and dropping them onto a canvas, which then automatically generates the corresponding SVG code in a side panel.

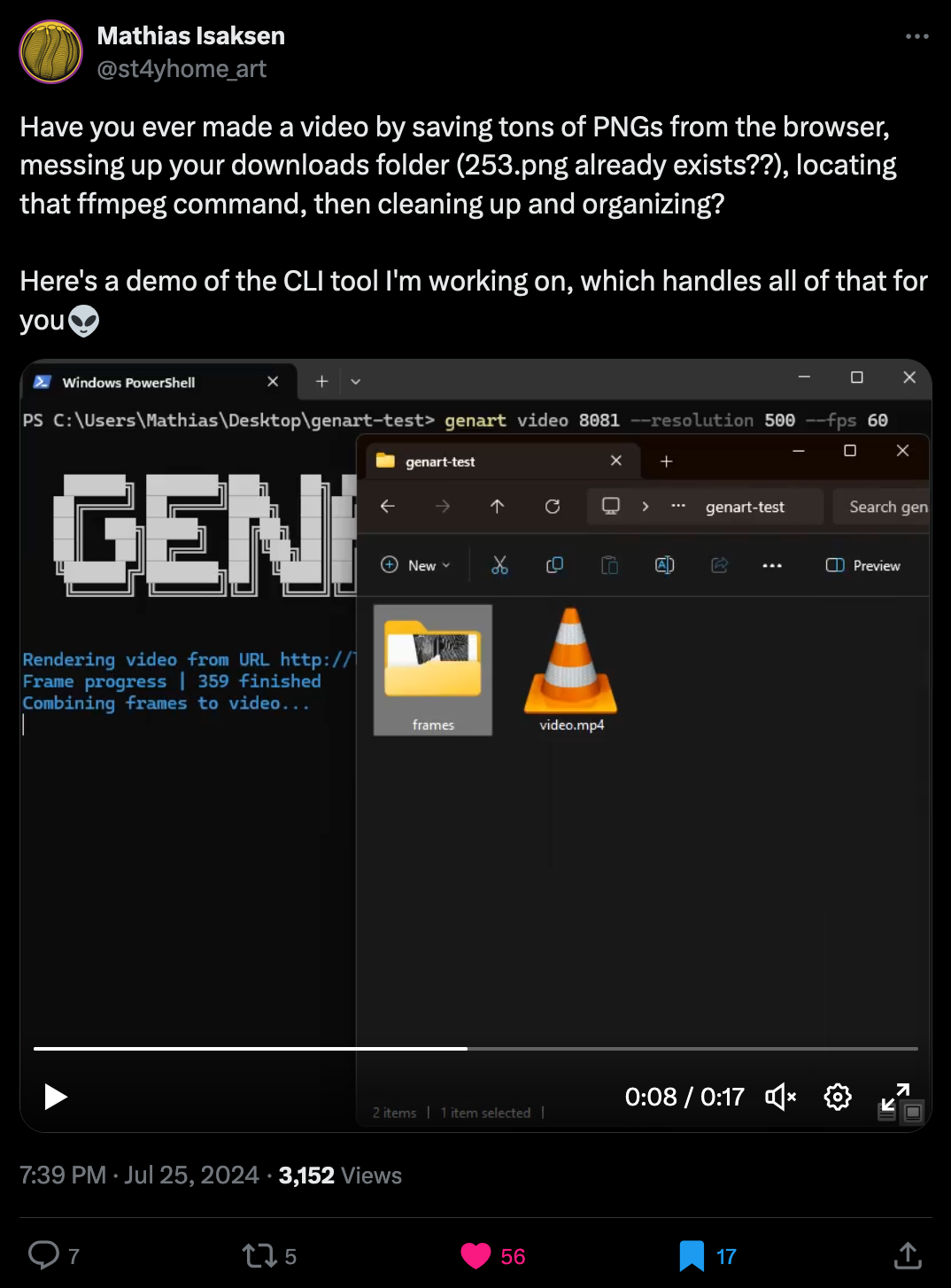

- Mathias Isaksen shared a demo for a command line tool that he's been working on, which assists you in generating animations/videos of your code art directly from a URL.

Web3 News

Riding the Bull: 1 Week In

...I'm ashamed to say, it only just clicked why it's called Rodeo.

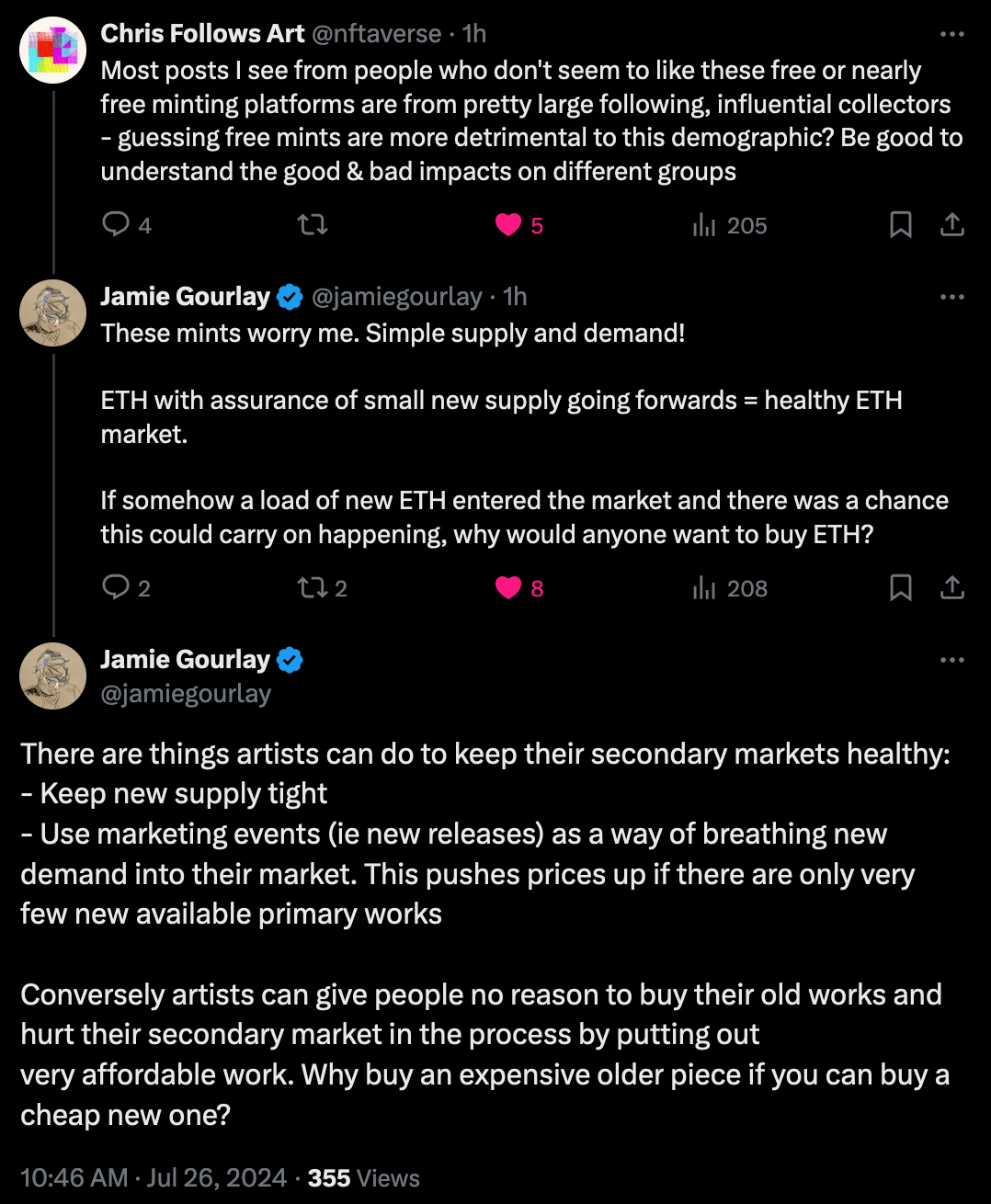

Rodeo's been a whole bunch of fun up till now, and it's been an amazing way to stock up my collection with a whole bunch of affordable works from friends and creators that I admire. After this initial honeymoon phase it's however also important to take a step back and see how Rodeo fits into the larger picture of the current market - and the overall sentiment's been a bit mixed:

I'd say that Jamie Gourlay is absolutely right with his points: pushing out this many works at such a low price point could heavily devalue an artist's body of work. I think this entirely depends on how you view Rodeo as a platform however, whether you actually consider it to be a marketplace, or just a social media platform enhanced with minting capabilities.

We've been advocating for a slower paced market with more deliberate and meaningful releases - Rodeo completely subverts this however, with its overarching mechanics incentivizing a fast pace and big quantities. It feels like this might not really be sustainable in the long run and could be a recipe for burnout. Maybe these minting mechanics will need to see some revision to make them more sustainable.

Bruce's Artistic Journey

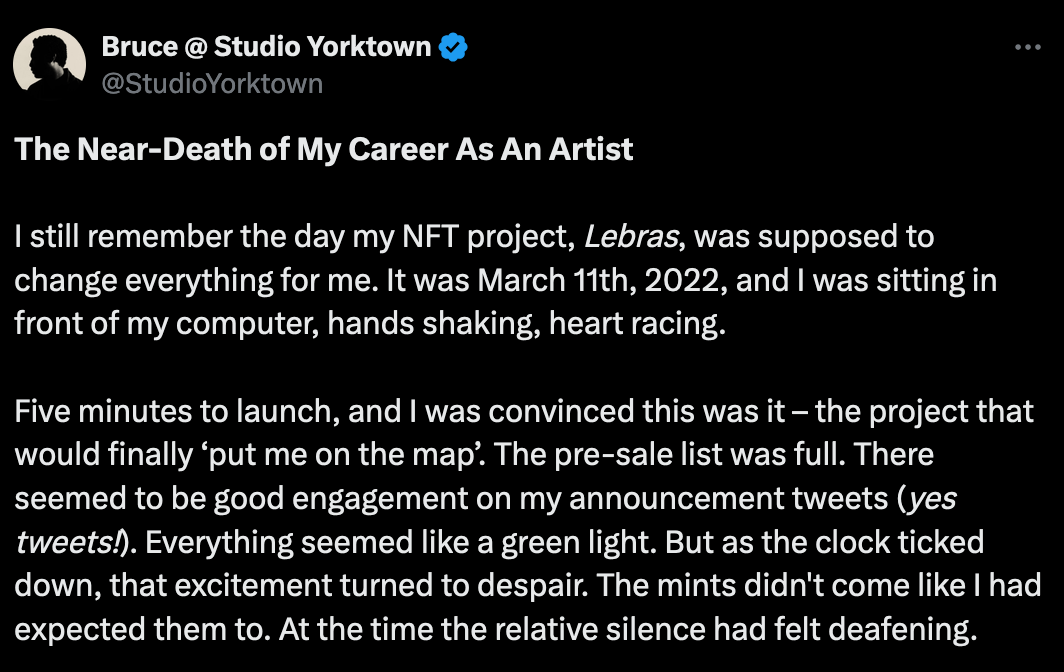

In a long-form tweet Bruce shares the story of his artistic journey, highlighting an important inflection point in his career, and a crucial change in mindset that's allowed him to persevere in the space. It's un-intuitive, and it's super difficult, especially with the pressures that the space can exert on artists at times, but Bruce advises to let go of what "could be" and focus on creating - the process is the only thing that we can actively control after all:

Maybe this is the exact reason why Bruce's methodology is so sound, and I've really admired how he's been able to be so consistent about it over the past years.

As artists in the NFT space, we face unique pressures. We are driven by a desire for success, but the metrics we use to measure it – mint numbers, revenue, floor prices – they can be cold and unforgiving. It is easy to get caught up in the narratives and expectations of others. But we must resist.

It's very easy to beat yourself up when your work doesn't meet the market's criteria for being considered a success, but it's really important to remind yourself of the reasons why you're creating and making things. The joy of making and learning things has always been my own inherent driving factor, though sometimes it's easy to lose sight of it. Back in 2021 when I minted my very first few code experiments on HEN, and later on fxhash, I had no clue how deeply impactful this would be on what I'd be doing in the following years. And what a turbulent journey it's been - with many emotional challenges. But I'm grateful that I'm still at it.

The Curator Economy by Tom Beck

Tom Beck wrote an interesting piece about publishing in the digital age, particularly about the importance of the curator in it... and I feel oddly addressed by it. In the essay he tries to redefine the role of publishers in a modern setting, and how they can more accurately perform a curatorial role:

And this newsletter is in essence a form of curation; the original motivation for the newsletter was to collect and highlight all the worthwhile things that I can scour every week so that you don't have to. While it's not the primary intent, in a way this is like telling you what is important and what isn't. Tom explains that in the current digital landscape the curatorial role has to a large extent been overtaken by the algorithm that governs most platforms:

[...] this is how all social media feeds and recommendation algorithms work. They gather information on what you consume and then feed you more based on that initial input. They quite literally cannot do anything else. They are not capable of introducing true novelty.

Tom advocates for more human friendly algorithms, and systems that can enable a symbiotic relationship between creators, curators, and the platforms. He provides an example of such a system in the Web3 setting towards the end of the piece. He also mentions Timothy Greene's "Curation Revolution" as an inspiration, which I'll definitely have to check out.

Tech and Web Dev

To kick off this section this time, I'd like to shout out one of my favorite newsletters out there (not that they need it but anyway): The Collective. It's one of my main sources for putting together this section of the Gorilla Newsletter every week since they always share bangers. Their round-up this week was really chock-full of awesome links, so I recommend checking it out:

Demystifying Cookies and Tokens

The negative connotations that we associate with browser cookies stem from the fact that over the past decade the tech has been heavily exploited for data collection, tracking, and advertising purposes. This applies in particular to third-party cookies, which track your activity across the different web pages you visit, even after you've left the domain that the tracker originated from.

In recent years this debacle has led to what is today referred to as the "Cookie Apocalypse", with major browsers disallowing and phasing out certain types of unnecessary cookies in light of tighter privacy regulations, primarily those that have been leveraged by advertisers for dubious tracking activity. While Safari and Firefox have already disabled third-party cookies, Google initially had the intent of doing so this year but seemingly postponed it to early January 2025 - here's an article on the topic that speculates about some of the reasons behind this delay:

To get to the main point of this segment however: these small pieces of data that browsers store on your machine are not always malicious in intent, in a lot of cases they are even necessary to communicate information with the server that you're connecting to and provide a better user experience (first-party cookies). For instance, many web apps use cookies to remember that you're logged in.
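As a rough illustration of the "remember that you're logged in" case, here's a sketch using Python's standard `http.cookies` module. The cookie name `session_id` and its value are placeholders that I made up; a real app would generate a long random identifier and look it up on the server.

```python
from http.cookies import SimpleCookie

# Server side: build a Set-Cookie header for a hypothetical session id.
outgoing = SimpleCookie()
outgoing["session_id"] = "abc123"
outgoing["session_id"]["path"] = "/"
outgoing["session_id"]["max-age"] = 3600    # expire after one hour
outgoing["session_id"]["httponly"] = True   # hide the cookie from page scripts
outgoing["session_id"]["secure"] = True     # only send it over HTTPS
outgoing["session_id"]["samesite"] = "Lax"  # limit cross-site sending
set_cookie_header = outgoing.output()
print(set_cookie_header)

# Later request: the browser sends the cookie back and the server reads it.
incoming = SimpleCookie()
incoming.load("session_id=abc123; theme=dark")
print(incoming["session_id"].value)
```

The attributes are what separate a well-behaved first-party cookie from a leaky one: `HttpOnly`, `Secure`, and `SameSite` each narrow down who gets to read the cookie or when it is sent.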

Tommi Hovi provides us with an insightful article in which he gives us a run-down of the different types of cookies and tokens that exist (including session tokens and JSON Web Tokens), how they work, and what legitimate problems they solve:

The State of JavaScript 2023 Results

A bit late to the party, but the State of JS 2023 came out a couple of weeks ago (after a significant delay), and the turnaround's been quite surprising. The aggregated statistics show that the general sentiment towards the current JavaScript eco-system has had an observable dip into the negative - in particular the libraries section of the global survey reflects this:

YouTuber Theo Browne tries to analyse these results and provides a few theories on why this might be the case in a video of his - he speculates that the negative sentiment is most likely due to most devs currently having to work with frameworks that they have not chosen for themselves:

Could be, but I'm not sure if I entirely agree. As some of the comments point out, it might just be the increasing complexity of the individual frameworks. Another reason could be that it's just the natural entropy of things: when you use something long enough you eventually start hating it 🤷‍♂️

It's definitely going to be interesting to see which frameworks will end up coming out on top and rise to popularity in 2025. It's likely still a safe bet to hold on to your React and Next chops in the meantime.

CSS One-Liners for Every Project

Alvaro Montoro shares 10 of his personal favorite CSS one liners that are quite useful to add to your CSS vocabulary. They're not like ground-breaking things, more situational than anything else, but still might not be things that you'd have naturally thought of:

Also highly recommend checking out Alvaro's other posts, lots of CSS goodies in there!

Geoff Graham from CSS-Tricks piggybacked onto Alvaro's post, and shares some additional one-liners of his own:

Other Cool Tech Things:

And to close off this section, here's some other cool web & tech related thingies that might interest you:

- Hamburg HTML 5th yearly Meetup: If you're somewhere near Hamburg in Germany later in November of this year, you might want to check out the HTML meetup that's held there.

- Chris McCully shares the story behind the making of Snippp.io, the tool that he's been chipping away at over the past months and also made accessible as a service for the public to contribute their own code snippets. It's been really inspiring to follow along on the journey.

AI Corner

Meta releases LLaMA3.1 405B

With the release of LLaMA 3.1, Meta is giving OpenAI, and the other closed source LLMs currently out there, a run for their money. Not only is the series of new LLaMA models on par, if not superior, in ability with the competition (according to the benchmarks), but they are also open source! Zuckerberg wrote a lengthy official statement for this occasion, explaining why open sourcing these types of models is important:

TLDR: Zuckerberg advocates for open source AI because it'll allow organizations to tailor models to their needs, let them stay in control, and protect their customers' data by avoiding dependency on third parties for this service. The bottom line is that he sees no advantage to closing off access to the tech, and argues that keeping it open benefits not only developers, but also Meta and its partners. If you're curious about the models themselves, or want to take them for a test run, you can do so here:

Over the past couple of days I've actually been playing around more and more with Claude Sonnet 3.5, and it's quickly become my go-to model for programming purposes - I'm incredibly impressed with its ability in that regard, as it just beats Gippity 4o and Gemini by miles. It's going to be interesting to see if Llama 3.1 can rival Claude in that regard, or if some fine-tuned variant will do the trick. I'm sure we'll see a whole slew of customized models in the coming weeks/months.

I've been playing with the thought of fine-tuning my own model towards that end - to aid me in my writing every week, especially with the Newsletter, where I could often use someone to help me brainstorm. I do think that I've got enough data at this point 😅

If you don't feel like reading the linked article you can also check out Fireship's humorous code report on the LLaMA King:

Besides this, among the tech billionaires, Zuckerberg currently strikes me as the most likeable character (in case he isn't actually a lizard-man). I would still love to see that cage fight with Elon at some point, but I doubt that it will ever happen.

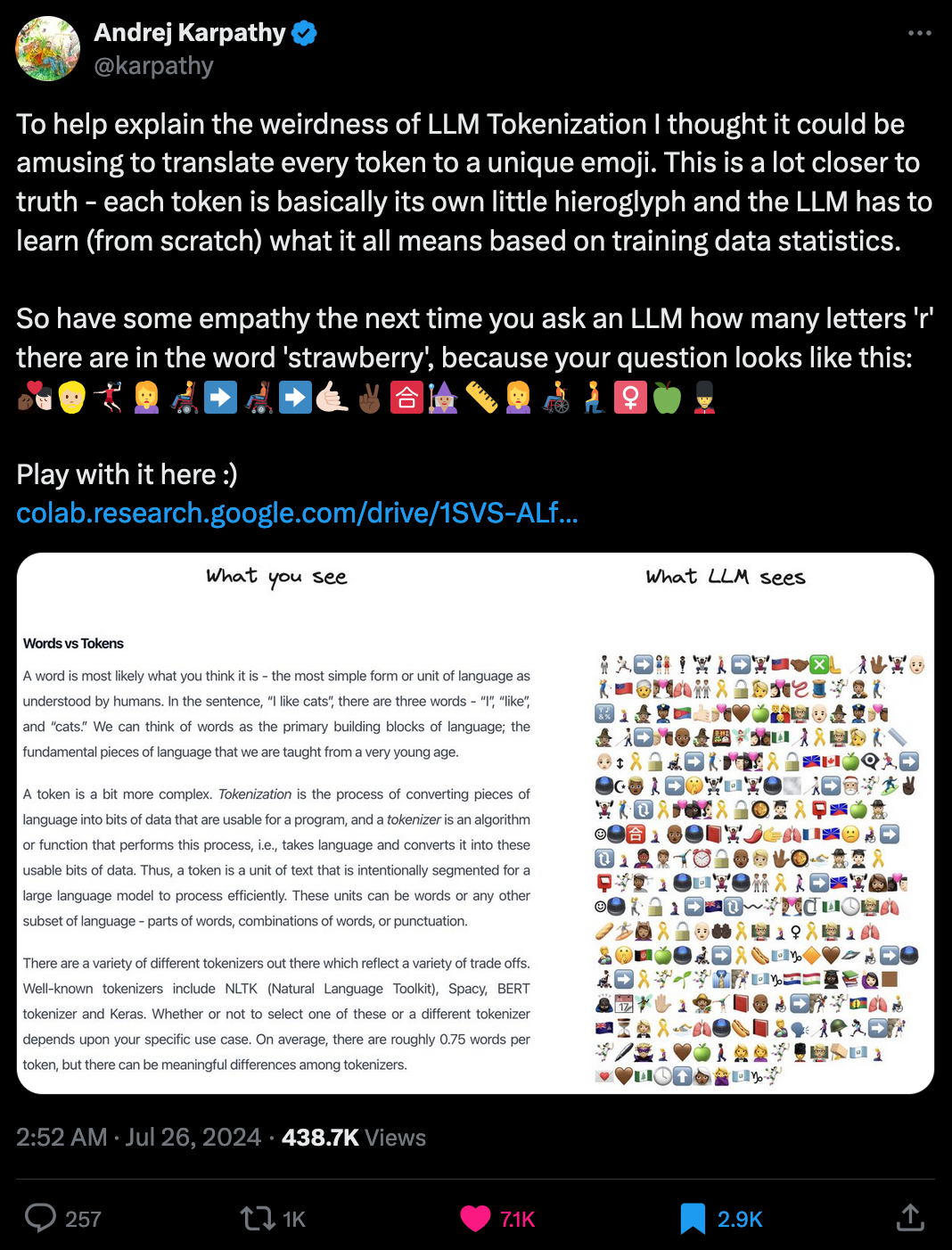

What LLMs actually See

Prompts aren't actually fed in as plain text into LLMs - the text is first split into tokens (whole words or fragments of words), each of which is mapped to a number; these are essentially hieroglyphs that the model can understand. In a recent tweet of his Andrej Karpathy likens this to representing a text as a string of emojis, where each emoji represents an individual token. For this purpose he's put together a little Google Colab, to give us an idea of what LLMs actually see:
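In the same spirit, here's a toy sketch of what turning text into tokens can look like. To be clear about the assumptions: the vocabulary is invented and tiny, and the greedy longest-match strategy is a simplification that I picked for readability; production tokenizers learn vocabularies with tens of thousands of entries from data and use more involved schemes such as byte-pair encoding.

```python
# Toy vocabulary, invented for illustration only.
VOCAB = {"gen": 0, "er": 1, "ative": 2, "art": 3, " ": 4, "is": 5, "fun": 6}
UNKNOWN = -1

def tokenize(text):
    """Greedily match the longest vocabulary entry at each position."""
    ids = []
    pos = 0
    while pos < len(text):
        for end in range(len(text), pos, -1):
            piece = text[pos:end]
            if piece in VOCAB:
                ids.append(VOCAB[piece])
                pos = end
                break
        else:
            ids.append(UNKNOWN)  # no entry matched: emit a placeholder id
            pos += 1
    return ids

print(tokenize("generative art is fun"))
```

Note how "generative" falls apart into three pieces, while the shorter words each map to a single id. The model only ever gets to see the resulting list of numbers, never the letters themselves.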

Gorilla Updates

Here's some of the stuff I worked on this week - nothing outstanding, just some random ideas in search of something that I have in mind:

I also made these pixelized patterns as an offspring to the above idea - that kinda looked like organic, fungal thingies:

Not sure where this is going at this point, but I'm having fun. With all of this said, I'm heading off on my yearly trip to Tunisia tomorrow for a much needed vacation. There will definitely be another issue next week, albeit a little shorter. I might overall be a bit quieter over on social media, which is not to say that I won't continue working on my projects while I'm there.

Music for Coding

This week I thoroughly enjoyed listening to The Paper Kites - an indie folk band from Australia that had their big break back in 2011 with their EP Woodland, a short and sweet atmospheric collection of 6 tracks that really captures the essence of the genre with its gentle, melodic tunes and introspective lyrics:

And that's it from me—hope you've enjoyed this week's curated assortment of genart and tech shenanigans!

Now that you find yourself at the end of the Newsletter, you might as well share it with some of your friends - word of mouth is still one of the best ways to support me! Otherwise come and say hi over on my socials - and since we've also got a discord now, let me shamelessly plug it again here. Come join and say hi!

If you've read this far, thanks a million! If you're still hungry for more generative art things, you can check out last week's issue of the newsletter here:

And a backlog of all previous issues can be found here:

Cheers, happy coding, and again, hope that you have a fantastic week! See you in the next one!

- Gorilla Sun 🌸