Welcome back everyone 👋 and a heartfelt thank you to all new subscribers who joined in the past week!

This is the 69th issue of the Gorilla Newsletter - a weekly online publication that sums up everything noteworthy from the past week in generative art, creative coding, web3, tech and AI - with a spritz of Gorilla updates.

If it's your first time here, we've also got a Discord server now, where we nerd out about all sorts of genart and tech things - if you want to connect with other readers of the newsletter, come and say hi: here's an invite link!

That said, I hope that you're all having an awesome start to the new week! Here's your weekly roundup 👇

All the Generative Things

Artificial Life Environment aka A.LI.EN

ALIEN is not only the most massive artificial life simulation out there, but also one of the longest ongoing software projects of that kind that I'm aware of; finding its beginnings with a paper of the same name back in 2008, it's been Christian Heinemann's brainchild ever since. A timeline over on the project's official page indicates that the first version of the software was released to the public in 2018, a decade after the initial paper had been published:

To explain ALIEN in a few words, it's essentially a massive particle based simulation engine that models digital organisms within an artificial ecosystem. The digital organisms in these simulations are modeled as particle clusters (networks/graphs of connected nodes) that emerge in an entirely evolutionary manner, replicating and re-configuring themselves autonomously to adapt to the environmental conditions that they're faced with.

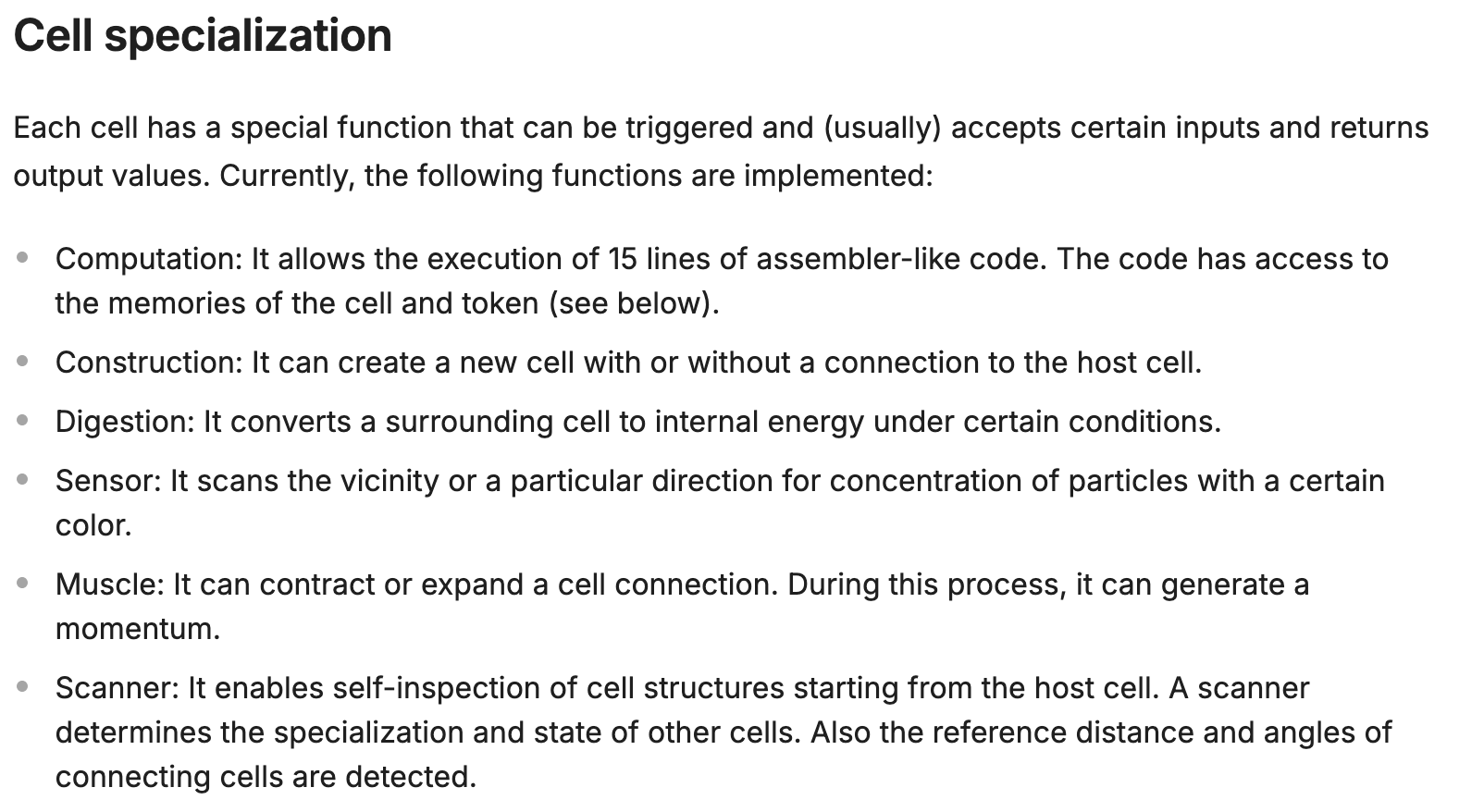

While I won't claim to understand a lot of the complexities of the project, skimming over the docs does reveal a little bit more about the technical details behind how it all works. The engine that powers ALIEN is structured in multiple layers, each of which handles a particular aspect of the simulation. For instance, the computation of physical interactions between particles is entirely decoupled from the information processing layer that's required for modeling the more complex behaviors that can arise between the different types of particles:

The software can handle millions of particles at a time, by virtue of being CUDA accelerated and rendered with OpenGL. As for the digital organisms themselves, the particles that compose them are unsurprisingly called cells, which are made out of programmable properties and functions:

But that's just the tip of the iceberg. There's a lot more cool stuff to it, and I recommend exploring the docs for yourself (they might be a bit out of date because the latest version of the software just introduced a number of new updates). A recent big milestone that put the project on my radar again was it winning the Virtual Creature Competition prize at this year's ALIFE conference - and deservedly so: the trailer video is nothing short of stunning, showcasing many of the fascinating emergent virtual creatures:

Beyond that, the software is also open-source and available under the BSD-3-Clause license - you can find the GitHub repo here:

Procedural Street Modeling with Tensor Fields

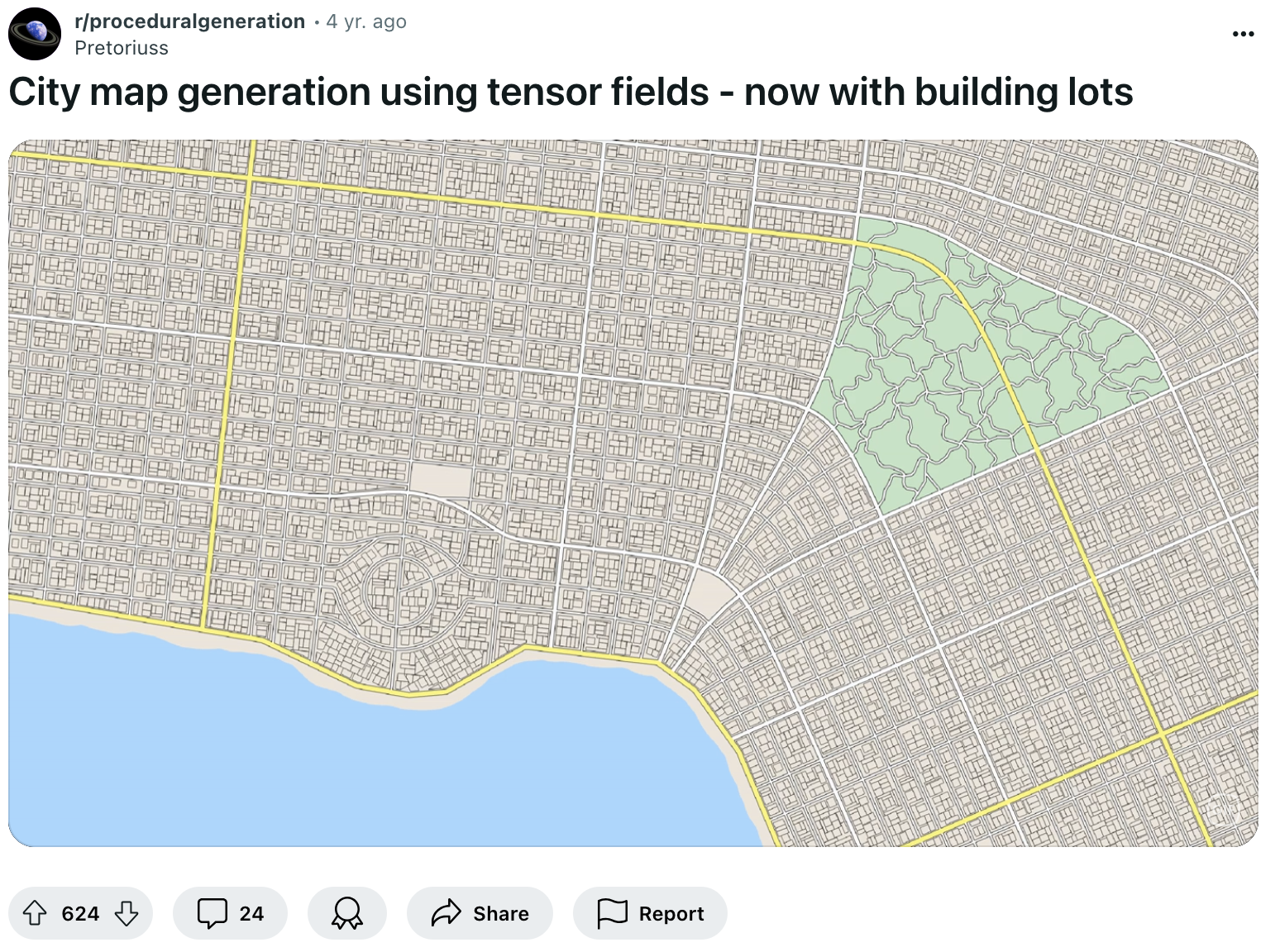

Coincidentally, this week I also came across another interesting paper from 2008 titled Interactive Procedural Street Modeling, which revolves around leveraging tensor fields to model realistic road networks that resemble real-life city maps. This rabbit hole ensued from a post over on the r/procedural subreddit that showcases a short GIF where a tensor field is transformed into such a city map:

Here you might ask, what are tensor fields? And how do we actually turn them into road maps?

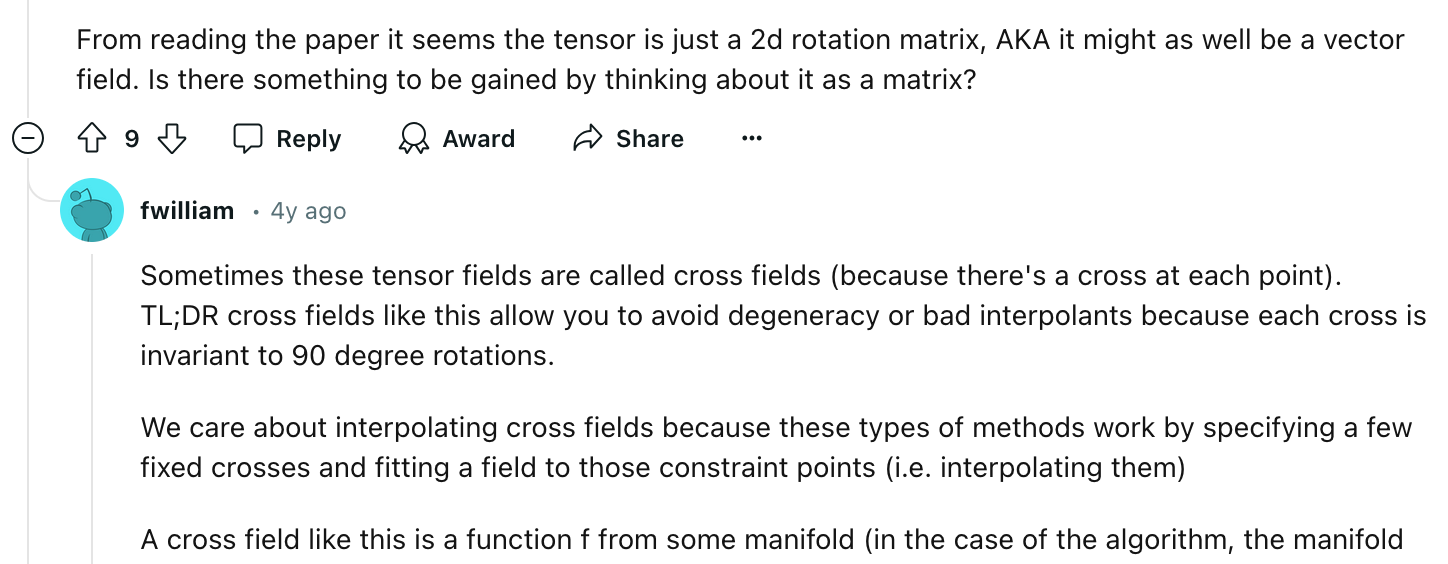

Starting with a single tensor, it's basically a small 2x2 matrix that draws its values from an angle in an underlying scalar field. Arranging many of these tensors together, we get a tensor field - nothing more than a grid where each point holds its own tensor. The interesting part here is that each one of these tensors has its own eigenvectors, which reveal the principal directions of the tensor: a major and a minor direction that, for the symmetric tensors used here, are perpendicular to each other. If we now follow one of these directions from point to point, we can trace lines through the tensor field, more commonly referred to as hyperstreamlines.

This probably sounds a lot like a flowfield at this point, but there are some differences. In a regular vector field a streamline is a curve that is tangent to the vector field at every point, meaning that streamlines show the path that a particle would follow if it moved along the flow of the vector field. Attaching "hyper" as a prefix to the term extends the concept of a streamline to more complex fields, where individual points can describe multiple directions and magnitudes, and in turn require more complex representations. Long story short, hyperstreamlines are tangent to one of the principal directions indicated by a tensor at each point in the field - we then use these lines to define the layout of roads and blocks in the city map.
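To make this a little more tangible, here's a minimal Python sketch of the idea. It assumes the common parameterization where a tensor is built from an angle θ as [[cos 2θ, sin 2θ], [sin 2θ, -cos 2θ]]; the function names, the `ring_field` example and the simple fixed-step tracing are my own illustrative choices, not the paper's implementation.

```python
import math

def tensor_from_angle(theta, strength=1.0):
    """Symmetric, traceless 2x2 tensor whose major eigenvector points along theta."""
    c, s = math.cos(2 * theta), math.sin(2 * theta)
    return ((strength * c, strength * s), (strength * s, -strength * c))

def major_direction(tensor):
    """Unit major eigenvector of a tensor built by tensor_from_angle (strength > 0)."""
    theta = 0.5 * math.atan2(tensor[0][1], tensor[0][0])
    return (math.cos(theta), math.sin(theta))

def minor_direction(tensor):
    """Unit minor eigenvector: the major one rotated by 90 degrees."""
    dx, dy = major_direction(tensor)
    return (-dy, dx)

def ring_field(x, y):
    """Hypothetical example field: major directions circle around the origin."""
    return tensor_from_angle(math.atan2(y, x) + math.pi / 2)

def trace_hyperstreamline(field, start, direction_of, step=0.05, n_steps=200):
    """Follow one eigenvector family through the field with fixed-size steps."""
    x, y = start
    points = [(x, y)]
    previous = None
    for _ in range(n_steps):
        dx, dy = direction_of(field(x, y))
        # Eigenvectors have no sign, so flip the direction whenever it would
        # make the line double back on itself.
        if previous is not None and dx * previous[0] + dy * previous[1] < 0:
            dx, dy = -dx, -dy
        x, y = x + step * dx, y + step * dy
        previous = (dx, dy)
        points.append((x, y))
    return points

# Major lines of ring_field behave like ring roads, minor lines like radial ones.
ring_road = trace_hyperstreamline(ring_field, (1.0, 0.0), major_direction, step=0.01, n_steps=50)
```

The sign flip inside the loop is the main practical difference to tracing a regular flowfield: a vector has a heading, whereas an eigenvector only describes an axis.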

If you want a comprehensive resource about eigenvalues and eigenvectors in video format, I highly recommend 3Blue1Brown's in-depth explanation:

The use of tensor fields is thus an elegant solution for the purpose of city maps, as it naturally accommodates the dual-directional nature of urban street layouts. Looking into the topic a bit more, I came across another Reddit post from 4 years ago that tackles the same topic, but with the added perk of having a comment section where some of the involved notions are discussed in more detail:

Just as an interesting aside, before explaining their own approach the authors of the paper also point out a previous method described in a 2001 paper, which leverages L-systems for the purpose of modeling cities procedurally:

Who is an “Artist” in Software Era

I was going to cram this one into the AI Corner section based entirely on the title of the post, but after giving it a read it definitely pertains to generative art in general. Dr. Lev Manovich shares the second chapter of a book that he's currently in the process of writing. Titled "Artificial Aesthetics: Generative AI, Art and Visual Media", the book tackles several of the contemporary issues and open-ended questions that arise around these related fields.

Titled "Who is an “Artist” in Software Era?", the second chapter of the book raises important questions around the nature of creativity in this digital age, in particular the role that AI plays in it. Manovich explains that we need to reconsider the criteria by which we judge artistic achievement, especially as digital tools increasingly mediate the creative process - today's digital media externalize the creative process into discrete, programmable steps, fundamentally altering how art is produced and learned:

Given this context, Manovich also questions what it would mean for an AI to compete with human artists. Should AI strive to replicate the creative processes and outcomes of a pre-digital era, or should it be measured against contemporary digital practices? Furthermore, should we consider AI that assists human creativity as part of the evaluation? Manovich implicitly calls for a broader, more nuanced discussion about the intersection of technology, creativity, and aesthetics in the 21st century. I also recommend checking out his website where he shares more of his writing:

Tiny Glade Release + Graphics Programming Conference 2024

If you're a fan of Townscaper, a relaxing town building sandbox game that I believe to be well known in the genart scene, you'll have to mark your calendar, because a new game of this kind is coming to... town! (ba-dum-ts 🥁) Tiny Glade! Being one of the most anticipated games over on Steam, with over a million wishlists at this point in time, it's bound to be a gigantic hit right from day one!

I've been following Tiny Glade's development for the past couple of years now; whenever one of its two creators, Tomasz Stachowiak (@h3r2tic) and Anastasia Opara (@anastasiaopara), showcases a progress video on Twitter, I'm completely blown away by the seemingly magical proceduralism of the game and the incredibly satisfying manner in which geometries adjoin each other seamlessly.

If you'd like to learn about the story behind Tiny Glade, the early days, some of the challenges the duo has faced and a little bit about the technical side, like the game engine that's used and how it works, an interview with them was published earlier this year over on the 80 Level website:

As an added bonus, through Tiny Glade's upcoming launch I also learned about the Graphics Programming Conference that's going to be held later this year in November - and from what it looks like, it's going to include more than a few interesting talks about computer graphics, one of them about some of the tech behind Tiny Glade. You can learn more here:

Matt Henderson's Algorithmic Experiments

On this week's episode of how to torture your sorting algorithm: Matt Henderson probes how different sorting algorithms react to injections of random values while they are in full swing. The resulting animations are surprisingly satisfying to watch, as the algos eagerly try to bring order to the seemingly unsatisfiable data that they're operating on:

I believe it's a big missed opportunity to not have made a sonified version of these as well, for endless procedural music 😛. While you're at it, make sure to check out Matt's profile; there are more than a few interesting experiments worth taking a second to look at.
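If you'd like to poke at this kind of experiment yourself, here's a rough Python sketch of what I imagine the setup could look like. It's an assumption on my part and not Matt's actual code: a bubble sort runs pass by pass, a random value gets injected every few passes, and we track the number of inversions (out-of-order pairs) as a measure of remaining disorder. All names here are made up for illustration.

```python
import random

def bubble_pass(values):
    """One left-to-right bubble sort pass over the list, in place."""
    for i in range(len(values) - 1):
        if values[i] > values[i + 1]:
            values[i], values[i + 1] = values[i + 1], values[i]

def count_inversions(values):
    """Number of out-of-order pairs; 0 means the list is sorted."""
    n = len(values)
    return sum(1 for i in range(n) for j in range(i + 1, n) if values[i] > values[j])

def sort_under_attack(values, passes, inject_every, rng):
    """Run bubble sort passes while overwriting a random slot every few passes.

    Returns the inversion count after each pass. inject_every=0 disables injections.
    """
    history = []
    for p in range(passes):
        if inject_every and p % inject_every == 0:
            values[rng.randrange(len(values))] = rng.random()
        bubble_pass(values)
        history.append(count_inversions(values))
    return history

rng = random.Random(42)
data = [rng.random() for _ in range(30)]
disorder = sort_under_attack(data, passes=60, inject_every=5, rng=rng)
```

Plotting `disorder` over time (or mapping it to pitch, for the sonified version) shows the tug of war between the algorithm and the injected values.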

Other Cool Generative Things

- Quick reminder that most talks given at the July 2024 genart summit in Berlin have now been released over on YouTube.

- Inigo Quilez has also been making YouTube shorts to showcase interesting tidbits; his most recent shows the interesting patterns that emerge when the pixels of an image, in raster order, are re-ordered along a Hilbert curve.

- Yohei Nishitsuji is yet another Japanese code golfing wizard, whose Tsubuyaki GLSL tweets have made quite the buzz - check out some of them.

- Lygia's p5js raymarching demo gets an update.
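As a small aside on the Hilbert curve item above, here's a minimal Python sketch of one way to re-order raster pixels along a Hilbert curve. It uses the standard iterative distance-to-coordinate mapping and assumes a square image with a power-of-two side length, stored as a list of rows; the exact mapping used in the short may differ, and the function names are my own.

```python
def hilbert_d2xy(order, d):
    """Map a distance d along a Hilbert curve to (x, y) on a 2^order by 2^order grid."""
    n = 1 << order
    x = y = 0
    t = d
    s = 1
    while s < n:
        rx = 1 & (t // 2)
        ry = 1 & (t ^ rx)
        # Rotate/flip the current quadrant so the sub-curves connect end to end.
        if ry == 0:
            if rx == 1:
                x, y = s - 1 - x, s - 1 - y
            x, y = y, x
        x += s * rx
        y += s * ry
        t //= 4
        s *= 2
    return x, y

def reorder_along_hilbert(image):
    """Place the k-th pixel in raster order at the k-th position along the curve."""
    n = len(image)
    if n == 0 or n & (n - 1) != 0 or any(len(row) != n for row in image):
        raise ValueError("expected a square image with a power-of-two side length")
    order = n.bit_length() - 1
    flat = [pixel for row in image for pixel in row]  # raster order
    out = [[None] * n for _ in range(n)]
    for d, pixel in enumerate(flat):
        x, y = hilbert_d2xy(order, d)
        out[y][x] = pixel
    return out
```

Because consecutive positions along the curve are always direct neighbors on the grid, pixels that sat next to each other in a raster row end up clustered together in 2D, which is where the interesting patterns come from.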

The Free Mint Meta

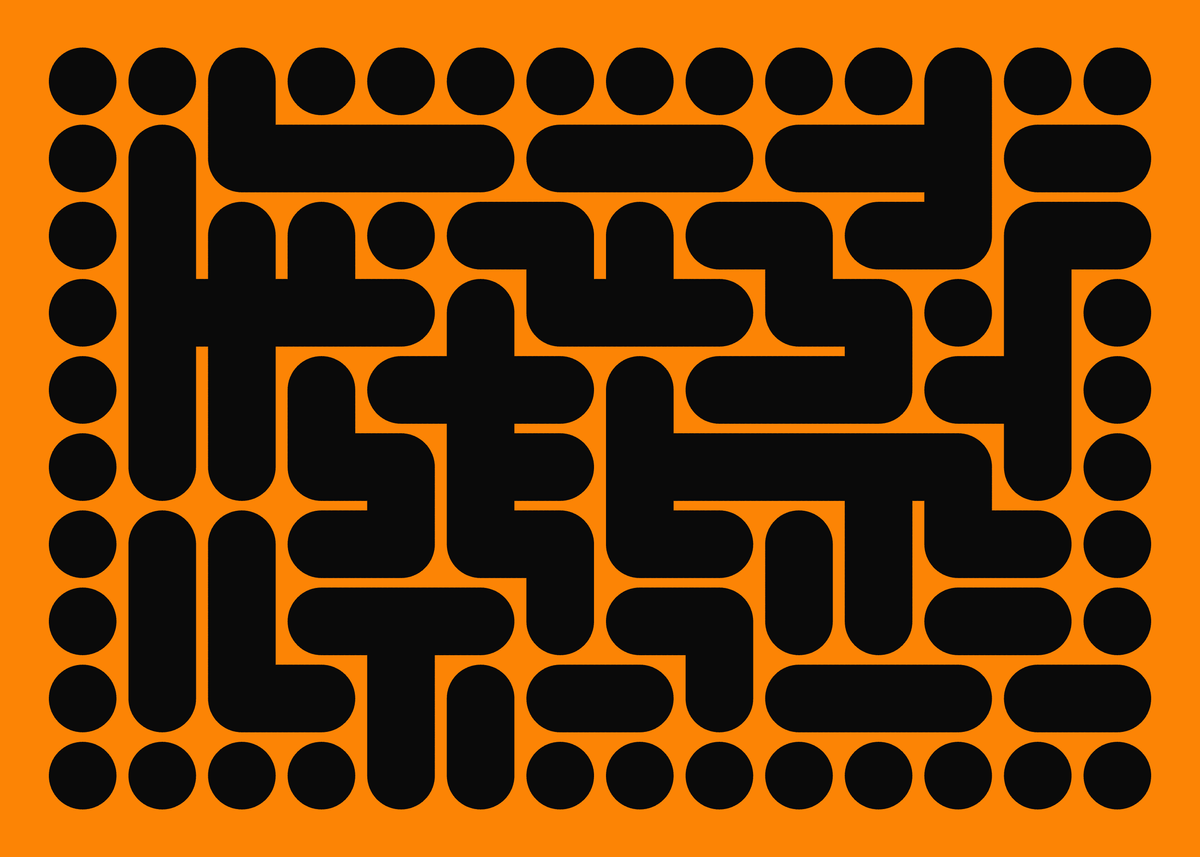

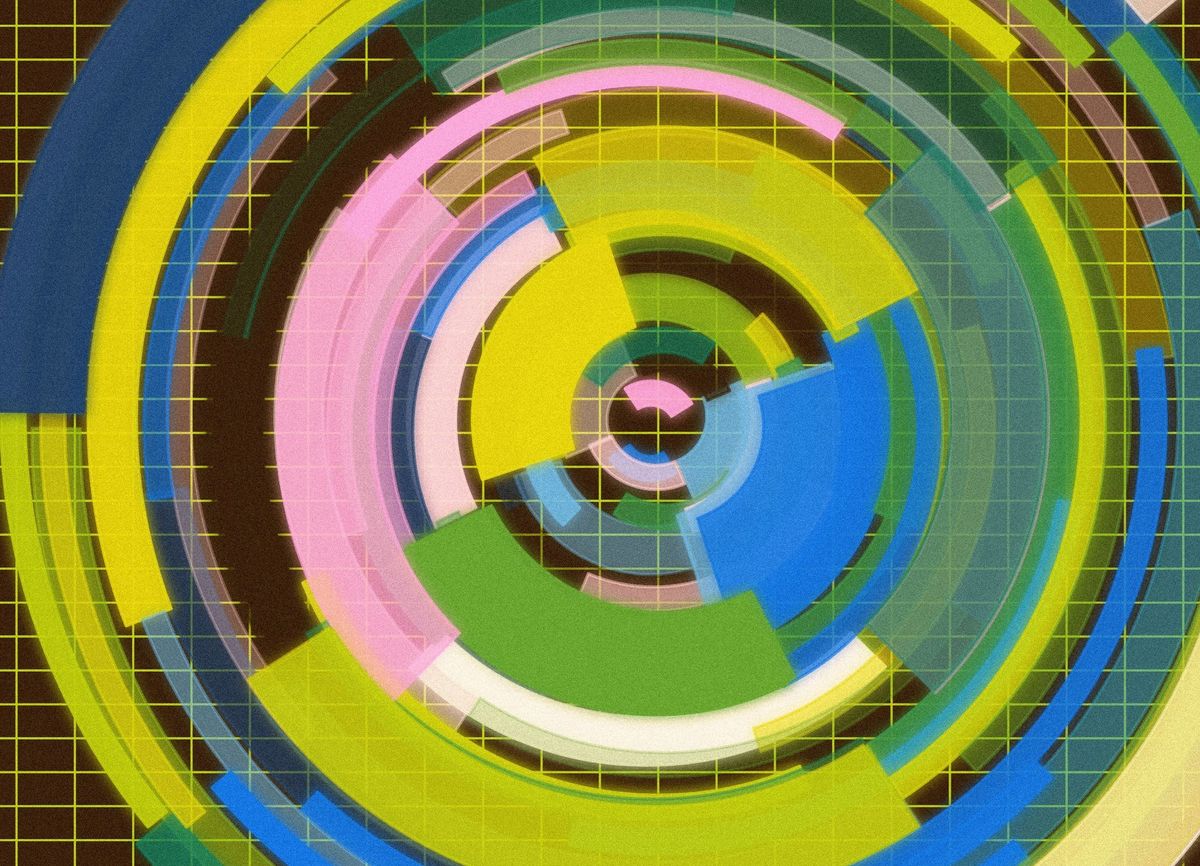

In light of Foundation's Rodeo taking off in the past weeks, a big polarized discussion around this type of micro-mint has taken shape; while some artists have wholeheartedly embraced free mints, others have expressed themselves negatively towards the paradigm. After all, it makes sense: distributing your work with a virtually limitless supply and at a negligible fee does not bode well for the value of past and upcoming works. The question then is, what purpose do free mints actually serve?

Bruce voiced his thoughts on the matter and tries to answer this question. He explains that, when leveraged the right way, free mints can actually be approached as a means to enhance the value of an artist's body of work instead of being a detriment to it - community building and long-term engagement being key in this scenario:

Some of the lengthy replies to Bruce's post remain skeptical however. Others have chimed in as well and pitched their own thoughts: Marius Watz provides a different point of view, explaining that artists can repurpose these platforms to express a different body of work from what they usually do, while Sofia Garcia and Jamie Gourlay also ask interesting questions on the matter:

Link to Marius Watz' Thread | Link to Jamie Gourlay's Tweet | Link to Sofia Garcia's Tweet

Tech and Web Dev

10 years of Dear ImGui

If you're not familiar with Dear ImGui, it's an open-source, lightweight, and highly customizable graphical user interface library that's primarily used in the development of tools and applications where a quick and flexible UI is needed. With nearly 60k stars, it's currently the 13th most bookmarked GitHub repo overall - and is pretty much the industry standard when it comes to creating editor tools, debug interfaces, and in-app menus.

The term imgui is short for Immediate-Mode Graphical User Interface, a paradigm that was first conceived by Casey Muratori - there's a famous talk of his from 2005 where he explains it, but in essence it means that the interface elements are constructed and rendered in real-time, every frame, based on the current state of the application.
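To illustrate the paradigm, here's a toy Python sketch. To be clear, this is not Dear ImGui's API: `ToyUI`, `begin_frame`, `text` and `button` are hypothetical names that I made up to show the pattern, where the UI is re-declared every frame and a widget call directly reports whether it was interacted with.

```python
class ToyUI:
    """A toy immediate-mode context: no widget objects persist between frames."""

    def __init__(self):
        self.draw_list = []
        self.clicked_label = None

    def begin_frame(self, clicked_label=None):
        # Every frame starts from scratch; clicked_label stands in for real input.
        self.draw_list = []
        self.clicked_label = clicked_label

    def text(self, message):
        self.draw_list.append(f"text: {message}")

    def button(self, label):
        # Declaring the button and querying its click state happen in one call.
        self.draw_list.append(f"button: [{label}]")
        return self.clicked_label == label


def draw_frame(ui, state):
    """The whole interface is a plain function of the application state."""
    ui.text(f"count = {state['count']}")
    if ui.button("increment"):
        state["count"] += 1
    if state["count"] >= 2:
        ui.text("reached two!")  # only exists in frames where the condition holds


ui = ToyUI()
state = {"count": 0}
for click in (None, "increment", "increment"):
    ui.begin_frame(clicked_label=click)
    draw_frame(ui, state)
```

There's no callback registration and no widget tree to keep in sync with the application state, which is a big part of why this style is so popular for debug and tooling UIs.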

The initial version of Dear ImGui was released exactly ten years ago, on August 11th 2014. Its creator Omar Cornut (@ocornut) wrote a lengthy and deeply insightful post to commemorate and celebrate this milestone - naturally in the form of a GitHub issue on the repo itself - where he shows some stats, recounts the early days, and provides reflections on the past 10 years of maintaining a software project of this scale:

It's Time to Talk about CSS5

A recent article published over on Smashing Magazine titled "It's time to talk about CSS5" immediately hooked me - what do you mean CSS5? What happened to CSS4? Wasn't CSS3 the last major update to the language in... wait, let me check my calendar - 2012?

Those are the questions that Brecht De Ruyte tries to address with his article, pointing out the lack of a cohesive and recognizable way to define the evolution of the tech since the CSS3 era — some of the features that were introduced over 10 years ago are still frequently described as being "modern", which is to say that the term CSS3 is a bit meaningless nowadays:

This is where CSS-Next comes in; the article introduces it as a community driven initiative to create CSS "eras" and categorize the features that have been introduced over the past decades by the eras in which they were introduced. By defining these eras, it becomes easier for everyone involved in web development to keep up with CSS changes, much like how CSS3 once encapsulated a significant leap forward in web styling capabilities. This proposal is therefore not just about versioning for the sake of it; it's about creating a framework that developers, educators, and employers can easily understand and use. It would also acknowledge that CSS is a living language, constantly evolving with new features that need to be recognized in a structured way.

The overarching challenge, however, will be the adoption of these new terms. The success of CSS3 was partly due to its strong branding and the significant leap it represented at the time. For CSS4, CSS5, and beyond to gain similar traction, the community will need to see value in these distinctions. This is where marketing and education will play a crucial role - ensuring that these new "eras" are not just technically accurate but also widely recognized and adopted.

The article ends with a call to action, asking developers to weigh in with their own thoughts on the matter and join the CSS-Next group. If you want to read more about this, two important posts are Jen Simmons' issue that was opened back in 2020 over on the W3C repo:

As well as this article over on CSS-Tricks, fittingly titled CSS4:

AI Corner - Trainable Neural Network in tldraw

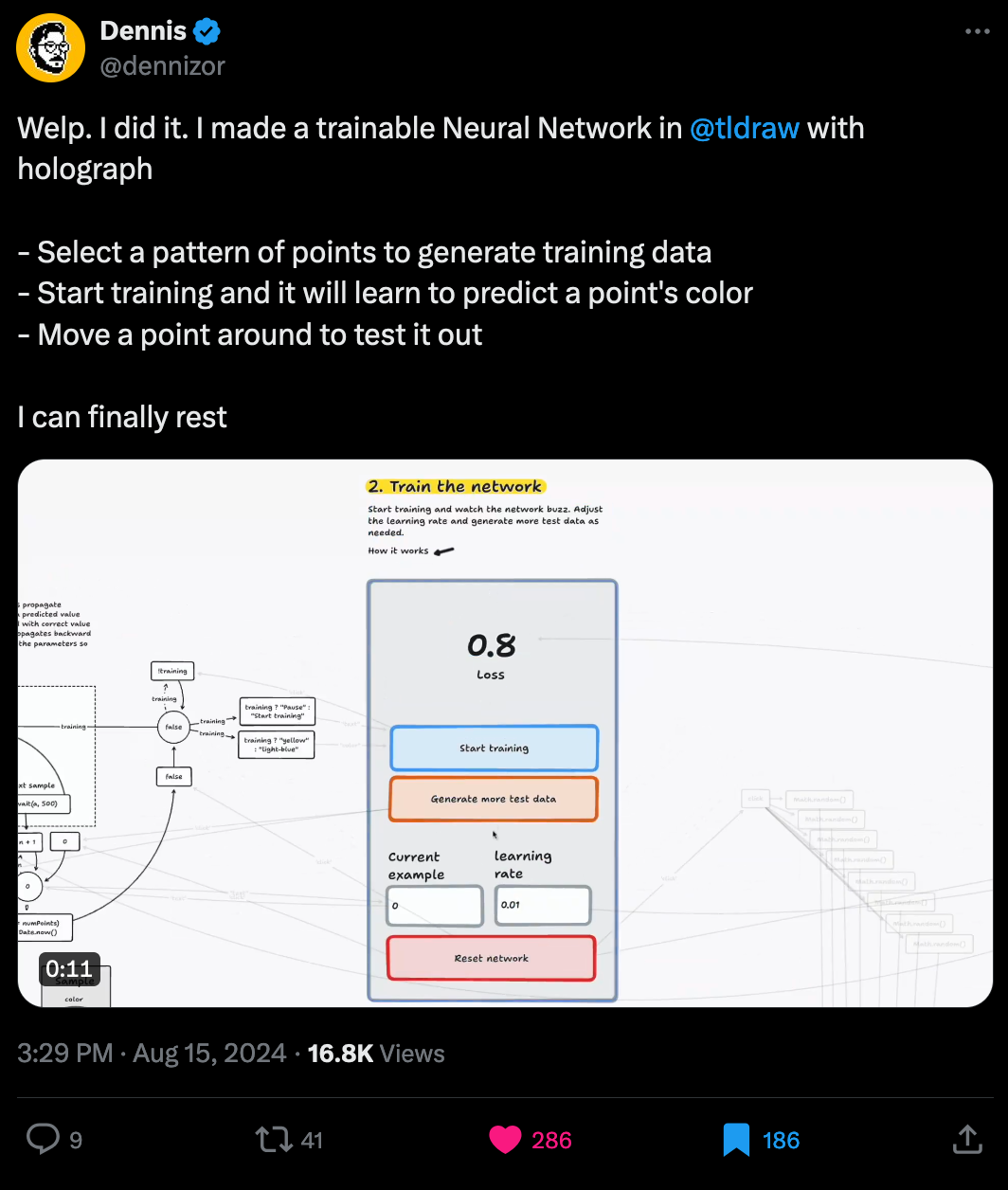

If you're not familiar with tldraw, it's a library for creating infinite canvas experiences for the web - basically a very customizable digital whiteboard onto which you can scribble down diagrams and create flowcharts of all sorts. TwiX user Dennis is the creator of holograph.so, a visual programming language that is built on top of tldraw - it's a bit reminiscent of Samuel Timbo's Unit, minus the physics part, which I featured in issue #38 of the newsletter.

In his most recent endeavor Dennis succeeds at the impressive feat of creating a fully functioning and trainable neural network, entirely within the constraints of the visual framework he's created:

On a related AI note, last year tldraw released an AI powered feature that made quite a buzz: titled "make real", it let you magically generate websites from your hand-drawn diagrams. You can read about it here.

Music for Coding

Artifacts is a real treat of an album, blending soulful jazzy sounds with hip-hop elements to create a thick lo-fi vibe that's hard not to get lost in. After some digging, I found out that SLUG isn't just one artist but rather a collective of musicians from the US and Europe, that work together under their own record label called Coleslaw Records - and they’ve already got a solid catalogue over on Bandcamp if you want to listen to more of them. But anyway, that's beside the point, enjoy these phenomenally jazzy sounds:

And that's it from me—hope you've enjoyed this week's curated assortment of genart and tech shenanigans!

Now that you find yourself at the end of the newsletter, you might as well share it with some of your friends - word of mouth is still one of the best ways to support me! Otherwise come and say hi over on my socials - and since we've also got a Discord now, let me shamelessly plug it again here. Come join and say hi!

If you've read this far, thanks a million! If you're still hungry for more generative art things, you can check out last week's issue of the newsletter here:

And a backlog of all previous issues can be found here:

Cheers, happy coding, and again, hope that you have a fantastic week! See you in the next one!

- Gorilla Sun 🌸