Welcome back everyone 👋 and a heartfelt thank you to all new subscribers who joined in the past week!

This is the 76th issue of the Gorilla Newsletter - a weekly online publication that sums up everything noteworthy from the past week in generative art, creative coding, tech, and AI.

If it's your first time here, we've also got a Discord server now, where we nerd out about all sorts of genart and tech things. If you want to connect with other readers of the newsletter, come and say hi: here's an invite link!

That said, I hope you're all having an awesome start to the new week! Here's your weekly roundup 👇

All the Generative Things

Generative Floor Plan Design via Voronoi Diagrams

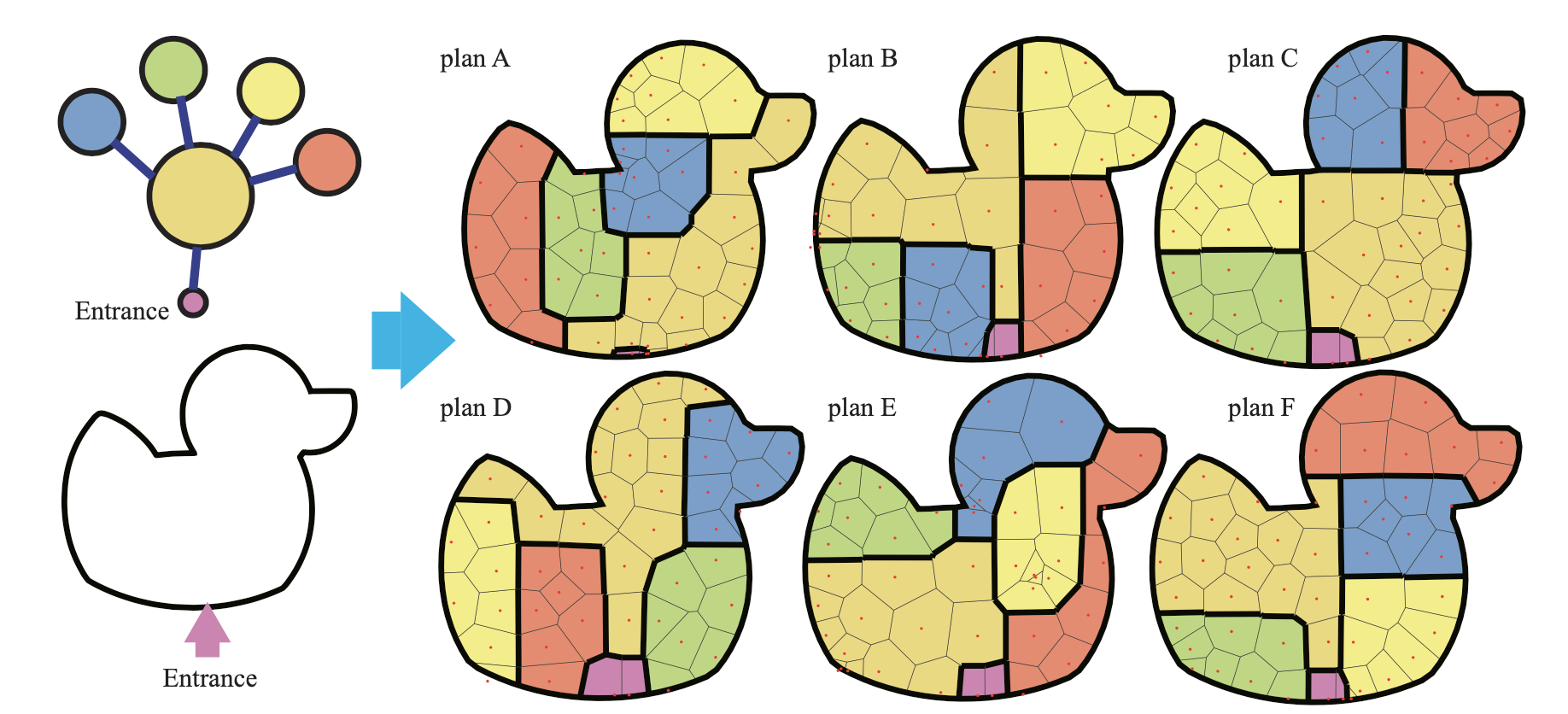

This week's highlight is a research paper presented at the Pacific Graphics 2024 conference by a team of researchers from the University of Tokyo. Titled "Free-form Floor Plan Design using Differentiable Voronoi Diagrams," the paper introduces an innovative method for automatically generating floor plan designs. Here are some of the visualizations shared in the GitHub repo accompanying the paper:

Similar to Lloyd’s algorithm, the method builds on the fact that each Voronoi cell is entirely defined by its site (also called its seed, and loosely referred to as its centroid in this write-up): a cell's polygonal region is simply the set of all points that lie closer to its site than to any other site. The challenge is then to figure out how to move the centroids such that rooms (represented by clusters of same-colored cells) take shape, and the boundaries between them align with the coordinate axes.
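To make the "nearest site" definition concrete, here's a minimal sketch in plain Python (our own illustration, not code from the paper's repo) that approximates a Voronoi diagram by labeling every sample point of a grid over the unit square with the index of its nearest site. The site coordinates and the grid resolution are arbitrary assumptions, and unlike the paper's differentiable formulation, this rasterized version only computes labels.

```python
import math


def nearest_site(point, sites):
    """Index of the site closest to `point`; ties go to the lower index."""
    px, py = point
    return min(range(len(sites)), key=lambda i: math.hypot(px - sites[i][0], py - sites[i][1]))


def rasterize_voronoi(sites, resolution):
    """Label each sample point of a resolution x resolution grid over the unit
    square with the index of the Voronoi cell (nearest site) it falls into."""
    step = 1.0 / resolution
    return [
        [nearest_site(((col + 0.5) * step, (row + 0.5) * step), sites) for col in range(resolution)]
        for row in range(resolution)
    ]


# Hypothetical example: three sites, one printed character per sample point.
sites = [(0.25, 0.25), (0.75, 0.25), (0.5, 0.8)]
for row in rasterize_voronoi(sites, 8):
    print("".join(str(label) for label in row))
```

The brute-force nearest-site search costs one distance per site for every sample point, which is fine for small sketches like this one.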

The procedure starts by randomly assigning room types to each cell in the diagram (represented by a color here), where the number of cells per room is based on the room’s relative size compared to the overall area that is meant to be subdivided. The method then iteratively adjusts the centers of the Voronoi cells until a meaningful floor plan takes shape. The only input required is an adjacency graph that defines the rooms and their relative sizes.
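The initial room assignment could look something like the following sketch. The function name `assign_rooms`, the use of relative sizes as plain numbers, and the rounding strategy (largest remainders, so the counts always add up to the number of cells) are our own assumptions; the paper's code may distribute cells differently.

```python
import random
from collections import Counter


def assign_rooms(num_cells, room_sizes, seed=0):
    """Randomly assign a room to each Voronoi cell, giving every room a number
    of cells proportional to its relative size.

    room_sizes maps a room name to its relative size (any positive numbers;
    they are normalized here). Rounding leftovers go to the rooms with the
    largest fractional remainders so the counts always sum to num_cells.
    """
    total = sum(room_sizes.values())
    exact = {room: num_cells * size / total for room, size in room_sizes.items()}
    counts = {room: int(value) for room, value in exact.items()}
    leftover = num_cells - sum(counts.values())
    by_remainder = sorted(exact, key=lambda room: exact[room] - counts[room], reverse=True)
    for room in by_remainder[:leftover]:
        counts[room] += 1

    labels = [room for room, count in counts.items() for _ in range(count)]
    random.Random(seed).shuffle(labels)  # random initial placement of room types
    return labels


labels = assign_rooms(20, {"living": 5, "kitchen": 3, "bath": 2})
print(Counter(labels))  # 10 living, 6 kitchen and 4 bath cells, in shuffled order
```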

The paper treats this relaxation task as an optimization problem. If you're not familiar, in an optimization problem the goal is to find the best solution by adjusting certain variables (in this case, the positions of the Voronoi centroids) to minimize or maximize a specific objective, here called a "loss function." In this case there are multiple objectives (multiple loss functions) that control various aspects of the desired floor plan, such as the individual room sizes, the alignment of the walls between them, and how the rooms connect.

By minimizing these losses, we can determine how to nudge the positions of the centroids so that the system produces a well-organized and functional layout: the gradient of the loss with respect to the centroid positions tells us in which direction each centroid needs to move to reduce the loss. The authors end up using five different losses to guide the process:
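As a toy illustration of "nudging along the gradient", here's a sketch of plain gradient descent on one deliberately simple loss: the squared distance between selected sites and fixed anchor points, loosely resembling the Fix Loss in the list below. All names and parameter values are hypothetical, and the real losses in the paper are computed through a differentiable Voronoi diagram, which this sketch doesn't attempt.

```python
def fix_loss(sites, anchors):
    """Toy loss: squared distance between selected sites and fixed anchor points.

    anchors maps a site index to the (x, y) position it should be pinned to.
    """
    return sum((sites[i][0] - ax) ** 2 + (sites[i][1] - ay) ** 2 for i, (ax, ay) in anchors.items())


def fix_loss_gradient(sites, anchors):
    """Analytic gradient of fix_loss with respect to every site coordinate."""
    grad = [[0.0, 0.0] for _ in sites]
    for i, (ax, ay) in anchors.items():
        grad[i][0] = 2.0 * (sites[i][0] - ax)
        grad[i][1] = 2.0 * (sites[i][1] - ay)
    return grad


def gradient_descent(sites, anchors, learning_rate=0.1, steps=100):
    """Repeatedly move each site a small step against the gradient."""
    sites = [list(p) for p in sites]
    for _ in range(steps):
        grad = fix_loss_gradient(sites, anchors)
        for p, g in zip(sites, grad):
            p[0] -= learning_rate * g[0]
            p[1] -= learning_rate * g[1]
    return sites


sites = [(0.1, 0.9), (0.5, 0.5)]
anchors = {0: (0.0, 0.0)}  # hypothetical: pin site 0 (e.g. the entrance) to a corner
relaxed = gradient_descent(sites, anchors)
print(relaxed, fix_loss(relaxed, anchors))
```

Because the gradient here is 2(p - a), each step shrinks the remaining distance to the anchor by a constant factor of (1 - 2 * learning_rate), while sites without an anchor receive a zero gradient and stay put.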

- An Area Loss that ensures each room matches its target size by minimizing the difference between current and desired areas.

- A Wall Loss that simplifies and aligns the walls between rooms.

- A Lloyd Loss, inspired by Lloyd’s algorithm, that keeps the Voronoi sites evenly distributed to avoid distorted room shapes.

- A Topology Loss that maintains room connectivity, preventing adjacent rooms from disconnecting or splitting.

- A Fix Loss that locks certain key locations in place during the optimization process, such as the entrance of the plan.
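The Area Loss is the easiest of the five to write down. Here's a hedged sketch, assuming each cell's area is already known (in the paper it would come out of the differentiable Voronoi diagram) and using a plain sum over rooms of (current area - target area) squared; the exact weighting and normalization in the paper may differ, and the function names are our own.

```python
def room_areas(cell_areas, cell_rooms):
    """Sum the areas of all Voronoi cells that belong to the same room."""
    areas = {}
    for area, room in zip(cell_areas, cell_rooms):
        areas[room] = areas.get(room, 0.0) + area
    return areas


def area_loss(cell_areas, cell_rooms, target_areas):
    """Sum of squared differences between current and target room areas."""
    current = room_areas(cell_areas, cell_rooms)
    return sum((current.get(room, 0.0) - target) ** 2 for room, target in target_areas.items())


# Hypothetical example: four cells split across three rooms.
cell_areas = [0.2, 0.2, 0.3, 0.3]
cell_rooms = ["living", "living", "kitchen", "bath"]
targets = {"living": 0.5, "kitchen": 0.25, "bath": 0.25}
print(room_areas(cell_areas, cell_rooms))
print(area_loss(cell_areas, cell_rooms, targets))
```

Here the living room is 0.1 too small and the other two rooms are each 0.05 too large, so the loss is 0.01 + 0.0025 + 0.0025 = 0.015 (up to floating-point rounding).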

There's a lot more to optimization problems that I'm glossing over here, but in essence these five losses provide enough information for a floor plan to self-assemble. The optimization runs for many iterations until the loss converges, meaning the cells stabilize and further iterations no longer improve the layout; at that point we should have arrived at a coherent floor plan. I actually couldn't resist trying my hand at re-implementing the algorithm, since I believe the technique could be quite a potent pattern generator, but it turns out that it's going to take me a bit longer than just one Saturday afternoon.
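The "iterate until convergence" loop, and the idea behind the Lloyd Loss, can be illustrated with the classic, non-differentiable Lloyd's algorithm itself. This sketch is our own and not the paper's method: it approximates each cell by grid samples, moves every site to the centroid of its cell, and stops once no site moves more than a small tolerance. The values of `resolution`, `tolerance` and `max_iterations` are assumptions.

```python
import math


def lloyd_relaxation(sites, resolution=50, tolerance=1e-4, max_iterations=100):
    """Discrete Lloyd's algorithm on the unit square.

    Each iteration assigns grid sample points to their nearest site, then moves
    every site to the centroid of its samples. Stops once no site moves more
    than `tolerance`. Returns the relaxed sites and the number of iterations.
    """
    sites = [tuple(p) for p in sites]
    step = 1.0 / resolution
    samples = [((c + 0.5) * step, (r + 0.5) * step) for r in range(resolution) for c in range(resolution)]
    iteration = 0
    for iteration in range(1, max_iterations + 1):
        sums = [[0.0, 0.0, 0] for _ in sites]  # running x-sum, y-sum and count per cell
        for x, y in samples:
            i = min(range(len(sites)), key=lambda k: (x - sites[k][0]) ** 2 + (y - sites[k][1]) ** 2)
            sums[i][0] += x
            sums[i][1] += y
            sums[i][2] += 1
        new_sites = [
            (sx / n, sy / n) if n else site  # keep a site whose cell captured no samples
            for (sx, sy, n), site in zip(sums, sites)
        ]
        shift = max(math.hypot(a[0] - b[0], a[1] - b[1]) for a, b in zip(sites, new_sites))
        sites = new_sites
        if shift < tolerance:  # converged: sites have stopped moving
            break
    return sites, iteration


relaxed, iterations = lloyd_relaxation([(0.1, 0.1), (0.2, 0.15), (0.9, 0.8)])
print(iterations, relaxed)
```

In the paper, a Lloyd-style term is one of several losses optimized jointly, rather than a standalone update rule like this one.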

Differentiably Reversing Conway’s Game of Life

Computing the next state for a given configuration of Conway’s Game of Life is trivial: you only need to determine which rule applies to each cell given the state of its neighbours. But have you ever wondered whether it’s possible to compute the previous state of a given configuration? This is the topic of a recent article that picked up steam on Hacker News, in which Kartik Chandra, aka Comfortably Numbered, investigates how to find predecessors of Life configurations that depict portraits:
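For reference, the forward direction really is just a few lines. A minimal sketch, assuming a wrap-around (toroidal) grid stored as lists of 0s and 1s; the function name `life_step` is our own:

```python
def life_step(grid):
    """Compute the next Game of Life generation on a wrap-around (toroidal) grid.

    grid is a list of rows containing 0 (dead) or 1 (alive).
    """
    rows, cols = len(grid), len(grid[0])
    nxt = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            neighbours = sum(
                grid[(r + dr) % rows][(c + dc) % cols]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
            )
            # Birth with exactly 3 neighbours, survival with 2 or 3.
            if neighbours == 3 or (grid[r][c] == 1 and neighbours == 2):
                nxt[r][c] = 1
    return nxt


blinker = [[0] * 5 for _ in range(5)]
for c in (1, 2, 3):
    blinker[2][c] = 1  # horizontal bar of three cells
for row in life_step(blinker):
    print("".join("#" if cell else "." for cell in row))
```

The blinker flips between a horizontal and a vertical bar on every step.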

Turns out that this is quite a difficult computational problem: a given configuration can have multiple possible previous states (predecessors), or none at all. This happens because the rules of the game lead to many-to-one transitions, where different initial states can evolve into the same configuration. On top of that, certain patterns can “fizzle” out: cells simply die and stay dead, which means that whatever information they carried is irretrievably lost.
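The many-to-one nature is easy to check by brute force. This sketch (our own, with hypothetical helper names) enumerates all 2^9 = 512 patterns that fit in a 3x3 patch, embeds each one in a one-cell dead border so that births just outside the patch are counted too, and tallies how many patterns share each successor.

```python
from collections import Counter
from itertools import product


def life_step_bounded(grid):
    """One Game of Life step on a finite grid whose surroundings are always dead."""
    rows, cols = len(grid), len(grid[0])
    nxt = []
    for r in range(rows):
        row = []
        for c in range(cols):
            n = sum(
                grid[r + dr][c + dc]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0) and 0 <= r + dr < rows and 0 <= c + dc < cols
            )
            row.append(1 if n == 3 or (grid[r][c] == 1 and n == 2) else 0)
        nxt.append(tuple(row))
    return tuple(nxt)


def successor_counts(size=3):
    """Map every successor to how many size x size patterns evolve into it."""
    counts = Counter()
    for bits in product((0, 1), repeat=size * size):
        # Embed the pattern in a one-cell dead border so births just outside
        # the patch are captured; nothing further out can come alive in one step.
        padded = [[0] * (size + 2) for _ in range(size + 2)]
        for i, bit in enumerate(bits):
            padded[i // size + 1][i % size + 1] = bit
        counts[life_step_bounded(padded)] += 1
    return counts


counts = successor_counts()
empty = tuple((0,) * 5 for _ in range(5))
print(len(counts), "distinct successors for", 2 ** 9, "patterns")
print(counts[empty], "patterns die out completely")
```

Running it shows far fewer distinct successors than patterns, and a whole group of patterns (the empty patch and every lone live cell among them) that all evolve into the same empty grid, so from that grid alone there's no way to tell which one came before.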

Quite a bit of research has gone into the topic. One notable resource is a transcript of a 2012 talk by Neil Bickford, given at the biennial Gathering for Gardner conference (held in honor of none other than Martin Gardner, whose Mathematical Games column we covered back in issue #73 of the newsletter):

Luckily, there's a way to cheat and achieve the effect Kartik describes without treating it as a search problem at all. Instead, it can be framed as an optimization problem, using gradient descent to find a predecessor by iteratively nudging a starting configuration into one that evolves into the desired portrait. Here's how it works:

- We start with a random Life configuration, which we’ll call b.

- After applying the game's rules to b, we get a new configuration, b'. At this point b' looks nothing like the target image; it's essentially just another random configuration.

- But we can make b' resemble a target image (like a portrait of John Conway as a Life configuration). To do so, we measure the difference between b' and the target image t.

- Using this difference, we compute a gradient that tells us how to adjust b to get b' closer to t.

- We then make small changes to b based on this guidance, run the Game of Life again to get a new b', and repeat the process.

- By continuously refining b through this iterative method, we gradually transform it to match the target image more closely.

Since Life’s rules aren’t directly differentiable (cells are binary, either “alive” or “dead”), Kartik creates a differentiable approximation of the game by representing the grid with real numbers, where 0 means "dead" and 1 means "alive", and then applies a smooth, continuous version of the rules, using Gaussians to approximate the conditions under which cells come to life or stay alive. The resulting patterns look somewhat like Turing patterns.
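Putting the steps together, here's a small dependency-free sketch of the idea. It is not Kartik's code: the exact soft rule (Gaussian bumps of width `sigma` centred on a neighbour count of 3 for birth and on 2 or 3 for survival), the finite-difference gradient standing in for automatic differentiation, the step-halving, and all names and parameter values are our own assumptions. Cell values are kept between 0 and 1 by clamping after every step.

```python
import math
import random


def gaussian(x, sigma):
    return math.exp(-(x * x) / (2.0 * sigma * sigma))


def soft_life_step(grid, sigma=0.3):
    """Hypothetical differentiable stand-in for one Life step on a toroidal grid
    of floats in [0, 1]: Gaussian bumps replace the hard neighbour-count checks."""
    rows, cols = len(grid), len(grid[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            n = sum(
                grid[(r + dr) % rows][(c + dc) % cols]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
            )
            alive = grid[r][c]
            birth = gaussian(n - 3.0, sigma)
            survive = max(gaussian(n - 2.0, sigma), gaussian(n - 3.0, sigma))
            out[r][c] = (1.0 - alive) * birth + alive * survive
    return out


def loss(b, target):
    """Squared difference between b' (the soft successor of b) and the target t."""
    nxt = soft_life_step(b)
    return sum((x - t) ** 2 for row_x, row_t in zip(nxt, target) for x, t in zip(row_x, row_t))


def find_predecessor(target, steps=100, learning_rate=0.5, eps=1e-4, seed=0):
    rng = random.Random(seed)
    rows, cols = len(target), len(target[0])
    b = [[rng.random() for _ in range(cols)] for _ in range(rows)]  # random start b
    current = loss(b, target)
    for _ in range(steps):
        # Forward-difference gradient of the loss with respect to every cell of b.
        grad = [[0.0] * cols for _ in range(rows)]
        for r in range(rows):
            for c in range(cols):
                old = b[r][c]
                b[r][c] = old + eps
                grad[r][c] = (loss(b, target) - current) / eps
                b[r][c] = old
        # Projected step, halving the step size until the loss actually decreases.
        lr = learning_rate
        while lr > 1e-6:
            candidate = [[min(1.0, max(0.0, b[r][c] - lr * grad[r][c])) for c in range(cols)] for r in range(rows)]
            candidate_loss = loss(candidate, target)
            if candidate_loss < current:
                b, current = candidate, candidate_loss
                break
            lr /= 2
        else:
            break  # no step size improved the loss: treat as converged
    return b, current


# Hypothetical usage: look for a soft predecessor of a vertical blinker on a 5x5 torus.
target = [[0] * 5 for _ in range(5)]
for r in (1, 2, 3):
    target[r][2] = 1
b, final_loss = find_predecessor(target)
print(round(final_loss, 4))
print([[round(x) for x in row] for row in b])
```

With `sigma=0.3`, rounding the soft step of a binary grid reproduces the hard rules, since a bump one neighbour away from its centre is already below 0.01. Finite differences need one extra loss evaluation per cell, so this only scales to tiny grids; a real implementation would lean on an autodiff library instead.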

What it means to be Open

Lu, aka Todepond, is one of the very first creatives that I started following over on TwiX when I joined the platform back in 2020, at a time when it was still known as the blue bird site. I followed because I was intrigued by the cellular automata experiments that Lu had been sharing—it seemed that Lu was exploring different code-based experiments on a daily basis.

I still think about this mind-boggling exploration from time to time:

Now, years later, I learned that these were part of a daily coding practice that has been ongoing for several years, similar to what Saskia Freeke is doing.

Back in September, Lu gave a talk at Clojure 2024 that was recently uploaded to YouTube. It's by far my favourite watch of this week, not only because it gives insight into Lu's creative practice, but also because it's a beautiful tale about opening up and sharing one's creations online:

Other Cool Generative Things

- Frank Force is hosting an online Game Jam for the LittleJS game engine, accepting submissions from the 11th of November till the 12th of December. The topic will be announced at the launch of the jam.

- 3Blue1Brown recorded an hour-long sit-down with Ben Sparks (who you might know from the Numberphile channel), in which Grant Sanderson walks through his workflow with Manim, his Python library for creating the stunning visualizations in most of his videos.

- John Maeda is in the process of reprinting his iconic 2019 book “How to Speak Machine” in paperback. He mentions an interview he gave to the NYT back in 1999 about combining computer science with the visual arts. Turns out this interview still exists (as part of an ACT exam prep site), and he links to it here.

- The talks given at the XOXO Festival (an experimental festival for independent artists) are in the process of being uploaded to YouTube, and while they don’t really revolve around creative coding, there are more than a few interesting nuggets about creativity in there.

- A few weeks ago I shared the upcoming Graphics Programming Conference, taking place in Breda from November 12th to 14th. They just updated their website with the schedule of talks.

Tech & Web Dev

Plain Vanilla Web Components

My personal highlight of this week is a tutorial series by Joeri Sebrechts titled Plain Vanilla. It revolves around building Web Components with vanilla web tech, without relying on any external framework:

A web component is, in essence, a reusable, self-contained piece of code that encapsulates HTML, CSS, and JavaScript to create a custom element on a webpage. This approach to building UIs goes a long way toward creating consistent and maintainable web projects, with the advantage that each component can be developed and tested independently. The concept of web components had been a bit elusive to me up until this point: I’d erroneously assumed that it was something reserved for frameworks like React and Vue, but it turns out that web components are built on native browser standards (custom elements, the Shadow DOM, and HTML templates). Joeri states:

The plain vanilla style of web development makes a different choice, trading off a few short term comforts for long term benefits like simplicity and being effectively zero-maintenance. This approach is made possible by today's browser landscape, which offers excellent web standards support.

Recap of the WordPress Drama

And it wouldn’t be a week in tech if there wasn’t at least a little bit of drama, so here’s a quick TLDR of the WordPress situation as it unfolds. In short, WordPress co-founder Matt Mullenweg is having a public disagreement with the managed WordPress hosting company WP Engine. Mullenweg has criticized WP Engine for profiting from WordPress without contributing significantly to its development, while WP Engine has defended its business model, arguing that it provides valuable services to WordPress users.

If you want a quick recap in video format, I recommend Fireship's coverage of the story:

And here's the long version. If you’re for some reason not familiar with WordPress (WordPress.org), it’s a widely used free and open-source content management system (CMS) that lets you create and manage websites easily. Created in 2003 by Matt Mullenweg and Mike Little, it rose to popularity because it doesn’t require any programming knowledge. Today it boasts an extensive plugin and theme ecosystem, and by some estimates it powers over 43% of all websites on the internet.

WordPress also has a commercial branch (WordPress.com) that provides hosting and all the other services required to publish a WordPress website for a fee. This commercial branch is run by the company Automattic, of which Matt Mullenweg is the CEO, and which reportedly contributes significantly to the development of the open source software. WP Engine, on the other hand, was founded later, in 2010, and offers a similar managed hosting service that takes care of all the technical aspects of running a WordPress website, but contributes less to the open source software.

The drama started on September 20th, when Automattic asked WP Engine to sign a “Trademark License Agreement” under which WP Engine would essentially give up 8% of its revenue to Automattic on a monthly basis. WP Engine declined, which led Mullenweg to escalate the situation the very next day by publishing an article criticizing the way WP Engine reuses the software, outright calling it a cancer to WordPress:

A long-winded legal back-and-forth ensued, culminating in Mullenweg banning WP Engine from accessing the resources of WordPress.org. In other words, WP Engine can no longer use WordPress.org to fetch plugins and themes, a service that is not covered by the open source WordPress license. On October 1st, WP Engine deployed its own services for this purpose; a good summary can be found here:

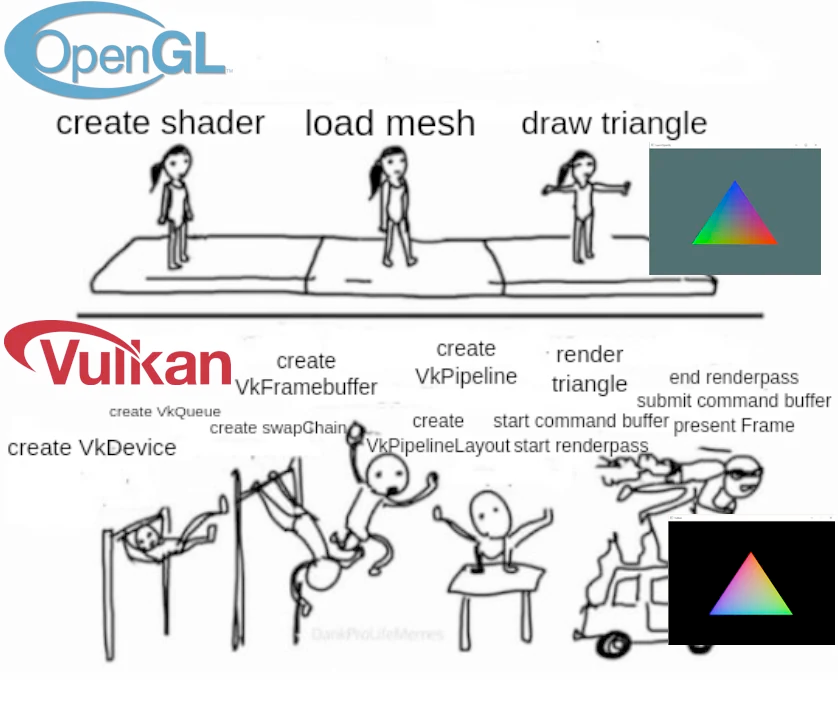

Learning Vulkan by Elias Daler

Vulkan has a bit of a bad rep in the graphics programming scene for being notoriously difficult to get into. It's quite complex and requires a lot of setup compared to simpler graphics APIs like OpenGL or older versions of DirectX, which is why there are so many memes about the difficulty of just conjuring a simple triangle onto the screen:

On the flip side, those who do get into Vulkan end up really appreciating its powerful features. Elias Daler is one of them, and wrote a super in-depth account of his own Vulkan journey, from learning the basics all the way to building his own game engine with it, along with two small game demos, all in the short span of 3 months.

I really appreciate the introductory section that provides a quick explainer about the current state of graphics APIs in 2024, including a little comparison between OpenGL and WebGPU. If you’ve ever thought about getting into Vulkan, this is likely the first thing you should sink your teeth into.

AI Corner

2024 Nobel Prize in Physics goes to Geoffrey Hinton & John Hopfield

Every October the Nobel Prize laureates are announced, and the prizes are then awarded on December 10th. This year the Nobel Prize in Physics is jointly awarded to Geoffrey E. Hinton and John J. Hopfield for their contributions to artificial intelligence; their work in the 1980s undoubtedly laid the foundation for today’s AI boom.

In 1982 John Hopfield created a type of artificial neural network, now called the Hopfield network, that works like a memory system: a network of interconnected "neurons" (or nodes) that can store and retrieve patterns. When you show it an incomplete or blurry version of something it has seen before, the network updates its nodes step by step to fill in the missing parts and give you the complete version, kind of like how your brain remembers and completes an image even when parts are missing.
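A Hopfield network is small enough to sketch in a few lines. This version uses the textbook Hebbian rule for the weights and asynchronous sign updates for recall; the function names and the +1/-1 encoding of "pixels" are our own choices, and it's a simplified illustration rather than Hopfield's original presentation.

```python
def train_hopfield(patterns):
    """Hebbian learning: w_ij = sum over patterns of x_i * x_j, no self-connections.

    Each pattern is a list of +1/-1 values.
    """
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += p[i] * p[j]
    return weights


def recall(weights, state, max_sweeps=10):
    """Update one neuron at a time until a full sweep changes nothing."""
    state = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            field = sum(w * s for w, s in zip(weights[i], state))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


pattern = [1, -1, 1, 1, -1, -1, 1, -1]
weights = train_hopfield([pattern])
noisy = list(pattern)
noisy[0] = -noisy[0]  # corrupt two of the eight "pixels"
noisy[3] = -noisy[3]
print(recall(weights, noisy) == pattern)  # True
```

Each update can only lower (or keep) the network's energy, which is why the state settles into the closest stored pattern instead of wandering around.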

Geoffrey Hinton built on Hopfield's idea and developed something called the Boltzmann machine, which learns to spot common features in data, like recognizing familiar objects in pictures; this later became a big part of how AI systems classify images or even generate new ones. But this is just one of the things Geoffrey Hinton has worked on in his career: he also contributed to the development of the backpropagation algorithm, arguably one of the most important techniques for training neural networks today.

In recent years Geoffrey Hinton has also stood out for his advocacy of the controlled and secure development of AI technologies, which he also addresses in his speech. He describes the prize as “a recognition of a large community of people who worked on neural networks for many years before they worked really well”:

Congrats to both Geoffrey Hinton and John Hopfield, well deserved.

This also prompted a return of the Schmidhuber meme: there’s a running gag in the AI community centered around Jürgen Schmidhuber's frequent claims to be the “father” of many foundational ideas in AI:

Can LLMs actually Reason?

Victor Taelin, who you might recognize as the creator of the Bend programming language covered in issue #57 of the newsletter, made an interesting post about AI with a strong argument against the ability of LLMs to truly reason. He demonstrates this with a modified version of a simple binary tree inversion problem, where just a few small modifications are enough to push the problem outside of the “memorized solution zone”:
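For context, the textbook, unmodified version of the problem simply mirrors a tree by swapping the left and right children of every node. The sketch below shows only that classic version; Taelin's modified variant differs in ways we don't reproduce here, and the `Node`/`invert` names are our own.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    value: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def invert(node: Optional[Node]) -> Optional[Node]:
    """Return a mirrored copy of the tree by recursively swapping children."""
    if node is None:
        return None
    return Node(node.value, invert(node.right), invert(node.left))


def to_list(node: Optional[Node]) -> list:
    """In-order traversal, handy for checking the result."""
    return [] if node is None else to_list(node.left) + [node.value] + to_list(node.right)


tree = Node(4, Node(2, Node(1), Node(3)), Node(7, Node(6), Node(9)))
print(to_list(tree))          # [1, 2, 3, 4, 6, 7, 9]
print(to_list(invert(tree)))  # [9, 7, 6, 4, 3, 2, 1]
```

Because `invert` builds new nodes instead of mutating, the original tree is left intact.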

His argument is essentially that the perceived intelligence of large language models stems entirely from their vast scale and the extensive data they have been trained on, effectively "memorizing" a significant portion of the internet. While LLMs can produce solutions based on existing knowledge, they cannot engage in abstract reasoning and would therefore not be able to contribute to research in the way that humans can.

Music for Coding

OMA, pronounced oh-em-ay, has gained significant recognition over the past year for its innovative reinterpretations of classic hip-hop tracks. I found myself listening to the following performance after the algorithm recommended it to me while I was listening to last week’s recommendation (it probably figured I’d enjoy another one of those live sessions recorded out in nature), and sure enough, it was right. While giving it a listen, it took me a moment to realize that each tune was actually a cover:

And that's it from me—hope you've enjoyed this week's curated assortment of genart and tech shenanigans!

Now that you find yourself at the end of the newsletter, you might as well share it with some of your friends - word of mouth is still one of the best ways to support me! Otherwise, come and say hi over on my socials - and since we've also got a Discord now, let me shamelessly plug it again here. Come join and say hi!

If you've read this far, thanks a million! If you're still hungry for more generative art things, you can check out last week's issue of the newsletter here:

And a backlog of all previous issues can be found here:

Cheers, happy coding, and again, hope that you have a fantastic week! See you in the next one!

~ Gorilla Sun 🌸