Welcome back everyone 👋 and a heartfelt thank you to all new subscribers who joined in the past week!

This is the 88th issue of the Gorilla Newsletter—a weekly online publication that sums up everything noteworthy from the past week in generative art, creative coding, tech, and AI.

If it's your first time here, we've also got a Discord server where we nerd out about all sorts of genart and tech things — if you want to connect with other readers of the newsletter, come and say hi: here's an invite link!

Before we get into this week's news — let's first wrap up Genuary 2025 👇

Wrapping Up Genuary 2025

First things first — mad props to everyone who completed the 31 prompts this year round! Although I cheated and secretly prepared a couple of prompts ahead of time towards the end of December 2024, I still didn't manage to keep up 🤣 This actually makes it the first Genuary where I didn't manage to finish all the prompts on time.

No biggie though — I'm simply going to catch up in the coming days, and tackle the leftover prompts that inspire me in the moment. In the meantime, here's a batch of sketches for some of the prompts I tackled this past week:

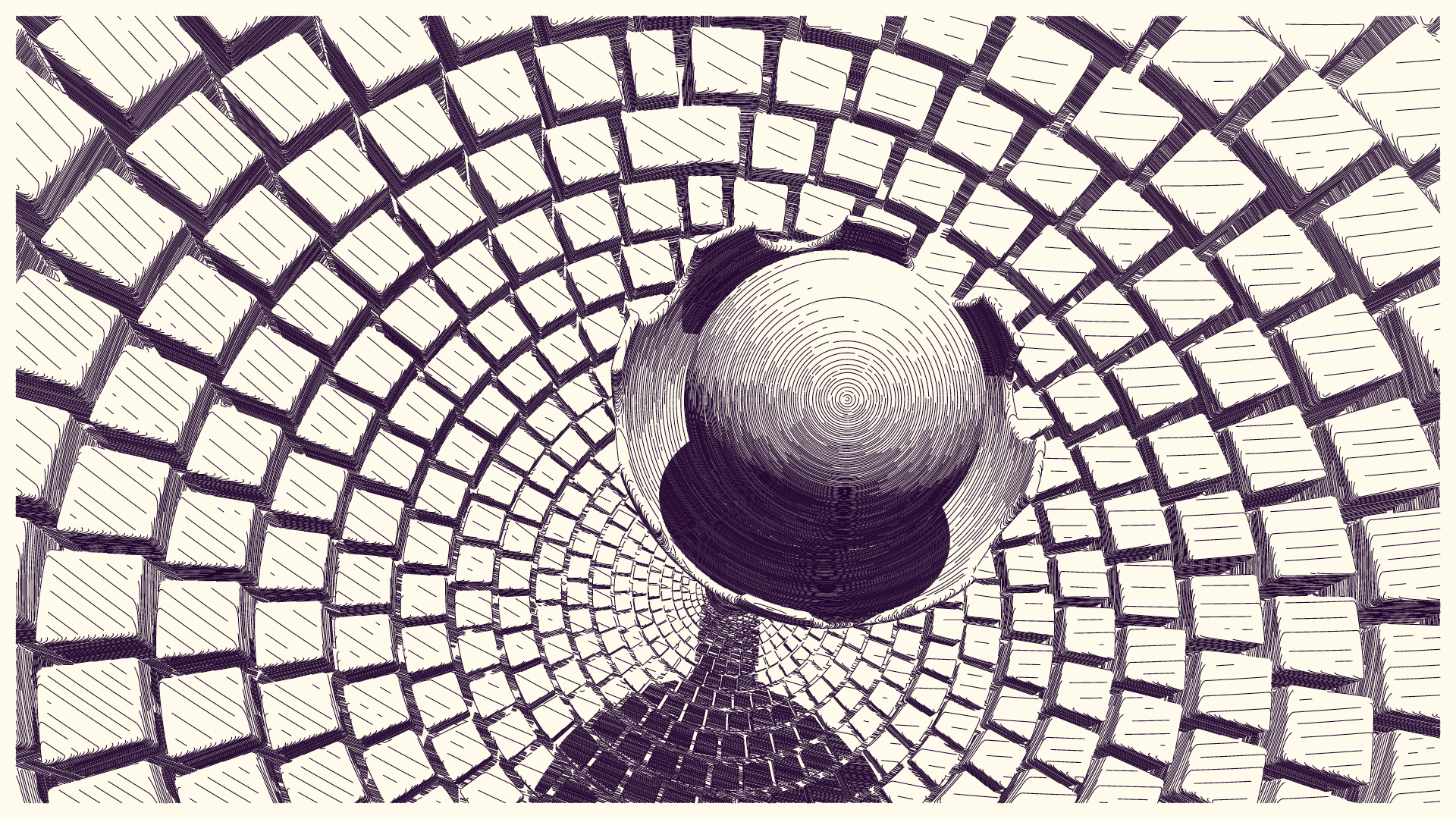

You'll notice that I got quite obsessed with Op art this time round, a consequence of browsing Pinterest, which provides boatloads of excellent visual references for the topic. My sketches for prompts #14, #19, and #25 are pretty similar in approach: they all redraw an underlying input image with a simple geometric shape, albeit in slightly different ways. In #14 I crank up the number of dashes drawn on the brighter areas of the image (almost like a stippling effect), whereas in #19 and #25 I simply modulate the stroke width — turns out our brains are really good at tricking us into seeing images.
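To make the stroke-width idea a bit more concrete, here's a minimal sketch of how such a mapping could look. It is not the code behind the sketches above: the function names, the width range, and the choice that darker pixels get thicker strokes are all assumptions made for illustration.

```javascript
// Map a brightness value (0 = black, 1 = white) to a stroke width.
// Assumption: darker areas get thicker strokes.
function strokeWidth(brightness, minWidth = 0.5, maxWidth = 4) {
  const b = Math.min(1, Math.max(0, brightness)); // clamp to [0, 1]
  return minWidth + (1 - b) * (maxWidth - minWidth);
}

// Redraw a grid of brightness values as rows of short horizontal SVG strokes.
function imageToStrokes(pixels, cell = 10) {
  const lines = [];
  pixels.forEach((row, y) => {
    row.forEach((brightness, x) => {
      const cy = y * cell + cell / 2; // vertical centre of this cell
      lines.push(
        `<line x1="${x * cell}" y1="${cy}" x2="${(x + 1) * cell}" y2="${cy}" ` +
          `stroke="black" stroke-width="${strokeWidth(brightness)}"/>`
      );
    });
  });
  const width = (pixels[0] ? pixels[0].length : 0) * cell;
  const height = pixels.length * cell;
  return (
    `<svg xmlns="http://www.w3.org/2000/svg" width="${width}" height="${height}">` +
    lines.join("") +
    `</svg>`
  );
}

// A tiny 2x3 "image": dark on the left, bright on the right.
const svg = imageToStrokes([
  [0.0, 0.5, 1.0],
  [0.1, 0.6, 0.9],
]);
console.log(svg);
```

With a real image you'd sample brightness from the pixel data instead of a hand-written array, and most likely use many more, much shorter strokes.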

In retrospect, it's kind of a form of dithering... with extra steps?

For the 25th prompt I came across this really cool thread on the code golf stack exchange, where folks have a crack at exactly this task — redrawing images simply with a single line, a cool rabbit hole you might want to explore for yourself:

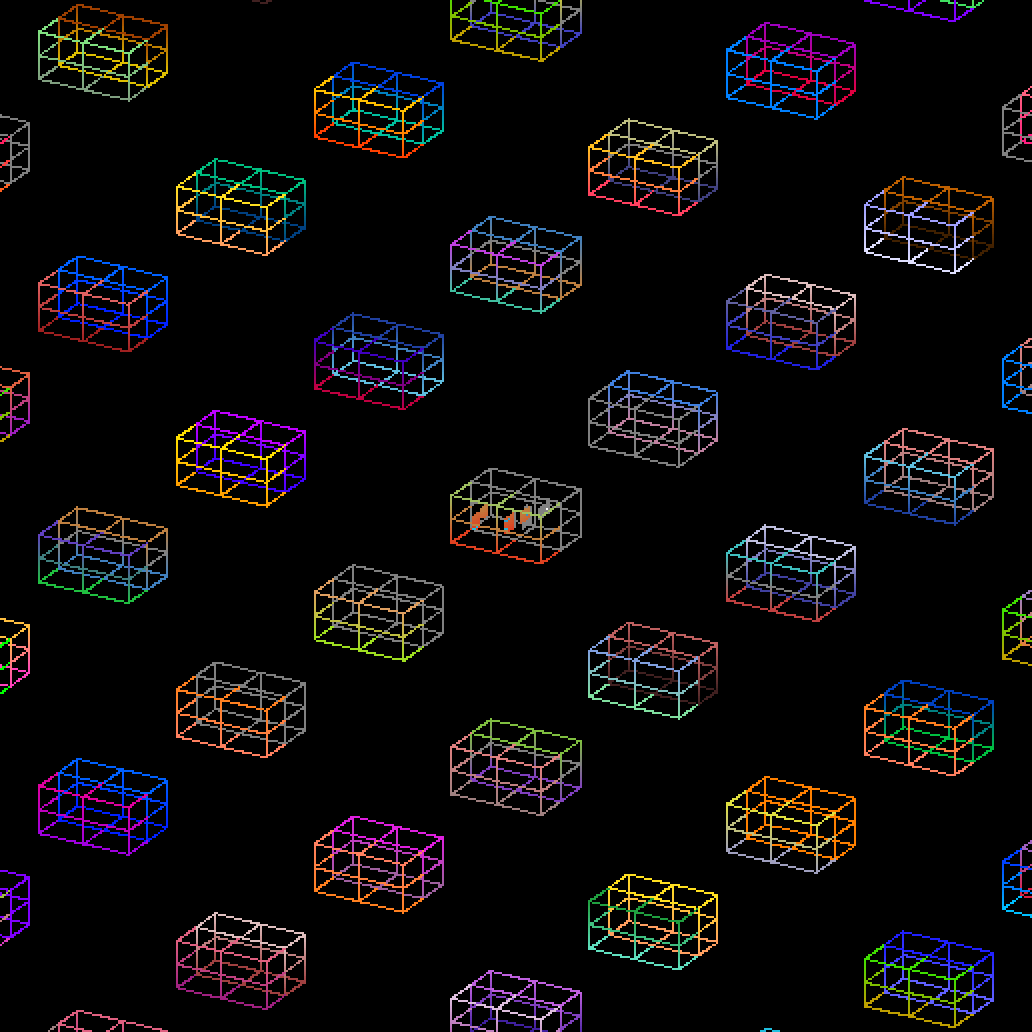

Beyond that I also released a project on the lovely EditArt.xyz, after Piero was kind enough to hit me up and ask if I'd like to participate in the Genuary 2025 project marathon organized by Tezos. Collision detection algos are something that I've explored copiously in the past, and the project is in essence just an elaborate packing procedure that places rectangular shapes in a non-overlapping manner. I titled it Fït, as a sort of spiritual successor to Blöck, the previous project I had minted on EditArt back in 2023.

The challenge here was optimizing the code so that the packing algo runs almost instantly — something that's necessary for EditArt due to the sliders that let collectors modify their iteration prior to collecting it. You can play with the artwork for yourself here.
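For anyone curious what a packing procedure boils down to, here's a brute-force sketch. It is not the optimized code behind Fït: `packRects`, its parameters, and the size range are made up for illustration, and it assumes a canvas of at least 50 by 50 units. It simply proposes random rectangles and keeps the ones that don't collide with anything already placed.

```javascript
// Two axis-aligned rectangles overlap only if they overlap on both axes.
function overlaps(a, b) {
  return (
    a.x < b.x + b.w && a.x + a.w > b.x &&
    a.y < b.y + b.h && a.y + a.h > b.y
  );
}

// Try `attempts` random rectangles and keep those that fit without overlap.
function packRects(width, height, attempts = 500, rand = Math.random) {
  const placed = [];
  for (let i = 0; i < attempts; i++) {
    const w = 10 + rand() * 40; // sizes between 10 and 50 (arbitrary choice)
    const h = 10 + rand() * 40;
    const candidate = {
      x: rand() * (width - w), // keep the rectangle inside the canvas
      y: rand() * (height - h),
      w,
      h,
    };
    if (!placed.some((r) => overlaps(r, candidate))) placed.push(candidate);
  }
  return placed;
}

const rects = packRects(400, 400);
console.log(`placed ${rects.length} rectangles`);
```

Every candidate is checked against every placed rectangle, so this gets slow as the canvas fills up. That's exactly the part you'd need to speed up (for instance with a spatial grid) to get anywhere near slider-friendly performance.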

And that's about it for my own Genuary shenanigans these past two weeks. Once I'm done with all of the prompts I might put together a recap post. Not promising anything at this point in time though, I might just end up being just as busy 😅

As for my favorite sketches that popped up on my timeline — here's a few that stood out to me:

Stranger in the Q's day 25 creation is in a way similar to what I did for #19 and #25, where the image emerges through the thickness of the line — zooming in on Stranger's sketch, it also appears to just be a single line that's perturbed when it coincides with darker areas of an underlying image. What's really cool is that the pattern itself also seems to just be a single line or 3D wire that fills out the scene.

Pablo Andrioli is a shader wizard who's been at it for several decades, and I have absolutely no idea how he conjures up the fractal architecture in his sketch — but it's absolutely stellar. Highly recommend checking out his profile for more shader/fractal explorations.

Then there's none other than the creator of Genuary himself, Piter Pasma, with his photo-realistic renderer: for day 27 "no randomness" he humorously generated two dice. I've thought about this before, but what if we make a full-fledged, realistic physics simulation in which we roll a pair of dice, would that technically be pseudo-true-randomness? 😆

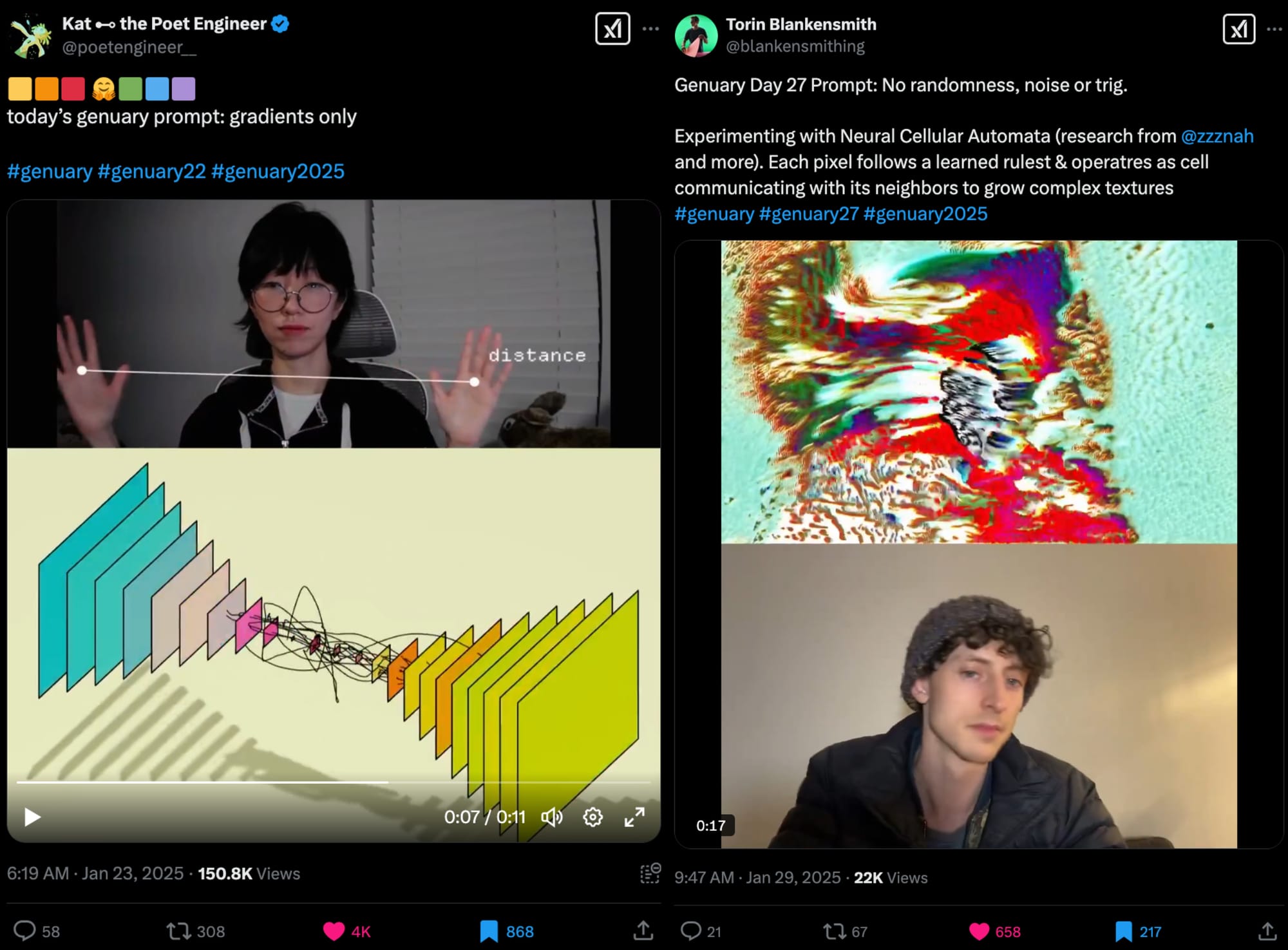

Two other creators that I want to highlight here are Kat, aka the Poet Engineer, and Torin Blankensmith, both of whom are making cool interactive, gesture-controlled sketches. Not only do they make me want to explore this myself, it seems that these sketches also have a big potential for virality, purely based off of the amount of engagement they rake in.

And that's it for Genuary-related things! With the month having come to an end, that's also going to be it for these special segments at the start of the newsletter — I might bring back this broader, ramblier starting segment from time to time whenever there's something that deserves its own section.

That said — cue the news 👇

All the Generative Things

1 — Surface-Stable Fractal Dithering: purely by chance I featured Rune Skovbo Johansen in issue #86 of the newsletter for the procedural game that he's been working on for the past couple of years. This past week Rune made a splash on social media and Hacker News by publishing a video and GitHub repo about a new dithering technique he created. He titled it Surface-Stable Fractal Dithering for its ability to dither moving scenes in a stable, non-flickering manner — while also maintaining its density in a fractal-like manner.

This new technique is essentially the outcome of Rune's quest to create a 3D dithering pattern that remains stable as the player moves around the world. Rune was originally inspired by Lucas Pope's Return of the Obra Dinn — which might've already come to mind seeing the above thumbnail ☝️

It's an iconic game that's famous for its unique dithered aesthetic. Lucas wrote numerous articles documenting the explorations that led to the dithering technique he ultimately used in the game. Along the way he also found that standard dithering techniques are not particularly suitable for moving scenes, as they can cause a distracting flicker that's arguably also nauseating to take in.
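As a point of reference, here's a minimal sketch of one such standard technique, ordered dithering with a 4x4 Bayer matrix. This is neither Lucas Pope's nor Rune's method, just the textbook screen-space version, and the `orderedDither` name is my own. The threshold pattern is tied to pixel coordinates, so it stays put on the screen while the scene moves underneath it, which is where the flicker comes from.

```javascript
// Classic 4x4 Bayer threshold matrix (values 0..15).
const BAYER_4 = [
  [0, 8, 2, 10],
  [12, 4, 14, 6],
  [3, 11, 1, 9],
  [15, 7, 13, 5],
];

// Turn a grayscale image (values in [0, 1]) into pure black (0) and white (1).
function orderedDither(gray) {
  return gray.map((row, y) =>
    row.map((value, x) => {
      // The threshold depends only on the pixel position, not on the scene.
      const threshold = (BAYER_4[y % 4][x % 4] + 0.5) / 16;
      return value > threshold ? 1 : 0;
    })
  );
}

// A flat 50% grey patch turns into a pattern with half of the pixels lit.
const patch = Array.from({ length: 4 }, () => Array(4).fill(0.5));
console.log(orderedDither(patch).map((row) => row.join("")).join("\n"));
```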

Lucas Pope ultimately ended up mapping dithered textures to a sphere around the player camera, so that the dither pattern stays fixed as the player rotates, but still changes when the camera translates. This effect was visually acceptable and did not detract from the experience. Inspired by this approach, Rune wondered if it was possible to create a dithering technique that would stay consistent even during translation of the camera. I'll leave it to Rune and the excellent video he made to explain how the algorithm works — and why

As always it's worth checking out the Hacker News thread on the topic where you'll find more links to interesting related things — in particular this discussion from not too long ago about Lucas Pope's dithering technique.

2 — Wave Function Collapse: Daniel Shiffman is back in the trenches, revisiting the Wave Function Collapse algorithm. He first covered it in coding challenge #171 two years ago — you can find the original video here.

If you're not familiar, WFC is an algorithm that lets you procedurally generate tile-based patterns by automatically resolving adjacency rules. I wrote a little bit about it in issue #70 of the newsletter, in addition to a newer, more general technique by the same creator, Paul Merrell.
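To give a feel for the "resolving adjacency rules" part, here's a heavily simplified sketch that fills a single row of cells. It is not the code from the coding challenge: the tile names, the `RULES` table, and the `collapseRow` function are made up, and the real algorithm works on 2D grids with weighted tiles. The core loop is the same idea though: pick the most constrained cell, fix it to one tile, and propagate the consequences to its neighbours.

```javascript
// Hypothetical rules: which tiles may sit directly to the right of each tile.
const RULES = {
  sea: ["sea", "coast"],
  coast: ["sea", "coast", "land"],
  land: ["coast", "land"],
};
const TILES = Object.keys(RULES);

function collapseRow(length, rand = Math.random) {
  // Every cell starts out as "could be any tile".
  const cells = Array.from({ length }, () => [...TILES]);

  // Drop options that no longer have a compatible neighbour, until stable.
  const propagate = () => {
    let changed = true;
    while (changed) {
      changed = false;
      for (let i = 0; i < length; i++) {
        const before = cells[i].length;
        cells[i] = cells[i].filter(
          (tile) =>
            (i === 0 || cells[i - 1].some((left) => RULES[left].includes(tile))) &&
            (i === length - 1 || cells[i + 1].some((right) => RULES[tile].includes(right)))
        );
        if (cells[i].length === 0) throw new Error("contradiction");
        if (cells[i].length !== before) changed = true;
      }
    }
  };

  for (;;) {
    // Pick the undecided cell with the fewest remaining options.
    let target = -1;
    for (let i = 0; i < length; i++) {
      const undecided = cells[i].length > 1;
      if (undecided && (target < 0 || cells[i].length < cells[target].length)) target = i;
    }
    if (target < 0) break; // every cell has been decided
    const options = cells[target];
    cells[target] = [options[Math.floor(rand() * options.length)]];
    propagate();
  }
  return cells.map((options) => options[0]);
}

console.log(collapseRow(12).join(" "));
```

With these rules `sea` and `land` can never end up next to each other, there's always a `coast` tile in between.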

One aspect of the algorithm that Daniel Shiffman didn't cover in the original video revolves around an extension of the algorithm, wherein it can automatically detect adjacency patterns in an input texture, and then generate new similar patterns on its own — kinda what you can see in the thumbnail down below 👇

3 — Kim Asendorf's PXL DEX: Kim Asendorf made quite a splash in the genart scene with his newest work PXL DEX: an onchain generative artwork consisting of 256 editions, each an abstract 3D animation called a Deck that can be purchased as an NFT through a custom website. The innovative thing about the project is that it's tied to its own token — when collectors grab a Deck they also get 500,000 PXL, which they can then spend on the artwork to modify its aesthetics; essentially increasing the pixel density of the artwork.

This is a bit esoteric, hence I highly recommend checking out the custom PXL DEX website and exploring it a bit for yourself — it's really mind-bogglingly elaborate:

Naturally, Le Random are quick as always and already covered PXL DEX with their most recent interview.

This is without a doubt the direction that the onchain genart space is heading towards in 2025. The long-form paradigm that emerged in 2021 is now making way for a new breed of generative art on the blockchain that's much more tied to the underlying tech than before. With Bjørn Staal's Entangled last year, and now Kim Asendorf's PXL DEX, the bar has been raised significantly — the generative artwork now needs to do more than just be a long-form if it wants to receive critical acclaim.

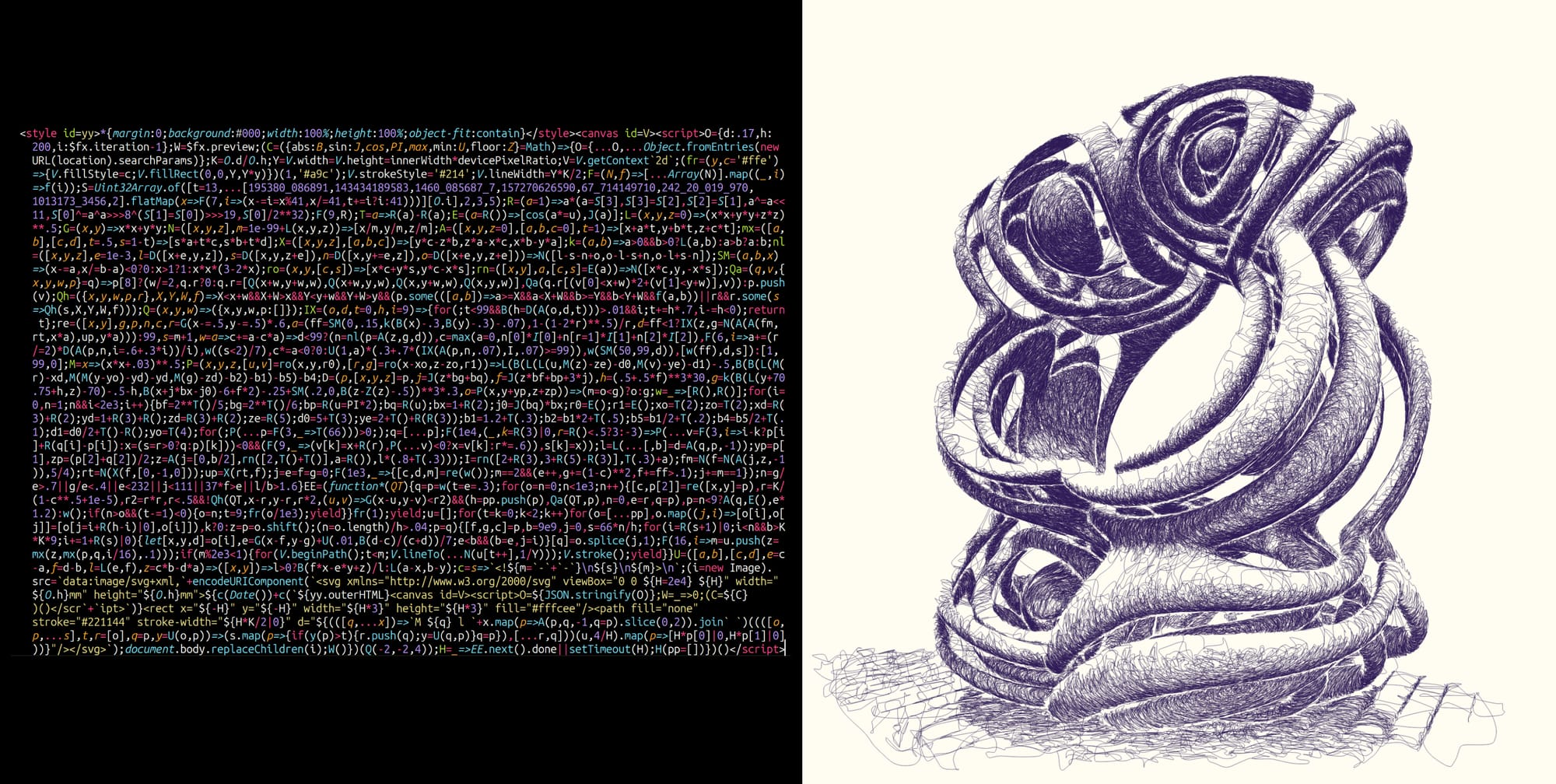

4 — Piter Pasma's Impossible Sentinels: I might be biased here, but Piter Pasma's Impossible Sentinels is just such an impressive project, that I need to gush about it for a bit.

Over the past two weeks I spent a lot of time writing about the Cure3 projects — that actually go live on fxhash today! In case you're not familiar, Cure3 is a charitable art fundraiser that supports Cure Parkinson's; in 2023, as well as this year, the fifth edition of the event, they're featuring a digital section where the sale of generative art on fxhash supports the cause. One of the participating projects is Piter's Impossible Sentinels — the backstory behind it is quite fascinating, I recounted it briefly in a thread over on the main fxhash TwiX account!

Back in 2022 Piter developed a framework for his project Industrial Devolution, essentially an algorithm that lets him turn 3D scenes (generated by using SDFs) into plottable line drawings in SVG format — he wrote an article about this framework back in 2022, giving it the fitting name "Rayhatcher". Later this same idea evolved into The Universal Rayhatcher, where collectors could input their own SDF formulas that would then be rayhatched, which culminated in this large-scale collaborative artwork, with some really mindblowing outputs.

Impossible Sentinels is in essence the latest evolution of this idea, with the additional twist that the shape is now traced with a single uninterrupted line, rather than a hatch pattern — Piter wrote a little bit about it, as well as the compressed, hyper-optimized code that powers the artwork, in a post over on his website.

5 — On Signed Distance Fields by Render Diagrams: since we're talking about SDFs, here's a not-so-recent article back from 2017 that explains how SDFs work for the purpose of approximating shapes. Signed distance fields are essentially shape-defining mathematical functions — a technique that became popular after Valve (studio behind Half-Life and Counter-Strike) introduced them in a paper of theirs in 2007 at SIGGRAPH, in particular for the purpose of text rendering. Highly recommend giving this post a read and playing around with the interactive examples — it really illustrates the idea excellently.
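If "shape-defining mathematical functions" sounds abstract, here's a minimal sketch of two common 2D SDFs. The function names and parameters are my own and not taken from the article. Each function returns the distance from a point to the shape's boundary: negative inside, zero on the edge, positive outside.

```javascript
// Signed distance from point (px, py) to a circle centred at (cx, cy).
function sdCircle(px, py, cx, cy, radius) {
  return Math.hypot(px - cx, py - cy) - radius;
}

// Signed distance to an axis-aligned box with half-width hw and half-height hh.
function sdBox(px, py, cx, cy, hw, hh) {
  const dx = Math.abs(px - cx) - hw;
  const dy = Math.abs(py - cy) - hh;
  const outside = Math.hypot(Math.max(dx, 0), Math.max(dy, 0));
  const inside = Math.min(Math.max(dx, dy), 0);
  return outside + inside;
}

// The union of two shapes is simply the minimum of their distances.
function sdScene(px, py) {
  return Math.min(sdCircle(px, py, 8, 5, 4), sdBox(px, py, 16, 5, 5, 2));
}

// Render the scene as ASCII: '#' wherever the distance is negative (inside).
let picture = "";
for (let y = 0; y < 10; y++) {
  for (let x = 0; x < 24; x++) picture += sdScene(x, y) < 0 ? "#" : ".";
  picture += "\n";
}
console.log(picture);
```

Because every point in space gets a distance rather than just an inside/outside answer, the same functions can also drive soft edges, outlines, and raymarching.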

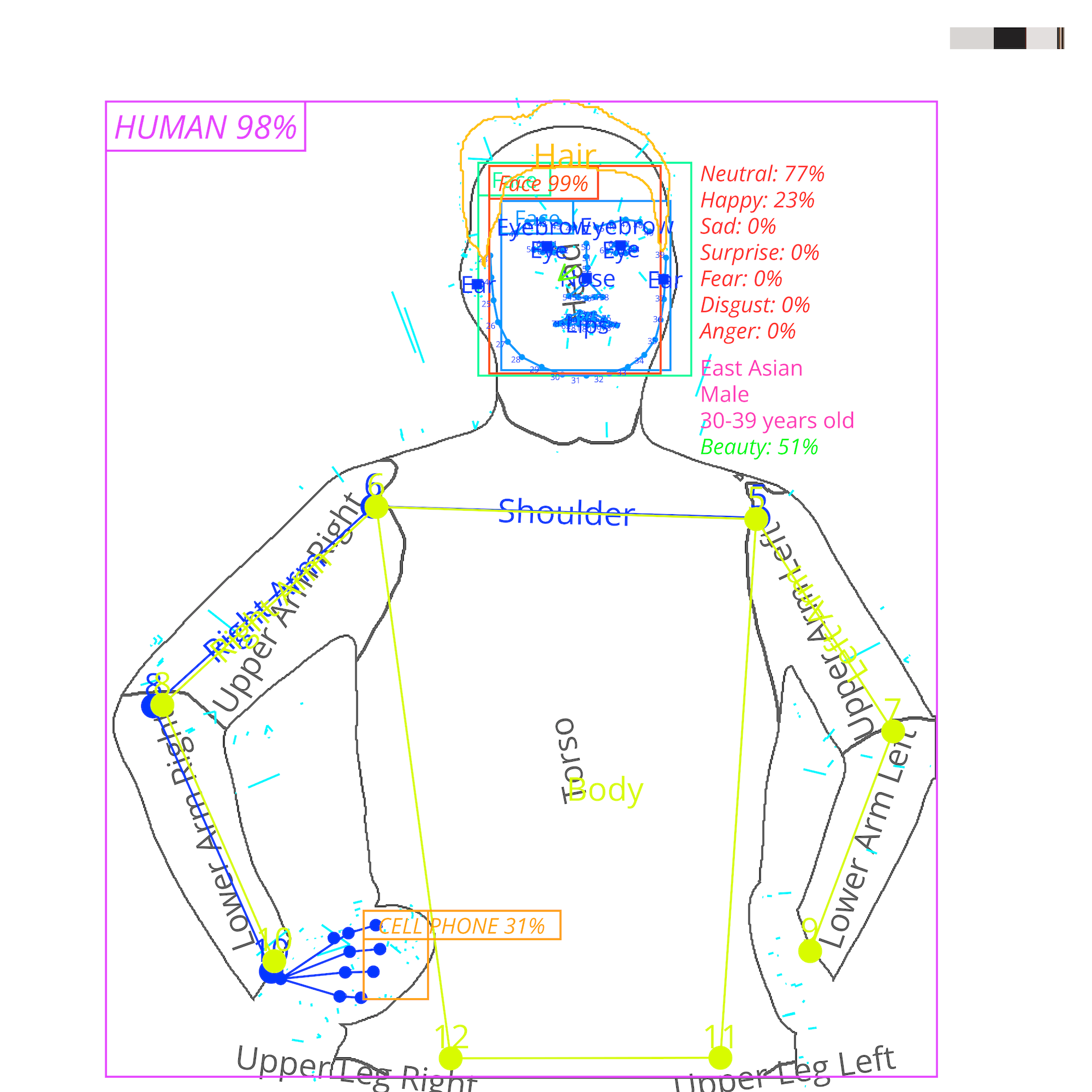

6 — Analytical Portrait: I guess this week's issue is just about highlighting cool projects — this past week I discovered the work of Shinseungback Kimyonghun, which really spoke to me aesthetically. In particular their recent project Analytical Portraits, which reminds me a bit of fxFactune — I really love artworks that incorporate pseudo-scientific elements. In this case it's cool because those elements are traces of the process by which the artwork is created in the first place.

Tech & Web Dev

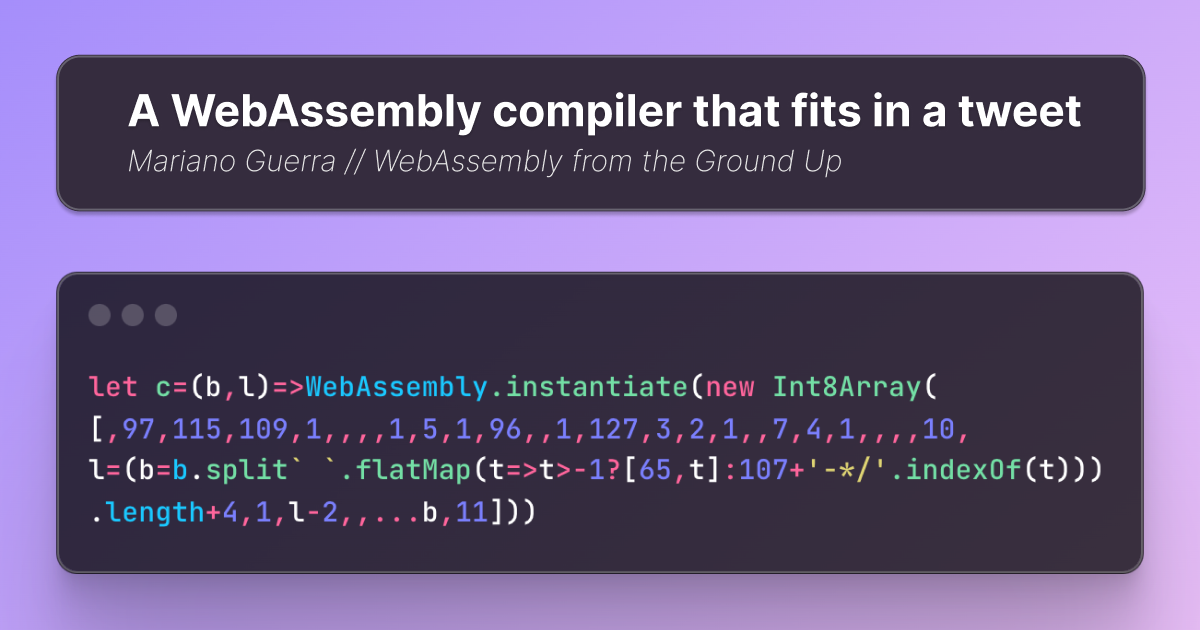

1 — WASM Compiler that fits in a Tweet: my favorite find this week is this article about creating a WebAssembly compiler in just 269 bytes — code so short it fits in a Tweet! If you're not familiar with WebAssembly (WASM), it's a portable binary format that allows running compiled code, from languages like C, C++, Rust, and others, in web browsers — a WASM compiler translates source code into that binary format so that it can be run in the browser.

Here's the tweet-sized compiler discussed in the article:

let c=(b,l)=>WebAssembly.instantiate(new Int8Array(
[,97,115,109,1,,,,1,5,1,96,,1,127,3,2,1,,7,4,1,,,,10,
l=(b=b.split` `.flatMap(t=>t>-1?[65,t]:107+'-*/'.indexOf(t)))
.length+4,1,l-2,,...b,11]))

Interestingly it's broken down with a reverse approach, by unobfuscating the code step by step, rather than starting out from an understandable piece of code that's then slowly obfuscated. While the article doesn't go into too much detail about WebAssembly itself, it does cover many useful JavaScript code-golfing tricks — if you want to learn more about WebAssembly though, I recommend checking out this big github repo that collects more than enough resources to get started.
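To get a rough idea of what those numbers mean, here's my own un-golfed reading of the snippet, not the article's breakdown. The input is an arithmetic expression in reverse Polish notation, and the output is a WebAssembly module with a single function that's exported under an empty name. The names `compile` and `run` are made up, and unlike the original I use the synchronous `WebAssembly.Module` and `WebAssembly.Instance` constructors to keep the example short.

```javascript
// i32 arithmetic opcodes from the WebAssembly binary format.
const OPCODES = new Map([["+", 0x6a], ["-", 0x6b], ["*", 0x6c], ["/", 0x6d]]);
const I32_CONST = 0x41;
const END = 0x0b;

function compile(source) {
  // Each token becomes either an operator opcode or `i32.const <n>`.
  const code = source.trim().split(/\s+/).flatMap((token) => {
    if (OPCODES.has(token)) return [OPCODES.get(token)];
    const n = Number(token);
    // Assumption of this sketch: only constants that fit in one LEB128 byte.
    if (!Number.isInteger(n) || n < 0 || n > 63) {
      throw new RangeError(`unsupported token: ${token}`);
    }
    return [I32_CONST, n];
  });
  if (code.length > 100) throw new RangeError("expression too long for this sketch");

  const body = [0x00, ...code, END]; // no locals, the code, then `end`
  const codeSection = [0x01, body.length, ...body]; // one function body

  return new Uint8Array([
    0x00, 0x61, 0x73, 0x6d, // magic number "\0asm"
    0x01, 0x00, 0x00, 0x00, // binary format version 1
    0x01, 0x05, 0x01, 0x60, 0x00, 0x01, 0x7f, // type section: () -> i32
    0x03, 0x02, 0x01, 0x00, // function section: one function of type 0
    0x07, 0x04, 0x01, 0x00, 0x00, 0x00, // export section: name "", function 0
    0x0a, codeSection.length, ...codeSection, // code section
  ]);
}

function run(source) {
  const module = new WebAssembly.Module(compile(source));
  const instance = new WebAssembly.Instance(module);
  return instance.exports[""](); // the function is exported under an empty name
}

console.log(run("11 11 1 - + 4 * 2 /"));
```

The golfed version gets away with holes in the array literal (they turn into zeros inside the typed array) and with computing the opcode as `107 + '-*/'.indexOf(t)`, which yields 106 for `+` because `indexOf` returns -1.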

2 — The Modern Way to write JavaScript Servers: in a recent post of his, Marvin Hagemeister explains a new, simpler way to build JavaScript servers. When using Node.js, you have to set up a network connection (or “bind to a socket”) to make your server listen for requests — meaning that your code has to open a specific port and wait for data to come in; which makes testing slow and complicated.

His modern approach uses a style similar to the browser’s fetch API: instead of binding a socket, your server is just a function that takes a request and returns a response. Testing is now much faster because you can directly call your server function without setting up a network connection.
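Here's a minimal sketch of what that looks like in practice. It isn't code from Marvin's post: the `handler` name and the `/hello` route are made up, and it assumes a runtime with the standard `Request` and `Response` globals (modern browsers, Deno, Bun, or Node 18 and up).

```javascript
// The whole "server" is a function from a Request to a Response.
function handler(request) {
  const url = new URL(request.url);
  if (request.method === "GET" && url.pathname === "/hello") {
    return new Response("Hello, world!", {
      status: 200,
      headers: { "content-type": "text/plain" },
    });
  }
  return new Response("Not found", { status: 404 });
}

// Testing means calling the function directly: no port, no socket.
const ok = handler(new Request("http://localhost/hello"));
const missing = handler(new Request("http://localhost/nope"));
console.log(ok.status, missing.status);
```

Hooking the function up to an actual network listener then becomes a separate, small step. Deno, for example, accepts a function of this shape via `Deno.serve(handler)`.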

3 — Accessibility Essentials every Front-end Developer should know: accessibility in front-end development is not just about compliance; it's about improving user experience for all. Martijn Hols shares a fantastic resource that outlines essential accessibility principles — a cheat sheet for when you're doing your next accessibility audit:

4 — Tetris in a PDF Explainer: In the last issue I shared Thomas Rinsma's DOOM in PDF, and prior to that his Tetris in a PDF experiment. This past week he wrote a quick post on his blog that explains how these experiments work — spoilers: it's basically just JavaScript!

AI Corner

Holy Moly, we got a lot of ground to cover this week in AI! 🤯

1 — DeepSeek is a Game Changer for AI: DeepSeek R1 is the new competitor in the AI arms race, that seemingly came out of nowhere from a small Chinese company; and somehow it's rivaling, if not outperforming, the multi-million-dollar models of OpenAI, Anthropic, and Google — all while being open-source! It's made quite a big buzz these past two weeks, and the big AI companies are in shambles.

What makes DeepSeek so good, while being trained at a fraction of the cost? Afaiu, DeepSeek optimizes several parts of the LLM pipeline, from training to running on prompts out in the wild — for instance, it employs a technique called Mixture of Experts, where only the parts of its learned weights that are relevant to a given prompt/task get activated, making it overall more efficient to train and run. A recent Computerphile video provides an excellent breakdown of this:
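As a toy illustration of the Mixture of Experts idea, here's a generic top-k routing sketch. It says nothing about how DeepSeek actually implements it: the experts are plain functions on a single number, the gate scores are hard-coded rather than learned, and `moeForward` is a made-up name. The point is only that a gate picks a few experts per input and the rest never run.

```javascript
// Turn raw scores into weights that sum to 1.
function softmax(scores) {
  const max = Math.max(...scores);
  const exps = scores.map((s) => Math.exp(s - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Run only the k best-scoring experts and blend their outputs.
function moeForward(input, gateScores, experts, k = 2) {
  const top = gateScores
    .map((score, index) => ({ score, index }))
    .sort((a, b) => b.score - a.score)
    .slice(0, k);
  const weights = softmax(top.map((t) => t.score));
  let output = 0;
  top.forEach((t, i) => {
    output += weights[i] * experts[t.index](input); // other experts are skipped
  });
  return { output, used: top.map((t) => t.index) };
}

// Four toy "experts"; in a real model these would be sub-networks.
const experts = [(x) => x + 1, (x) => x * 2, (x) => x * x, (x) => -x];
// In a real model the gate scores come from a small learned router.
const result = moeForward(3, [0.1, 2, 2, -1], experts);
console.log(result);
```

Only two of the four experts are evaluated here, which is where the savings come from: the model can hold a lot of weights while spending compute on just a fraction of them per input.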

But that's not the only thing that people are excited about — it's mainly the Chain of Thought method that DeepSeek employs to arrive at conclusions, which is also why it's called a reasoning model in the first place. If you've used o1, it's basically the same thing, where the LLM engages in a sort of reflection step before delivering answers.

Another key aspect of this kind of reasoning model is that it allows for Model Distillation, where a large reasoning model trains a smaller, regular version of itself to solve the same tasks at an acceptable performance, while also being able to operate on standard hardware. This ultimately means that more people can access and use advanced AI without needing expensive equipment.

Some folks have also taken it upon themselves to run the most powerful DeepSeek model on local hardware — here's a tutorial if you want to take a stab at it:

2 — OpenAI releases o3-mini: the release of DeepSeek has definitely thrown OpenAI into a scramble, most likely forcing their hand to release their newest series of o3 models early — as well as opening up their previously $200/month o1 model to Copilot users free of charge. I played around with it a bit, and it's actually quite an improvement over their regular models.

3 — AI-generated Art can't get Copyright Protection: to cap off this crazy week in AI, there's also been a development with regards to copyright of AI-generated art. In essence, the US Copyright Office declared in a broad report that purely prompt-based generated outputs can't be copyrighted, no matter how elaborate the prompt is. As far as I understand, this doesn't however include art created with the partial assistance of AI. The Verge covers this in detail in a recent article:

Considering that in recent years many artists have made a career out of AI generated imagery, the implications are quite severe. I'm also wondering about the ramifications this could have for generative art as a whole (whether you subscribe to the idea that it's an umbrella term or not) — what does this mean for art created through autonomous systems in general?

I might have to throw the report into ChatGPT and have it answer that for me 😜

Music for Coding

Jon Hopkins is a British musician and producer celebrated for his signature sound that blends ambient textures with pulsating rhythms. His innovative work—featured in albums like Immunity and Singularity—has earned him acclaim and collaborations with artists such as Brian Eno and Coldplay, marking him as a distinctive force in modern electronic music.

Hope you've enjoyed this week's curated assortment of genart and tech shenanigans!

Now that you find yourself at the end of this Newsletter, consider forwarding it to some of your friends, or sharing it on the world wide webs—more subscribers means that I get more internet points, which in turn allows me to do more internet things!

Otherwise come and say hi over on TwiX, Mastodon, or Bluesky and since we've also got a Discord now, let me shamelessly plug it here again. If you've read this far, thanks a million! And in case you're still hungry for more generative art things, you can check out last week's issue of the newsletter here:

You can also find a backlog of all previous issues here:

Cheers, happy coding, and again, hope that you have a fantastic week! See you in the next one!

~ Gorilla Sun 🌸